Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,552

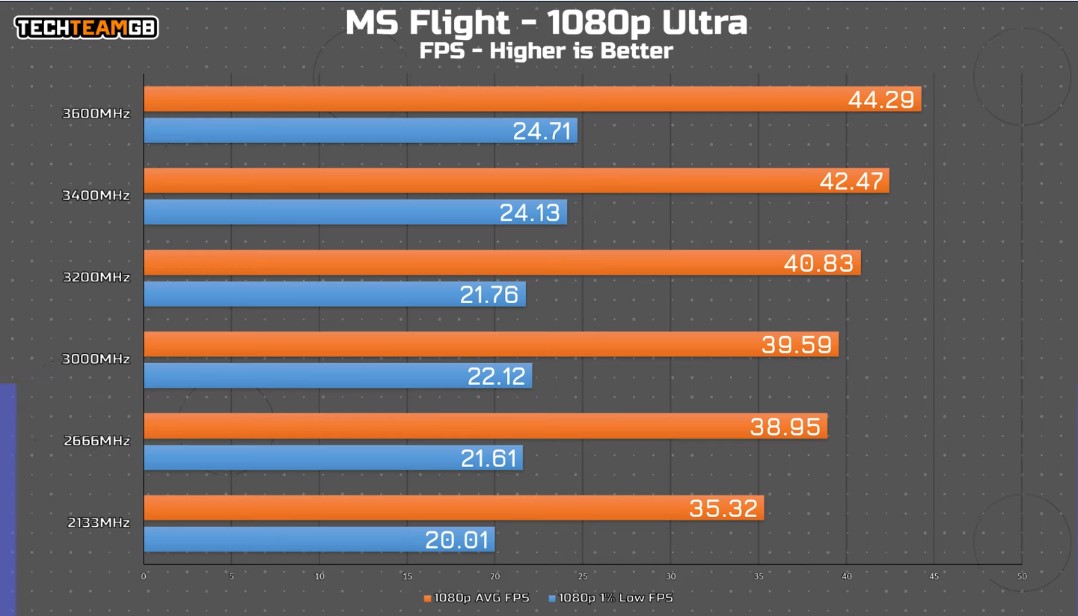

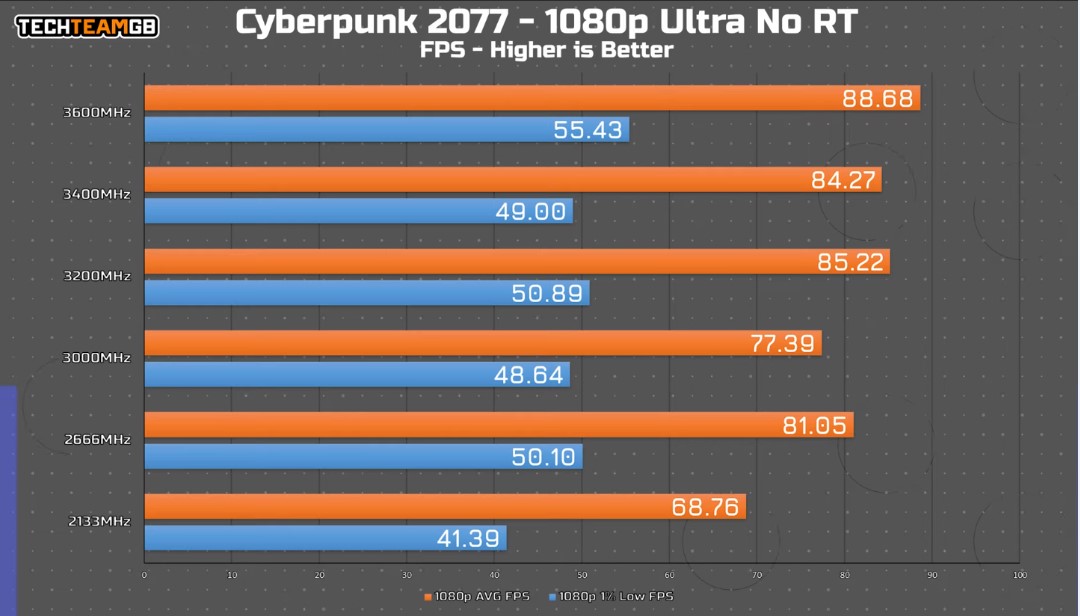

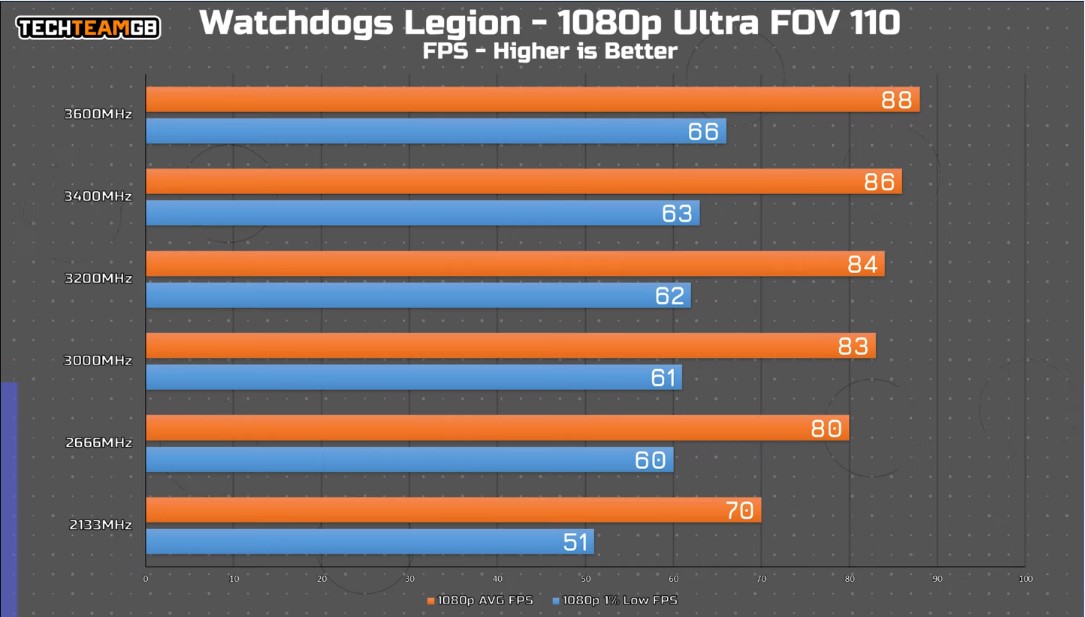

Exactly, and with this particular part (5800X3D), I'd venture to say the majority of purchases are from people already on AM4 who are upgrading. I don't imagine many are buying an AM4 board right now just to use this chip. If buying new, they'll either go Intel with DDR5 or wait for AM5 with DDR5.

That makes perfect sense, but I also want to see real-world performance gains. Yes, it might be 15% faster when CPU bound, but how does that translate to actual game performance? CPU-bound testing is a useless thing to measure on its own, because no one actually games at those resolutions with settings turned down. I don't mind including it, but reviews should always include 1080p, 1440p, 1440p ultrawide, and 4K at max settings as well. Of course, CPUs tend not to make much of a difference there, but it would be nice to see how much or how little there is to gain over an older CPU to inform a purchasing decision.
