OhSigmaChi

Limp Gawd

- Joined: Jan 27, 2019
- Messages: 194

So, I was upgrading a friend's computer. He had an old iBuypower machine with an FX-4100, an HD 7750 (awful), and 8 GB of 1033 MHz RAM. This thing couldn't even spell Fire Strike, let alone run it (it wouldn't even run the Heaven Extreme benchmark without crashing).

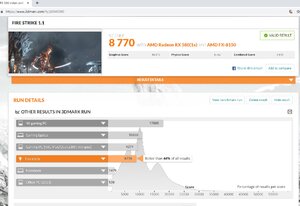

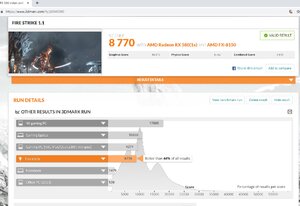

I was able to get him a new PSU, an FX-8150, 16 GB of DDR3-1866, and an XFX RX 580 8GB for just a hair over $200. I kept his case, motherboard, and HDD (he's getting an SSD right now at Micro Center).

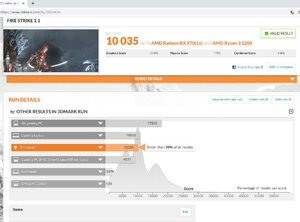

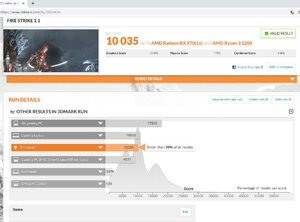

What's blowing my mind is that his machine could not keep up with the cheapest Ryzen build I have ever done. Both machines had very mild CPU/GPU overclocks, and the Ryzen 3 1200 with an ASUS Strix RX 570 and 8 GB of DDR4-2666 just ran away with the Fire Strike results...

"Talk amongst yourselves"