NVidia has said nothing about this.

I must be misremembering, and it was just a rumor-mill article that was posted back then.

When it comes to gaming hardware, here are a few things that determine hardware pricing: what it costs to manufacture, wholesale markup and, ultimately, how much customers are willing to pay for it.

Did I leave anything out?

R&D costs.

The die shrink alone should give it about a 25% performance increase. So if the Ampere architecture gives an additional 20-25%, then the rumored 50% performance increase doesn't seem far off.
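One detail on the arithmetic: independent speedups stack multiplicatively, not additively. Here is a minimal Python sketch, assuming the shrink and architecture gains are independent and fully realized (a big assumption, as later replies point out). The function name is just illustrative:

```python
def combined_gain(*gains: float) -> float:
    """Combine relative speedups multiplicatively.

    Each gain is a fraction, e.g. 0.25 for +25%. Assumes the gains
    are independent and fully stack, which is an idealization.
    """
    total = 1.0
    for g in gains:
        total *= 1.0 + g
    return total - 1.0


# Inputs from the post: ~25% from the shrink, 20-25% from the architecture.
low = combined_gain(0.25, 0.20)
high = combined_gain(0.25, 0.25)
print(f"combined: {low:.1%} to {high:.1%}")
```

With those inputs the combined gain lands at roughly 50-56%, so the rumored 50% is consistent with the post's own numbers, if the premises hold.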

RTX needs to be orders of magnitude faster (and it already is compared to previous generations) in order to reach the magical full RT@4K/60 that everyone is hoping for.

That's not going to happen in the immediate future, so we'll most likely see some sort of DLSS 2.0 and other tricks to get playable performance.

Maybe it will finally have HDMI 2.1.

It would be stupid if they didn't, considering NVIDIA worked with LG on their X-series OLED televisions set to release this year.

Die shrinks don't automagically give you 20-25% performance bumps. It may appear that way, but remember that every die shrink we have ever seen has also come with an architecture 3-4 years newer. It's rare in any silicon market (GPU, CPU, APU, mobile) that a die shrink involves nothing more than taking the exact same chip and just making it smaller. When that does happen, the performance gain is extremely low. If you want proof, just look at the recent AMD Ryzen 1600 re-release on a shrunk node. It doesn't perform any better than the old ones...

While I agree that die shrinks aren't a big automatic gain, the Ryzen 1600 12nm, and heck, even the Ryzen 2600, are poor examples. These chips are essentially identical to the original Ryzen 1600. It didn't shrink; the dimensions are identical. In the old days, before fabs started exaggerating a lot, these would have been called new steppings, where you get slightly cleaner signals, so you can lower the voltage a bit, run a bit more clock, or draw a bit less power. That's it.

We can't predict what NVidia will get out of the 7nm process, but it won't be as bad as the Ryzen 14nm -> "12nm" update. Maybe look at Vega 64 to Radeon VII as the benefit of 7nm with minimal architecture improvement.

More relevant example:

8800 GTX (90nm) -> 9800 GTX (65nm) -> 9800 GTX+ (55nm), all with similar performance to each other.

But in the end both process and architecture advancement are subordinate to the design targets.

IMO NVidia will target about 30% generational improvement (similar to the last decade average), regardless of what the process and architecture deliver.

VRAM usage shot up dramatically quite soon after this generation's consoles were released, at least for texture settings. Next-gen consoles with more memory arrive at the end of this year. Whether having to run textures one notch below max (or lower) because of VRAM limits is a problem will depend on the person.

Rumored specs are so high that your mere presence in an online game guarantees you victory.

Edit: Actually this is only true for the Glorious Founder Edition.

The only operational reason for the target to be higher than 30% would be the smaller bump with Turing along with the increase in price per SKU (perceived, if arguing semantics). Essentially, while Nvidia targets enthusiasts that upgrade every generation to a degree as well as new users, their biggest target is probably the regular upgraders that hit every second or third generation.

Few were likely interested in trading a top-end 1000-series GPU, generally under US$800, for a US$1000+ top-end 2000-series GPU, given the gains in rasterization.

Whatever is actually possible given available technology -- and remember that Nvidia is a company that defines available technology in the GPU industry -- they do need to set a target that will actually interest buyers and motivate sales. So I agree, a minimum of ~30% sounds good, given the combination of a die shrink, a new architecture, and the current architecture being less well received. If they can do better, they'll have trouble keeping stock.

They have trouble keeping stock as it is. We can hate on the 2000 series all we want for not being a generational leap in pure performance and for raising prices, but the stupid things sold as fast as they could make them. It seems gamers have proven they are OK with 20-30% per generation.

We are all going to have to hope that Navi 2 was worth waiting for and that NV's spies have been telling them that. Otherwise, yeah, they have no reason to target more than 30%. So far, 30% has proven to be all they need to sell dies as fast as they can fab them. It's not like they are going to be stuck with a ton of 2000-series stock, unless they do something dumb like order a ton a week before they launch Ampere.

It's funny: our Micro Center has a case a mile long full of video cards (team green and team red), and you can see the dust around the boxes that aren't selling at these inflated, non-depreciated prices.

No citation needed; everyone knows that the bigger the chip, the higher the failure rate. If demand were as high for these chips as it was for Pascal, then yeah, I expect it would be a huge issue for Nvidia.

Don't need citations to point out the obvious. ... Still, no doubt a 754 mm² die simply cannot have a great yield. The number of fully functional chips coming off a wafer at that size can't be great... it's just physics...

It's silly to pay MSRP this late into the release cycle. If you were going to drop $1300 on a GPU, 15 months ago was the time to do it. Now $800 is the most I'd be willing to pay for a (used) 2080 Ti.

If NVIDIA go insane with their pricing again I’ll probably throw AMD a bone and get big navi.

Sounds logical, but without real numbers from the fabs, you are just guessing about the yield rate of the 754 mm² chip in question compared to any other chip. Size is a relatively small factor in yields: some designs and processes have great yields and others have shit yields while being the same size. Your logic holds assuming all else is equal between the production of different semiconductors; however, systemic production issues can vary greatly between runs.
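Both sides of this argument show up in the simplest textbook yield model (Poisson), where the fraction of defect-free dies is Y = exp(-A * D0), with A the die area and D0 the defect density. A sketch under stated assumptions: the D0 value below is a hypothetical placeholder, not a figure from any fab, and the model ignores salvaging partially defective dies into cut-down SKUs, clustering of defects, and design-specific issues.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    area_cm2 = die_area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)


# HYPOTHETICAL defect density, chosen only for illustration.
D0 = 0.1  # defects per cm^2

for area in (200, 450, 754):
    print(f"{area} mm^2: {poisson_yield(area, D0):.0%} defect-free")
```

With that placeholder density, the 754 mm² die comes out at roughly 47% defect-free versus roughly 82% for a 200 mm² die. So for a fixed process, bigger really is worse. But D0 is exactly the number nobody outside the fab knows, and changing it moves every result a lot.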

I wouldn't count on AMD

I don't think there are many people who are OK with a price increase. Those who are more than likely have money growing out their ass and are willing to spend whatever it takes for the best.

I fully expect at least a 30% increase in performance from a new arch and smaller node.

I also fully expect a 30% increase in price, since they discovered some people are OK with that
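Taken together, those two predictions cancel out on value: if performance and price both rise 30%, performance per dollar doesn't improve at all. Here is a small Python sketch of that ratio. The function name is just illustrative, and it treats "performance" as a single number, which glosses over things like RT and DLSS.

```python
def perf_per_dollar_change(perf_gain: float, price_gain: float) -> float:
    """Relative change in performance per dollar between two cards.

    Both arguments are fractions: 0.30 means +30%.
    """
    return (1.0 + perf_gain) / (1.0 + price_gain) - 1.0


# Scenario from the post: 30% faster and 30% more expensive.
print(f"{perf_per_dollar_change(0.30, 0.30):+.0%}")
# For comparison: 30% faster at the same price.
print(f"{perf_per_dollar_change(0.30, 0.00):+.0%}")
```

The first case comes out at +0%, meaning no gain in value at all. Only the second case is an actual upgrade per dollar spent.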

Unfortunately, I think you're right and that means I'm going to stick with my 1080 Ti a little longer unless an $800 part comes along that is 30%+ faster. I can afford the Ti tier cards, but I can't justify them - the value isn't there especially given my gaming patterns lately.

What are some high VRAM games I can try? I figured BF V would be one of the biggest users, but I haven't seen that go above ~6.5GB.

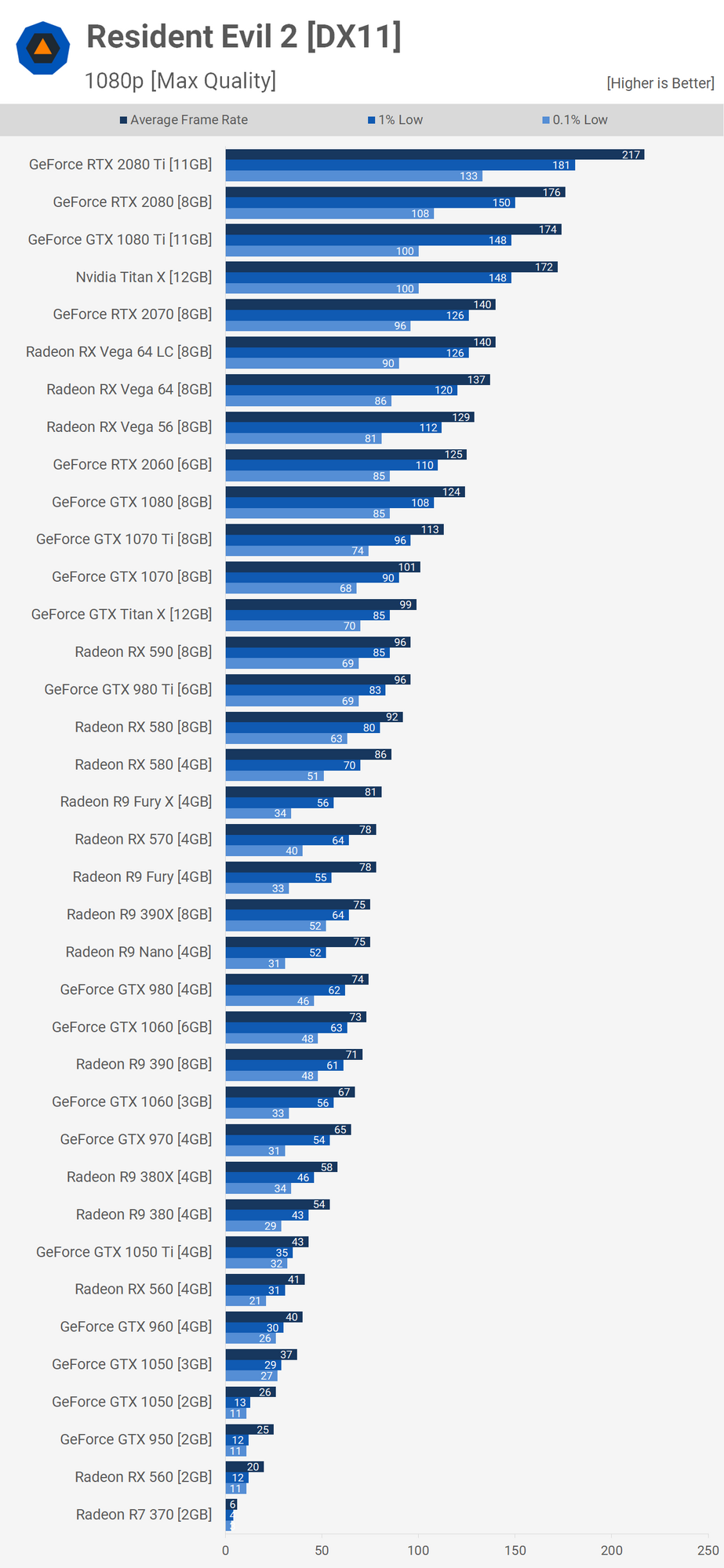

Resident Evil 2 was easily using at least 7-7.5 GB of VRAM at 1080p for me, for some reason. Haven't even tried it at 1440p.

If Ampere is 30% faster than the 2080 Ti, and the 2080 Ti was 30% faster than the 1080 Ti, then the Ampere card will be about 70% faster than the 1080 Ti (1.3 × 1.3 ≈ 1.69).

If your video card has 8 GB of VRAM, games will often just aggressively cache more than they need, but it's still quite playable with half that much.

I would imagine the $800 Ampere will be about the same as the 2080 Ti in performance, whereas the Ampere Ti will be about 30% faster than the 2080 Ti at 2080 Ti prices or above. So the $800 card would buy me a 30% boost if I'm right.

I can't justify $1200+ for a graphics card.

It's been over a year since the 2080 Ti started at $1200. Can we all just finally admit that the price on that card is now $1000? If people are going to whine about the 2080 Ti costing $1200, I'm going to whine about pre-launch AMD pricing and lousy AMD performance based on benchmarks from before they issue post-launch firmware bandaids.

If we stick to reality as it exists today, what we see here is that the 3080 is going to crush the 1080 Ti in terms of performance, and it's going to do it at the same price. The 3080 Ti is going to crush the 3080 with pricing TBD (but highly unlikely to exceed the $1200 2080 Ti FE, and most likely to match the $1000 2080 Ti AIB cards).

Don't forget tax.
