harmattan

Supreme [H]ardness

- Joined: Feb 11, 2008
- Messages: 5,129

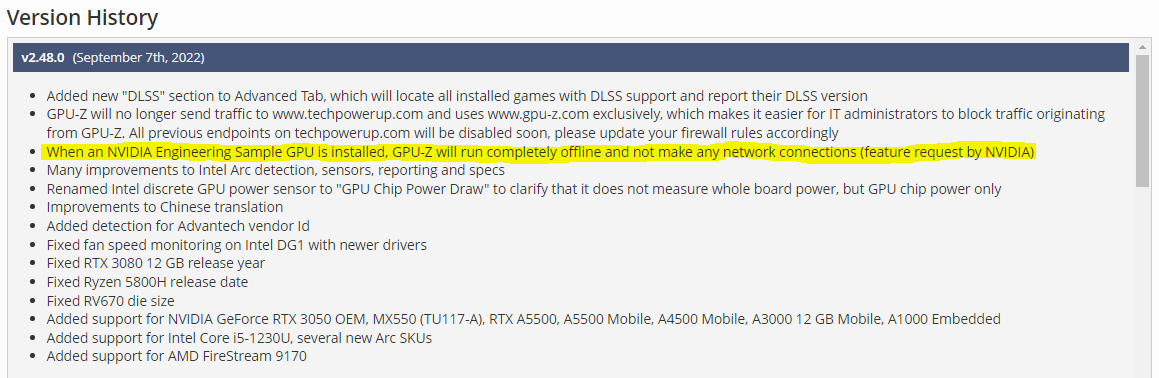

They're gonna do it. No one is playing at 8K, and it's been proven that heaps of cheaper cache memory can (somewhat) make up for a narrower bus. Any way to cut costs. I will LOL so hard at a 192-bit 4080.
