IdiotInCharge
NVIDIA SHILL
Joined: Jun 13, 2003 | Messages: 14,675


I don't think anyone is really questioning NV's focus on Raytracing. It's the occlusion of the other metrics that is suspect, that's what M76 was getting at.

RTX is all exciting until you realize very few games will use it in the next 12 months (AKA the main timeframe when Nvidia will sell 20 series before moving on to what's next), and only for certain effects at that. Therefore, rasterization performance is still a much more important topic until raytracing becomes more prevalent. Of course RTX is the new shiny stuff, but people are right to doubt and criticize NV for not sharing the values that actually matter for now: raster performance. I'm sure in the next 10 years the raster/raytrace balance will shift, and raytracing performance then could and should take precedence.

In the meantime, raytracing isn't very relevant to actual game performance, so leading with it is habitual NV shadiness. I'll be glad to see the 20 series kick butt, but even then, you can't ignore that it'll be a short-lived generation. 7nm is in production and around the corner, and that's where the real benefits lie.

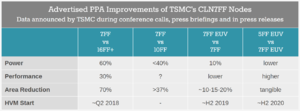

Real 7nm or PR 7 nm?

in for example BF V the raytracing results look impressive on their own but to me don't really blend in well with the rest of the game

I thought we were still waiting for mass DX12 adoption?

I think of it like when the first DirectX 12 GPUs came out: not many games supported it, and some of the early games saw performance losses. But DX12 was still a step forward; it just takes time for developers to learn a new API and how to optimize for it. It also takes time for the market to grow to the point where a DX12-only game is viable, and there are still few of those. And you can't have a market unless GPU makers start rolling out new cards, so you have to start somewhere.

I thought we were still waiting for mass DX12 adoption?

Yes, we are. At least Vulkan has had some wins (with DOOM, for example), and it is very similar in capabilities to DX12. So we know there are advantages if it's done right. But developers may be hesitant to go all in, for example if they want to support Windows 7 (still a large market) or people with older GPUs. But things are moving in that direction: for example, the new WoW expansion is using DX12 (with a DX11 option), and some Microsoft games have been DX12 exclusive. It takes time, though; that was my point.

I thought we were still waiting for mass DX12 adoption?

At least Vulkan has had some wins (with DOOM, for example), and it is very similar in capabilities to DX12.

Seems like Vulkan would be better since it's open? But MS can't control it and make money from it, or pay somebody to adopt it, so it's probably a harder sell.

Yes, we are. At least Vulkan has had some wins (with DOOM, for example), and it is very similar in capabilities to DX12. So we know there are advantages if it's done right. But developers may be hesitant to go all in, for example if they want to support Windows 7 (still a large market) or people with older GPUs. But things are moving in that direction: for example, the new WoW expansion is using DX12 (with a DX11 option), and some Microsoft games have been DX12 exclusive. It takes time, though; that was my point.

Seems like Vulkan would be better since it's open?

Better is always relative...

DX12 is close to what's used on the Xbox, one of the leading console platforms. Together with Windows, it represents the majority of non-phone game sales, and is thus the main development target.

Now, I'd prefer Vulkan myself, as virtualization has advanced to the point where the choice of host operating system is becoming fluid, but as noted above, we're not quite there yet.


Heh, Xbox is basically dead. The PS4 is so far ahead it's a joke. Microsoft might as well throw in the towel at this point.

The problem with consoles is that their "value" lies in how awful they make their competition, not in how good they actually are themselves. Consoles are only as good as their exclusives. Sony got all of them this generation, so Sony became better by making their competition worse.

It's why console gaming is sick and needs to die.

if Bethesda decides to leverage that technology for their other IP families like Fallout and Elder Scrolls.

In which case, fuck yeah!

Yes, it's an exception, but it proves that with the right optimization it can be done.

I wish more games leveraged Vulkan, the uplift is quite tangible. I'm hoping the next Elder Scrolls will follow this route.

DOOM had a nearly 40% fps boost on certain systems when running Vulkan, particularly on AMD GPUs and machines with weaker CPUs.

Not a major win, since it's not much different from just running it with OpenGL. For actual wins they need to be able to demonstrate compelling differences, and neither D3D12 nor Vulkan has managed to do that yet.


Spot on. I'd also add that in BFV, for example, the raytracing results look impressive on their own but, to me, don't really blend in well with the rest of the game; it's like they are too in-your-face compared to the more traditional techniques used for the rest of the visuals. While this may be closer to reality, that's how they look in game to me. By comparison, Tomb Raider seems to use raytracing mostly for shadows, and to me those look more like stuff you will miss while actually playing the game. I feel like raytracing has to handle more things in a scene to be truly better than the very good faking techniques we are used to.

I'm also a bit concerned about where they can go in terms of performance. 7nm is already pretty damn small. I imagine they would start hitting bigger barriers with rasterization if they had not introduced the raytracing aspect. At this point raytracing takes us back to something like GTX 780 performance where it can handle 1080p pretty well but beyond that starts to struggle. I'm sure some of that can be alleviated with optimizations as developers get more experience with it, sort of like going from PS4 release titles to something like Horizon or God of War.

I think RTX's implementation of ray tracing is overhyped and doesn't add that much, but if we ever get full real-time ray tracing it will be freaking amazing.

It's possible that RTX's raytracing has potential, but I think we need to wait a generation or two to see how it develops.

I think it also matters whether AMD develops something comparable and it becomes a must-have for games, or whether it stays one of those Nvidia-only features that don't get a ton of implementation and development.

RTX *is* full ray tracing. It's just that developers have to learn how to use it, and most of what we have seen so far uses hybrid approaches where ray tracing is used for just reflections, or just shadows, etc., but the hardware is there for developers to leverage.

I think RTX's implementation of ray tracing is overhyped and doesn't add that much, but if we ever get full real-time ray tracing it will be freaking amazing.


Right, but I doubt it can be retro-fitted on existing architecture, and we don't know what kind of lead time they need. Meaning, if the next 1 or 2 generations of AMD GPUs are already in the pipeline, how flexible are they to add a new design this late in the game?

If they have any relationship with Microsoft, they would have heard about it in advance, at least indirectly, through the DirectX team. Their fault if they didn’t.


Technology gets cheaper after the first generation, not more expensive.

Yeah, and we will need a 60-month loan to buy one.

T&L had its share of controversy and skeptics, just as RT does now. Sure, you didn't have to decrease the resolution, but it wasn't any faster than processors coming out with SSE and 3DNow! extensions at the time. The GeForce 256 was a few years too late to make a dramatic impact. RT is a few years too soon.

Except you didn't have to go back to 320x200 to enjoy HW T&L on it. T&L was a game changer then and there, not 3-5 years later. At this point this seems like a distraction. From what, you may ask? We'll find out soon, when independent benchmarks on public drivers start to come out.

Yeah, DXR has a fallback which will work with AMD cards (or non-RTX Nvidia cards) but it's supposedly super slow and not usable for release.

T&L had its share of controversy and skeptics just as RT now... The GeForce 256 was a few years too late to make a dramatic impact. RT is a few years too soon.

I just said AMD collaborated with MSFT just like NV to create DXR, they already knew it was coming because they helped create it...

NVidia really seems to be the driver of this.

Indeed, but to be fair, it's the only way to access it if GPUs don't have any dedicated DXR-accelerating hardware. Point being, there is zero chance AMD's next GPUs won't have raytracing hardware. Considering they've acknowledged waiting on 7nm, plus their much smaller R&D budget compared to NV, it makes sense that their next consumer GPUs won't be ready until next summer.

Also, remember that Navi is also being designed with XB/PS5 in mind, which strongly suggests the next consoles will have at least first-gen raytracing capability. That means that by 2020 we'll have second-gen raytracing GPUs and first-gen raytracing consoles. This should ensure DXR doesn't stay a niche feature and instead becomes pretty common within a couple of years.

Yes, you said that. Do you have any evidence of serious AMD participation? No doubt AMD would have been informed and given some chance for feedback, but this looks much more like a NV and MS partnership.

I have a hard time believing next gen consoles would have the kind of horsepower needed to handle raytracing in any capacity. Too soon to be able to do that at low cost. We are more likely to see the GPU used to provide better framerates at checkerboard 4K. AMD's desktop hardware will have raytracing support but I think we need to go all the way to PS6 for consoles to get on that bandwagon.