The 64 is in the 1080 range and this new card is in the 1080 Ti / 2080 range, if their supposed benchmarks end up being accurate.

Did Vega sneak up on the Nvidia 10 series in performance, or am I just remembering wrong? I looked at some benches, and you guys are right: Vega 64 now trades blows with the 1080.

The performance level wasn't like that at launch, was it? I thought the Vega 56 was like a 1070, and the Vega 64 was pretty significantly slower than a 1080 at launch in most things and comparable in a few? I'll admit I haven't kept track of Vega since I sold mine three months after I bought my launch units. Either things have changed or my memory has failed me.

Radeon 7 (Vega 2, 7nm, 16GB) - $699 available Feb 7th with 3 games

- Thread starter Digital Viper-X-

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

Found this on Guru3D.

SEEMS LEGIT

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Could be real. Would be pretty nice if it was.

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

Could be real. Would be pretty nice if it was.

https://www.anandtech.com/show/1334...tx-2080-ti-and-2080-founders-edition-review/8

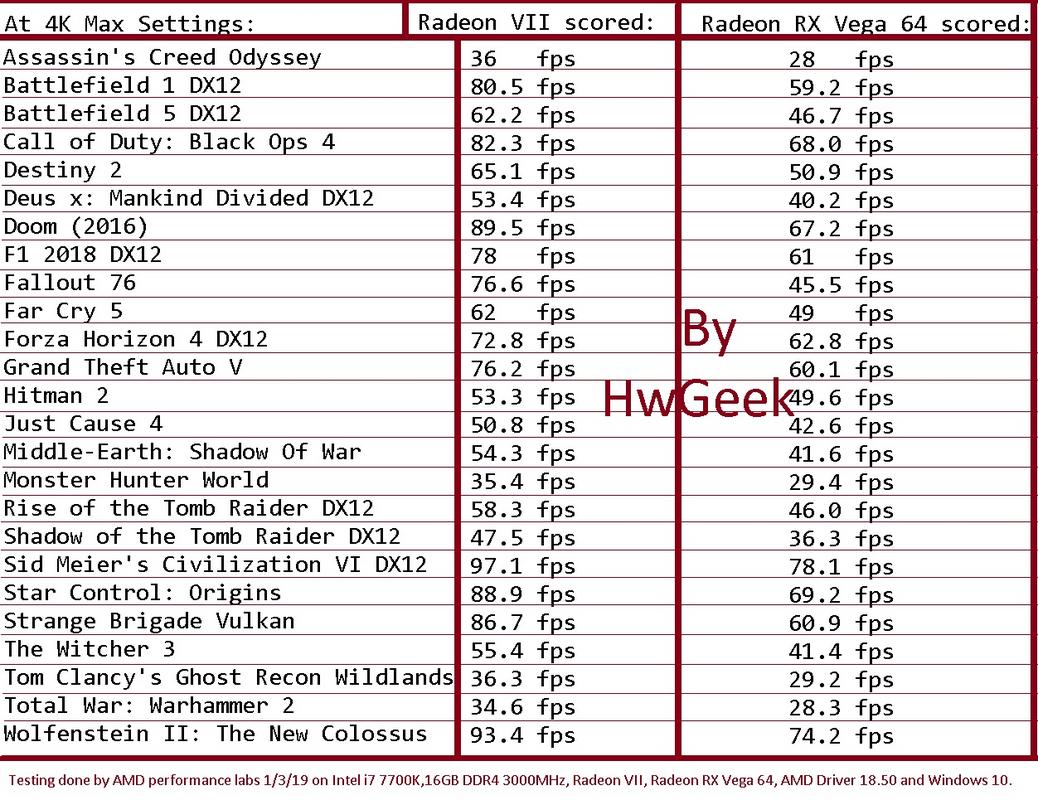

Based on this review, the numbers seem spot on for Vega 64. If these numbers are accurate, it looks like the new card is right below the 2080 and faster than the 1080 Ti.

RamboZombie

Limp Gawd

- Joined

- Jul 11, 2018

- Messages

- 141

The card is DOA. The 2080 is not $800 anymore. Several models have been sub-$650 in recent weeks and even a brand new EVGA right now is $700 flat with a 2-game bundle.

https://www.newegg.com/Product/Product.aspx?Item=N82E16814487415

As for the DXR discussion, you can simply buy the 2080, never enable DXR, and it's functionally the same as Vega 2, except you also get DLSS. For the record, I have never been a proponent of either of these features. But if it's the difference between NOT having them and HAVING THEM for $0 price difference... hello... get the RTX.

And it's probably a silly comparison anyway since the 3 game charts they showed all favor AMD, particularly Far Cry 5 (BF5 to a lesser degree). It may end up being 5-10% SLOWER than the 2080 on average.

As for now this is a repeat of the Vega 1 launch except this time AMD has the RAM advantage so I have a pretty good idea what the next few weeks are going to sound like. AMD can launch an 8GB Vega 2 for a lot cheaper and maybe that card will be enticing.

Whatever... all I know is that I want this card. Why the propaganda? Do you feel that it is needed? Do you have buyer's remorse?

RTX is dead in the water...

And also, this does FreeSync over HDMI, the one killer feature for me, and I don't think NV will support that...

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

If the R7 can do close to 60fps at 4K in most popular titles out of the box, it should do well.

That of course remains to be seen.

Of course, I really don't know how many people out there are doing 4K gaming or thinking about going there.

But that's where I am heading personally, so I am interested.

Outside of gaming, the 16GB of memory would be useful for some creative apps.

And in that segment, if it performs well, it would be cheap at $700.

I'm a little disappointed that this isn't available in an 8GB version for $499. HBM is really expensive, and having 16GB and 1TB/s worth of it is probably why the Radeon 7 needs to cost as much as it does. As such, right now you are stuck paying for hardware you don't use regardless of which manufacturer you go with: an extra 6-8GB of VRAM on the AMD side, tensor and RT cores on the Nvidia side.

Having 16GB of memory and (potentially) good double-precision performance in theory makes it a good workstation card, except... AMD's software support for content creation apps and scientific work is crap. Most GPU-accelerated renderers require CUDA, and popular scientific packages (e.g. MATLAB, Ansys) are also CUDA-only. You pretty much end up stuck with open-source software (LuxRender, some flavors of OpenFOAM), which is less common in the industry and has worse support.

I suspect they don't have half capacity HBM stacks with the same pinouts.

So is the 2080 you cuck.

Edit: sorry, didn't mean to name-call; I was thinking it and typed it out.

Except at least the 2080 has additional features (ray tracing and DLSS) that differentiate it from the 1080 Ti. The Radeon VII literally offers nothing tangible over the 1080 Ti or 2080, yet costs the same.

You can argue that it has 16GB of HBM, but until I see a performance advantage from it, it's a moot point.

Hell, I even consider the RTX 2080 overpriced, but at least it still offers something more upfront.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I think that if I had to choose between ray tracing and double the VRAM, I'd go for the latter.

At least at the current state of RT.

Again, if utilizing HBM and doubling the VRAM capacity shows a noteworthy performance boost for the Radeon VII over the RTX 2080, then I'd be in the same boat.

But if that was the case, then why would AMD cherry-pick two games that demonstrate essentially equal performance between the two cards during their keynote? If the VRAM provided an additional performance benefit over the 2080, wouldn't they want to present that during the keynote?

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I'm not analyzing AMD's choice of demo games.

Newer games seem to like more of everything: system RAM, CPU cores, VRAM.

So for me personally, the VRAM seems more attractive.

This is where I'm at. I am putting together a system for video editing for my church, and depending on the budget I have, I would love to have this card. The Vega Frontier would have been nice, but it's not available anywhere. This should be available in decent quantities at MSRP right when I would be putting the system together. That 16GB is a huge advantage, and at the price, it makes this exactly what I want.

Honestly, this card is geared towards content creators as well. That 16GB of HBM2 is damn good, and for that price it's a steal. Game and do other things: that is the message here. Well worth the price. 16GB HBM2 or 8GB GDDR6? The choice is easy for them.

harmattan

Supreme [H]ardness

- Joined

- Feb 11, 2008

- Messages

- 5,129

I think that if I had to choose between ray tracing and double the VRAM, I'd go for the latter.

At least at the current state of RT.

This, without a doubt. More VRAM is usable in nearly every practical scenario, depending on the application and your display capabilities. RT is usable in what... a single lackluster game, and has been shown to bog down performance at times to an unacceptable level?? RT and DLSS might be interesting in a few years if devs adopt them and the hardware can push it; for the foreseeable future I'm only praying to that old-time religion called rasterization.

That said, I'm still salty AF that VII offers the same performance/dollar you could get two years ago.

Boil

[H]ard|Gawd

- Joined

- Sep 19, 2015

- Messages

- 1,439

I'm a little disappointed that this isn't available in an 8GB version for $499. HBM is really expensive, and having 16GB and 1TB/s worth of it is probably why the Radeon 7 needs to cost as much as it does. As such, right now you are stuck paying for hardware you don't use regardless of which manufacturer you go with: an extra 6-8GB of VRAM on the AMD side, tensor and RT cores on the Nvidia side.

Having 16GB of memory and (potentially) good double-precision performance in theory makes it a good workstation card, except... AMD's software support for content creation apps and scientific work is crap. Most GPU-accelerated renderers require CUDA, and popular scientific packages (e.g. MATLAB, Ansys) are also CUDA-only. You pretty much end up stuck with open-source software (LuxRender, some flavors of OpenFOAM), which is less common in the industry and has worse support.

ProRender...?!?

dvsman

2[H]4U

- Joined

- Dec 2, 2009

- Messages

- 3,628

After having some time to think about it, my current thoughts are - If the R7 performance was (for the money) better than announced in raster ops I'd be "Take my money now" but as is, both Nvidia and AMD have disappointed this gen.

- Nvidia focused on RT/Tensor, which down the road might be cool AF, but as with every tech, the 1st gen is always the "Early Adopter Tax" edition and not an actually usable product, except maybe for the very top tier: the 2080 Ti.

- AMD just tick-tocked their Vega tech: smaller, a little bit faster and more power efficient. Price will be the NEW market average (higher this gen than last gen, like Nvidia did as well). AMD is claiming roughly +25-30% performance, which is about the same as Nvidia's claims for the new RTX over last-gen GTX.

Although AMD hasn't announced their entire Radeon product stack, which may make things look better or worse (is the R7 the top of the stack @ $699, with everything slotting in below that? Might there be an R9?)

As a Titan Xp (SLI) owner, I'm starting to have my doubts about doing any graphics card updates this cycle and just waiting for gen 2 RTX (skipping RTX 1) or AMD Navi (skipping Vega 2). Though once R7 comes out and comparison benches show up, maybe that will make things more definitive for Team Green or Team Red.

I found it a bit shocking that AMD is sticking with HBM memory. Their profitability on this video card will be held hostage by the prices of HBM memory again?

And a 16GB frame buffer also seems completely unnecessary unless that is the most price efficient amount per video card?

The news that it competes with the 2080 performance-wise is good, the $699 price point is not so good though. I mean, I can already find the 2080 for $699 on Newegg today.

So what's the real benefit if the performance will be similar? If I'm picking ray tracing vs. an extra 8GB of memory... probably the RTX, since at least it offers something new. There are ZERO games that take advantage of more than 8GB of VRAM; on the other hand, the number of RTX/DLSS games is nonzero.

I noticed they didn't brand it as Vega 2, rather as Radeon 7. Although she did say Vega technology powered it. Hmmm did Vega brand make that bad of a marketing impression?

PS I also feel a $499 8GB version of this Radeon 7 would be far more palatable/marketable for gamers than trying to convince people that they need 16GB of memory when nothing except workstation/professional apps would come even close to taking advantage of that much memory.

Auer

[H]ard|Gawd

- Joined

- Nov 2, 2018

- Messages

- 1,972

I'm thinking 4K gaming will benefit from more VRAM.

Looking forward to some benchmarks.

DarkLegacy

[H]ard|Gawd

- Joined

- Dec 26, 2004

- Messages

- 1,097

Whatever... all I know is that I want this card. Why the propaganda? Do you feel that it is needed? Do you have buyer's remorse?

RTX is dead in the water...

And also, this does FreeSync over HDMI, the one killer feature for me, and I don't think NV will support that...

Really comes down to the buyer, but if I were to look at both cards being the same price, I would favor the 2080, since the technological advancements would justify the high price tag. The only thing I would look at the Radeon VII for is the 16GB of VRAM, which should give it a considerable edge in some games that can take advantage of it. Just looking at the reference cards tells me enough, however. The card is going to run very hot and will most definitely have a higher power draw compared to the 2080. I suspect that it will not be very good at overclocking either, but that is just an assumption. Like most, I will wait for the reviews to drop to see where the performance lands, but for the time being, I would consider the RTX 2080 the better buy at the $699 price point.

I'm thinking 4K gaming will benefit from more VRAM.

I think the bandwidth will help, but I'm not worried about the VRAM yet. I still haven't loaded up 12GB on my GPU at 4K.

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

Could be real. Would be pretty nice if it was.

Seems like the benchmarks I posted might be legit; the same numbers are on the HardOCP front page.

I'm running into 8GB limits in VR on my Vega 56. If they fix the bad VR (geometry) performance of 1st-gen Vega, coupled with 16GB of RAM I'll be in the market for one to replace my GTX 1080, as VR with the Odyssey+ is starting to push it pretty hard. If not, I'll wait this gen out, as it's been pretty disappointing. One game with RT, none with DLSS, and the fact that you have to sit there and look at stills to see if the shit is working means RTX is not even worth considering as a reason to buy. Going just by performance, it's going to be 2080 vs. Radeon 7, and even before launch, the 7 is a hair faster. Let's hope that extra billion transistors fixes the few issues 1st-gen Vega had.

I don't know how people could be disappointed with this launch and be happy with Nvidia. They took a 1080 Ti, added a bunch of so-far-useless shit, cut the RAM, sold it for the same price, and gave us the pile that is the RTX 2080. At least on the Radeon 7 you get RAM that won't fill up in 4K games.

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

this generation blows.

VII actually provides a nice improvement over Vega, considering it seems like it's just a die shrink and they doubled ROPs and memory bandwidth. It's just that Vega was so late, and we're still at around 1080 Ti performance for the same price the 1080 Ti launched at. If Vega had launched before or around the launch of the 1080 Ti, and VII had launched when the 64 did, I think AMD would look about 1000x better right now.

What the fuck was Raja doing this whole time? Vega 64 was pretty much a tweaked Fury with some added pipeline stages to bump up frequency. Good riddance on Raja leaving.

VII makes sense where it is performance wise considering it's ~1 year after 64 launched.

I found it a bit shocking that AMD is sticking with HBM memory. Their profitability on this video card will be held hostage by the prices of HBM memory again?

This is because AMD is saving on other things. They don't have to worry about production or a new memory controller. This is a pipe-cleaner product: first-gen 7nm, basically an MI50 with slightly higher clocks. So they basically repackaged the MI50 with a new cooler and higher clocks to make it a gaming card. Rumors were that you won't see these produced in super-high quantities and that production would likely depend on demand. AMD is very strategic about this.

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

There are ZERO games that take advantage of more than 8GB of VRAM; on the other hand, the number of RTX/DLSS games is nonzero.

Not true. Deus Ex: MD at 4K max settings can use more than 11GB of VRAM. Also, if you have an Eyefinity multi-monitor setup and enable MSAA, it's possible to go above 8GB.
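To see why multi-monitor resolutions and MSAA push VRAM use up, note that a multisampled render target grows linearly with both pixel count and sample count. A rough sketch, assuming 4 bytes per sample for color and 4 for depth/stencil, no compression, and a hypothetical 3 x 2560x1440 Eyefinity layout. Real games keep many more buffers and textures on top of this, so it only illustrates the scaling, not total usage:

```python
def msaa_target_bytes(width: int, height: int, samples: int,
                      color_bytes: int = 4, depth_bytes: int = 4) -> int:
    """Bytes for one uncompressed multisampled color buffer plus its depth/stencil buffer."""
    return width * height * samples * (color_bytes + depth_bytes)


MIB = 2**20
single_4k = msaa_target_bytes(3840, 2160, samples=1)
eyefinity_8x = msaa_target_bytes(3 * 2560, 1440, samples=8)  # hypothetical 3x1440p setup

print(f"4K, no MSAA:      {single_4k / MIB:.0f} MiB")
print(f"3x1440p, 8x MSAA: {eyefinity_8x / MIB:.0f} MiB")
print(f"Growth factor:    {eyefinity_8x / single_4k:.1f}x")
```

Under these assumptions a single target goes from about 63 MiB to 675 MiB, roughly a 10.7x jump, and every additional multisampled buffer an engine allocates scales the same way.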

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

This is because AMD is saving on other things. They don't have to worry about production or a new memory controller. This is a pipe-cleaner product: first-gen 7nm, basically an MI50 with slightly higher clocks. So they basically repackaged the MI50 with a new cooler and higher clocks to make it a gaming card. Rumors were that you won't see these produced in super-high quantities and that production would likely depend on demand. AMD is very strategic about this.

Yeah, this seems like a placeholder for people saying AMD doesn't compete on the high end. AMD now has a high-end card and didn't have to do a ton of work to get it.

Boil

[H]ard|Gawd

- Joined

- Sep 19, 2015

- Messages

- 1,439

I noticed they didn't brand it as Vega 2, rather as Radeon 7. Although she did say Vega technology powered it. Hmmm did Vega brand make that bad of a marketing impression?

They are calling it Radeon VII, but look closely at that "VII"...

It is supposed to be a Roman numeral seven, but the "V" is actually for "Vega" & the "II" is actually for "Two"...

Vega II...

Hot, too much power draw, late, out of stock or overpriced on release...

Yeah, they wanted to step away a bit on the Vega brand being obvious...

Now, where is the Nano model...?!?

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

Some more news:

https://fudzilla.com/news/graphics/47922-amd-shares-more-radeon-vii-details

There are some significant changes to the package due to the size of the new 7nm second-generation Vega GPU: we are looking at a 331 mm² GPU surrounded by four 4GB HBM2 chips. According to AMD, it offers 1.8x gaming performance per area, 2x memory capacity and 2.1x memory bandwidth compared to the Radeon RX Vega 64.
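AMD's 2x capacity and 2.1x bandwidth figures can be sanity-checked with the usual peak-bandwidth arithmetic: bus width times per-pin data rate, divided by 8 bits per byte. A minimal sketch; the bus widths and per-pin data rates (1.89 Gbps for Vega 64, 2.0 Gbps for Radeon VII) are assumptions taken from the cards' published specs, not from the article:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed specs: Vega 64 = 8GB on a 2048-bit HBM2 bus at 1.89 Gbps/pin,
# Radeon VII = 16GB on a 4096-bit HBM2 bus at 2.0 Gbps/pin.
vega64_bw = peak_bandwidth_gbs(2048, 1.89)
radeon_vii_bw = peak_bandwidth_gbs(4096, 2.0)

print(f"Vega 64:    {vega64_bw:.1f} GB/s")
print(f"Radeon VII: {radeon_vii_bw:.1f} GB/s")
print(f"Capacity ratio:  {16 / 8:.1f}x")
print(f"Bandwidth ratio: {radeon_vii_bw / vega64_bw:.2f}x")
```

Under those assumptions this works out to about 484 GB/s vs. 1024 GB/s, a ratio of roughly 2.12x, which lines up with AMD's "2.1x" claim.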

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Also, remember that you can barely find a new 1080 Ti anymore for $699. Used, sure, but I don't think they are making them anymore, so they won't get easier to find.

If benchmarks are true, we have a 1080 Ti / 2080 competitor from AMD that is at a somewhat reasonable price. I think it will do well.

If benchmarks are true, we have a 1080 Ti / 2080 competitor from AMD that is at a somewhat reasonable price. I think it will do well.

This. I am disappointed. Why pay for 16GB of HBM? It's not really usable. Then again, neither is ray tracing, not this gen. Both sides have offered us cards with features that we can't use yet.

They don't need to. This card isn't competitively priced, IMO.

It'll likely have the same performance as a GTX 1080 Ti...except 2 years later...and at the same price.

If you are starting fresh, that works great, but it's a hassle to port over all your assets from, say, Arnold. Different renderers have slightly different behaviors with regard to lighting, materials, etc., and your art team might not appreciate the changes...

5150Joker

Supreme [H]ardness

- Joined

- Aug 1, 2005

- Messages

- 4,568

Also, remember that you can barely find a new 1080 Ti anymore for $699. Used, sure, but I don't think they are making them anymore, so they won't get easier to find.

If benchmarks are true, we have a 1080 Ti / 2080 competitor from AMD that is at a somewhat reasonable price. I think it will do well.

At 2016 prices it is way overpriced. Nobody who is a gamer (whom this card is intended for) gives a shit about HBM2. Both the 2080 and this card cost $100 too much. At least the 2080 can command a bit more of a premium than this thing, since it does have more features.

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

Wow, it amazes me that people already know exactly how fast VII is in games.

We know because AMD released benchmarks, so it will be at most that fast, and we can expect within all reason for it to be a bit slower than shown in AMD's own benchmarks. All manufacturer benchmarks should be taken with a grain of salt, with AMD's perhaps a bit larger than most.

Neapolitan6th

[H]ard|Gawd

- Joined

- Nov 18, 2016

- Messages

- 1,182

This. I am disappointed. Why pay for 16GB of HBM? It's not really usable. Then again, neither is ray tracing, not this gen. Both sides have offered us cards with features that we can't use yet.

I think it is more about memory bandwidth.

16GB of HBM means four HBM stacks, which gives a 4096-bit memory bus, as opposed to an 8GB, two-stack, 2048-bit memory bus (like Vega 64).

(Not sure if there are any 2GB HBM2 modules that would allow four modules adding up to 8GB.)
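The stack arithmetic above can be sketched as follows, assuming the standard HBM2 interface of 1024 bits per stack (8 channels x 128 bits). The 4 x 2GB configuration is hypothetical, included only to illustrate the question in the last line: once stack size can vary, capacity and bus width are independent.

```python
# Each HBM2 stack exposes a 1024-bit interface (8 channels x 128 bits),
# so total bus width scales with stack count, independent of stack capacity.
HBM2_BITS_PER_STACK = 1024


def hbm_config(stacks: int, gb_per_stack: int) -> tuple[int, int]:
    """Return (total capacity in GB, total bus width in bits) for a stack layout."""
    return stacks * gb_per_stack, stacks * HBM2_BITS_PER_STACK


print(hbm_config(2, 4))  # Vega 64-style layout -> (8, 2048)
print(hbm_config(4, 4))  # Radeon VII-style layout -> (16, 4096)
print(hbm_config(4, 2))  # hypothetical 4 x 2GB stacks -> (8, 4096)
```

The last line is the scenario the post wonders about: the same 4096-bit bus (and so the same bandwidth at a given data rate) with half the capacity, if suitable 2GB stacks were available.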

sabrewolf732

Supreme [H]ardness

- Joined

- Dec 6, 2004

- Messages

- 4,778

We know because AMD released benchmarks, so it will be at most that fast, and we can expect within all reason for it to be a bit slower than shown in AMD's own benchmarks. All manufacturer benchmarks should be taken with a grain of salt, with AMD's perhaps a bit larger than most.

I'm going to wager that AMD's benchmarks are accurate. I think much of the bullshit went out the door with Raja and crew. Su seems to be much less of a fan of the trash talking and debauchery. Ryzen numbers were conservative.

The Vega 64 numbers benchmarked alongside the VII are spot on. Games with mediocre increases were also included (7% and 15%).

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

At 2016 prices it is way overpriced. Nobody who is a gamer (whom this card is intended for) gives a shit about HBM2. Both the 2080 and this card cost $100 too much. At least the 2080 can command a bit more of a premium than this thing, since it does have more features.

You are suggesting that more features on the Nvidia card are what gamers want. HBM2 does a lot more than just fast memory, and it allows features such as HDR to work well due to its bandwidth advantage.

So that leaves all the gamers in the world who play Battlefield 5 to choose Nvidia, because that is about the only thing that has used the extra features.

Ooh, then you have the texture compression; isn't that a framebuffer feature, DLSS? But you have to wait for Nvidia to implement it.

Those extra features pretty much depend on Nvidia implementing them. And that has more value?

5150Joker

Supreme [H]ardness

- Joined

- Aug 1, 2005

- Messages

- 4,568

Final Fantasy, Metro, BFV and others. In what universe do you need 16GB of HBM2 for HDR?

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

HBM2 does a lot more than just fast memory, and it allows features such as HDR to work well due to its bandwidth advantage.

HBM2 is literally just fast memory. It's also unrelated to HDR; the delta that HDR adds is minuscule compared to the rest of the graphics pipeline. The Radeon 7 will still be slower.
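The "minuscule delta" claim can be put in rough numbers. A minimal sketch, assuming HDR rendering swaps an 8-bit RGBA target (4 bytes/pixel) for an FP16 RGBA target (8 bytes/pixel), and counting one full-screen write per frame at 4K/60 against roughly 1 TB/s of peak bandwidth. Real frames touch their targets many times, so scale by the pass count. An HDR10 output in a 10:10:10:2 format is still 4 bytes per pixel, so that part adds nothing.

```python
def pass_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Bytes written by one full-screen pass over a render target."""
    return width * height * bytes_per_pixel


WIDTH, HEIGHT, FPS = 3840, 2160, 60   # assumed: 4K at 60 fps
PEAK_GBS = 1000.0                     # assumed: ~1 TB/s peak, taken as 1000 GB/s

sdr = pass_bytes(WIDTH, HEIGHT, 4)    # 8-bit RGBA
hdr = pass_bytes(WIDTH, HEIGHT, 8)    # FP16 RGBA
extra_gbs = (hdr - sdr) * FPS / 1e9   # extra GB/s for one pass per frame

print(f"Extra traffic per pass: {extra_gbs:.2f} GB/s "
      f"({extra_gbs / PEAK_GBS:.2%} of peak)")
```

Under these assumptions one pass adds about 2 GB/s, around 0.2% of peak; even twenty such passes per frame would stay in the low single-digit percent range.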

So that leaves all the gamers in the world that play Battlefield 5 to choose Nvidia because that is about the only thing that has used extra features.

For now; the hardware is here, and the software is coming along nicely. The Radeon 7 lacks the hardware completely and isn't any faster.

Ooh, then you have the texture compression; isn't that a framebuffer feature, DLSS? But you have to wait for Nvidia to implement it.

Those extra features pretty much depend on Nvidia implementing them. And that has more value?

Absolutely. Given Nvidia's marketshare and history, useful implementations are highly likely.

Pieter3dnow

Supreme [H]ardness

- Joined

- Jul 29, 2009

- Messages

- 6,784

That is why there are so many ray tracing titles, because of Nvidia's market share? Rather contradictory, isn't it?
