Presbytier

[H]ard|Gawd

- Joined: Jun 21, 2016
- Messages: 1,058

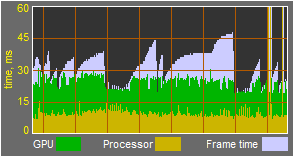

> I can't see how NVIDIA can be faster than AMD in new upcoming games.

How so? They already are, even in DX12 and Vulkan games. The gains AMD cards have received so far are not enough to offset how well NVIDIA cards are already performing. Plus, very few games are being made with DX12 or Vulkan; DX11 is still the go-to API for many games coming out.
