NamelessPFG (Gawd; joined Oct 16, 2016; 893 messages)

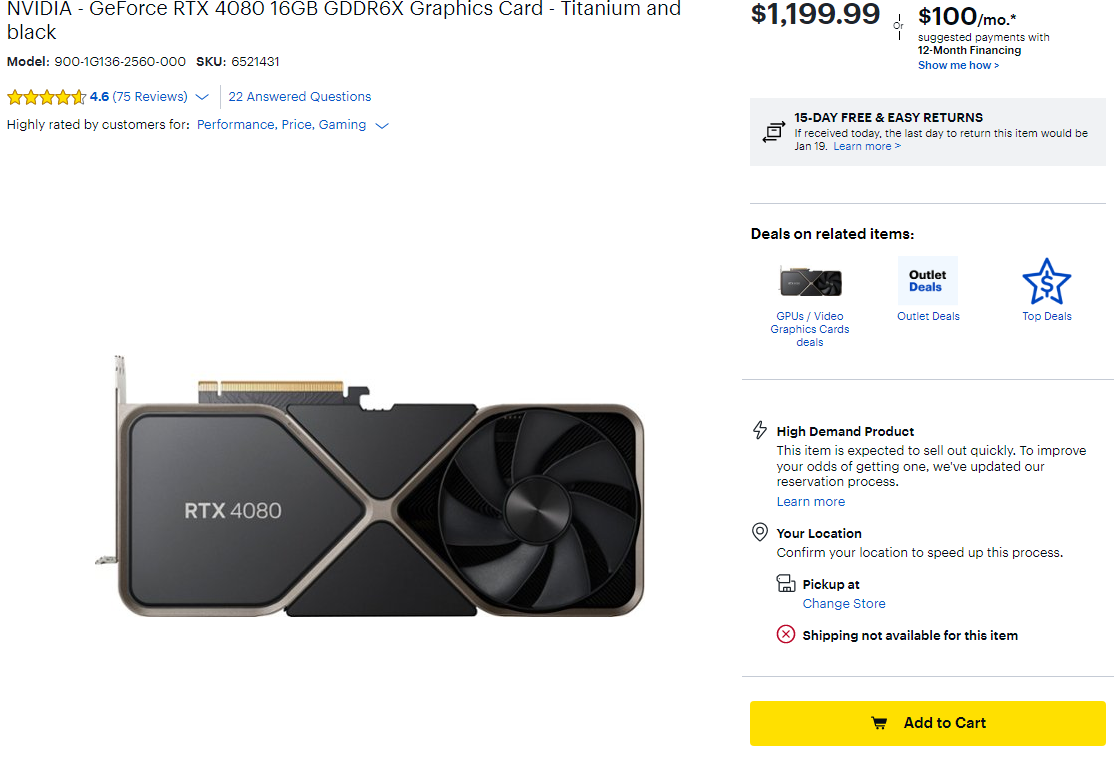

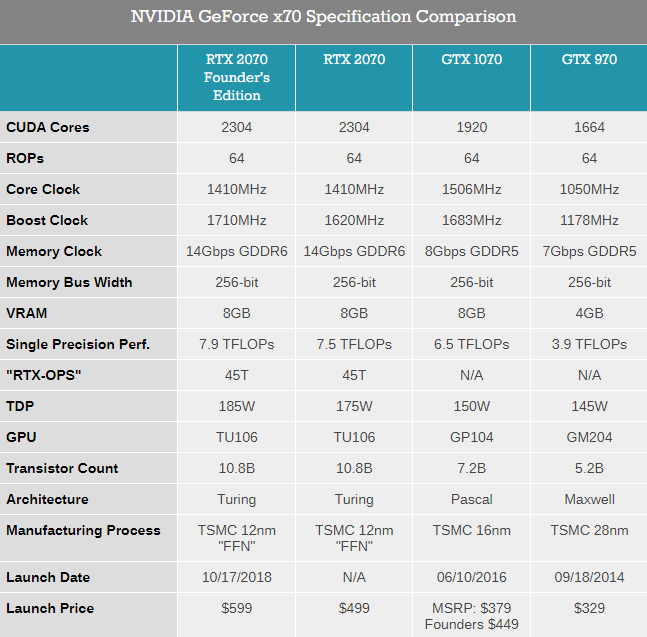

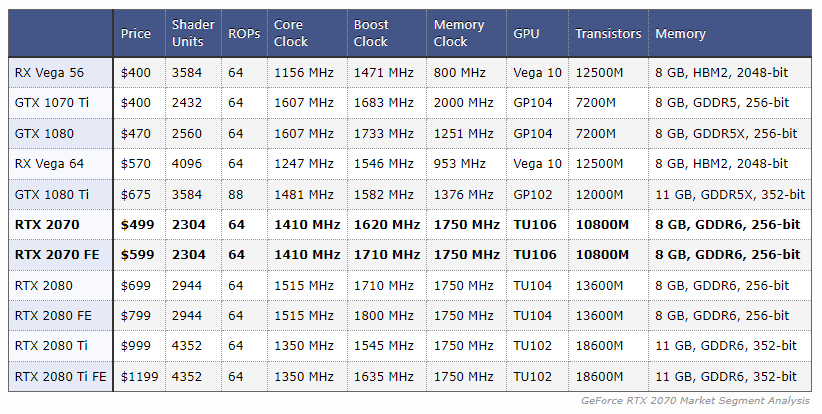

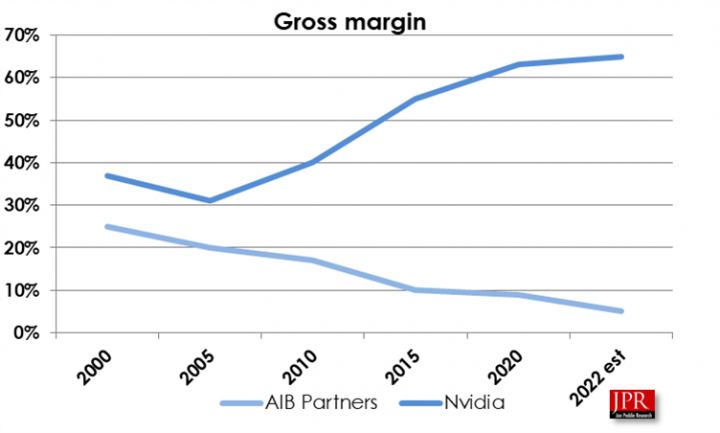

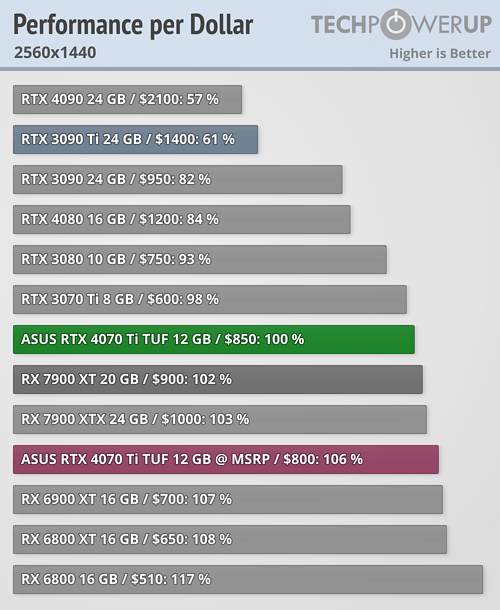

> I've been silent about this for a while now, but it's been bothering me almost since the release. How can people tout the cost savings of chiplets and celebrate a company releasing a $1K GPU? A GPU that has had so many issues and so much drama, no less.
>
> GPU pricing is just such a shit show. We can only pray it returns to sane pricing at some point. I consider myself lucky to be running a 3070, and I'll hold on for dear life until a sanely priced replacement gets released.

I don't think chiplet GPUs will get particularly exciting until AMD manages to bring out a multi-GCD design. It would need to avoid the jank that plagued their past dual-GPU cards, going all the way back to the Rage Fury MAXX (roughly a competitor to the ol' 3dfx Voodoo5 5500). CrossFire and SLI are super dead right now, so on-package multi-GCD is the only real path left.

If they can link up multiple GCDs and present them as seamlessly as a single monolithic GPU, then we're in for some real breakthroughs in GPU scaling. That would come without the PCB complexity and VRAM inefficiencies you get with multiple discrete GPUs. (Case in point: the 3dfx Voodoo5 6000. It had four VSA-100s with 32 MB of RAM each. Because each chip had to hold its own copy of the same data, you only had 32 MB of effective VRAM rather than 128 MB. It also needed insanely elaborate multi-layer PCBs to handle all that, which drove up production costs. That lasted right up until 3dfx's bankruptcy and prompt devouring by NVIDIA, whose much more elegant single-chip GeForce 256 was rapidly iterated upon over the next few years.)

May your RTX 3070 hold up; it might even last seven years like my GTX 980 did.

> It was a Vista thing that forced Nvidia to make last-minute revisions to key parts of the API, which resulted in a nightmare.
>
> Between the final Vista beta release and the retail release, Microsoft decided to close off parts of the kernel. Yeah, that was more secure, but it meant things that worked on the very last beta revision were completely incompatible with the retail release. Device manufacturers got nothing more than a quick "heads up" email a week before the launch date.
>
> Nvidia, Creative, HP, and just about everybody else were completely blindsided by it, and it required them to abandon large parts of their driver code. HP simply decided then and there, in retaliation, that much of its hardware was not going to get Vista drivers. Creative struggled. Nvidia got off relatively lucky, as they were completely rehashing their drivers at the time anyway, but yeah, there were growing pains with Vista. It's no coincidence that CUDA launched around 2007. Supposedly, CUDA was developed as a result of trying to keep up with all the API changes, so they created a framework that would let them adapt better. (I can't verify that; I was told it once many years ago and it just sort of stuck with me.)

I recall hearing something like this before, but for Microsoft to pull that kind of dick move literally a week before release? Wow, no wonder Vista had to fail so that Vista SE - I mean Windows 7 - could succeed! The drivers were more or less stable by the time Win7 was released.

That's the sort of rapid "Yeah, I broke it so your stuff no longer works; too bad, adapt or die" mentality I expect from that rival bitten-fruit company.
