MistaSparkul

2[H]4U · Joined Jul 5, 2012 · Messages: 3,479

AdoredTV is a fanboy.

In some games it's hard to tell whether ray tracing is doing anything, but look at examples like Spider-Man, Cyberpunk, Control, the Quake, Quake 2 and Doom RT ports, and Minecraft.

Hell, ray tracing in Minecraft is so effective. I always feel compelled to dig out bases on the coastline and build a glass wall in the water so light shines through the water and the glass.

Also, I was rebutting the claim that the RT gains are subpar. Going from Cyberpunk running like ass with compromised settings to completely maxed out with a 60 fps minimum is a decent accomplishment. That's around a 60 fps minimum and a ~70 fps average in the areas I tested with a 7950X on Psycho RT. Keep in mind Psycho RT ran horribly before. Ultra RT, which is what last-gen cards typically run, is over 100 fps.

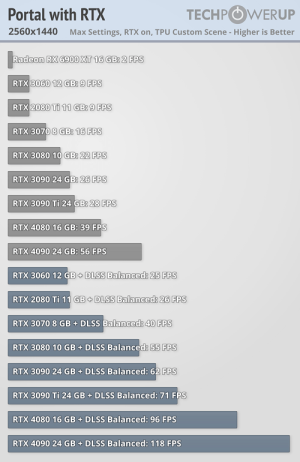

The 4090 is just that powerful, but that isn't the point here. Look, if we compare Ampere vs RDNA 2, let's say RDNA 2 (6800 XT) takes a massive 60% performance loss from turning on RT; Ampere (RTX 3080) will suffer a much smaller loss, say 35% instead of 60%. Ampere is much faster than RDNA 2 in RT, so it makes sense that it loses less performance, and we'll assume they are equal in raster.

So when the RTX 4080 12GB relaunches as the 4070 Ti or whatever, that card has 92 RT TFLOPS and the 3090 Ti has 78 RT TFLOPS. If they end up equal in raw raster performance, I'd expect the 4070 Ti to take a smaller performance hit than the 3090 Ti when turning on RT, because of the difference in RT TFLOPS (again, 92 vs 78). If the 4070 Ti performs exactly the same as the 3090 Ti in both raster AND RT, taking the same performance penalty, then what is the point of the extra RT TFLOPS?
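Here's a back-of-the-envelope sketch of that expectation. It assumes, purely for illustration, that frame time splits into a raster part plus an RT part, and that the RT part scales inversely with RT TFLOPS. The function names, the 100 fps raster baseline, and borrowing the illustrative 35% Ampere hit for the 3090 Ti are all hypothetical; real games won't scale this cleanly.

```python
# Hypothetical frame-time model (an assumption, not measured data):
# frame time with RT on = raster frame time + RT work / RT throughput.


def rt_on_fps(raster_fps: float, rt_hit: float) -> float:
    """FPS after enabling RT, given the fractional performance loss (0.35 = 35%)."""
    return raster_fps * (1.0 - rt_hit)


def scaled_rt_hit(base_hit: float, base_rt_tflops: float, new_rt_tflops: float) -> float:
    """Estimate the RT hit for a card with the same raster speed but different
    RT TFLOPS, assuming the RT share of frame time scales as 1 / RT TFLOPS."""
    # A hit h means frame time grows by 1 / (1 - h), so the extra RT time
    # (relative to the raster frame time) is h / (1 - h).
    extra = base_hit / (1.0 - base_hit)
    # Scale that extra RT time by the RT throughput ratio.
    extra_new = extra * base_rt_tflops / new_rt_tflops
    # Convert the new extra time back into a fractional fps loss.
    return extra_new / (1.0 + extra_new)


if __name__ == "__main__":
    # Illustrative numbers from the post: 6800 XT loses 60%, RTX 3080 loses 35%.
    print(rt_on_fps(100, 0.60), rt_on_fps(100, 0.35))
    # Hypothetical: 3090 Ti loses 35%; 4070 Ti has equal raster but 92 vs 78 RT TFLOPS.
    hit_4070ti = scaled_rt_hit(0.35, base_rt_tflops=78, new_rt_tflops=92)
    print(f"{hit_4070ti:.1%}")  # prints 31.3%
```

Under this toy model the extra RT TFLOPS would shave the hit from 35% to roughly 31%, which is the kind of gap the post is asking about; a result showing identical penalties would suggest RT TFLOPS aren't the bottleneck.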
