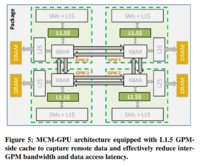

Packing more transistors into a monolithic GPU won't be feasible for much longer, and a new white paper from NVIDIA and university researchers suggests that the company may look to multi-chip module (MCM) designs to get around the limits of Moore’s Law. Proposed is a strength-in-numbers approach: multiple GPU modules would be connected using advanced, high-speed input/output protocols to communicate efficiently with each other, which would allow for less complex (and presumably cheaper) GPU modules compared to a monolithic design.

Without either switching to a multi-chip module design or coming up with an alternative solution, Nvidia warns that the performance curve of single monolithic GPUs as currently constructed will ultimately plateau. Beyond the technical challenge of cramming more transistors into smaller spaces, there is also the cost to consider, both in terms of technical research and reduced die yields. Whether or not an MCM design is ultimately the answer, Nvidia thinks it is at least worth exploring. One thing that Nvidia mentions in its paper is that it's difficult to scale GPU workloads on multi-GPU systems, even if they scale well on a single GPU.

