kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

If that's how you want to view it, then sure.

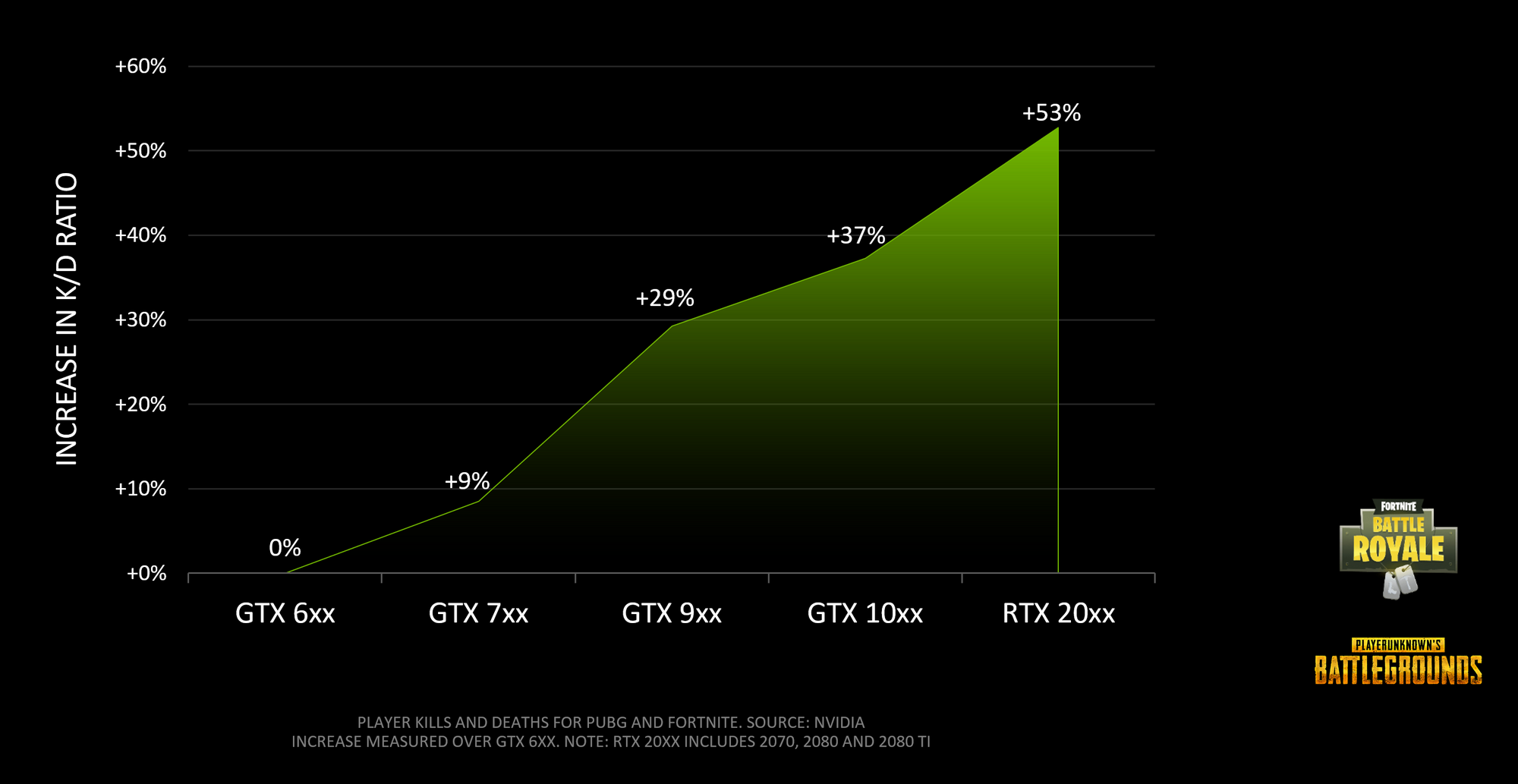

But remember: the experiment asked "does higher FPS improve gameplay?", not "what is the best way to improve gameplay?"

It's like a university releasing a study on the efficacy of a new drug meant to reduce heart attacks. Should they be expected to run a separate battery of tests comparing its efficacy against every other possible treatment? Absolutely not. That makes no sense. Nothing would get done.

You need to isolate a single variable and test it against a control group.

I don't know what you're arguing against. I agree with you that the only variable they tested was higher FPS. I'm just questioning Nvidia's motivation for telling us that higher FPS makes you a better player. It's like an oil company funding an anti-climate-change study, or a liberal think tank funding a pro-climate-change study. There might be elements of truth in there, but you'll always question the findings because of where the funding comes from.
