Blade-Runner

Supreme [H]ardness

- Joined: Feb 25, 2013
- Messages: 4,356

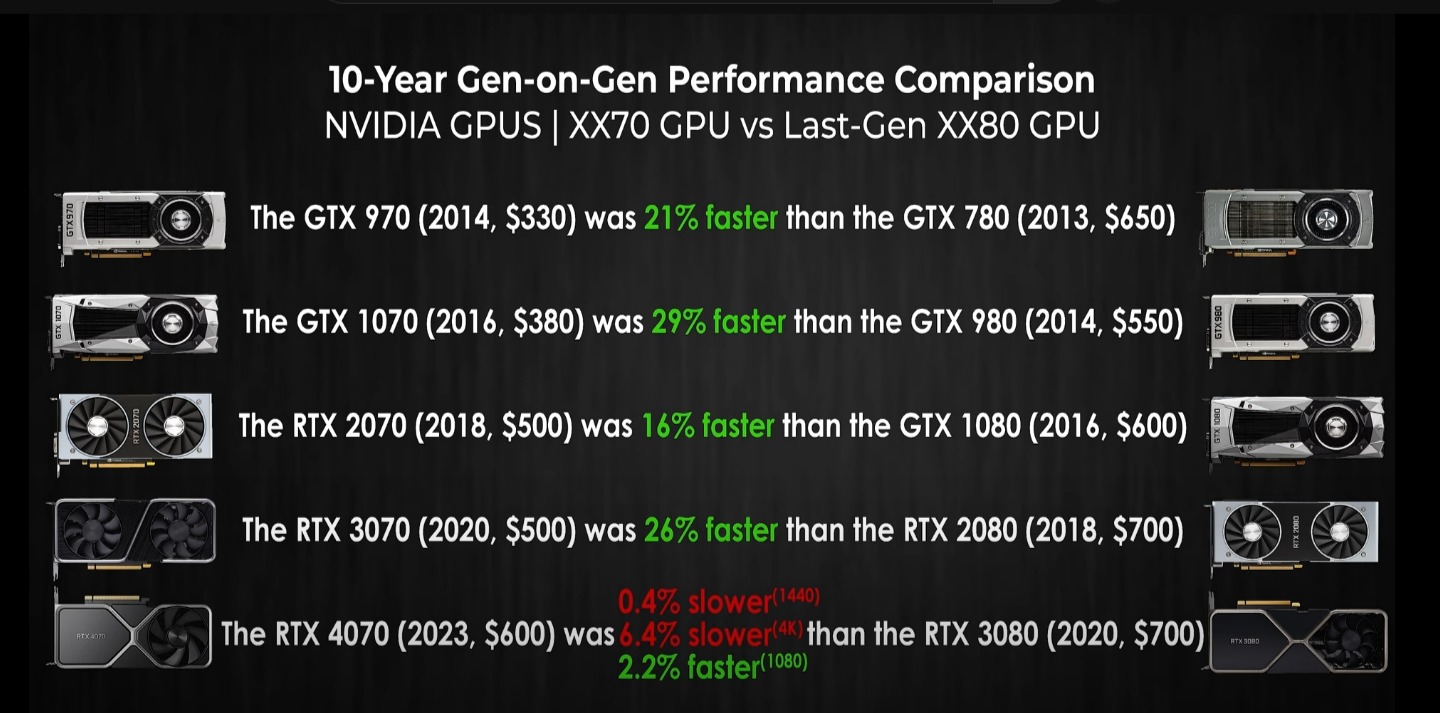

Canada Computers is already giving away a RAM kit with purchase, and I have already seen some models on sale locally, all for a card that just launched.

I have also now come across one local seller giving away a Samsung 970 Evo Plus 1TB M.2 SSD with any Gigabyte RTX 40 GPU... the desperation is becoming palpable.
