Brackle
Old Timer · Joined Jun 19, 2003 · Messages: 8,566

Agreed

Wait, before people start coming in claiming Hardware Unboxed is biased toward AMD:

DLSS > FSR and not even close

Agreed

The 4070 is barely on par with a 3080. It only really edges it out at 1080p, or with RT on at 1080p/1440p (or with DLSS 3.0).

This is a meh for me.

Yeah, my thought too. Idk, it just doesn't feel good. The 3070 made a big splash by being "2080 Ti performance, but for $500!"

Now you get not-quite-3080 performance for... $600. Oh, but you get DLSS 3. I guess that matters if you're going to make a ton of use of it?

Funny you mention bias: after I watched LTT's review of the 4070 this morning, I got the impression Linus and his writers wrote a script to purposely cast Nvidia in a good light. I was expecting them to bash the product like everyone else, but to my surprise they didn't. Then I recalled them bashing the 4090 launch, so I went back and rewatched that review. My memory must be flawed, because lo and behold, they cast that one in a good light too.

What about the folks getting so mad that others are just buying an Nvidia 4070?

To them, I say:

(image attachment)

When you add Reflex in there, the latency issue is not bad, and paired with a G-Sync-compatible display it feels even better. Updates to DLSS 3 since launch have also significantly improved visuals and greatly reduced artifacts. At launch there were a lot of rushed implementations that gave weird results, but updates have greatly improved it since then.

DLSS3 is not a good selling point. It looks awful and it adds latency. It is inferior to DLSS2, which doesn't generate frames.

DLSS3 is a joke.

I needed a new work machine, and the Avigilon software requires an Nvidia GPU if I want facial or license plate recognition, so... given the cost of a 4080 compared to an equivalent RTX A4000, it was a no-brainer, right? So yeah, my work desktop is a better gaming rig than my actual gaming rig, and I'm sort of mad about that, so I had to give it a try, and yeah, it was pretty decent. My monitor being G-Sync compatible was a pure fluke; I hadn't paid attention to that when I ordered it, because Dell was just giving me a bulk deal on a series of monitors they were looking to offload.

Latency is so subjective that no one should ever take anyone's word for it as gospel. If a lot of people give it a thumbs up, check it out, but it's always subjective, and the more sensitive you are, the more you should expect the worst.

That said, are you speaking from hands-on experience or from things you read online?

DLSS3 is, as of now, the most useless frame-rate improvement tech yet, making sense only when there is already ample underlying performance.

Yeah, you do need at least 60 fps for it (or FSR 3) to be viable. I suspect it exists mainly for better compatibility with 4K 120 Hz (I don't have one, so I can't test it), but from what I have read, if you use DLSS 3 and frame-lock yourself to 120 FPS, you can turn on more eye candy without dealing with tearing or V-Sync issues on 4K TVs when you use them as your display. Supposedly the nicer, newer displays like the LG C2 don't have this issue because they support some sort of G-Sync-compatible VRR, but for the more abundant TCL panels all over the place that don't, it is a big help.

That's why I said it feels better. To me it's not bad, but I am not a twitch gamer, and most FPS titles make me want to puke, so I get it. I've only used DLSS 3 in Darktide, so I can only say that in that one instance, any latency the frame generation did induce wasn't really distinguishable from the latency of my connection to their servers.

I tend to agree on "future-proofing," but I can see a 12 GB card making sense. If you're a peasant like I am, maybe all you really want is 1080p gaming, and 12 GB does that with plenty to spare. Even at 1440p it's not so bad, but I'd be concerned about it a year from now.

lol, I don't live in the US. But fair. It's still a terrible idea to buy a 12 GB card in 2023.

Well tbf, Nvidia kind of went out of their way to make the 4090 the only card that really stands out this generation.

Everything else has been kind of...meh to not good.

The technical issues don't exist on cards with a proper amount of VRAM.

For what both companies are asking for mid-range cards these days, I want to know it's actually going to be able to play a game released NOW. I don't care how well they play four-year-old games like AC Odyssey; my 5700 XT can handle that game. I want to know how a new GPU handles the games my three-year-old cards are just now starting to stumble on. It's very easy to find multiple newer games that push 15 GB in use at 1080p. I'd want to see those games reviewed before I would consider pulling the trigger on a 12 GB card (even if they have to add a bunch of asterisks and list version numbers with the results).

Easy answer: esports gamers with high-refresh-rate monitors want the highest frame rates possible at 1080p. That calls for the fastest CPU and GPU.

If the 4080 were like a $900 card (and actually sold for $900), I'd buy one.

I also don't get why they still benchmark games that get like 200 fps.

As for the 4070 itself... is it overpriced? Oh hell yes. It wouldn't have cost much more to equip it with 16 GB either.

That is sort of the problem, though. 12 GB is just barely enough for 1080p high settings in newer games, and 8 GB for sure isn't good enough anymore. I posted the Hardware Unboxed video from the other day detailing how 8 GB 3070s are shitting the bed in multiple newer titles.

Another garbage-tier release by Nvidia. It's fascinating that all of their releases are good cards; the pricing and naming conventions just suck. This would've been great as the 4060 Ti at $450. Both companies seem hell-bent on maximizing pricing, and I'm expecting the 7800 XT to slot in right between this and the 4070 Ti, priced to match.

For people who have the case and PSU for a 6950 XT (the two are almost different tiers of card in that regard, so for many the direct comparison is not that useful), the comparison is still relevant for some, given how close the prices are at the moment:

In a sense, it is impressive how closely a card with a roughly 295 mm² die, a 192-bit bus, and about 190 W can keep up with a card with a roughly 520 mm² die, a 256-bit bus, and about 350 W that was released less than a year ago at $1,100 MSRP. It would just take a competitive AMD 7700 offering to force Nvidia to pass more of those impressive savings on to us.
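Taking the rough figures quoted above at face value (approximate die area, memory bus width, and board power; these are ballpark numbers from the post, not verified specifications), the smaller card works with a little over half the die area and power of the larger one, and three quarters of its bus width:

$$
\frac{295\ \text{mm}^2}{520\ \text{mm}^2} \approx 0.57, \qquad \frac{192\ \text{bit}}{256\ \text{bit}} = 0.75, \qquad \frac{190\ \text{W}}{350\ \text{W}} \approx 0.54
$$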

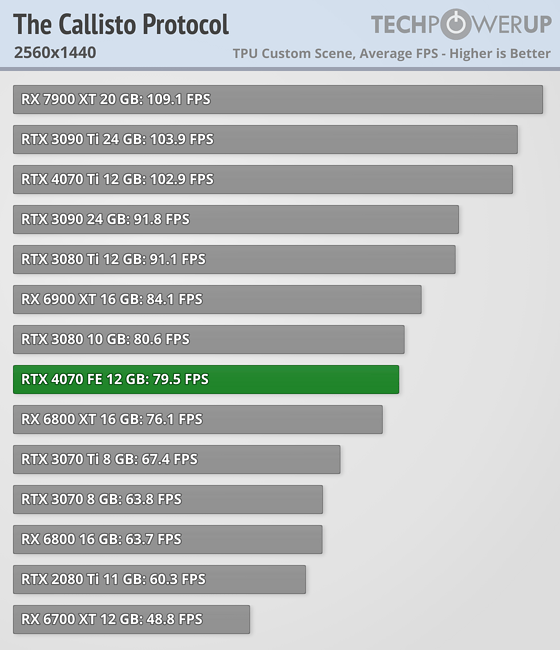

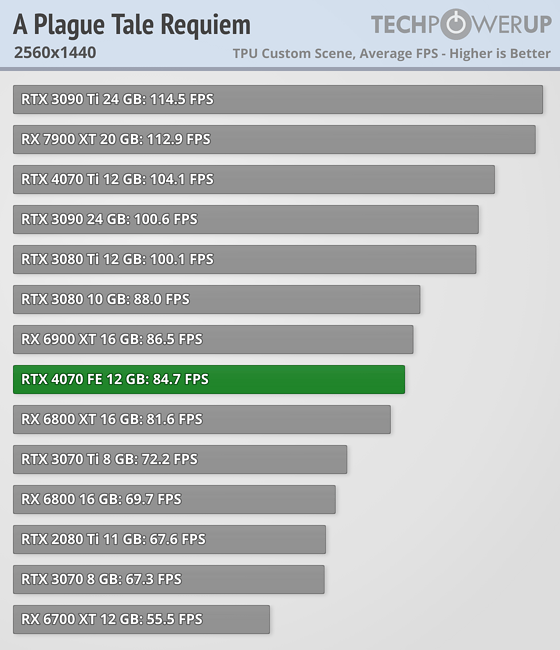

That was very obvious to me as well. When I saw Control I was like, "Really?"

I suspect (and maybe I'm just an old cynic) that a lot of these reviewers get a list of games approved for review. To be kind, perhaps they get fed lines like "X developer hasn't optimized that title yet" or some other BS. Right now, though, I am finding it very interesting that not a single big-outlet review of the 4070 touches games like Hogwarts, Callisto, Resident Evil, or Plague Tale... I swear there was a time when reviewers were [H] and actually attempted to run the latest and greatest choke-your-hardware software when doing reviews (even of the mid-range stuff).

Well... for the Founders Edition. The AIB ones definitely push the size up to "Super."

Personally, I'm just happy to see a card designed to "fit" your existing case.

Sometimes TechPowerUp can feel like the main reference, and they did cover some of those:

Uhhh... what? I'm actively playing Hogwarts Legacy right now and have gone through each graphics configuration (native, DLSS, DLSS + FG, etc.) to see how much VRAM is used. It never goes above 11 GB at full Ultra with RT enabled.

Hogwarts Legacy, The Callisto Protocol, The Last of Us Part I, the new Resident Evil (which Hardware Unboxed just showed an 8 GB 3070 crashing in at 1080p), the new Plague Tale game. No doubt there are more, and many more on the way. Every one of those will use well over 12 GB of VRAM at 1080p ultra and 1440p high.

I would not go as far as to call shenanigans on all the major reviewers today for not including games like Hogwarts, which is one of the most popular games going at the moment... but I do have to wonder why no one has covered any of this year's big eye-candy titles. I seem to remember a time when reviewers didn't ONLY look at games two or more years old in a review of a brand-new GPU. Sure, perhaps they'd add an asterisk saying "hey, we used patch X.X"... but to ignore all games released in the last two years seems odd to me.

Agreed

How much VRAM do you have available? I also didn't say you would run out of VRAM with 12 GB... I said 8 GB was already shown to run out of VRAM in that game. You just said yourself you're pushing 11 GB. That means all those 8 GB "ray tracing for future-proofing" purchases from the last product cycle WOULD run out of VRAM, right? How many people grabbed 3060s and 3070s based on the "RT is the future" line (sure, AMD is faster at raster, but Nvidia has RT)? The 8 GB 3070s that people bought because they would supposedly do RT better in the future than the 16 GB AMD options are crashing and having texture pop-in issues in games like Potter. Meanwhile, the AMD competition, which may have weaker RT hardware, can keep it fed because it doesn't run out of VRAM, and owners are actually reporting much better, even playable, RT in such games.

This is actually wrong. 4K, 6800 XT, no FSR: it runs great at 48-50+ fps with zero stutters.

The stuttering issues were present on all GPUs last I checked.