but isn't the 6500 XT the worst GPU of all time?

Maybe but one thing going for it is that it isn't $399. Now or at its release.

Dear Nvidia, Price matters. Sincerely yours, The World

Nvidia: Dear cjcox; GPUs are selling, people have spoken with their wallets. Perhaps you should write to The World and hash it out.

All the reports I've seen are that the 4060 Ti cards aren't selling.

Nvidia's GPUs are selling so well that sales actually decreased 38% year on year.

Prime example of not being able to see the forest for the trees.

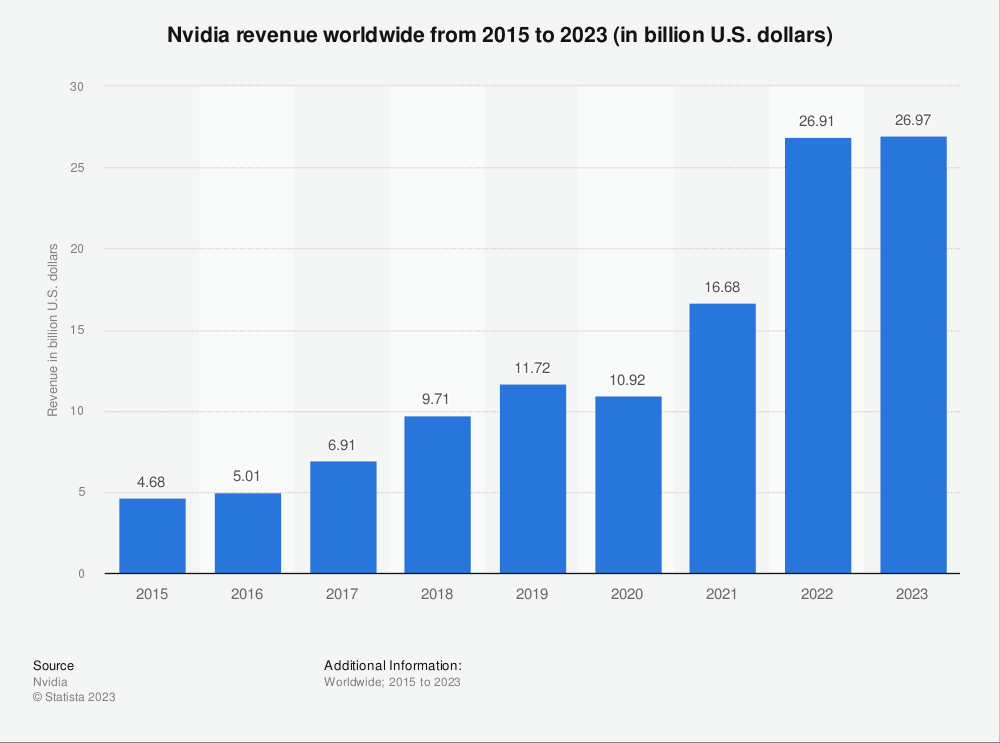

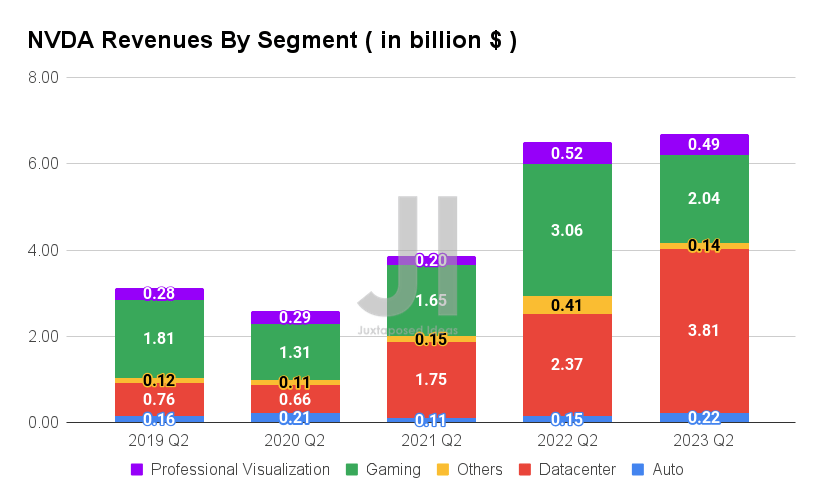

Here, let me enlighten you both since you seem to not understand that Nvidia as a company stacks bills, regardless of what you believe about them and/or their consumer GPU business.

Well look at that, their revenue is still looking good. Their gaming segment took a hit but their data center gobbled that percentage right up. Now add to that, they have already decreased production of the consumer GPUs to feed even more chips into their data center; and you tell me what incentive does Nvidia have to reduce the price of their GPUs. That's right, no incentive at all.

All of which has stuff all to do with the premise of your insinuation that gaming GPUs are still selling well at inflated prices, i.e. they are not; people are speaking with their wallets by NOT BUYING them.

Moreover, overall revenue is down 13% year on year. Nvidia printing money from its other divisions is allowing it to offset substantial decreases in gaming GPU sales. How long Nvidia can keep this up is a matter of speculation, but there are recent examples where complacent industry leaders thought they need not worry about competitors catching up *cough* Intel *cough*.

And he showed exactly why Nvidia couldn't give a rat's ass if sales are low on the consumer side. There's no reason for them to reduce prices when they're able to offset the lower sales to consumers with increased sales to the enterprise market. Consumers will only vote with their wallet for so long before they just say fuck it and pay the higher price, and all marketing teams worth a damn know that. The only ones being hurt are the AIBs, which have absolutely no say in the matter.

New Hardware Unboxed out too

Epic review.

Not anymore. The "4060 Ti" wins something, I guess.

This is a thread on the 4060 Ti and you were responding to someone pointing out that it is overpriced. I responded because your comment was a non sequitur.

No, I responded to a facetious post about the price with a facetious post about the company as a whole not having any incentive to lower the price.

It's almost as if you didn't even watch the video, as they clearly state that the 6500 XT is still the worst GPU launch in history. The 4060 Ti is just a bad price, but the product itself is fine.

How important the gaming market is to Nvidia is a completely separate discussion from whether the 4060 Ti is overpriced in that market. You responded to a post referencing the price of the 4060 Ti, saying that the market had spoken and GPUs were selling well, and then tried to back it up with a chart that actually shows the latest gaming revenue down, though it wouldn't include the card in question anyway.

What I can only imagine is that my facetious post must have hurt your sensibilities. Why else would you reply to my facetious post as if it were serious?

Exactly.

I responded because you were conflating two separate subjects and continue to do so.

The 4060 Ti is a fine GPU at a $299 MSRP; at $399 it's trash to be avoided as long as any alternative exists at all.

You won't be buying a "fine" GPU anymore.

Then what do you consider a good GPU in the current market?

You know what, sure. Whatever you want to believe. I'm not going to debate both facetious posts with someone that can't even decipher the word, let alone the content of a waggish post.

My point is your mythical $299 4060 Ti isn't going to happen. These prices are here to stay.

Then at least make the 4060 Ti worth $399.

No doubt.

Radeon 6700 XT matches the 4060 Ti in 1440p raster while clearly beating it on price (and is more future proof due to 12GB VRAM)

https://www.digitaltrends.com/computing/nvidia-rtx-4060-ti-vs-amd-rx-6700-xt/

AMD and Nvidia do very different things with their cache, so the 4GB difference here is pretty meaningless in the grand scheme. Neither card is something that I would want to be using 2 years from now at 1440p. At 1080p either will be fine for their usable lifetime, but at 1440p they are going to encounter limitations very quickly with anything based on a UE5 engine or equivalent.

One of the biggest issues, regardless of whether it's AMD or Nvidia, is that when you become VRAM constrained the fix is decreasing LoD and texture quality on the fly to prevent the game from becoming a stuttering mess. While this "fixes" the issue, from a benchmarking standpoint it's a nightmare and to some extent is creating results that aren't real. It's a cost-saving measure across the board that at the end of the day is allowing GPU manufacturers to slow advancements in lieu of providing an increase in fidelity.

Many people think that DLSS/FSR are "now I can turn on ray tracing" toggles when they pay much larger dividends in reducing strain on the memory subsystem more than anything.

We are currently in the era of GPU manufacturers monetizing the areas of a frame that your brain can't detect and upselling it to you as a "feature" instead of concrete improvements in raster and texture quality.
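To make the "decreasing LoD and texture quality on the fly" point concrete, here is a rough sketch of how dropping the largest mip levels shrinks texture memory until it fits a VRAM budget. The function names, the uncompressed 4-bytes-per-texel format, and the single global mip bias are all assumptions for illustration, not how any particular engine or driver actually does it.

```python
def texture_bytes(width, height, bytes_per_texel=4, skip_mips=0):
    """Bytes used by a texture's mip chain, skipping the `skip_mips` largest levels."""
    total = 0
    w, h = width, height
    level = 0
    while True:
        if level >= skip_mips:
            total += w * h * bytes_per_texel
        if w == 1 and h == 1:
            break
        # Each mip level halves both dimensions (never below 1 texel).
        w, h = max(1, w // 2), max(1, h // 2)
        level += 1
    return total


def fit_to_budget(textures, budget_bytes, max_skip=4):
    """Raise one global mip bias (hypothetical policy) until everything fits.

    `textures` is a list of (width, height) tuples.
    Returns (mips_skipped, bytes_used); if nothing fits, returns the result
    at `max_skip`, which may still exceed the budget.
    """
    for skip in range(max_skip + 1):
        used = sum(texture_bytes(w, h, skip_mips=skip) for w, h in textures)
        if used <= budget_bytes:
            return skip, used
    return max_skip, used
```

Each skipped level cuts that texture's footprint to roughly a quarter, which is why the game keeps running smoothly while the frame it renders is no longer the one the settings menu claims.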

I see it more as a combination of 3 things:

1. They can't improve the raster pipeline much more than they have without breaking backward compatibility.

2. Improvements in performance come as a result of cache and transistor increases which have hit a cost wall.

3. Investors are demanding more from Nvidia, AMD, and TSMC so we have to pay more to meet their demands.

It's really number 3 that is screwing us over but 1 and 2 are huge technical hurdles that will have to be addressed at some point sooner than not.

The big trouble is multi-GPU chips. The technology exists to make them, and it is being done, but the software needs to be tailored for them. It's much like old single-threaded CPU applications: run them on a multi-core CPU and they wouldn't scale, or worse, they were unstable and performed worse. Multi-GPU is the same way. Software designed to run on it runs great, but anything that isn't does worse.

If AMD or Nvidia put out a multi-GPU card today and told us that for UE 5.5* or any upcoming game on a multi-GPU engine we could see upwards of a 150% performance increase over their existing flagship, but reviews then showed it at 50-75% of the existing flagship's performance in existing titles that don't use a multi-GPU engine, I doubt people would be forming lines to buy it at the price either party would need to sell it at.

So AMD and Nvidia need to either develop a consumer-friendly front-end IO chip that can present the downstream GPU cores as a single entity or we need game developers to start developing for multi-GPU which is something that both DX12 and Vulkan support.

I suspect that sometime soon we may start seeing control cards like a small 2-slot NVSwitch or something to facilitate the front-facing IO for consumer cards, but who knows?

* I picked the number 5.5 out of my ass, I have no clue if a 5.5 exists and I certainly don't know if it natively supports multi-GPU, or if that is a thing they are even working on.

Regarding #1, is that what Intel did with Alchemist? How far back would the break be, and would a wrapper like DXVK work for compatibility?

Intel Alchemist only does DX12 and Vulkan natively; DX9 through DX11 and OpenGL are all handled via wrappers, which they are developing in-house.

Considering the RTX 3060 has better lows on some games than the 4060 Ti due to having more VRAM, there's really no difference here. The problem with recommending a graphics card is that nothing is without issues.

Let's say someone asks me what graphics card to buy that's around $400 or less. Nvidia has no downsides hardware wise, except price. As much as people think 8GB of VRAM is OK, it isn't OK. So I can't recommend a 4060 Ti, 3070, 3070 Ti, or 3060 Ti. I could recommend the RTX 3060 12GB, which can actually be had for under $300, but other than that with Nvidia you need to go to the RTX 4070 or RTX 3080, which are both beyond $400.

AMD has faster rasterization but slower Ray-Tracing performance. Does Ray-Tracing performance matter, especially at the $400 price point? Because if it doesn't, then the RX 6700 XT is pretty good for $340. The RX 6700 with 10GB of VRAM can be found for under $300. The 6600, 6600 XT, and 7600 are not worth the asking amount.

Intel's A770 problem is that they're not ready with drivers. It'll run games, but some games may have graphic glitches, and older titles that aren't DX12 or Vulkan will not run as well compared to AMD and Nvidia. Interestingly, Intel has very good Ray-Tracing performance. If you buy an Intel GPU you're doing so as a future investment, but at $340 for the A770 you can find faster cards with equal amounts of VRAM. Also, Intel has working AV1 encoding, which is a plus.

None of these graphics cards I would say are future proof. The RTX 3060 is slow even with 12GB of VRAM. The RX 6700 XT is fast but not at Ray-Tracing. The Intel A770 has a lot of potential, but potential is wasted energy. Take your chances with Nvidia's DLSS future with 8GB of VRAM or AMD's fine wine drivers that may some day increase their Ray-Tracing performance. You could also take your chance with Intel and hope that one day the god rays from fired Raja Koduri will fix Intel's drivers. There's no graphics card here that'll last 2 years without running DLSS or FSR 24/7.

I snagged a cheap 6750 XT for my secondary desktop and have it paired with a decent FreeSync display, and while it's a 1440p screen I'd probably still enable FSR there for it. Rather have closer to 165 than not. I have a 3080 Ti 12GB that I paid through the nose for, so it's my main for the foreseeable future, but I have a hard time recommending anything from Nvidia as a sub-$600 CAD card right now.

1. They can't improve the raster pipeline much more than they have without breaking backward compatibility.

We have quite a bit to go before that happens.

2. Improvements in performance come as a result of cache and transistor increases which have hit a cost wall.

True.

3. Investors are demanding more from Nvidia, AMD, and TSMC so we have to pay more to meet their demands.

Also problematic.

It's really number 3 that is screwing us over but 1 and 2 are huge technical hurdles that will have to be addressed at some point sooner than not.

Chiplets solve this momentarily until we get to true 3D manufacturing. Multi-GPU processing is currently being manufactured/deployed in the enterprise sector by both AMD and Intel. So there's no problem there. Honestly we are in a downward period until full 3D manufacturing is possible. However, this does not excuse them from blame for not pushing the market further than it has. Intel in particular is probably the furthest along and it wouldn't surprise me if Nvidia takes advantage of it in that foundry deal they just made.

Now, on to the disappointing news: the RTX 4060 Ti.

We don’t understand what kind of decisions NVIDIA took when deciding the Ada Lovelace GeForce product stack, but it has been nothing but mistakes. The RTX 4060 Ti 8GB with only a 128-bit wide memory bus and GDDR6 VRAM is a serious downgrade for emulation when compared to its predecessor, the 256-bit wide equipped RTX 3060 Ti. You will be getting slower performance in Switch emulation if you get the newer product. We have no choice but to advise users to stick to Ampere products if possible, or aim higher in the product stack if you have to get a 4000 series card for some reason (DLSS3 or AV1 encoding), which is clearly what NVIDIA is aiming for.
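As a rough back-of-the-envelope check on the bus-width point, theoretical peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The sketch below assumes 14 Gbps GDDR6 on the RTX 3060 Ti and 18 Gbps GDDR6 on the RTX 4060 Ti; those data rates come from public spec sheets rather than from the text above, so treat them as assumptions.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory bus width in bits (e.g. 128 or 256)
    data_rate_gbps: per-pin data rate in Gbit/s (assumed values below)
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed per-pin data rates: 14 Gbps (3060 Ti) and 18 Gbps (4060 Ti).
rtx_3060_ti = peak_bandwidth_gb_s(256, 14.0)
rtx_4060_ti = peak_bandwidth_gb_s(128, 18.0)

# Fraction of the predecessor's raw bandwidth the newer card retains.
ratio = rtx_4060_ti / rtx_3060_ti
```

Under those assumptions the newer card has roughly two thirds of its predecessor's raw bandwidth, before any help from the larger cache.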

The argument in favour of Ada is the increased cache size, which RDNA2 confirmed in the past helps with performance substantially, but it also has a silent warning no review mentions: if you saturate the cache, you’re left with the performance of a 128-bit wide card, and it’s very easy to saturate the cache when using the resolution scaler — just 2X is enough to tank performance.
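One simplified way to picture the cache argument: if a fraction of memory requests is served from the on-die cache, only the misses reach VRAM, so the request rate the card can sustain is the raw VRAM bandwidth divided by the miss rate. The sketch below is a toy model under that assumption. It ignores cache bandwidth limits and latency, the hit rates are hypothetical, and the 288 GB/s raw figure is an assumed value from public spec sheets for a 128-bit bus with 18 Gbps GDDR6.

```python
def effective_bandwidth_gb_s(vram_gb_s: float, hit_rate: float) -> float:
    """Toy model: sustainable request bandwidth when only cache misses hit VRAM.

    vram_gb_s: raw VRAM bandwidth in GB/s
    hit_rate:  fraction of requests served by the cache, in [0, 1)
    """
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    return vram_gb_s / (1.0 - hit_rate)


RAW_GB_S = 288.0  # assumed raw bandwidth: 128-bit bus, 18 Gbps GDDR6

# Hypothetical hit rates: a working set that fits the cache well,
# versus one (e.g. a higher resolution scale) that overflows it.
cache_friendly = effective_bandwidth_gb_s(RAW_GB_S, 0.5)
cache_saturated = effective_bandwidth_gb_s(RAW_GB_S, 0.0)
```

As the hit rate falls toward zero, the effective figure collapses back to the raw 128-bit number, which is the silent warning described above.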

Spending 400 USD on a card that has terrible performance outside of 1X scaling is, in our opinion, a terrible investment, and should be avoided entirely. We hope the 16GB version at least comes equipped with GDDR6X VRAM, which would increase the available bandwidth and provide an actual improvement in performance for this kind of workload.