
lostin3d

[H]ard|Gawd

- Joined

- Oct 13, 2016

- Messages

- 2,043

I admit that I haven't followed all of NV's cards over the last 5-10 years but I have checked out most of them. It really seems since the 10xx cards came out that NV simply mix/matches parts now to throw a card out there. If these specs are true then it's a pretty depressing looking card for what will likely be pricey. Come on AMD, now NV is getting so lazy it's as if they're not even trying anymore. I really do wonder if they've got some warehouses somewhere whose only purpose is to somehow disassemble unsold inventory to re-purpose into a 'new' model that has one or two new features.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,074

You down with GPP (Yeah you know me) [Repeat: x3]

Who's down with GPP (Every last homie)

You down with GPP (Yeah you know me) [Repeat: x3]

Who's down with GPP (All the nerdies)


If these specs are true then it's a pretty depressing looking card

How do they know what the performance is going to be if they don't even know anything about the architecture changes yet? Or is Turing just a modified Volta?

dgingeri

2[H]4U

- Joined

- Dec 5, 2004

- Messages

- 2,830

I'm running a 3440x1440 34" for my main and a 2560x1440 for my secondary, and I can run WoW or Rift on the main and STO, Netflix, or a VM on the secondary at the same time without any issues. That's a heck of a lot of screen real estate, and a lot of pixels. It may not be as much as a 4K screen, but it's still not enough to make my 980 Ti breathe heavy.

In 1080p and 1440p. 4K? No way. My 980 Ti in sig is screaming UPGRADE ME! Bouncing between 27-34 fps in AC: Origins is killing me. And on a 100-inch screen, 1080p vs. 4K with HDR is literally night and day. From the numbers, the 1080 Ti looks like a 40-50% increase over the 980 Ti in most games I play. So add in another 20%, and yeah, this card is really for us 980 Ti users looking for the next level and needing it badly. I would bet nVidia uses the 980 or 980 Ti to show performance gains on this 1180.
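As a rough check on the arithmetic in this post: the 34" main display drives about 60% of the pixels of a 4K panel, and stacking a further 20% on top of a 40-50% gain compounds (multiplies) to roughly 68-80% over the 980 Ti. A minimal Python sketch, assuming "4K" means 3840x2160 and that the extra 20% is measured on top of the 1080 Ti:

```python
# Pixel counts and compounded uplift for the figures in the post above.
# Assumption: "4K" means 3840x2160 (UHD).

def pixels(width: int, height: int) -> int:
    """Pixels a display of the given resolution has to draw each frame."""
    return width * height


def compound(*gains: float) -> float:
    """Chain relative gains: compound(0.45, 0.20) means +45% then +20%."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total - 1.0


main = pixels(3440, 1440)       # 34" ultrawide main display
secondary = pixels(2560, 1440)  # 1440p secondary display
uhd = pixels(3840, 2160)        # assumed 4K UHD panel

if __name__ == "__main__":
    print(f"main: {main:,} px, secondary: {secondary:,} px, 4K: {uhd:,} px")
    print(f"main display vs. 4K: {main / uhd:.0%} of the pixels")
    # 40-50% (980 Ti -> 1080 Ti), then a further 20% from the rumored card
    for first in (0.40, 0.50):
        print(f"+{first:.0%} then +20% = +{compound(first, 0.20):.0%} over a 980 Ti")
```

Gains multiply rather than add, which is why +50% followed by +20% works out to +80% rather than +70%.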

Seventyfive

[H]ard|Gawd

- Joined

- Jul 14, 2004

- Messages

- 1,347

clickbaittech

ROFL so true

ZLoth

Gawd

- Joined

- Apr 13, 2010

- Messages

- 854

I currently own a 980 card (purchased in November, 2014) and a 2K GSync Monitor (purchased in December, 2016). The 980 card was a definite improvement over the 460 card. I had planned on getting a 1080Ti last December, but decided against it. Now, I'm waiting on the 1180Ti coming out next year.

dgz

Supreme [H]ardness

- Joined

- Feb 15, 2010

- Messages

- 5,838

At least all those rumor mills will keep them cool.

d3athf1sh

[H]ard|Gawd

- Joined

- Dec 16, 2015

- Messages

- 1,232

Gaping Poop Producer?

Well, that's what anyone who deals with them will have.

I was thinking gargantuan penis pouch! Because it's a pretty big p**sy @ss move on their part!

bizzmeister

2[H]4U

- Joined

- Apr 26, 2010

- Messages

- 2,439

I’ll build a new PC when the 1180Ti hits stores.

Until then, this 4770k / 1070Ti / SSD @ 1440p will do just fine


Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

You down with GPP (Yeah you know me) [Repeat: x3]

Who's down with GPP (Every last homie)

You down with GPP (Yeah you know me) [Repeat: x3]

Who's down with GPP (All the nerdies)

Nope, still down with OPP

lucidrenegade

Limp Gawd

- Joined

- Nov 3, 2011

- Messages

- 388

With the Titan V, is it assumed there won't be a Titan flavor on the gaming side again, or at least in this next gen?

There probably will be. The Titan V was never meant to be a halo gaming card with that price tag. I suspect we'll see a Titan Xt (or whatever they call it) within 3-6 months of the 1180 launch.

lucidrenegade

Limp Gawd

- Joined

- Nov 3, 2011

- Messages

- 388

I'll buy once GPP is gone. For now I will buy used Nvidia GPUs on eBay just so Nvidia doesn't get my hard-earned money.

While I don't condone the strong-arm tactics, I also don't see why there's so much nerd rage over this. My understanding is that a vendor can't sell an AMD card with the same series name as an Nvidia one. So if Asus has ROG Nvidia cards, they can't have an AMD card with the ROG branding (hence the Arez brand). Do people really care that much about the name as opposed to the card itself?

lostin3d

[H]ard|Gawd

- Joined

- Oct 13, 2016

- Messages

- 2,043

How do they know what the performance is going to be if they don't even know anything about the architecture changes yet? Or is Turing just a modified Volta?

Sort of leading into my point. During the whole stretch of the 10xx cards we saw various iterations of GP10x and various RAM types. Even within the same models (1070s, 1050s) they eventually had variations in RAM type. So they could slap whatever name they want on this, but if these specs are true then it looks more like a mix/match of parts old and new. Tick and Tock in green colors.

Sad thing is that threads like these really are pure speculation. I remember exhaustively reading anything any site said about the 1080s for a year before they hit shelves, as I was eagerly awaiting them to replace my 970s. Thankfully I'm not really in that boat now. Bottom line is we don't really know the performance, but a 256-bit interface and 1.6-1.8 GHz clocks don't appear to be much different from the current 1080 or Ti, except coupled with the Ti's CUDA core count.
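To put the clocks-and-cores comparison in numbers, here is a sketch of peak FP32 throughput (cores × 2 FLOPs per fused multiply-add × clock). It assumes the rumored card keeps the 1080 Ti's 3584 CUDA cores and the same per-core throughput, which the rumor does not establish; under those assumptions it lands only about 1-14% above a stock 1080 Ti on paper:

```python
# Peak single-precision throughput = CUDA cores * 2 FLOPs (one FMA) * clock.
# Core counts are the published GTX 1080 (2560) and GTX 1080 Ti (3584) specs;
# the 1.6-1.8 GHz range is the rumored clock, not a confirmed figure.

def peak_tflops(cuda_cores: int, clock_ghz: float) -> float:
    """Theoretical FP32 TFLOPS; real games never sustain this peak."""
    return cuda_cores * 2 * clock_ghz / 1000.0


CARDS = {
    "GTX 1080 (1733 MHz boost)": (2560, 1.733),
    "GTX 1080 Ti (1582 MHz boost)": (3584, 1.582),
    "rumored card @ 1.6 GHz": (3584, 1.6),
    "rumored card @ 1.8 GHz": (3584, 1.8),
}

if __name__ == "__main__":
    for name, (cores, ghz) in CARDS.items():
        print(f"{name}: {peak_tflops(cores, ghz):.1f} TFLOPS")
```

Any architectural change in work done per clock would move these numbers, which is exactly the unknown the thread is arguing about.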

Not "skipping," just logically progressing. Why would they go 4-5-6-7-8-9-10-20?

I didn't say it was logical, nor did I say NV was skipping to the 2000 series.

I said I read an article saying that. One no doubt as factual and accurate as the one this thread is chewing on.

As to why someone would publish that, or why NV would in fact do that, the right person to ask is not me.

Aenra

Limp Gawd

- Joined

- Apr 15, 2017

- Messages

- 191

cageymaru

Was so very often i'd be reading a news bit, usual dosage of irony/sarcasm (which i do love) followed by a "thanks cageymaru!", that i actually thought you were a meme, lol

So you're an actual person. O.K.!

(i don't exactly follow contemporary.. trends? You'd be surprised how perplexing the internet can be at times)


cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,074

cageymaru

Was so very often i'd be reading a news bit, usual dosage of irony/sarcasm (which i do love) followed by a "thanks cageymaru!", that i actually thought you were a meme, lol

So you're an actual person. O.K.!

(i don't exactly follow contemporary.. trends? You'd be surprised how perplexing the internet can be at times)

I take pride in being the sarcastic one as I got it from my mother. You had to have some really thick skin around her! So that's pretty much the news that I like to find. Glad you enjoy it!

Now off to the shadows...

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,543

That's pretty much what I expected it to be; I argued quite a bit with Razor1 on that. Though it's just a rumor, so take it with a big grain of salt.

Dion

2[H]4U

- Joined

- Oct 2, 2004

- Messages

- 3,920

Sounds like it's pretty much a side-grade to the 1080Ti, idk, maybe around 10% on top like the 1080 was to the 980Ti? I should just be content and sit this one out... or go the used 1080Ti route.

It is a side-grade to a 1080 Ti. It doesn't compete with it; it replaces the 1080 and 1070s, and over those it won't be a side-grade.

5150Joker

Supreme [H]ardness

- Joined

- Aug 1, 2005

- Messages

- 4,568

A 1080 replacement that's significantly faster for the same MSRP? Should be a winner. Meanwhile AMD is busy making videos crying about GPP lol!!

Sounds like it's pretty much a side-grade to the 1080Ti, idk, maybe around 10% on top like the 1080 was to the 980Ti? I should just be content and sit this one out... or go the used 1080Ti route.

Wasn't the 1080 at least 20% faster than a factory-overclocked 980 Ti?

AlphaQup

Gawd

- Joined

- Oct 27, 2014

- Messages

- 845

It is a side grade to a 1080 ti.. It doesn't compete with it.. It replaces 1080 and 1070s. Which it won't be a side grade on.

Correct, which is why I went from a 970 to a 1080 in the first month of its availability last go-around; that was a huge jump up for me at 1440p/144Hz. If I were to go 1180 this time around from my current 1080, the improvement wouldn't be nearly as drastic as before.

Isn't that 1080 was like at least 20% faster than factory overclocked 980ti?

Looks like I stand corrected: https://www.hardocp.com/article/2016/05/17/nvidia_geforce_gtx_1080_founders_edition_review/14

~30% increase stock to stock, 980 Ti vs. 1080. Maybe it was the OC-to-OC comparisons people were making that made it slightly less appealing, with the 980 Ti having more headroom and closing the gap a bit?

Not sure, but thanks for the correction.
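The OC-to-OC guess can be made concrete: if the older card gains more from overclocking, the lead shrinks multiplicatively. A minimal sketch with made-up headroom numbers (10% for the 1080, 20% for the 980 Ti), which would cut a 30% stock lead to roughly 19%:

```python
# How OC headroom can shrink a stock-vs-stock lead.
# The ~30% stock gap comes from the linked review; the overclocking
# headroom figures below are hypothetical placeholders, not measurements.

def oc_lead(stock_lead: float, newer_oc: float, older_oc: float) -> float:
    """Lead of the newer card once both cards are overclocked (fraction)."""
    return (1 + stock_lead) * (1 + newer_oc) / (1 + older_oc) - 1


if __name__ == "__main__":
    # hypothetical: the 1080 gains 10% from overclocking, the 980 Ti gains 20%
    print(f"OC vs OC lead: {oc_lead(0.30, 0.10, 0.20):.1%}")
```

With equal headroom on both cards the stock lead is preserved; only a difference in headroom moves it.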

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 41,978

there was no 8 series

https://en.wikipedia.org/wiki/GeForce_800M_series

MacLeod

[H]F Junkie

- Joined

- Jul 28, 2009

- Messages

- 8,279

I can't wait to get 20 new cards in the mining rigs...

A 1080 replacement that's significantly faster for the same MSRP? Should be a winner. Meanwhile AMD is busy making videos crying about GPP lol!!

Sad but true. I've been holding off upgrading my GPU then got stuck when the mining craze hit. I can't wait for these cards to come out. My beloved 290x is finally starting to struggle and I'm having to turn a setting or two down on a couple of newer games. Prey was fine but had to tweak the AA in the new Wolfenstein and can't use Ultra settings on everything in Deus Ex:MD

it is interesting... on this.

I have 2x 8-pin connectors, and I think I have the small power supply.

I think I have the 425 W power supply. This link says there are two: 685 W and 425 W.

So I could upgrade the PSU as well...

http://www.dell.com/support/manuals...1a5aa6-1e70-44b9-b690-59507a3a9f31&lang=en-us

I don't think they'd give you 2x8 pin connectors on a 425W PSU, that makes me suspect you've got the 685. OTOH even the 425 should be enough to run a 150w card unless you've got the biggest Xeon, and maxed out your ram and HDD capacity.

You might need to unscrew it to check, but the wattage should be printed on the side of the PSU somewhere. The important number is what's available on the 12V rail not the headline number. (For modern designs these tend to be very close or the same since almost everything is 12v now.)
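The 12V-rail point is just P = V × I: the PSU label lists the rail's current in amps, and multiplying by 12 gives the watts actually available to the CPU and GPU. A sketch with hypothetical numbers (a 30 A rail and 180 W of other 12 V load), not figures from this Dell's label:

```python
# 12 V rail capacity from a PSU label, and headroom for a ~150 W card.
# The rail amperage and the non-GPU 12 V load are hypothetical placeholders;
# read the real amperage off the label on the PSU.

RAIL_VOLTS = 12.0


def rail_watts(amps: float, volts: float = RAIL_VOLTS) -> float:
    """Power a rail can deliver: P = V * I."""
    return volts * amps


def rail_headroom(amps: float, other_load_w: float, gpu_w: float) -> float:
    """Watts left on the 12 V rail after the rest of the system and the GPU."""
    return rail_watts(amps) - other_load_w - gpu_w


if __name__ == "__main__":
    # hypothetical: 30 A rail, 180 W of CPU/board/drive load on 12 V, 150 W card
    print(f"rail: {rail_watts(30):.0f} W, headroom: {rail_headroom(30, 180, 150):.0f} W")
```

A negative headroom means the rail, not the headline wattage, is the limit.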

TeleFragger

[H]ard|Gawd

- Joined

- Nov 10, 2005

- Messages

- 1,108

I don't think they'd give you 2x8 pin connectors on a 425W PSU, that makes me suspect you've got the 685. OTOH even the 425 should be enough to run a 150w card unless you've got the biggest Xeon, and maxed out your ram and HDD capacity.

You might need to unscrew it to check, but the wattage should be printed on the side of the PSU somewhere. The important number is what's available on the 12V rail not the headline number. (For modern designs these tend to be very close or the same since almost everything is 12v now.)

Gotcha, I'll check later.

I do know some of it.

I have:

Xeon E5-1620 V3

3x 500gb SSD

DVD Rom

4x8gb ddr4 dimms - hopefully add 4 more in a few months...

GTX 750 Ti

That's about it, so I'll still check the power supply.
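A back-of-envelope power budget for the parts listed above, treating the listed card as a GTX 750 Ti and swapping it for the ~150 W card discussed earlier. Every wattage here is an approximate assumption (rated TDP or typical peak draw), and the board/fans figure is a hypothetical round allowance, so treat the result as a rough, deliberately high estimate:

```python
# Back-of-envelope power budget for the parts listed above.
# All wattages are approximate assumptions (rated TDP or typical peak draw),
# not measurements; the board/fans allowance is a hypothetical round number.

PARTS_W = {
    "Xeon E5-1620 v3": 140,           # rated TDP
    "GTX 750 Ti": 60,                 # rated board power
    "3x 500GB SSD": 3 * 3,            # ~3 W each under load
    "4x 8GB DDR4": 4 * 3,             # ~3 W per DIMM
    "DVD-ROM": 20,                    # while spinning
    "board/fans/USB allowance": 50,   # hypothetical
}


def total_load(parts: dict[str, int]) -> int:
    """Sum of the estimated draws; a conservative (high) estimate."""
    return sum(parts.values())


def swap_gpu(parts: dict[str, int], old: str, new: str, new_w: int) -> dict[str, int]:
    """Return a copy of the parts list with one GPU replaced by another."""
    updated = {name: w for name, w in parts.items() if name != old}
    updated[new] = new_w
    return updated


if __name__ == "__main__":
    upgraded = swap_gpu(PARTS_W, "GTX 750 Ti", "150 W card", 150)
    for psu_w in (425, 685):
        print(f"{psu_w} W PSU: {psu_w - total_load(upgraded)} W spare after the upgrade")
```

Under these guesses the 425 W unit would be tight but workable, which matches the earlier advice; four more DIMMs would add only a few more watts each.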

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

GDDR6 and only a 256-bit memory interface and the same number of CUDA cores as the 1080 Ti?

Sure, it's likely much faster RAM, but a step back from the 352-bit interface of the 1080 Ti.

Sounds more like a future: "Woulda, Coulda, Shoulda"

Perhaps get out a calculator if you can't do the math in your head, because that is actually more bandwidth than the 1080 Ti.
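Whether a 256-bit GDDR6 bus really out-runs the 1080 Ti's 352-bit bus depends on the GDDR6 data rate, which nobody in the thread states. A small sketch that finds the break-even rate, assuming a nominal 11 Gbps for the 1080 Ti: the narrower bus needs a bit over 15.1 Gbps per pin, so hypothetical 16 Gbps parts would win and 14 Gbps parts would not.

```python
# Peak bandwidth (GB/s) = bus width in bytes * per-pin data rate (Gbps).
# 1080 Ti: 352-bit GDDR5X at a nominal 11 Gbps. The rumored card's GDDR6
# data rate is not given in the thread, so 14 and 16 Gbps are hypothetical.

def peak_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    return bus_bits / 8 * gbps_per_pin


def breakeven_gbps(bus_bits: int, target_gb_s: float) -> float:
    """Per-pin data rate a bus of this width needs to reach target_gb_s."""
    return target_gb_s / (bus_bits / 8)


if __name__ == "__main__":
    ti = peak_gb_s(352, 11.0)
    print(f"1080 Ti: {ti:.0f} GB/s")
    print(f"256-bit needs > {breakeven_gbps(256, ti):.3f} Gbps to beat it")
    for rate in (14.0, 16.0):
        print(f"256-bit @ {rate:.0f} Gbps: {peak_gb_s(256, rate):.0f} GB/s")
```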

Araxie

Supreme [H]ardness

- Joined

- Feb 11, 2013

- Messages

- 6,463

Perhaps get out a calculator if you can't do the math in your head because that is actually more bandwidth than the 1080ti.

Still, bus width plays a huge role in memory performance. Sure, bandwidth is part of it, but depending on how the game is coded, bus width can matter more if maximum bandwidth isn't being reached.

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

Still, bus width plays a huge role in memory performance. Sure, bandwidth is part of it, but depending on how the game is coded, bus width can matter more if maximum bandwidth isn't being reached.

You are making no sense, as the effective bandwidth is what matters, and it's irrelevant how it achieves that.

Araxie

Supreme [H]ardness

- Joined

- Feb 11, 2013

- Messages

- 6,463

You are making no sense as the effective bandwidth is what matters and it's irrelevant how it achieves that.

Ehm, nope, big nope there. First learn a bit about game coding and programming, then come back here and say the same. Using the highway analogy: if the bus width is the number of lanes and the bus speed is how fast the cars are driving, then the bandwidth is the product of the two and reflects the amount of traffic the channel can carry per second. Following the same analogy, more lanes at the same car speed give a more efficient final "throughput". More bus lanes mean faster access to streamed data, larger data sizes, and faster I/O and cache operations, which also means the maximum "theoretical" bandwidth is reached more efficiently, since the Double Data Rate interface is not always fully efficient.

That applies not only to VRAM but also to system RAM. Run whatever benchmark you want on your system and you will see that the bandwidth your machine actually achieves isn't always what the theoretical numbers suggest. The same principle applies to the GPU architecture as a whole: pixel fillrate, texel fillrate, shader arithmetic rate, and rasterization rate all play into how close you get to the theoretical maximum bandwidth.

The "bandwidth measurement" calculation just gives a peak, the theoretical maximum bandwidth, which isn't always achieved (and is sometimes exceeded). Whether it's reached depends on many factors you probably won't know anyway, but to put it simply, it depends on the type of texturing and cache programming.

GTX 1080

256-bit / 8 = 32 bytes

32 × 10008 MHz = 320,256 MB/s maximum theoretical bandwidth

GTX 1080 Ti

352-bit / 8 = 44 bytes

44 × 11008 MHz = 484,352 MB/s maximum theoretical bandwidth

GTX 980 Ti

384-bit / 8 = 48 bytes

48 × 7012 MHz = 336,576 MB/s maximum theoretical bandwidth
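The per-card arithmetic above is the same formula each time, so it can be written once. A minimal Python sketch that reproduces the three figures from the bus widths and effective memory clocks quoted in the post:

```python
# Reproduces the theoretical-peak figures above:
# bytes per transfer = bus width / 8, times the effective memory clock in MHz.

CARDS = {
    "GTX 1080": (256, 10008),
    "GTX 1080 Ti": (352, 11008),
    "GTX 980 Ti": (384, 7012),
}


def peak_mb_s(bus_bits: int, effective_mhz: int) -> int:
    """Theoretical peak bandwidth in MB/s (decimal megabytes)."""
    return (bus_bits // 8) * effective_mhz


if __name__ == "__main__":
    for name, (bus, mhz) in CARDS.items():
        print(f"{name}: {peak_mb_s(bus, mhz):,} MB/s")
```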

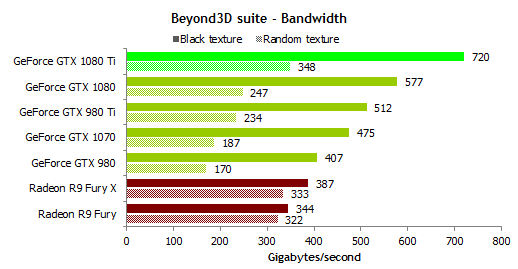

However, when you put the GPUs through a test that measures real-world bandwidth usage, you find things like this.

Do I need to explain what's going on here with texture performance and texture compression?
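As a sketch of why measured numbers can diverge from the peak: if only part of the peak is achieved, and lossless color compression (NVIDIA calls its scheme delta color compression) cuts the bytes that cross the bus, the useful bandwidth is roughly peak × efficiency × compression gain. The 80% efficiency and the 1.2x extra gain credited only to the 1080 are invented to show the effect, not measured:

```python
# Theoretical peak vs. what a workload actually sees.
# Both the achieved efficiency and the compression gain are hypothetical
# inputs; real values depend on the GPU, driver, and the frames being drawn.

def effective_mb_s(peak: float, efficiency: float, compression_gain: float = 1.0) -> float:
    """peak * fraction of peak achieved * traffic saved by lossless compression."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    if compression_gain < 1.0:
        raise ValueError("compression_gain must be >= 1.0")
    return peak * efficiency * compression_gain


if __name__ == "__main__":
    # Peaks from the post; 80% efficiency and a 1.2x compression gain are made up.
    gtx_1080 = effective_mb_s(320_256, 0.80, compression_gain=1.2)
    gtx_980ti = effective_mb_s(336_576, 0.80)  # no extra gain assumed
    print(f"1080: {gtx_1080:,.0f} MB/s vs 980 Ti: {gtx_980ti:,.0f} MB/s")
```

Under these made-up inputs the card with the lower theoretical peak ends up ahead, which is the kind of gap a real-world bandwidth test can expose.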

Didn't 3Dfx pull this exclusivity crap?

I don't recall, but if they did, they did not have the market share to cause the kind of damage NVIDIA is currently capable of.

misterbobby

2[H]4U

- Joined

- Mar 18, 2014

- Messages

- 3,814

2048 cores

128 TMUs

64 ROPs

128-bit bus

16000 MHz memory speed

2048 cores

128 TMUs

64 ROPs

256-bit bus

8000 MHz memory speed

BOTH of those would perform exactly the same if they had the same architecture and ran at the same clock speeds. The fact that one uses a 128-bit bus and the other a 256-bit bus is 100% irrelevant if the effective memory bandwidth is the same and everything else is equal.
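Plugging the two hypothetical cards into the same peak-bandwidth formula used earlier in the thread shows why the comparison is framed this way: halving the bus width while doubling the effective memory clock leaves the theoretical peak unchanged. A short sketch:

```python
# Peak bandwidth of the two hypothetical configurations in the post.
# This only checks the bandwidth figure; the post's premise that everything
# else (architecture, clocks, cores, TMUs, ROPs) is equal is taken as given.

def peak_mb_s(bus_bits: int, effective_mhz: int) -> int:
    """Bytes per transfer (bus width / 8) times effective memory clock."""
    return (bus_bits // 8) * effective_mhz


narrow = peak_mb_s(128, 16000)  # 128-bit bus, 16000 MHz effective
wide = peak_mb_s(256, 8000)     # 256-bit bus, 8000 MHz effective

if __name__ == "__main__":
    print(f"128-bit: {narrow:,} MB/s, 256-bit: {wide:,} MB/s, equal: {narrow == wide}")
```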

I don't recall, but if they did, they did not have the market share to cause the kind of damage NVIDIA is currently capable of.

I believe 3dfx did away with AIB partners after acquiring STB Systems and sold its GPUs alone. I could be wrong, but I don't recall seeing a Voodoo3, 4, or 5 from any AIB partner.

That's correct, but not really directly comparable to the GPP. NVidia isn't making their own brand, they're effectively hijacking brands belonging to other companies.

Nebell

2[H]4U

- Joined

- Jul 20, 2015

- Messages

- 2,382

Wallowing in the muck of avarice, much?

Why would anyone want even more problems with availability by doing a gleaming mining review?

Well if he doesn't, someone else will beat him to it. Like Anandtech.

Getting closer. GTX 1180 spotted in techpowerup database. Computex in 28 days.

https://www.techpowerup.com/gpudb/3224/geforce-gtx-1180

https://wccftech.com/nvidia-geforce-gtx-1180-12nm-database/
