jojo69

[H]F Junkie

- Joined

- Sep 13, 2009

- Messages

- 11,267

I did not know that about Tom's

lame


Me neither. I know their forums are pretty bad because of the lack of moderator control, but I always go there for their articles. Granted, I always read as many articles/reviews as I can from different websites to come to an unbiased conclusion.

Zarathustra[H] said: Yeah, I used to read THG back in the '90s, before I knew of their shady practices. These days it's: [H] > Anand > Guru3D

Agreed. Also, it seems that the Nvidia SLI setup is less sensitive to the PCIe lane limitation than the AMD setup. The SLI setup was only affected by 10% at 1080p using PCIe x4, and even less at 1600p.

Here are some other results directly contradicting [H]'s results:

Even with only a single monitor and a single GPU, there is up to an 18% performance loss going from x16 to x4, and that is on slower cards that don't even have to swap frames.

The 18% drop in performance is on an AMD card; the Nvidia card (GTX 570) loses 6-11%. The percentage drop actually decreases as the resolution goes up on the Nvidia card, so how much would it be at Eyefinity res? My own experience at Eyefinity resolutions is that going from x16/x16 PCIe to x16/x4 PCIe gave a 3-5% performance drop on a CrossFire HD 6970 system.

None of this detracts from the fact that even with this probable 10% boost, the 3-way SLI would be about as fast as, or marginally faster than, a much cheaper AMD TriFire solution. If, as some seem to imply, there was some sort of CPU bottleneck, then why is the AMD TriFire system getting 18% higher speeds on the same CPU? Surely if there was a bottleneck with the CPU, both systems would have hit a wall and had identical FPS in all tests.

There is no CPU limitation affecting this evaluation in any way. People keep bringing it up like it's something that needs to be examined, but it's just not an issue. 7MP is approaching two 2560 x 1600 monitors. Would an i7 @ 3.6GHz HT bottleneck a 2560 monitor? No, it wouldn't, and it definitely wouldn't bottleneck almost two of them. This setup's weakest link is its GPU, not its CPU. I stand by this post, and will reference it when the re-test proves my claim.
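For anyone who wants to sanity-check the pixel math in that post, here is a rough sketch. The 7 MP figure is taken from the post as-is (the exact test resolution isn't stated here), and the helper name `megapixels` is just made up for illustration.

```python
# Pixel-count sanity check for the "7MP vs two 2560x1600 monitors" comparison.
# The 7 MP figure is the approximate number quoted in the post; the rest is
# plain arithmetic. `megapixels` is a hypothetical helper, not from any library.

def megapixels(width: int, height: int, monitors: int = 1) -> float:
    """Total pixels across `monitors` identical displays, in millions."""
    return width * height * monitors / 1_000_000

one_panel = megapixels(2560, 1600)       # a single 2560x1600 monitor
two_panels = megapixels(2560, 1600, 2)   # two of them
test_res_mp = 7.0                        # approximate figure from the post

print(f"one 2560x1600: {one_panel:.3f} MP")
print(f"two 2560x1600: {two_panels:.3f} MP")
print(f"7 MP as a share of two panels: {test_res_mp / two_panels:.0%}")
```

So 7 MP works out to roughly 85% of two 2560x1600 panels, which fits the "approaching two" wording. It says nothing by itself about where the bottleneck is.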

3 GPUs is also A LOT of driver overhead, bro.

Both configurations are CPU constrained. I'm seeing a 25-30% improvement going from 3.6 to 5 GHz in Warhead, BF:BC2 and Metro (TriFire 6970).
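To put those numbers in context, here is a quick back-of-the-envelope sketch comparing the clock increase with the reported FPS gain. It only uses the figures from the post (3.6 GHz, 5 GHz, 25-30%); the function name `scaling_efficiency` is hypothetical, and the ratio is a rough indicator, not proof of where the limit sits.

```python
# How much of a CPU clock increase shows up as extra FPS?
# Inputs are the figures quoted in the post; `scaling_efficiency` is a
# hypothetical helper written for this illustration.

def scaling_efficiency(clock_before_ghz: float, clock_after_ghz: float,
                       fps_gain: float) -> float:
    """Fraction of the relative clock increase that appears as FPS gain."""
    clock_gain = clock_after_ghz / clock_before_ghz - 1.0
    return fps_gain / clock_gain

clock_gain = 5.0 / 3.6 - 1.0
low = scaling_efficiency(3.6, 5.0, 0.25)
high = scaling_efficiency(3.6, 5.0, 0.30)

print(f"clock increase: {clock_gain:.1%}")
print(f"share of it seen as FPS: {low:.0%} to {high:.0%}")
```

A ratio near 100% would mean FPS tracks the CPU clock almost one-to-one, and a ratio near 0% would mean the clock barely matters. The reported gains land at roughly two thirds to three quarters of the clock increase.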

None of this detracts from the fact that even with this probable 20% boost (being generous here), the 3-way SLI would be about as fast as, or marginally faster than, a much cheaper AMD TriFire solution.

Bolded part is simply not true, as both anisotropic filtering quality and AA are currently better on Nvidia cards. High-sample SLI antialiasing, SGSSAA and TrAA (supersampling) all work in DirectX 10 and DirectX 11, with nothing similar from AMD to counter this (albeit they have MLAA, which tbh is more of a performance preset than a quality one).

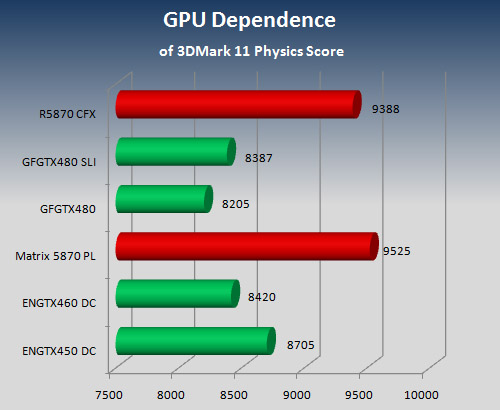

Yeah, here is 4GHz vs 3.6GHz in 3DMark 11... Even though it says 3.8GHz, it's actually 4GHz.

http://3dmark.com/compare/3dm11/914071/3dm11/1107130

BTW, to address VRAM concerns even more, I will make some apples-to-apples graphs at 2560x1600 in the quad-GPU testing I'm doing, and in the re-testing here. We certainly want to address that and rule it out completely as a factor. I've already done that, of course, but I will do 2560x1600 too, so those who find value in that will have it.

Fast system, is fast.

It sounds like you'll be pretty busy for a while. I assume that Kyle got you the new platform already? Can't wait to see as you guys are probably the first review site to test this much graphics power with this much CPU power.

Yes, behold the power! *watches as all electricity within a 20 mile radius shuts down*

You guys should just use the 3GB 580s for Eyefinity/Surround resolutions if you can get your hands on them.

They would probably have to buy it, and that would get expensive.

Fair enough, but they could at least use a better game selection to reach their conclusion. F1 and DA2 are known to run far better on AMD hardware. To keep things fair, either add some games known to favour Nvidia or use a wider, more balanced set of games when making claims.

Can you even buy them in stock anywhere right now?

Civ 5/Metro/BC2/Crysis have been known to favor NV in the past...

I really don't want to argue about that though. Save that for another rant.

We are in desperate need of new games, there are some good ones coming out this year I can't wait for.

I'd just use a wider variety of games before making blanket statements. It's no coincidence that the two games AMD runs best out of any on the market are AMD-sponsored games. That's fair enough, but at least make the sample of games bigger.

Those games are in effect to AMD what Lost Planet 2 is to Nvidia, which I think would not be appropriate in a 5 game review.

Would be nice to see some OC results if possible, and a couple of synthetic benches too.
