Nvidia Expands G-Sync Support to Approved Adaptive Sync Monitors

- Thread starter: AlphaAtlas

- Tags: adaptive sync, ces, gsync, nvidia, vrr

nightanole

[H]ard|Gawd

- Joined

- Feb 16, 2003

- Messages

- 2,032

General VRR question. My Samsung TV and Xbox One X support it, but my Marantz AVR is my hub. Is this a pass-through type of thing, or does the Xbox need to go directly to the TV?

Thanks.

Based on experience, the Marantz does not care about frequency, only resolution. So if the Marantz supports 4K (HDMI 2.0), it should work.

nightanole

[H]ard|Gawd

- Joined

- Feb 16, 2003

- Messages

- 2,032

HDMI 2.1 supports VRR but

1) does that mean panel makers must support it?

2) are there any standards? I appreciate that GSYNC mandated that GSYNC monitors were capable of 144Hz and LFC. Sure Freesync seemed a lot cheaper but when you are comparing a 60hz monitor to a GSYNC 144hz monitor, why wouldn't you expect the Gsync one to be more expensive?

I think AMD noticed this too and attached standards to the Freesync 2 certification.

1) Yes, if they want to write HDMI 2.1 on the box.

2) Yes. For example, AMD's LFC kicks in via the GPU if the panel reports a maximum refresh rate of at least 2.5x its lowest supported frequency. So a popular TV setup is 24Hz-60Hz, and a popular PC setup is 48Hz-120/144Hz.
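To make the 2.5x rule of thumb concrete, here is a minimal sketch in Python. It is only an illustration of the arithmetic, not AMD's driver logic: the function name `lfc_eligible` and the `ratio` default are assumptions taken from the figure quoted in this post, and the 48-75Hz panel is a hypothetical narrow-range example.

```python
def lfc_eligible(min_hz: float, max_hz: float, ratio: float = 2.5) -> bool:
    """Return True if a panel's VRR range is wide enough for LFC.

    Assumption: the 2.5x threshold is the figure quoted in the post,
    not a value read from any driver or specification.
    """
    if min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected 0 < min_hz <= max_hz")
    return max_hz >= ratio * min_hz


# Ranges mentioned in the post, plus one hypothetical narrow-range panel.
for low, high in [(24, 60), (48, 120), (48, 144), (48, 75)]:
    print(f"{low}-{high} Hz -> LFC eligible: {lfc_eligible(low, high)}")
```

Under that assumption, the 24-60Hz and 48-120/144Hz ranges qualify, while a 48-75Hz panel would not.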

My understanding is that being on the list is a formality. It just means that Nvidia has tested the monitor and blessed it as working properly. Sort of like a Microsoft WHQL driver.

The way I read it you should be able to go in to the driver settings and manually enable it for any VRR or Freesync display as long as you have a 10xx or 20xx GPU.

As long as one remembers that the "formality" actually means that your mileage may vary. Freesync2 cleaned up some of the earlier problems with Freesync where performance parameters allowed a wide range in the specification. That same wide range is what will have a measurable impact in VRR performance with these drivers.

BloodyIron

2[H]4U

- Joined

- Jul 11, 2005

- Messages

- 3,439

nVidia bought PhysX, and were hoping to capture the market with Physics offloading tech. But most of the industry didn't care.

nVidia rolled out G-Sync to try and capture the dynamics sync refresh market, but it's been expensive, and most gamers don't really buy into it.

Huh?

Nvidia have been annoying lately in too many ways.

But this 'looks' to be a great move even if they were forced in this direction.

They don't need to provide an option to let any VRR display try the tech, but they are.

Their G-Sync HDR series displays are missing a trick, though.

They should push at least 2000 nits to be the best.

Like I stated before, guaranteed Nvidia is going to pull some shenanigans with this. Most likely with branding: if you want to put the GSync logo on your box, you can't have the Freesync logo (or even mention Freesync support at all), or Nvidia may even go so far as to force "qualified monitors" to hack out support for Freesync altogether, making it difficult or impossible to enable on AMD cards.

Call me pessimistic, but I simply don't see Nvidia as the 'generous' type.

I think you see evil where no such evil exists. Oh there is the usual corporate greed, which is present on both sides of the house. But nothing like what you suggest.

Here are the details: Freesync itself is not actually a part of the monitors. VRR technology is part of the VESA standard, as detailed in this link:

https://vesa.org/featured-articles/vesa-adds-adaptive-sync-to-popular-displayport-video-standard/

The VESA standard allows for the inclusion of Adaptive Sync (VRR), and if a monitor has that, then it's Freesync compatible. So here is reality as it meets your pessimism:

A VESA standard monitor without VRR support won't be doing any VRR, no matter whose card or label it wears.

A VESA standard monitor with VRR support will either be fully supported by both NVidia and AMD or partially capable, depending on the quality of the VRR implementation put into the display.

And then there will be the GSync monitors, which implement full NVidia GSync and no VESA VRR support at all.

Now if you look at the marketing today for the second in the list, the VESA monitors with VRR support, most are marketed saying they are Freesync compatible, below is my example;

https://www.amazon.com/Samsung-LC49...ocphy=9030195&hvtargid=pla-456716051689&psc=1

Pay close attention to the text;

AMD Radeon FreeSync 2 support

The CHG90 supports AMD’s new Radeon FreeSync 2 technology

This monitor supports Freesync2, an AMD implementation. If the monitor is also on NVidia's list of fully supported displays, then it will also support NVidia's VRR tech and be just as deserving of that title. As such, I would expect NVidia to want equal billing at a minimum. They might even try to push for more, like forcing manufacturers to choose between GSync and Freesync. But if they "hacked out VRR", yeah, I don't see that working well at all, as that part is a VESA standard, not a marketing mechanism.

Reading the interwebs, there are some folks who are especially upset, namely those who recently bought a G-Sync monitor to replace a FreeSync one.

Hard to hurt someone that has already taken the shaft.

^ Damn that has to hurt. That's why I went freesync, price difference is so big can basically buy two quality FS monitors for a single G-Sync monitor. I went with one 32" 4k $350 (but 60hz and VA) and 144hz 27" 1440p IPS $400, really nice to be able to switch from 4k to 144hz on what game I was playing. I had surround PG278Q which worked pretty well with SLI'd 980ti's, but the single DP connection really hindered work.

Falkentyne

[H]ard|Gawd

- Joined

- Jul 19, 2000

- Messages

- 1,868

Too bad my BenQ XL2540 isn't in the list, but if it can be turned on anyway unofficially, it shouldn't matter. Quite surprised by this move. For me personally it won't matter that much, as I simply prefer running 144Hz + BenQ Blur Reduction (strobing) on mine (I prefer it over 240Hz non-strobed for better contrast/image quality and smoother motion).

The XL2540 isn't in the list but will work unofficially as it's on AMD's freesync list.

The XL2546 isn't, however, even though it has the exact same scaler. The scaler is capable of VRR. Not sure why it's locked out by the firmware. (perhaps because DyAc (Benq blur reduction) is on by default, even though it CAN be forced off). I don't know if anyone got freesync working over DP over this monitor. (the HDMI toastyX CRU hack does work as the scaler fully supports VRR).

Cr4ckm0nk3y

Gawd

- Joined

- Jul 30, 2009

- Messages

- 847

nVidia bought PhysX, and were hoping to capture the market with Physics offloading tech. But most of the industry didn't care.

Nvidia rolled out G-Sync to try and capture the dynamics sync refresh market, but it's been expensive, and most gamers don't really buy into it.

You have no idea what you are talking about with PhysX.

Most gamers don't really buy into higher tier cards either but Nvidia sells a bunch of them don't they. Gsync wasn't developed for all gamers. It was developed for higher tier gamers running fast cards. Thus the custom scaler and higher end panels.

It sounds like he does know what he's talking about in regards to physx.

Appears it only works on 10 and 20 series GPUs, even though nVidia has supported it since the 600 series. Ok, they want to sell cards...

Does it require a "qualified" monitor, or can you actually enable it on any Freesync/VRR with YMMV?

It only works on 10 series and newer GPU's because the older cards don't have the hardware needed to connect to an Adaptive sync monitor.

If they allow their GPUs to connect to any adaptive sync monitor, they will be able to connect to all Adaptive Sync monitors. But, as you say, YMMV as it does need some fine tuning for different monitors.

My understanding is that while VRR and Freesync work very similarly, G-Sync works differently, and uses some sort of hardware acceleration.

Presumably with Freesync and VRR you wind up with more either GPU or CPU load, but that is unclear to me.

Either way, without an Nvidia blessing, I doubt it would have been as easy as editing an INF file.

G-Sync works the same way too. It's just that Nvidia went about it differently. Both solutions are based on the eDP standard in laptops. For VRR (G-Sync or Freesync) to work you need a timing controller, a frame buffer and a suitable scaler. For Freesync these are split between the GPU and the monitor. For G-Sync the FPGA module combines all of these into one. There is no extra load on the GPU/CPU because of this.

This is also the reason G-Sync supports a wider range of GPUs: the GPU only needs to support DisplayPort 1.2. Whereas with Freesync/Adaptive Sync, the GPU has to support DisplayPort 1.2a, which needs extra hardware.

^ Damn that has to hurt. That's why I went freesync, price difference is so big can basically buy two quality FS monitors for a single G-Sync monitor. I went with one 32" 4k $350 (but 60hz and VA) and 144hz 27" 1440p IPS $400, really nice to be able to switch from 4k to 144hz on what game I was playing. I had surround PG278Q which worked pretty well with SLI'd 980ti's, but the single DP connection really hindered work.

That's funny, price difference and performance was exactly why I went the other way back before Vega. At that time, the $300 "GSync Tax" was cheaper than buying a second AMD video card (to keep up with the power of one GeForce card) and a bigger PSU to power them both. That's not to mention the difference in my electric bill, which, although small by the month, adds up over the year, much less the life of the equipment.

But hey, it's a timing thing and what was a valid set of conditions one day don't make it the next. We all have our own demands and desires and the situation we have to make our decisions in changes all the time. Sometimes it works out well for us, sometimes not so much.

It only works on 10 series and newer GPU's because the older cards don't have the hardware needed to connect to an Adaptive sync monitor.

I think the existence of G-Sync on notebooks proves you wrong. It uses Adaptive-Sync instead of the G-Sync module, and has worked before Geforce 1000 series.

Actually this doesn't prove what you think it does. Don't worry, lots of people who don't understand the tech make the same mistake.

Laptop hardware has different requirements than desktop hardware. The VRR tech that's used in Freesync/adaptive sync has been around in laptops since 2009. It's a power saving feature that's part of the Embedded Display port specification. If Nvidia or AMD put a GPU into a laptop and want to use the embedded display port, it has to support the eDP specification. That's why Nvidia's GPU's don't need a module on laptops, the hardware needed is already in place.

This hardware is not on desktop GPUs as they don't need that kind of power savings. The desktop display port specification didn't have any such requirements until adaptive sync was made an optional part of the standard in 2014.

It's a power saving feature that's part of the Embedded Display port specification.

DisplayPort is a superset of embedded DisplayPort since 1.2.

This hardware is not on desktop GPUs as they don't need that kind of power savings. The desktop display port specification didn't have any such requirements until adaptive sync was made an optional part of the standard in 2014.

But the silicon is identical between desktop and mobile.

It sounds like he does know what he's talking about in regards to physx.

Are you sure, with statements like "nVidia bought PhysX, and were hoping to capture the market with Physics offloading tech. But most of the industry didn't care."

Let's dissect that statement first. Actually, it's easier to just flip it. NVidia bought PhysX because they don't want to do anything to try and succeed as a business? It's like "Doh !"

That sounds like nobody adopted PhysX, but that's far from true;

PhysX technology is used by game engines such as Unreal Engine (version 3 onwards), Unity, Gamebryo, Vision (version 6 onwards), Instinct Engine,[27] Panda3D, Diesel, Torque, HeroEngine and BigWorld.[19]

As one of the handful of major physics engines, it is used in many games, such as The Witcher 3: Wild Hunt, Warframe, Killing Floor 2, Fallout 4, Batman: Arkham Knight, Borderlands 2, etc. Most of these games use the CPU to process the physics simulations.

That list of games leaves out a lot of other titles. What's more, now that NVidia has Open Sourced PhysX, it's even more common.

So the industry did care, PhysX has been widely adopted, it even crossed to the console makers. Of course NVidia did it for the purpose of gaining market share.

Now let's look at his statement about G-Sync;

Nvidia rolled out G-Sync to try and capture the dynamics sync refresh market, but it's been expensive, and most gamers don't really buy into it.

The "dynamics sync refresh market" ?

WTF is this? Let me explain my problem with this comment this way? What discrete graphics card manufacturer represents the non-dynamic sync refresh market? It's not a market, that is just a non-existent thing you just made up. There is no such thing. There is a discrete graphics card market, AMD and NVidia both have offerings that support Adaptive Sync, and each leverages what it can to try and secure as much of that market as they are able.

Did NVidia come out with G-Sync in order to gain market share? Of course it did. There was no other adaptive sync option available on the market in 2013/2014 when NVidia released G-Sync. Freesync wasn't released until March 2015, so for over a year G-Sync was the only horse in the race for adaptive sync. Freesync has not always been the cheaper option by default, because not everyone is in a blank slate position when deciding to buy into the technology. Freesync monitors are cheaper than G-Sync monitors, but the total solution has at times been more expensive, particularly if a customer already owned a good NVidia card. Everyone is in their own unique situation.

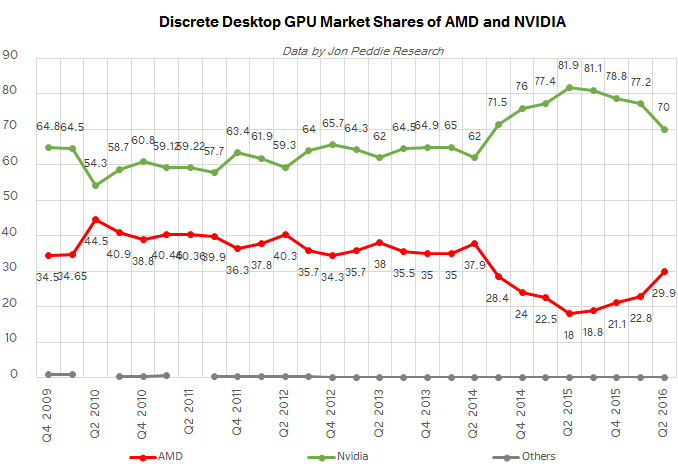

Overall, I'm not seeing any time prior to late 2015 where NVidia was struggling for market share in the dedicated graphics card market except that small little dip in 2010. Back when PhysX came out in 2008, NVidia had the lead by a recognizable margin, but for the next seven years since PhysX was purchased, NVidia extended that market share lead into a dominant position. That has changed some but it remains a 70/30 split in NVidia's favor.

Now I am not claiming that PhysX and G-Sync, added to NVidia's arsenal, were the keys to their success. I'm simply pointing out that NVidia already had a superior market share position, and since PhysX and G-Sync they have strengthened that position substantially. I'm thinking any change in market share following the changes in late 2015 is at least in some part due to crypto mining, but by this time PhysX is not really part of the picture, as it is now Open Source, and G-Sync is now supporting Adaptive Sync across the new HDMI standard while maintaining its G-Sync capability through the DisplayPort standard.

And here is a very good article on why manufacturers have a more expensive time with G-Sync monitors, it's not just the costs of the G-Sync module and licensing;

https://www.pcworld.com/article/312...ia-g-sync-on-monitor-selection-and-price.html

EDIT: And I must apologize if this rambles and at any time seems disjointed; I was distracted several times while writing it.

Parja

[H]F Junkie

- Joined

- Oct 4, 2002

- Messages

- 12,670

Interesting video I saw in the Display forum that shows what can happen when enabling G-Sync on a monitor that's not on the validated list...

So what does this mean for consumers? Are G-Sync monitors going to slowly go away in favor of Freesync 2? I don't think I'd buy another one now.

Probably, since consumers usually choose what is cheap and so do manufacturers. In theory G-Sync monitors will continue, since it is a slightly better solution. The thing is, Freesync/Adaptive Sync supports any card that can use it, which includes things like the game consoles, and it doesn't require the G-Sync module. That makes it attractive to manufacturers, since one part can support everything and it costs less to make. That will likely translate to lower prices in the store, which will lead to consumers getting them by and large, since people tend to prioritize price unless there's a pretty major quality advantage (and even then often prioritize price).

Interesting video I saw in the Display forum that shows what can happen when enabling G-Sync on a monitor that's not on the validated list...

Not sure if I understood correctly, but that latter monitor that was blinking, did it do the same with Radeon cards? Since English is not my native language, it is sometimes hard to make full sense of sentences when someone speaks quickly. Anyway, if that is the case then the screen is just plain faulty, or worse, badly made and Freesync got glued on top for marketing purposes. And the other one that was blurring badly, I think that was pretty much the hallmark of the poor first wave of Freesync monitors IIRC.

*edit* Watched that part again with auto subtitles and yeah, the blinking monitor is just shitty. So I guess we can say it is official, Nvidia will now support Freesync much like AMD. But out of the sea of crappy Freesync screens it is up to the customer to find a good one.

*edit2* A few quotes from YouTube comments:

It's funny how tech journalists fall for Nvidia marketing, I have the 3rd monitor (if is the LG 144Hz as seems to be) the blinking never happened on my previous 480 or in my current Vega 64, it's happening on the Nvidia card because it is locked under the freesync range, which starts at 50Hz in this monitor. Edit: To clarify, what I meant is that Nvidia driver is forcing the monitor to go below its minimum refresh rate instead of turning off freesync below 50Hz.

AMD is aware of the supported Freesync range of the screens and turns it off when outside of the limits. Nvidia, on the other hand, would have you believe it's a monitor issue, because they are driving the screen outside of its supported Hz range, which will cause issues with any screen. Custom controls over the Adaptive Sync range would be needed for the user to set up the correct range, OR Nvidia can do some research and use the right ranges for the screens.
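The behaviour these comments describe can be sketched as a small decision function. This is a simplified model under stated assumptions, not either vendor's driver code: the names `plan_refresh` and `RefreshPlan` are hypothetical, and low-framerate handling is modelled as showing each frame a whole number of times so the panel stays inside its advertised range.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class RefreshPlan:
    vrr_active: bool
    panel_hz: Optional[float]  # rate the panel is driven at; None when VRR is off
    repeats: int               # how many times each rendered frame is shown


def plan_refresh(fps: float, min_hz: float, max_hz: float) -> RefreshPlan:
    """Pick a panel refresh rate that never leaves [min_hz, max_hz]."""
    if fps <= 0 or min_hz <= 0 or max_hz < min_hz:
        raise ValueError("expected fps > 0 and 0 < min_hz <= max_hz")
    if fps > max_hz:
        # Above the range: fall back to fixed refresh.
        return RefreshPlan(False, None, 1)
    if fps >= min_hz:
        # Inside the range: the panel simply follows the frame rate.
        return RefreshPlan(True, fps, 1)
    # Below the range: repeat frames if that lands back inside the range.
    repeats = math.ceil(min_hz / fps)
    if fps * repeats <= max_hz:
        return RefreshPlan(True, fps * repeats, repeats)
    # Range too narrow for frame repetition: turn VRR off rather than
    # drive the panel below its minimum.
    return RefreshPlan(False, None, 1)


# A 50-144 Hz panel like the one described in the comment above.
print(plan_refresh(40, 50, 144))
print(plan_refresh(100, 50, 144))
# A hypothetical narrow 48-60 Hz panel.
print(plan_refresh(40, 48, 60))
```

The point of the sketch is the last branch: when the frame rate drops below the panel's minimum and repetition cannot help, the safe choice is to disable VRR, which is what the commenters say the AMD driver does.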

So let me get this straight, Nvidia really can't help themselves but to do SOMETHING to make an otherwise good thing appear worse. Nvidia being Nvidia again.

Not sure if I understood correctly, but that latter monitor that was blinking, did it do the same with Radeon cards? Since english is not my native language it is sometimes hard to make full sense of sentences when someone speaks quickly. Anyway, if that is the case then the screen is just plain faulty, or worse, badly made and Freesync got glued on top for marketing purposes. And the other one that was blurring badly, I think that was pretty much the hallmark for the poor first wave Freesync monitors IIRC.

*edit* Watched that part again with auto subtitles and yeah, the blinking monitor is just shitty. So I guess we can say it is official, Nvidia will now support Freesync much like AMD. But out of the sea of crappy Freesync screens it is up to the customer to find a good one.

That is what I got out of it as well. If you were to use a Radeon card you would get the same blinking. If that is the case then that monitor would have to go back.

Are you sure, with statements like "nVidia bought PhysX, and were hoping to capture the market with Physics offloading tech. But most of the industry didn't care."

Let's dissect that statement first. Actually, it's easier to just flip it. NVidia bought PhysX because they don't want to do anything to try and succeed as a business? It's like "Doh !"

Of course NVidia is working to increase market share. But he continues by saying that the Industry didn't care .....

Lol my reading must have not been good, because I totally missed the part where he stated nobody cares about physx.

Quoted from the Nvidia page. Looks like you can turn on VRR manually, even if it's not officially supported by Nvidia.

"Support for G-SYNC Compatible monitors will begin Jan. 15 with the launch of our first 2019 Game Ready driver. Already, 12 monitors have been validated as G-SYNC Compatible (from the 400 we have tested so far). We’ll continue to test monitors and update our support list. For gamers who have monitors that we have not yet tested, or that have failed validation, we’ll give you an option to manually enable VRR, too."

Not sure if I understood correctly, but that latter monitor that was blinking, did it do the same with Radeon cards? Since english is not my native language it is sometimes hard to make full sense of sentences when someone speaks quickly. Anyway, if that is the case then the screen is just plain faulty, or worse, badly made and Freesync got glued on top for marketing purposes. And the other one that was blurring badly, I think that was pretty much the hallmark for the poor first wave Freesync monitors IIRC.

*edit* Watched that part again with auto subtitles and yeah, the blinking monitor is just shitty. So I guess we can say it is official, Nvidia will now support Freesync much like AMD. But out of the sea of crappy Freesync screens it is up to the customer to find a good one.

*edit2* A few quotes from YouTube comments:

So let me get this straight, Nvidia really can't help themselves but to do SOMETHING to make an otherwise good thing appear worse. Nvidia being Nvidia again.

I saw a couple comments saying they had flickering with that monitor on AMD cards. AMD does some driver level tweaks on Freesync monitors to make some of them work better I believe. Some of those flickering issues can be dealt with by changing monitor settings or doing other tweaks. Nvidia is likely running the monitors mostly stock in their testing, relying on monitor manufacturers to be telling the truth about the specs and capabilities of the panels. If Nvidia wanted to do driver level monitor-by-monitor tweaks they probably could ensure that all Freesync monitors worked as well on their cards as they do on AMD’s.

I bet there was a loophole in the driver where someone knew about this, and basically Nvidia came clean.

Only 12 monitors? I thought 400 would work.

Something fishy.

Only 12 validated. You can force it to work on any Freesync monitor with the January 15 driver, but YMMV on how it works.

It looks like Nvidia will continue validating other freesync monitors but it sure seems like they are validating high refresh rate monitors first.

Forgive me if I am wrong, but wasn't the reason behind being able to enable G-Sync on laptop displays primarily because eDP already had VRR tech built into them as a power saving measure, so G-Sync was piggy backed on that tech, but no such thing existed for desktop computers (power saving simply isn't a problem here), so nVidia was essentially forced to make their own modules in order to do what they wanted?

Dayaks

[H]F Junkie

- Joined

- Feb 22, 2012

- Messages

- 9,773

This is great news!!

Lol my reading must have not been good, because I totally missed the part where he stated nobody cares about physx.

LOL, your simple, straightforward admission of being human makes my "thesis" look pretty damned ridiculous.

I saw a couple comments saying they had flickering with that monitor on AMD cards. AMD does some driver level tweaks on Freesync monitors to make some of them work better I believe. Some of those flickering issues can be dealt with by changing monitor settings or doing other tweaks. Nvidia is likely running the monitors mostly stock in their testing, relying on monitor manufacturers to be telling the truth about the specs and capabilities of the panels. If Nvidia wanted to do driver level monitor-by-monitor tweaks they probably could ensure that all Freesync monitors worked as well on their cards as they do on AMD’s.

Wait, tweak which drivers? The NVidia drivers or the monitor drivers?

At first I thought you meant monitor drivers and I was thinking, shit, that's nothing, just a text file with a list of supported resolution and refresh rate combinations. But then I looked again and thought, he probably means the display drivers for the video card.

DisplayPort is a superset of embedded DisplayPort since 1.2.

But the silicon is identical between desktop and mobile.

See my last post and try to understand it this time.

A laptop/APU that uses eDP has supported VRR since 2009 for power saving. There is hardware required to make VRR work, and as part of the eDP specification, you have to install that hardware if you put a GPU into a laptop. This is why Nvidia G-Sync works on laptops without a module.

Desktop GPUs that use DisplayPort use the DP specification. DP did not support VRR until 2014, and even then it was only made an optional part of the standard. The timing controller, frame buffer, etc. that you need to make VRR work aren't required on a desktop GPU. And before the 260x launched in September 2013, no desktop GPU had this hardware installed. Pascal is the first desktop GPU from Nvidia with the needed hardware.

Forgive me if I am wrong, but wasn't the reason behind being able to enable G-Sync on laptop displays primarily because eDP already had VRR tech built into them as a power saving measure, so G-Sync was piggy backed on that tech, but no such thing existed for desktop computers (power saving simply isn't a problem here), so nVidia was essentially forced to make their own modules in order to do what they wanted?

You are correct. Adaptive sync wasn't a thing until after May 2014. Nvidia came up with their own solution in October 2013.

I'm surprised this thread only has 3 pages as this is a huge step in the right direction for Nvidia. The G-SYNC "fee" or "tax" or whatever you would like to call it was very unappealing and also being limited to either FreeSync if you had AMD or G-SYNC if you had Nvidia was very anti-consumer. Supporting adaptive sync monitors outside of G-SYNC certified ones gives consumers more purchasing power and an option to avoid premiums associated with G-SYNC certified monitors.

However, I am slightly upset to see that out of 400 monitors they tested only 12 passed. Makes me wonder if Nvidia is purposely making the validation process extremely strict (more than it needs to be) on these monitors.

Nothing to wonder about at all. The implementation of VRR to support AMD's FreeSync has always been a tale of wide variances in acceptable specifications. It was never an exacting set of parameters. It's why people's experiences with FreeSync in the past have always been a mixed bag.

Parja

[H]F Junkie

- Joined

- Oct 4, 2002

- Messages

- 12,670

I'm surprised this thread only has 3 pages as this is a huge step in the right direction for Nvidia.

'Cuz the proof is in the pudding. When the driver releases and people have a chance to actually test it (especially with non-approved monitors), I think you'll see a lot more discussion.

That sounds all logical, until you realize that (1) desktop and mobile GPUs are the same silicon, and (2) DP and eDP are packet based protocols, and all that information is encoded in the packets. If you can output VRR via eDP, then you can also output VRR via DP, it's that simple.

See my last post and try to understand it this time.

Laptops and APUs that use eDP have supported VRR since 2009 for power saving. There is hardware required to make VRR work, and as part of the eDP specification you have to install that hardware if you put a GPU into a laptop. This is why Nvidia G-Sync works on laptops without a module.

Desktop GPUs that use DisplayPort follow the DP specification. DP did not support VRR until 2014, and even then it was only made an optional part of the standard. The timing controller, frame buffer, etc. that you need to make VRR work aren't required on a desktop GPU. And before the 260X launched in September 2013, no desktop GPU had this hardware installed. Pascal is the first desktop GPU from Nvidia with the needed hardware.

You can also connect a VRR capable eDP display to a Ryzen APU (not sure about dGPUs) and bam! you get FreeSync. (if the display EDID does not advertise Adaptive-Sync you may need to fake it through CRU or similar.)
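For anyone who wants to see what their panel actually reports before reaching for CRU, here is a rough Python sketch that reads the vertical refresh range out of a base EDID block. It assumes the range lives in the Display Range Limits descriptor (tag 0xFD) of the first 128-byte block and ignores the EDID 1.4 rate-offset flags, so it will misreport panels above 255 Hz. The function names and the demo EDID are made up for illustration, and a range showing up here does not by itself prove the display does Adaptive-Sync.

```python
from typing import Optional, Tuple

EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])
DESCRIPTOR_OFFSETS = (54, 72, 90, 108)  # four 18-byte descriptors in the base block
RANGE_LIMITS_TAG = 0xFD                 # Display Range Limits descriptor


def vertical_range_hz(edid: bytes) -> Optional[Tuple[int, int]]:
    """Return (min_hz, max_hz) from the range limits descriptor, or None if absent.

    Assumption: rate-offset flags (descriptor byte 4) are ignored.
    """
    if len(edid) < 128 or edid[:8] != EDID_HEADER:
        raise ValueError("not a base EDID block")
    for off in DESCRIPTOR_OFFSETS:
        d = edid[off:off + 18]
        # Display descriptors start with three zero bytes, then the tag.
        if d[0] == 0 and d[1] == 0 and d[2] == 0 and d[3] == RANGE_LIMITS_TAG:
            return d[5], d[6]
    return None


def build_demo_edid(min_hz: int, max_hz: int) -> bytes:
    """Hypothetical, mostly empty EDID block for trying the parser (not a valid EDID)."""
    block = bytearray(128)
    block[:8] = EDID_HEADER
    block[54:72] = bytes([0, 0, 0, RANGE_LIMITS_TAG, 0, min_hz, max_hz]) + bytes(11)
    return bytes(block)


if __name__ == "__main__":
    print(vertical_range_hz(build_demo_edid(48, 144)))
```

On Linux you could feed it the raw bytes from a connector's `edid` file under `/sys/class/drm/`, but treat the result as a hint only.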

That sounds all logical, until you realize that (1) desktop and mobile GPUs are the same silicon, and (2) DP and eDP are packet based protocols, and all that information is encoded in the packets. If you can output VRR via eDP, then you can also output VRR via DP, it's that simple.

You can also connect a VRR capable eDP display to a Ryzen APU (not sure about dGPUs) and bam! you get FreeSync. (if the display EDID does not advertise Adaptive-Sync you may need to fake it through CRU or similar.)

*sigh* You really don't understand.

Desktop and mobile graphics chips might be the same, but the whole GPU isn't. And what are you talking about? Packet-based protocols, lol. You have a standard: DP, HDMI, eDP, etc. No matter what the standard, it will have certain specifications, some optional, some required. To meet some of those specifications, certain hardware is needed, the right standard of materials, etc. Some of the specifications of the eDP standard are that you must have a frame buffer and TCON (timing controller) on the embedded DisplayPort to handle variable refresh rates, and a frame buffer on the display to handle panel self refresh. These are all used as power saving features.

DisplayPort, meanwhile, has a different set of requirements. Putting VRR-capable hardware behind a desktop GPU's DisplayPort output became an optional part of the DisplayPort 1.2a specification in May 2014. No desktop GPU needed these features before that; power saving wasn't a concern for desktop users. AMD had been working on their VRR solution alongside the development of their second generation GCN cards. It's why the 290X, 290, etc. have the hardware needed even though the specification hadn't been approved by VESA at that time.

Your last statement just proves my point. Of course you can use FreeSync with a Ryzen APU. All APUs use the eDP standard; that's why they work with Adaptive-Sync.

That's why, when FreeSync was launched, people wondered why the 7970 and 7950 didn't support it but cheap Kaveri APUs did. They are both first generation GCN. So why didn't they both work? Simple: Kaveri had the hardware needed because it had been built to use the eDP spec; the 7970 didn't, as it was built to use the DisplayPort spec.

Some of the Specifications of the eDP standard

And since version 1.2, DisplayPort is a superset of eDP. And this is not just theory, people actually tried running iPad eDP screens off their graphics cards' DP outputs. And guess what? With DP 1.1 that didn't work, but with DP 1.2 that worked with minimal circuitry.

some of those specifications certain hardware is needed,

All I'm saying is that if your hardware already supports VRR via eDP, then your hardware has everything it needs for supporting VRR via DP.

Your last statement just proves my point. Of course you get can use Freesync with a Ryzen APU, All APU's use the eDP standard, that's why they work with Adaptive sync.

It just proves that FreeSync/Adaptive-Sync on DP and FreeSync/Adaptive-Sync on eDP are functionally the same.

That's why when freesync was been launched people wondered why the 7970 and 7950 didn't support it but cheap Kaveri APUs did. They are both first generation GCN

You are wrong here too. Kaveri is second generation GCN. There are no APUs of first generation GCN (Bobcat is VLIW5, Trinity/Richland are VLIW4, and Kaveri/Kabini are GCN2).

Also, your claim that the 7970 doesn't support FreeSync is false. It supports FreeSync for video playback, but not for gaming.
