Twisted Kidney

Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

It is shit, but I don't see anybody selling hardware that offers good framerates for native 4K.

> It is shit, but I don't see anybody selling hardware that offers good framerates for native 4K.
>
> So we're kind of where we are at with that.

I'd rather play at 1440p than play with some bizarre simulated "Vaseline smear" filter in the form of DLSS, but I get you.

> I'd rather play at 1440p than play with some bizarre simulated "Vaseline smear" filter in the form of DLSS, but I get you.

It's why I stay at 1440p: every aspect of it is cheaper, and if you have to render at 1440p and upscale to 4K to make 4K playable, then why not just play at 1440p and be done with it?
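For anyone who wants the numbers behind "render 1440p, upscale to 4K", here is a quick sketch. It assumes the usual 2560x1440 and 3840x2160 resolutions, and the function names are made up for illustration.

```python
# Pixel-count arithmetic for rendering at 1440p and upscaling to 4K.
# Resolutions are the common 16:9 definitions; names here are illustrative only.
RESOLUTIONS = {
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def upscale_summary(render, output):
    """Compare how many pixels are rendered vs. how many are displayed."""
    rendered = pixel_count(render)
    displayed = pixel_count(output)
    axis_scale = RESOLUTIONS[output][0] / RESOLUTIONS[render][0]
    return {
        "rendered_pixels": rendered,
        "output_pixels": displayed,
        "axis_scale": axis_scale,
        "pixel_ratio": displayed / rendered,
    }


summary = upscale_summary("1440p", "4K")
print(summary)
```

The output frame has 2.25x as many pixels as the GPU actually rendered, and the upscaler has to fill in that difference. That gap is what the whole argument is about.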

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

The technology is interesting (to me) as a “this is just cool on a maths, programming and proper hw/sw vertical-stack implementation” thing, but the application to gaming is total horseshit.

> The technology is interesting (to me) as a “this is just cool on a maths, programming and proper hw/sw vertical-stack implementation” thing, but the application to gaming is total horseshit.
>
> I am impressed how nvidia is able to pull off combining bleeding edge research, tight hardware + software integration and optimization, and roll all this out in a way that gamedevs can actually play with it and we can see the outcome (good or bad) in games launching soon (tm). Neither intel nor AMD have managed this even once in recent memory (maybe in a very very minor way AMD did this with HDR+AA on the HD2900 series way back when iirc?).
>
> Still hate how this comes at the expense of proper research and development into gfx cores and raster perf though haha.

I think AMD and Nvidia realized early on that raster performance wouldn't get them there and they both had to work on alternatives.

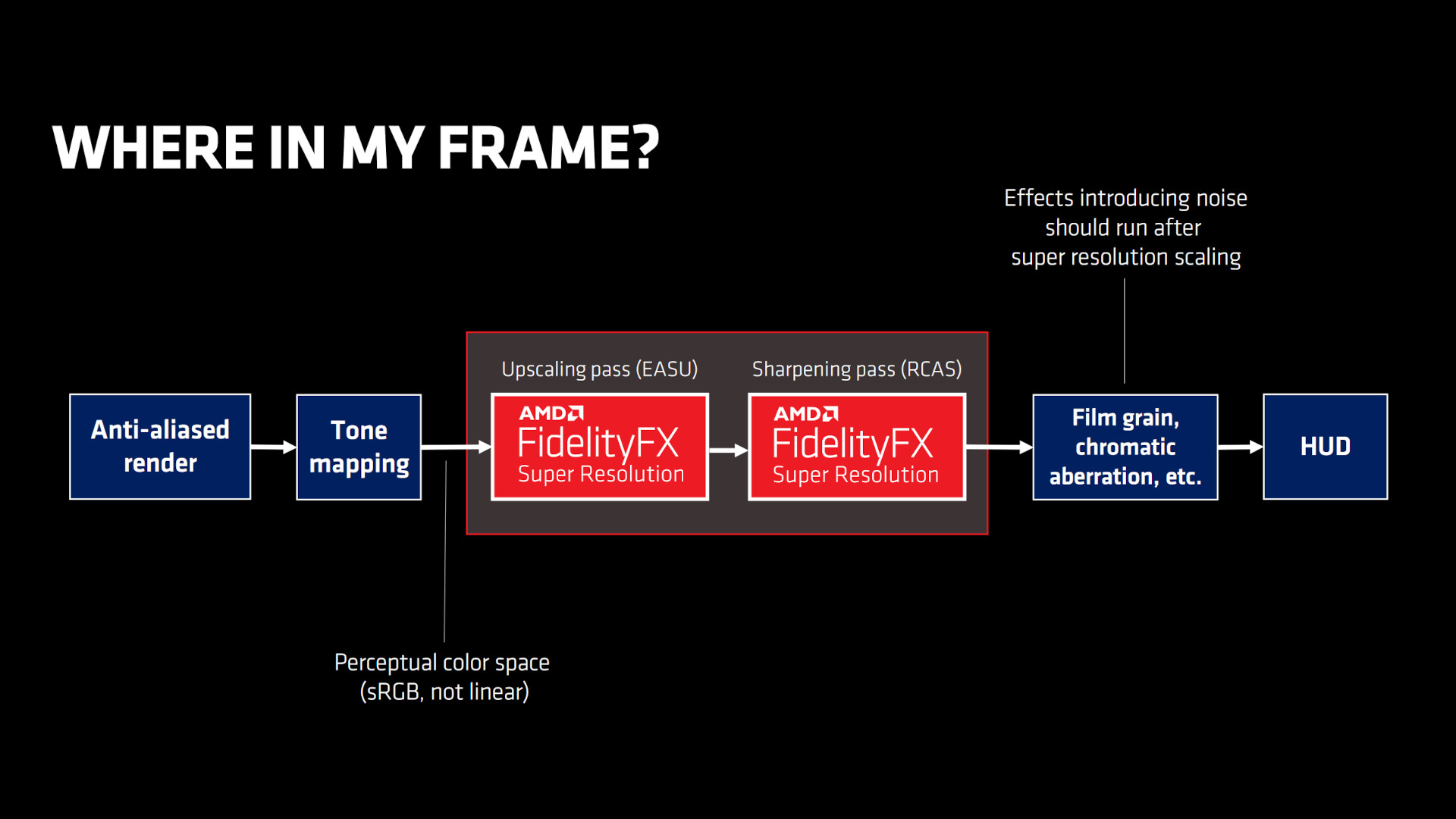

> Yeah I think what people may not realize is that FSR is basically using and improving on decades of university research into general upscaling algorithms. It’s basically taking ye-olde “turn 1 pixel into 4 pixels” and accelerating this on a GPU in “real-time”. Obviously I’m oversimplifying the actual algorithms and complexity here, but this is why FSR “just works” everywhere.
>
> Nvidia's DLSS requires that an ML model is pre-trained by the game developer. Basically (this is really oversimplified) you’re pre-generating a set of patterns and situations which get processed at runtime based on your current frame and so you can “predict” possible outcomes and pull them from your model. Obviously much more complicated and smarter, but fundamentally the tensor cores are just “generating” potential frames and then selecting the best-fit. This is why you get some strange glitching around reflections in water and non-deterministic scenes (or the devs just didn’t create and train a proper model).
>
> I personally think nvidia went this route because they have tensor cores to sell (these are much more lucrative in the compute AI enterprise markets) and proper gfx cores are becoming less and less important. I also think it’s overall stupid to try to merge AI compute and gaming product lines like this but whatever - I’m a lowly engineer and not a CEO so what do I know.

AMD doesn't even hide it, it's in their own slides. The FidelityFX system uses what they call EASU, which is their image upscaling pass; it uses a combination of 2 or 3 different well-known upscaling methods to generate the image. Then it performs its RCAS pass, which again uses 2 or 3 different well-known and researched sharpening algorithms to sharpen the image.
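A rough sketch of the two-pass idea behind the EASU and RCAS passes (upscale first, sharpen second). To be clear, this is not AMD's code: plain bilinear interpolation stands in for EASU, a basic unsharp mask stands in for RCAS, and all function names are made up for illustration. It works on a tiny grayscale image stored as nested lists with values in 0..1.

```python
# Toy "upscale, then sharpen" pipeline on a grayscale image (nested lists, 0..1).
# Stand-ins only: bilinear interpolation instead of EASU, unsharp mask instead of RCAS.


def upscale_bilinear(img, out_w, out_h):
    """Spatial upscaling pass: resample the image to out_w x out_h."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        sy = min(max((y + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1.0)
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            sx = min(max((x + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1.0)
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            fx = sx - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def sharpen(img, amount=0.5):
    """Sharpening pass: push each pixel away from the average of its 4 neighbours."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            up = img[max(y - 1, 0)][x]
            down = img[min(y + 1, h - 1)][x]
            left = img[y][max(x - 1, 0)]
            right = img[y][min(x + 1, w - 1)]
            blur = (up + down + left + right) / 4.0
            value = img[y][x] + amount * (img[y][x] - blur)
            row.append(min(max(value, 0.0), 1.0))  # keep the result in 0..1
        out.append(row)
    return out


def upscale_then_sharpen(img, out_w, out_h, amount=0.5):
    """Run both passes in order, the same shape as the pipeline described above."""
    return sharpen(upscale_bilinear(img, out_w, out_h), amount)


low_res = [[0.0, 1.0], [1.0, 0.0]]
high_res = upscale_then_sharpen(low_res, 4, 4)
for row in high_res:
    print([round(v, 2) for v in row])
```

Nothing in here needs training data or motion vectors, only the current frame, which is the point being made about why this kind of spatial upscaler can run anywhere.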

> I'd rather play at 1440p than play with some bizarre simulated "Vaseline smear" filter in the form of DLSS, but I get you.

I dunno what DLSS you all have been using, but at 4K, DLSS Quality looks better than native with TAA applied and I get a frame boost on top of it. Now Balanced and Performance, ok, you CAN notice for sure, but Quality is damn near perfect in every game I have played and my preferred method of AA now.

> The big takeaway for me regarding DLSS 3 is the increased latency. A lot of people told me that Reflex would resolve this issue; that doesn't seem to be the case. I would rather use actual native frame rates at a lower frame rate than use this as it stands. Seems like an interesting start, but it reminds me more of DLSS 1.0 in terms of where it's at currently: it's a beta that's not ready for real time. DLSS Quality I think is cool and works well in most situations, and sometimes looks better than native with TAA or other AA techniques, but rarely.
>
> It also begs the question, are the 4080 cards fast enough for people to pay a premium? Or are people going to balk at the price and not see DLSS 3.0 as a reason to buy one? I'm kind of in the latter group at the moment.

Everything I have seen has shown latency to be equal to or better than native with DLSS 3. But yes it has higher latency than DLSS 2. But if it's still better than native does it matter? Or have I missed one of the reviews where it is showing DLSS 3 to have higher latency than native?
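A toy model of why both sides of the latency argument can be right. The assumption here is that frame generation interpolates between two rendered frames, so it has to hold the newest rendered frame back by roughly one rendered-frame interval. The frame rates are hypothetical, not taken from any review, and real input latency has many more components than this.

```python
# Toy latency model: latency ~ one rendered-frame interval, plus one more
# interval if frame generation is holding a frame back for interpolation.
# All numbers are hypothetical and for illustration only.


def frame_time_ms(fps):
    """Time between rendered frames, in milliseconds."""
    return 1000.0 / fps


def toy_latency_ms(rendered_fps, frame_generation=False):
    """Very rough latency estimate based only on the rendered frame rate."""
    latency = frame_time_ms(rendered_fps)
    if frame_generation:
        # Assumed cost of waiting for the next rendered frame before interpolating.
        latency += frame_time_ms(rendered_fps)
    return latency


native = toy_latency_ms(40)                            # native 4K, hypothetical 40 fps
upscaled = toy_latency_ms(100)                         # upscaling only, hypothetical 100 fps
generated = toy_latency_ms(100, frame_generation=True)  # same render rate plus generated frames

print(f"native: {native:.1f} ms, upscaled: {upscaled:.1f} ms, generated: {generated:.1f} ms")
```

With these made-up numbers, frame generation lands above upscaling alone but still below native, which matches both the "higher than DLSS 2" and the "equal to or better than native" claims. Different frame rates would shift that balance.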

> Everything I have seen has shown latency to be equal to or better than native with DLSS 3. But yes it has higher latency than DLSS 2. But if it's still better than native does it matter? Or have I missed one of the reviews where it is showing DLSS 3 to have higher latency than native?

Check out the HUB video above, seems there are some latency issues. Edit: here's the video again, it's on the previous page. Check out the latency section.

> I dunno what DLSS you all have been using, but at 4K, DLSS Quality looks better than native with TAA applied and I get a frame boost on top of it. Now Balanced and Performance, ok, you CAN notice for sure, but Quality is damn near perfect in every game I have played and my preferred method of AA now.

I wouldn't go that far. Native 4K looks better than using DLSS Quality. No, it isn't that much better, but it is better. Now I am not saying it isn't worth using either, as in Cyberpunk I do use DLSS Quality at 4K to get a better framerate. But I just know it is a slight decrease in IQ.

> I dunno what DLSS you all have been using, but at 4K, DLSS Quality looks better than native with TAA applied

Anything looks better than TAA.

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

I wouldn't be so harsh. More of a wet fart.

> Imagine getting mad over an optional feature you don't have to enable.

I don't think anyone is mad about it, they're just not happy with what it is currently. Nvidia is presumably charging a premium, acting like DLSS 3 makes their new cards exponentially faster than the previous generation. That's just clearly not the case. The 4090 is still an impressive generational uplift; I just think the disappointment is really going to settle in when the 4080 series doesn't have much of a generational uplift without DLSS 3.

> I wouldn't go that far. Native 4K looks better than using DLSS Quality. No, it isn't that much better, but it is better. Now I am not saying it isn't worth using either, as in Cyberpunk I do use DLSS Quality at 4K to get a better framerate. But I just know it is a slight decrease in IQ.

Seeing as most games use either TAA or FXAA, DLSS Quality is always a better option for getting rid of aliasing, as it disables the other forms of AA. The only time I would say "native" is better is if MSAA is an option, but I rarely see that in games anymore. The last game I played that had it was Forza Horizon 5, and it does look damn good and play smooth.

> Seeing as most games use either TAA or FXAA, DLSS Quality is always a better option for getting rid of aliasing, as it disables the other forms of AA. The only time I would say "native" is better is if MSAA is an option, but I rarely see that in games anymore. The last game I played that had it was Forza Horizon 5, and it does look damn good and play smooth.

I would agree with this. I also think MSAA is rarely an option because it has such a massive performance hit. Still though, I would like to see games include it.

> Imagine getting mad over an optional feature you don't have to enable.

What a copout. Nvidia blew up DLSS 3 into something it frankly isn't, to the point it almost felt like they were trying to sell it, not a GPU.

The technology is interesting (to me) as a “this is just cool on a maths, programming and proper hw/sw vertical-stack implementation” thing, but the application to gaming is total horseshit.

I am impressed how nvidia is able to pull off combining bleeding edge research, tight hardware + software integration and optimization, and roll all this out in way that gamedevs can actually play with it and we can see the outcome (good or bad) in games launching soon (tm). Neither intel nor AMD have managed this even once in recent memory (maybe in a very very minor way AMD did this with HDR+AA on the HD2900 series way back when iirc?).

Still hate how this comes at the expense of proper research and development into gfx cores and raster perf though haha.

But to be completely fair, the 4090 isn’t a slouch at 4K raster perf either.

> I would agree with this. I also think MSAA is rarely an option because it has such a massive performance hit. Still though, I would like to see games include it.

This is taken from the Techspot website, where you can see the quality difference. It's slight, but it easily looks better in native 4K; even in their conclusion they say as much. Now, DLSS Quality is a damn good thing, but you can't be spreading FUD around saying it's better than native when it's not in any way.

> This is taken from the Techspot website, where you can see the quality difference. It's slight, but it easily looks better in native 4K; even in their conclusion they say as much. Now, DLSS Quality is a damn good thing, but you can't be spreading FUD around saying it's better than native when it's not in any way.
>
> https://static.techspot.com/articles-info/2165/images/F-14.jpg
>
> https://static.techspot.com/articles-info/2165/images/F-15.jpg

When did that even start? I remember DLSS used to be talked about as a pretty worthwhile compromise. Then suddenly it shifted to gift from God, "better than native resolution" status.

> That is a fair assessment. As far as cutting edge GPU features... I mean IMO you probably have to go back even further. Truform was 100% ATI in 2001... one of the few real game changing bits of research turned practical that AMD/ATI was directly responsible for. Sort of like RT in a lot of ways, in that it didn't really get into a proper hardware form and get any real software traction till the HD2900.... and AMD including the tech in the Xbox 360. Everyone takes Tessellation for granted now.
>
> To be fair to AMD though... on the software side they have focused on some pretty practical things aimed at raster and quality of life. Image sharpening, FSR, Chill, Anti Lag. They have done a pretty good job of implementing them all driver side and making it easy for users to choose when it makes sense to use which settings. I actually like Image Sharpening in a few old titles, FSR Quality mode is actually not bad for a handful of games that push hardware more, and Chill is a feature Nvidia should ape but never has. I guess slowing the card down on purpose is anathema to their philosophy of win all the FPS benchmarks always. Anti Lag is awesome for esports type stuff if you play any of it.
>
> None of it is flashy new research turned into a selling bullet point. I would say though that all those things are far more practical than even high end RT is for the time being anyway. A lot of Nvidia users think I'm a crazy shill when I say AMD's drivers are superior... and I don't know, perhaps I am, but they are superior. AMD has made QOL for gamers a priority... in a way Nvidia really has not.

Unless I’m totally wrong - all of those were reactionary based on nvidia pushing the envelope first though, right? This bothers me about AMD a bit, but I understand why things are the way they are - AMD is really treading water with a fraction of the personnel that nvidia has just to keep up. There’s a positive to that in that they are much more mobile and there’s less inertia to pivot quickly.

> Unless I’m totally wrong - all of those were reactionary based on nvidia pushing the envelope first though, right? This bothers me about AMD a bit, but I understand why things are the way they are - AMD is really treading water with a fraction of the personnel that nvidia has just to keep up. There’s a positive to that in that they are much more mobile and there’s less inertia to pivot quickly.

I'm pretty sure Nvidia doesn't have a feature similar to Radeon Chill (hands down my favorite driver feature ever... I play a few games now that my GPU doesn't even have to turn the fan on for, and it's not noticeable). FSR I guess you could call reactionary, sort of... as others have said, it's iterative tech, and I'm not so sure it wouldn't have found its way into their software anyway. Regardless, it's the superior feature... as cool as DLSS is in theory, FSR actually solves a real issue. DLSS was invented to sell new hardware (which continues with Version 3)... FSR actually extends the life of old hardware. That is a pretty big difference in mission.

> When did that even start? I remember DLSS used to be talked about as a pretty worthwhile compromise. Then suddenly it shifted to gift from God, "better than native resolution" status.

It can handle the motion of small, sub-pixel details more smoothly than native TAA when implemented properly. Things like grass, hair and wire lines CAN look better than native in some games.

> When did that even start? I remember DLSS used to be talked about as a pretty worthwhile compromise. Then suddenly it shifted to gift from God, "better than native resolution" status.

DLSS Quality is really good and should be used. I actually think it’s a fantastic feature. And at 4K+ resolutions it was needed to get decent frames above 60fps. Now with the 4090 that shouldn’t be an issue at 4K native. But using DLSS 2.0 on a 4090 at 4K would ensure you're getting over 100fps easily, which would be a godsend in my eyes.

> DLSS Quality is really good and should be used. I actually think it’s a fantastic feature. And at 4K+ resolutions it was needed to get decent frames above 60fps. Now with the 4090 that shouldn’t be an issue at 4K native. But using DLSS 2.0 on a 4090 at 4K would ensure you're getting over 100fps easily, which would be a godsend in my eyes.

Right. I'm only wondering about the "better than native res" talk.

> Right. I'm only wondering about the "better than native res" talk.

Bad textures look better, good textures look about the same, textures in motion get interesting.

> Hyperbole aside, a modifier/filter on a game's graphics is always going to be subjective, and DLSS of any level still just boils down to an option to A/B compare and see if it happens to work better for your particular mix of GPU/Monitor/game. I don't really care how any optional feature is ever marketed since it still comes down to doing one's own testing in the end.

That sums it up. It's subjective. I like sharpening in some games but it isn't the intended look. I like it... others may not. The same has been true with AA methods going back long before DLSS and FSR tech.

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

I mean, yeah, it's not an image enhancer, it's a way to get better framerate with minimal* image quality loss.

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

Completely agree... But you are understating how bad it is IMO.

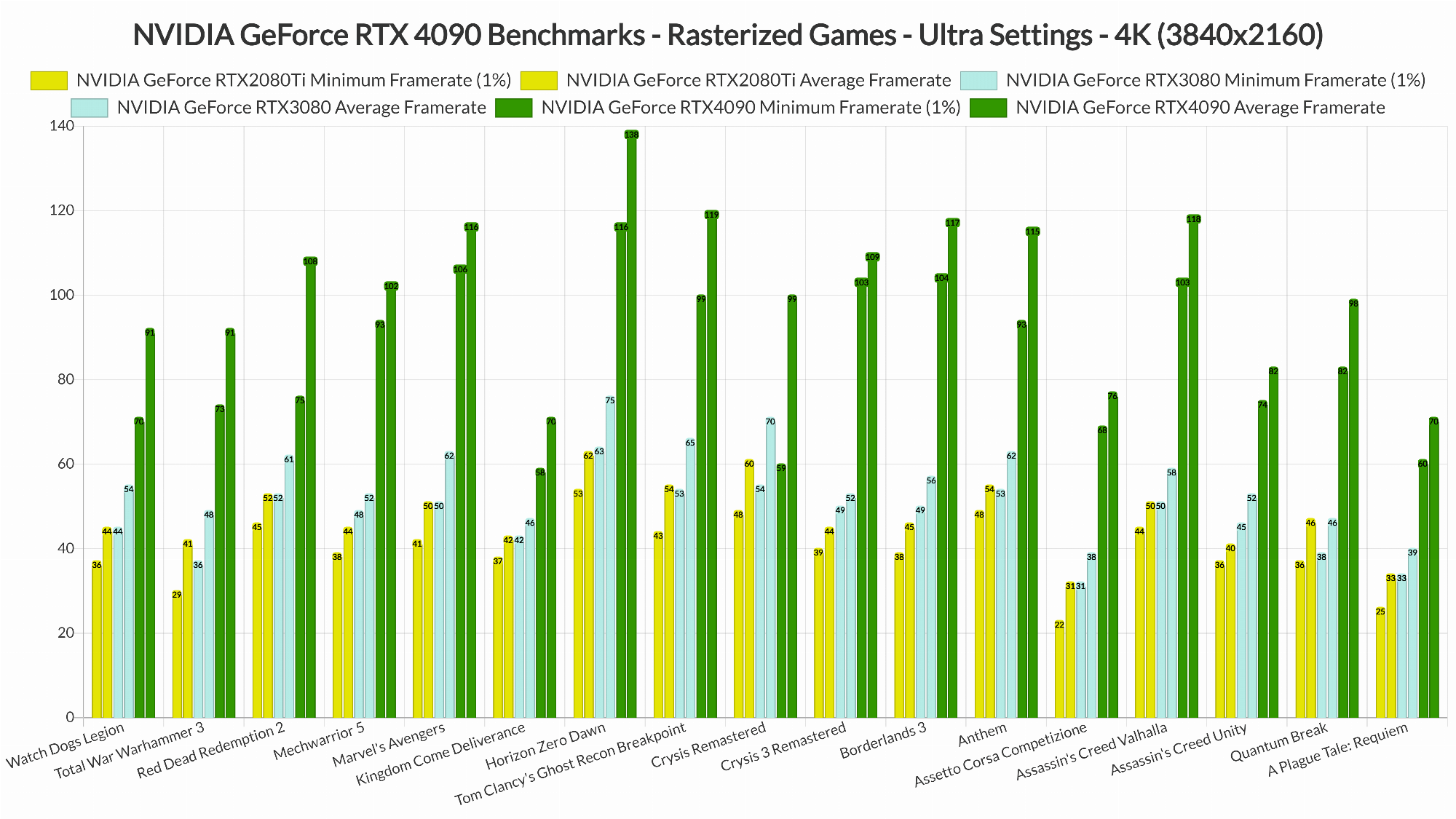

> It is shit, but I don't see anybody selling hardware that offers good framerates for native 4K.
>
> So we're kind of where we are at with that.

Have you seen the reviews of the RTX 4090 cards? 4K looks pretty crazy playable to me.

> It is shit, but I don't see anybody selling hardware that offers good framerates for native 4K.
>
> So we're kind of where we are at with that.

A lot of people were playing a lot of games with good FPS before last week (maybe just not at ultra settings), but that does not sound up to date:

> A lot of people were playing a lot of games with good FPS before last week (maybe just not at ultra settings), but that does not sound up to date:
>
> View attachment 518247
>
> A collection of titles that are not the easiest to run, at ultra settings, at 4K, often above 100 fps average

I saw those sorts of numbers and thought that was with DLSS on. I am surprised to see Anthem on that list. I'm sad every time I think of that game because I really liked it, and it was so good, they just fucked it up so hard...

> Am I the only person on earth that thinks DLSS, ALL DLSS, is a bit shit?

Have you heard of Frame Generation?

> Have you heard of Frame Generation?

I want to see side by side output quality comparisons with it disabled and enabled.