How about making powerful sub-$300 GPUs a thing again? I can't justify 2080 pricing if I'm not making money off the thing, and I don't mean mining.

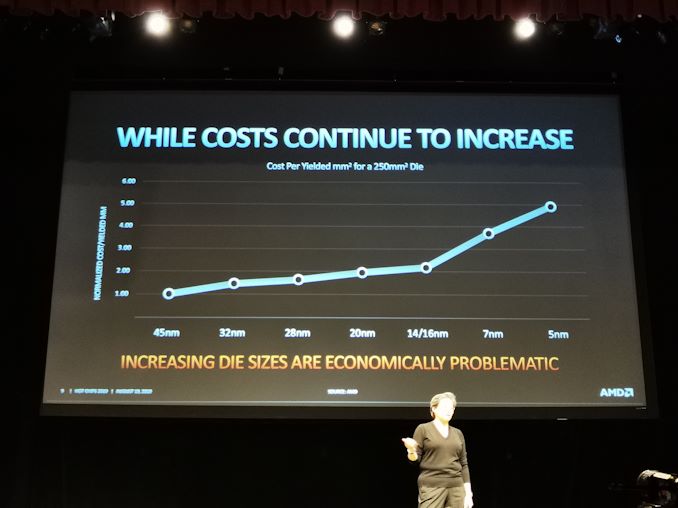

Unfortunately, old school silicon economics have been upended.

There are no more easy gains like in the days when die shrinks cut transistor costs in half; now transistor costs are flat, or even potentially increasing, with each die shrink.
