GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,768

lol


The "New High in Low Morality" sounded like he would be preaching, and making some pretty crazy claims...

I assume it's the same complaints everyone else has been making for the last week.

Adored is an AMDrone. AMDrones hang on to his every word. You're probably making a smart decision.

Lol are we really coming back to "AMDrone" and "Nvidiots"? Guess that means competition is back.

AMDrone is a highly scientific term. Nvidiot is a term made up by them.

I don't want to give him a view/click, can someone summarize it.

Nvidia is gonna bend you over for sex 2-4x better than you’ve had in the past. It’ll be in an orifice you don’t normally like to have sex with but you will smile and enjoy it because, well, it’s Nvidia.

The "New High in Low Morality" sounded like he would be preaching, and making some pretty crazy claims...

Does nVidia use child labor? (Apple and Nike do, so, maybe?) Does nVidia invade countries? Does nVidia force you to have that baby??

Gonna say probably not.

Do they sell for prices we don't like? Sure. Don't see the moral question here.

So clickbaity video, not gonna click. Guy needs a new job, as the "morality" of his is questionable. Irony right there, or more likely hypocrisy.

This forum would not exist without it. Careful what you wish for, ya?

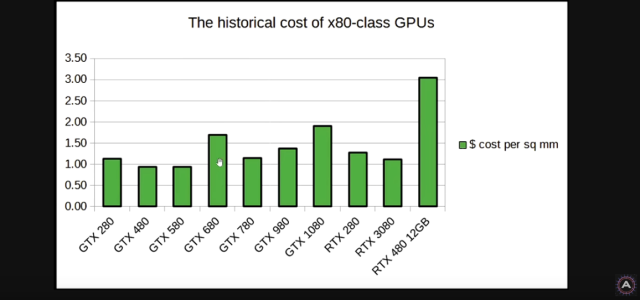

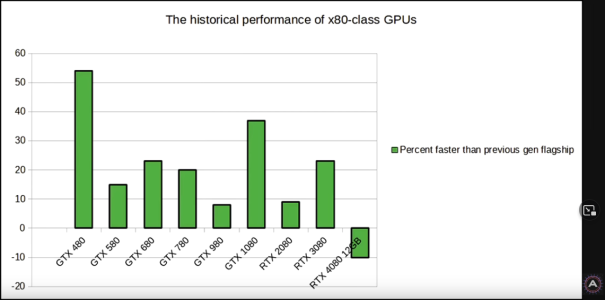

You're right, morality is not the right word. Ethics would be a more appropriate word. Everything he says in the video is true though, and he presents hard-hitting facts/info that PC tech channels like LTT, GN, HWU, and Jay have not comprehensively presented so far. Essentially he details the history of performance, MSRP, cost (to Nvidia), and other important metrics comparable from generation to generation, and shows that the 4090/4080 series value is the worst in 80-series history, going all the way back 14 years to when the first 80-series, the 280, was released. Simultaneously, Nvidia will maintain its historically high profit margins, regardless of whatever margins the retailers/AIBs are making. The "increased costs" and "unprecedented performance increases" supposedly justifying the MSRPs are Nvidia BS.

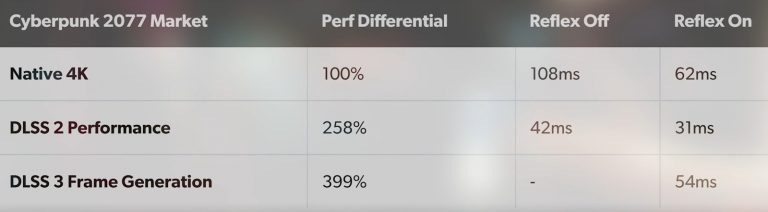

It's new technology. It has nothing to do with morality. Don't buy the product if you don't like it. Every release it's always something that's EVIL. SMDH.

Correct. There is very little "morality" in business. You make a product, you sell it, you make a profit, you build a new product. Morality does not play into any of it.

We'll see how much latency there really is, but I tend to have a glass-half-full approach; to me, DLSS is more about making a 1080p or 1440p game look better without tanking your frame rate.

I get a lot of people are butthurt over the prices, and they are meant to be. Nvidia has intentionally made the pricing on the 4000 series unattractive; they need consumers to choose the leftover 3000 series stock over the 4000 series. That high pricing is completely intentional and Nvidia didn't hide that they were going to do this. They stated it very plainly when they said they were going to shift the market accordingly, and all the forums were up in arms about Nvidia "manipulating the market". This is them manipulating the market, as they publicly stated, to clear out the 3000 series overstock.

GPUs on 150nm nodes were much simpler to design, or even 65nm which the GTX280 was built on. He utterly ignores the orders of magnitude by which complexity has grown over the same timespan he discusses, either out of stupidity or on purpose. If it is the former, he has no business trying to make any ethical judgement on shit he understands only at the surface, most basic level. If it's the latter, he's a hypocrite. Either way, not getting any views from me.

Complexity = new capabilities + more speed

GTX280 = 1.4 Billion Transistors - Features: unified shaders, texture mapping units, render output units, 0.933 Tflops processing power

Features added in the GPUs in between: CUDA cores, shader processors, improved power management, GPU Boost (automatic overclocking when temp and power allow it), parallelized instructions, faster encoding, newer PCIe, PureVideo feature set, Dynamic Super Resolution, Delta Color Compression, Multi-Pixel Programming Sampling, Nvidia VXGI (Real-Time-Voxel-Global Illumination), VR Direct, Multi-Projection Acceleration, Multi-Frame Sampled Anti-Aliasing (MFAA), Async compute, G-Sync, Hardware Raytracing, Deep Learning Super Sampling, newer video port technologies such as DisplayPort. All along the way, these technologies have been refined and improved upon. And this is just what I skimmed from Wikipedia, there's probably more that is not listed.

AD102 = 76.3 Billion Transistors - 1,321 Tensor-TFLOPs, 191 RT-TFLOPs, and 83 Shader-TFLOPs. This total is 1710x the GTX280. If you leave out the Tensor and RT processing power and only include the Shader processing power, it's 89x the GTX280's processing power.

Over 54x the transistors in nearly the same die size.

My 'Ethics' analysis: RTX 4080 16GB card is $1199, that is $904 in 2008 dollars. GTX 280 launched at $649. So for 1.39x the price in inflation-adjusted dollars, you get 89x the shader processing power, tons of new features, some pretty amazing ones. Oh the Morality of it! Oh the Ethics of it! What will we ever do??!?! Why isn't it 1x the price? Let's make a video!

For all of the billions spent on R&D to make a modern GPU possible, 1.39x cost increase doesn't feel immoral or unethical.

But let's ignore all of that and preach the Heavenlies of AMD and the Evils of nVidia!

(it gets more clicks, and/or I am paid to make these videos by AMD)

Edit: corrected the gtx280 tflops
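For anyone who wants to check the arithmetic in that post, here is a minimal Python sketch that takes the quoted figures at face value. The constant names are made up for illustration, the $904 figure is the post's own inflation adjustment (not recomputed here), and the post pairs the big-chip throughput numbers with the $1199 card price, so treat the result as a restatement of its math, not a benchmark.

```python
# Figures as quoted in the post above; none are independently verified here.
GTX280_TFLOPS = 0.933          # shader TFLOPs
GTX280_TRANSISTORS_B = 1.4     # billions of transistors
GTX280_LAUNCH_USD = 649        # launch price, 2008 dollars

NEW_SHADER_TFLOPS = 83
NEW_RT_TFLOPS = 191
NEW_TENSOR_TFLOPS = 1321
NEW_TRANSISTORS_B = 76.3
NEW_PRICE_2008_USD = 904       # the post's inflation-adjusted value of $1199


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


shader_ratio = ratio(NEW_SHADER_TFLOPS, GTX280_TFLOPS)
total_ratio = ratio(
    NEW_SHADER_TFLOPS + NEW_RT_TFLOPS + NEW_TENSOR_TFLOPS, GTX280_TFLOPS
)
transistor_ratio = ratio(NEW_TRANSISTORS_B, GTX280_TRANSISTORS_B)
price_ratio = ratio(NEW_PRICE_2008_USD, GTX280_LAUNCH_USD)

# Shader throughput gained per inflation-adjusted dollar, relative to 2008.
shader_per_dollar = shader_ratio / price_ratio

print(f"shader throughput: {shader_ratio:.0f}x")
print(f"all TFLOPs summed: {total_ratio:.0f}x")
print(f"transistors:       {transistor_ratio:.1f}x")
print(f"price (2008 USD):  {price_ratio:.2f}x")
print(f"shader per dollar: {shader_per_dollar:.0f}x")
```

Swapping in different TFLOPs or price figures only means changing the constants at the top.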

And AMD is to be commended on those consoles. They punch above their weight class; I would take a PS5/XBox over any $600 gaming PC easily.

We'll see how much latency there really is, but I tend to have a glass-half-full approach; to me, DLSS is more about making a 1080p or 1440p game look better without tanking your frame rate.

Having said this, NVIDIA is definitely hitting a wall in terms of performance and efficiency. It's a bit funny to hear some hardcore PC gamers calling for the death of consoles (and competition, and variety) when you can buy a modern console and a TV for the same price as an RTX 4080. Get back to me when there's an RTX 4060... which hopefully won't cost more than a PS5.

PC high end definitely performs head, neck, and shoulders above consoles, but at the low-to-mid range, the PS5 and Xbox Series X stand alone. It would seem PC component manufacturers have ceded the low/mid arena to consoles.

We'll know if DLSS 3 is a giant ruse soon enough - just 2 more weeks until the review embargo lifts. I feel pretty confident that if latency is an issue, the major tech reviewers will be all over it.

Yeah I'm going to guess it won't be. Nvidia seems to be pretty aware that lower latency is important to some (as their work on Reflex shows) but a small increase in latency for better RTX visuals would certainly appeal to a large group as well.

Then it'll come down to support. Nvidia usually isn't afraid to throw money at things, so then it'll come down to which titles support it and when.

The big question is: does the latency added by frame generation get countered enough by Reflex that the final result is less than or equal to the latency if neither was used? Because if the two combined are equal to using neither, then it's a zero-sum argument and not an issue.
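The break-even question about frame generation and Reflex boils down to one inequality, sketched below in Python. The function names and the millisecond values are hypothetical placeholders, not measurements; real numbers would have to come from reviews once the embargo lifts.

```python
def net_latency_ms(baseline_ms: float, frame_gen_penalty_ms: float,
                   reflex_saving_ms: float) -> float:
    """Latency with both features on: baseline plus the frame generation
    penalty, minus whatever Reflex claws back."""
    return baseline_ms + frame_gen_penalty_ms - reflex_saving_ms


def breaks_even(baseline_ms: float, frame_gen_penalty_ms: float,
                reflex_saving_ms: float) -> bool:
    """True when the combined result is no worse than using neither feature."""
    combined = net_latency_ms(baseline_ms, frame_gen_penalty_ms,
                              reflex_saving_ms)
    return combined <= baseline_ms


# Placeholder numbers purely for illustration (NOT measured values).
print(breaks_even(50.0, 10.0, 12.0))  # Reflex saves more than frame gen adds
print(breaks_even(50.0, 10.0, 5.0))   # Reflex saves less than frame gen adds
```

Put this way, the baseline cancels out: it is a non-issue exactly when the Reflex saving is at least as large as the frame generation penalty.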

Hell, I'd take one over more expensive PCs than that! To have a clear edge over a PS5 or XSX, I'd say you need a fairly hefty GPU investment.

A $600 gaming PC would probably have to be full of used parts to be worth a damn. Either that or using shaky #'s that don't count expenses like the cost of a Windows license, KB/M/Gamepad, fans, case, etc.

The days of low-end PC's besting consoles are over for the moment.

I'm ok with Nvidia making money. In fact, I'm GREAT with Nvidia making money, because that money gives them resources to build newer and better products. The dilemma that we are facing with the RTX 4000 cards is "What is a frame?". Higher framerates and higher image quality have always been the goal, but in the last several years, "lower latency" has also asserted itself as an important factor, especially as esports takes hold in modern culture.

If GPU technology is improving the way it's supposed to, you will get a combination of these items:

- Higher framerates

- Higher image quality

- Lower latency

The problem with the RTX 4000 series is that it has introduced a feature that creates higher framerates, mildly impacts image quality negatively, and keeps latency the same as it was, or even increases it. This trend started with the introduction of the RTX 2000 cards and DLSS which, at first, degraded image quality in order to increase framerates and lower latency. So... if we're getting higher framerates at the expense of image quality and latency, is it really a step forward? I honestly don't think it is.

It feels like Nvidia has hit a plateau from a design/innovation standpoint and is now trying to trick consumers into thinking they are still making advancements. On top of this, they are tagging us with insane pricing for these new products. I'm all for technology moving forward, but the RTX 4000 cards feel like a solid step to the side, not a super tangible increase. The RTX 4090 looks like it's going to be a solid step up, but everything else is very... meh.

Correct me if I'm wrong on this.


It's even easier for me.

Yes, because I see so many preaching the wonderfulness of AMD's prices (sans the somewhat sane 6600 for the past 3 months). Never mind that the market didn't want to pay AMD's prices either, even when they were available and $200-400 cheaper at the high end for over a year now compared to Ampere cards.

I care not for Nvidia's complexities and problems with R&D just as they care not about my gpu budget. But hey, run me the numbers on a $379 1070 in 2016 (that really was $400-450 for AIB models, more for the founders NV brand) that is now $899. What was the GTX970 in 9/2014? Oh yeah, $330-350.

View attachment 514959

Complexities, man. It's all about them complexities...of profit margin.

View attachment 514970

This. In the most basic form of capitalism, corporations exist to earn a profit and maximize shareholder wealth. In that regard, corporations are no more evil than animals in nature who savagely kill other animals to eat them. The upside to the consumer is innovation to earn their dollars. Without capitalism, we wouldn't have a grocery store full of foods from all over the world and we sure wouldn't have graphics cards for playing video games.

Let's apply that line of thinking to CPUs. The P4 EE 3.4 GHz was a single core / Hyper Threading chip that sold for $999 in 2004, so the 32 thread 13900K should cost about $25,000 today. Intel stockholders should be pissed.

A 2003 Pentium EE had around 178 million transistors; a 12900K has around 12,400 million, or about 70 times more.

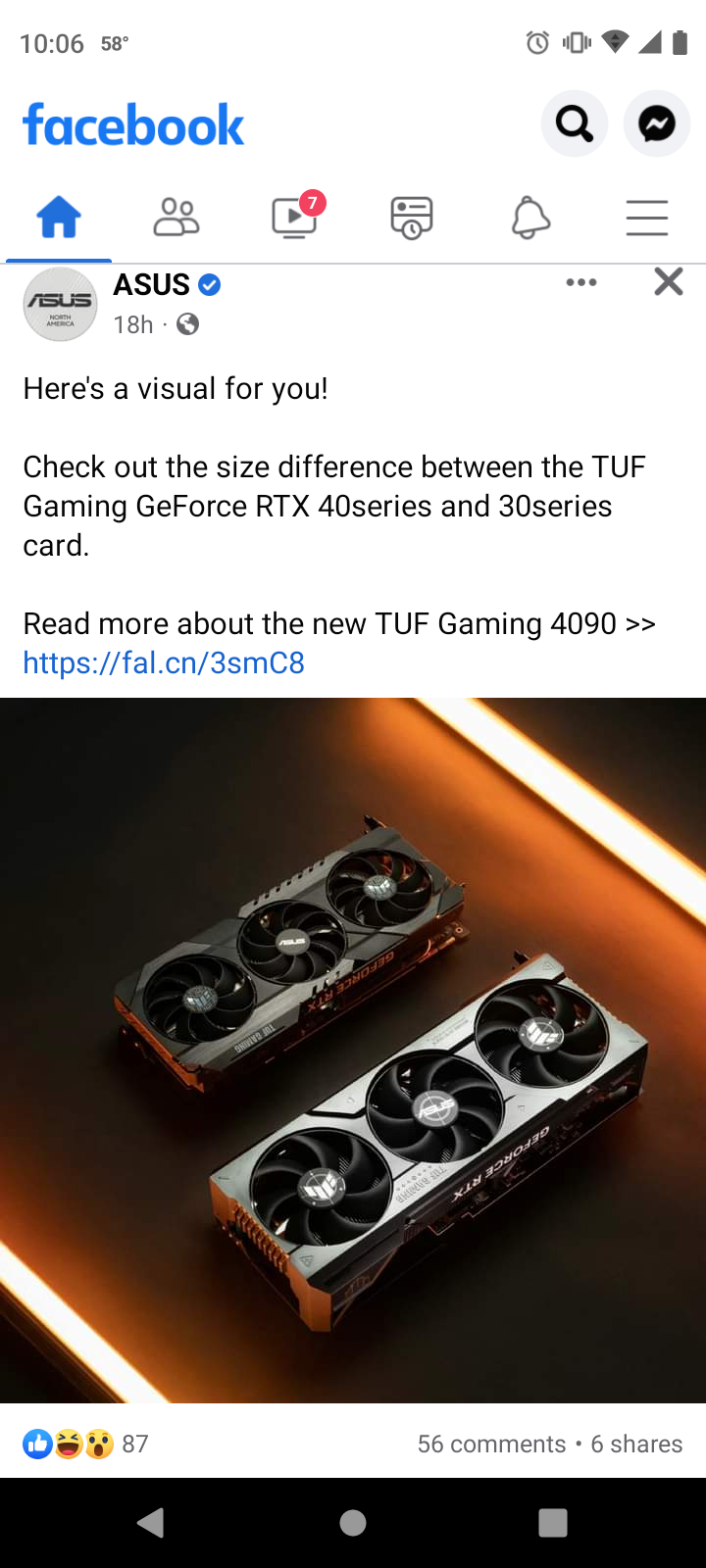

Geez, that's comically large. I think I'll go with an AIO cooler this time.

I know right… nucking futs.

Looks like if I'm upgrading to 4xxx, I'd be kissing my mini-ATX cases goodbye. The performance of the new cards looks...nice...but for what purpose? So I can get 4k 100+ FPS instead of 4k 95 FPS in Spiderman: Remastered? Back in the day, graphics in software were improving at roughly the same rate as GPU horsepower. But now? Software developers have no use for that much GPU power. SLI has been dead/redundant for quite a long time now (and I remember having to convince people it was dead a few years back). We're entering a new ballgame. I don't blame the developers; they're just trying to target and accommodate the common consumer rather than the enthusiast, and the buy-in on a regular GPU has increased an incredible amount over the last 5 years.

I really think that GPU’s are going to have to go the AIO route and cases are going to need to adapt to accommodate. GPU’s draw more than the rest of the system combined and things will have to change accordingly.