TaintedSquirrel

[H]F Junkie

Joined Aug 5, 2013 · 12,689 messages

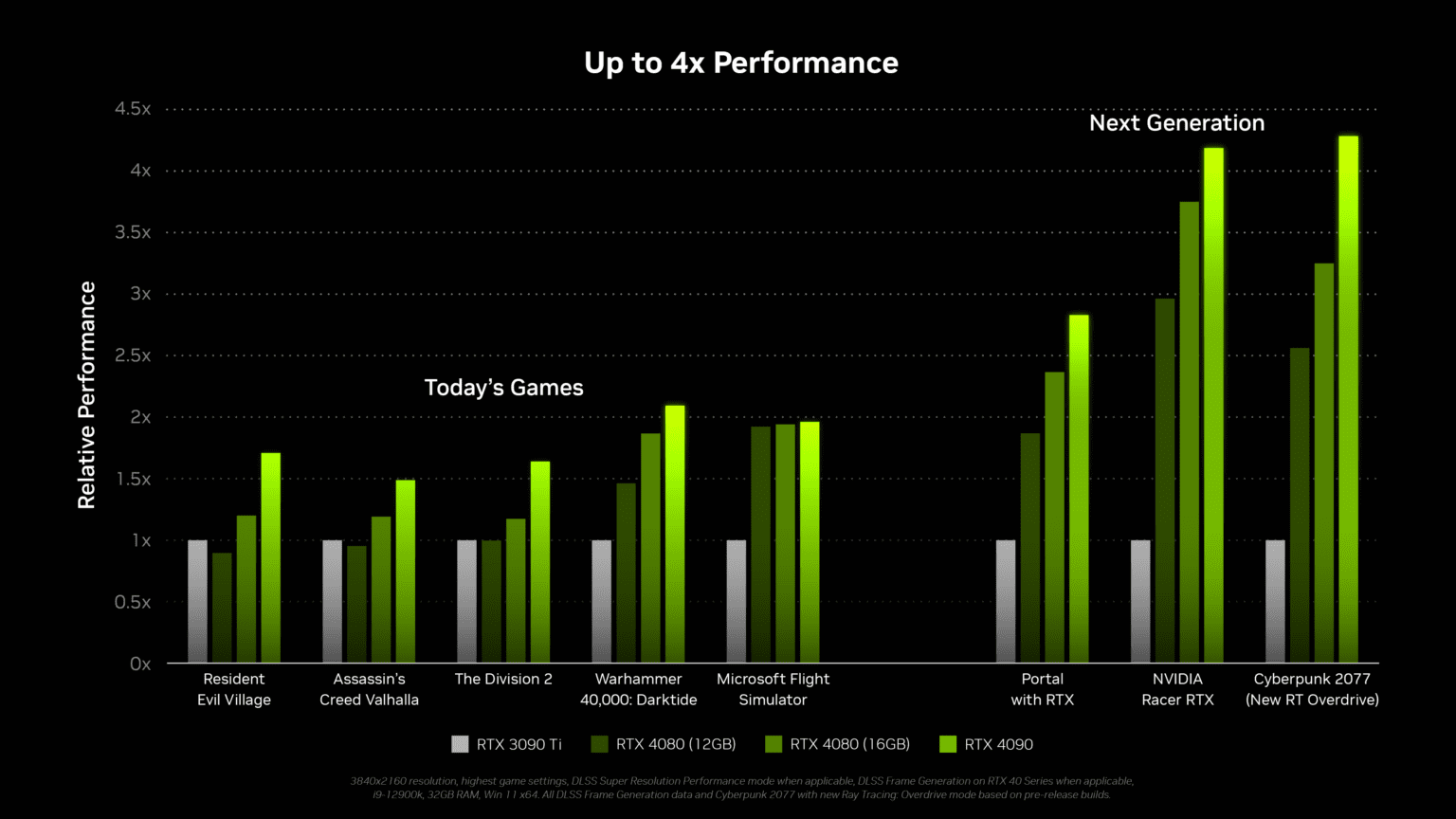

"Up to" refers to the maximum value, not the average. Nvidia publishes individual graphs you can check for yourself. Don't let MLID (Moore's Law Is Dead) bring down your IQ.

Charts here:

https://hardforum.com/threads/nvidi...r-20th-tuesday.2021544/page-9#post-1045455558

