im_shadows (n00b · Joined Feb 17, 2018 · 32 messages)

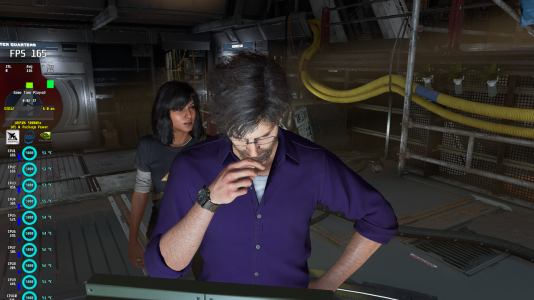

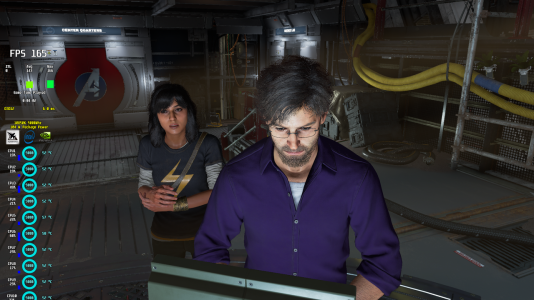

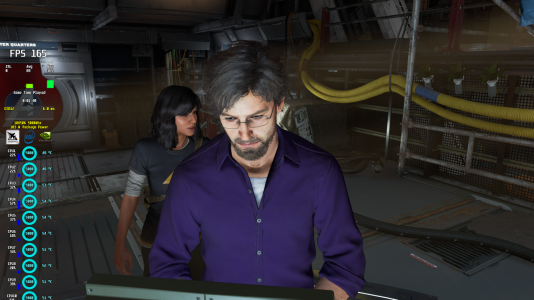

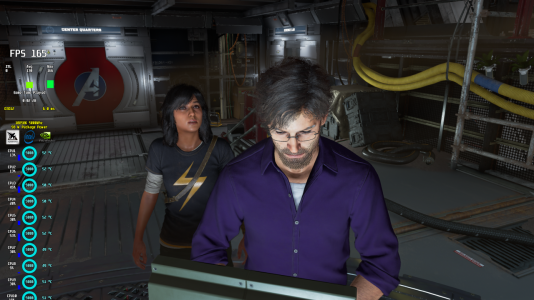

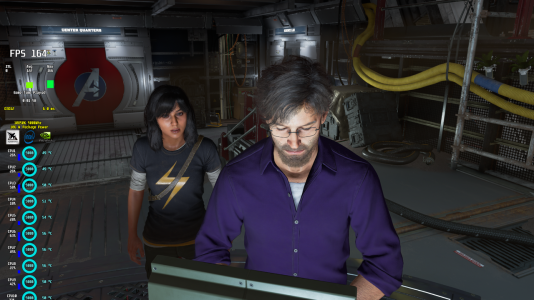

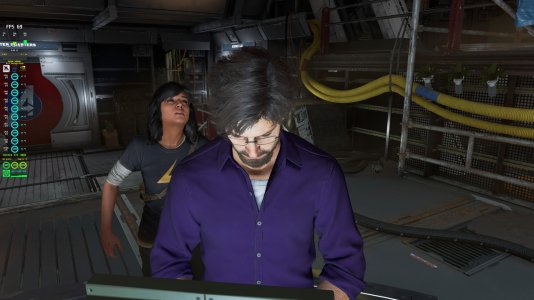

I know you're answering jobert, and yes, the problem in this image is very similar to mine. Still, the same problem happens in other games made by other developers (such as RDR2 and CoD MW). So the problem isn't with this specific game. Maybe it's some technology they all use, but it isn't exclusive to Marvel's Avengers.

Also, see this from the Steam community. Not trying to be a jerk or anything, but I really think it's just the game.

View attachment 383332
