erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,875

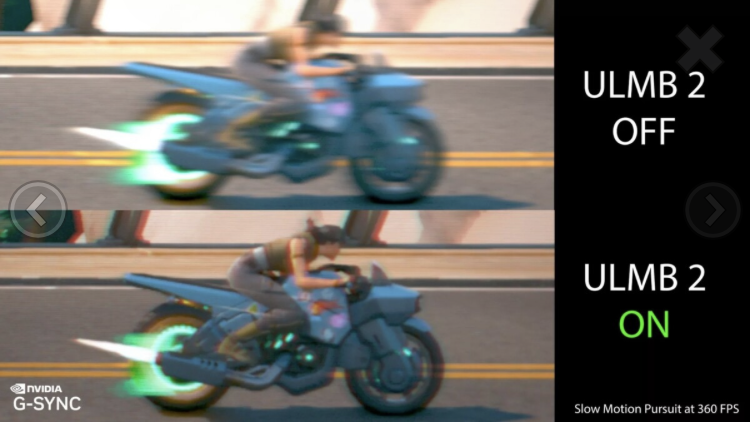

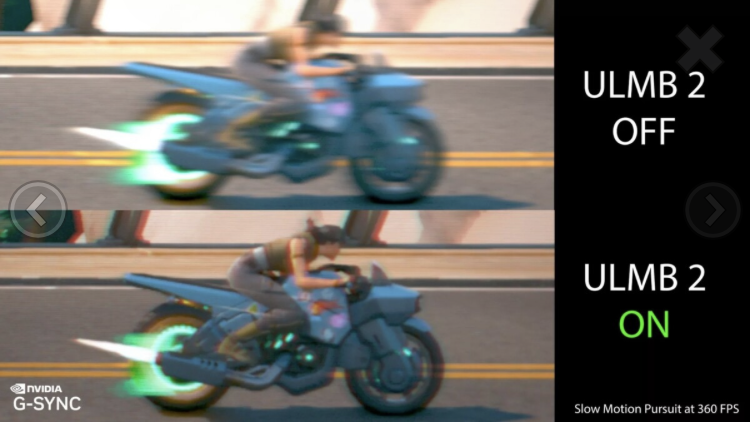

1000 Hz of emulated & interpolated “motion clarity” with whiz-bang voodoo AI similar to the underpinnings of DLSS2.5/3?

“With ULMB 2, the backlight is only turned on when each pixel is at its correct color value. The idea is to not show the pixels transitioning, and only show them when their color is accurate.

But this technique creates a challenge: backlights generally light up all pixels at the same time where pixels are changed on a rolling scanout. At any given point in time, a portion of the screen will have double images (also known as crosstalk).

The solution to this problem is what sets G-SYNC's ULMB 2 apart from other backlight strobing techniques: with G-SYNC, we're able to control the response time depending on where the vertical scan is, such that the pixels throughout the panel are at the right level at precisely the right time for the backlight to be flashed. We call this "Vertical Dependent Overdrive".

With Vertical Dependent Overdrive, ULMB 2 delivers great image quality even at high refresh rates where the optimal window for backlight strobing is small.

ULMB 2 Is Available Now

For ULMB 2 capability, monitors must meet the following requirements:”

Source: https://www.techpowerup.com/309298/...lmb-2-over-1000hz-of-effective-motion-clarity
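For anyone trying to picture the crosstalk problem in the quote, here is a rough toy model in Python. The quote does not say how Vertical Dependent Overdrive is actually implemented, so everything here is an assumption for illustration: the first-order (exponential) pixel response, the function names, and the numbers (1440 rows, 2.0 ms scanout, flash at 2.5 ms, 1.0 ms time constant) are all made up. The idea it sketches is only that rows addressed later in the scanout have less time to settle before a global flash, and that a row-dependent overdrive gain can compensate.

```python
import math


def settle_fraction(elapsed_ms, tau_ms):
    """Fraction of a transition completed after elapsed_ms (assumed first-order response)."""
    return 1.0 - math.exp(-elapsed_ms / tau_ms)


def row_elapsed_ms(row, rows, scan_ms, strobe_ms):
    """Time between a row being addressed by the scanout and the global backlight flash."""
    return strobe_ms - scan_ms * row / rows


def vertical_gain(row, rows, scan_ms, strobe_ms, tau_ms):
    """Hypothetical per-row overdrive gain that lands the pixel on target at flash time."""
    return 1.0 / settle_fraction(row_elapsed_ms(row, rows, scan_ms, strobe_ms), tau_ms)


def level_at_strobe(start, target, row, rows, scan_ms, strobe_ms, tau_ms, gain=1.0):
    """Pixel level at the flash when driven toward an overdriven value.

    gain=1.0 means no overdrive. Clamping to the panel's drive range is ignored here.
    """
    drive = start + gain * (target - start)
    done = settle_fraction(row_elapsed_ms(row, rows, scan_ms, strobe_ms), tau_ms)
    return start + done * (drive - start)


if __name__ == "__main__":
    rows, scan_ms, strobe_ms, tau_ms = 1440, 2.0, 2.5, 1.0  # assumed values
    for row in (0, 720, 1439):
        plain = level_at_strobe(0, 100, row, rows, scan_ms, strobe_ms, tau_ms)
        gain = vertical_gain(row, rows, scan_ms, strobe_ms, tau_ms)
        tuned = level_at_strobe(0, 100, row, rows, scan_ms, strobe_ms, tau_ms, gain)
        print(f"row {row}: no overdrive {plain:.1f}, gain {gain:.2f}, tuned {tuned:.1f}")
```

In this toy setup the bottom rows are still mid-transition when the backlight fires unless they get a much stronger push than the top rows, which is the "double image" region the quote describes. A real panel has a limited drive range and a nonlinear response, so treat this as intuition rather than a description of what the G-SYNC module does.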
