I vote Nvidia for releasing solid drivers.

Get AMD if you want crap drivers and 3-5 percent faster FPS in Metro and maybe another game.

Lol, I vote for whoever has the best card at the price I want to pay when I want to buy.

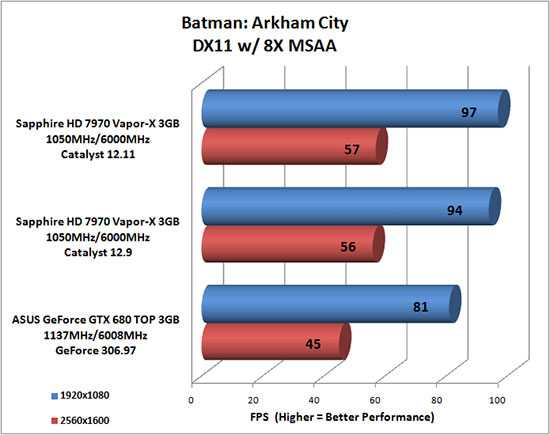

First, let's make it clear that the 7970 is using 2x MSAA.

You can't go wrong with either card in terms of performance, but in the end it still comes down to who has better driver support, heat, fan speed, and power draw.

Nvidia is clearly the winner this gen; anyone who denies it is a die-hard AMD/ATI extremist.

I'm glad I gave you a laugh, Kyle. Life is short, and we can use all the good cheer we can get!

Didn't know I could actually rouse the big guy to respond to my humble rant! I feel strangely honored.

Yes, yes you did give ONE gold award to an AMD or AMD-based product in the last 3 years. OK, so you're not really mad at AMD then!

Seriously, though, I am looking forward to the day that AMD graphics cards and CPUs strangely get the benefit of the doubt/mysteriously good reviews all of a sudden. It will feel like old times all over again.

That's why clock for clock is important. If you have the GPUs running at the same speed, and one does more work, it shows the strengths of the architecture. Since the 7970 is faster, a good starting consideration is indeed the memory bandwidth. It's not unreasonable to think the extra memory bandwidth helps performance, and again shows why the 7970 being faster is a better purchase right now.

Clock-for-clock comparisons compare architectures and designs, not raw speed, something it seems you don't understand. 1.3GHz is an arbitrary number; you can select any overclock, and Kepler and Tahiti will clock relatively the same. In fact, hwbot shows their averages as being almost identical: http://hwbot.org/hardware/videocard/geforce_gtx_680/ . Therefore, if both cards clock relatively the same, but the 7970 is faster at the same clocks, clearly the 7970 is the higher performing part.

That's why you use raw data, which I showed you. Looks like you got lousy clockers, it happens. The reasonable expectation when buying a part is that you will fall at the 50th percentile, which in this case is 1214 MHz.
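For anyone who wants to check the "50th percentile" idea themselves, here is a minimal sketch in Python. The list of clocks is made up purely for illustration (it is not hwbot data); it is just chosen so the middle value matches the 1214 MHz figure quoted above.

```python
from statistics import mean, median

# Hypothetical overclock submissions in MHz. These are NOT real hwbot
# numbers, only an invented sample to show how the figure is computed.
reported_clocks_mhz = [1150, 1180, 1214, 1250, 1300]

# The 50th percentile is the median: half the cards land at or below it.
typical_clock = median(reported_clocks_mhz)

# The mean is shown for contrast; a few extreme runs pull it around more.
average_clock = mean(reported_clocks_mhz)

print(f"median: {typical_clock} MHz, mean: {average_clock:.1f} MHz")
```

The median is less sensitive to a handful of extreme runs than the mean, which is why it is the more reasonable "what will I probably get" number.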

Similar clocks are the trend, I just proved it and provided references.

Furthermore, I can show the opposite, there's no power difference. For example:

That's an overclocked 7970 @ 1210MHz and it's using 13W more than a stock GTX 680. I was being generous with 50W; again, power isn't the issue you're trying to make it into.

I like seeing how fanboys argue over 680 vs 7970 when the frame rate is within 5%.

So it isn't as simple as stating it's only 5% faster. You need to factor in that it is also ~$70 cheaper to purchase and comes with a great games bundle. Faster and cheaper = better price/perf. When the GTX 680 was released it had better price/perf and it was rightly received as a great card at a (then) great price. Now AMD have turned the tables and the kudos goes to them. Unfortunately, there are too many brand loyalists on both sides who refuse to give credit where it is deserved.
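To put a rough number on the price/perf point, here is a small Python sketch. It assumes the GTX 680 still sells at the $499 launch price quoted in the HardOCP review, takes "~$70 cheaper" to mean $429 for the 7970, and uses the 5% performance gap from this thread. The value of the game bundle is ignored, and the function name is just for illustration.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Performance units delivered per dollar spent."""
    return relative_perf / price_usd

# Assumed figures: GTX 680 as the 1.00 baseline at $499,
# HD 7970 about 5% faster and about $70 cheaper.
gtx680 = perf_per_dollar(1.00, 499.0)
hd7970 = perf_per_dollar(1.05, 429.0)

# How much more performance per dollar the 7970 gives under these assumptions.
advantage = hd7970 / gtx680 - 1.0
print(f"7970 price/perf advantage: {advantage:.1%}")
```

With those inputs the gap in performance per dollar comes out noticeably larger than the 5% frame rate difference alone, which is the whole point of looking at price/perf instead of raw FPS.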

What you describe is a problem for AMD. They have to sell the far more expensive product, including a game bundle, for a lower price. Good business execution is something different. Their Q3 financial results were a nightmare and even the GPU division did not do well in the last quarter. They had a profit but revenue took a hit. We will see when Nvidia posts numbers but my feeling is they are doing far better. BTW AMD still has no answer for the high quality GTX 690.

Kepler's lack of memory bandwidth has the possibility of being restrictive and much more quickly than a 7970. That's a disadvantage. You don't need to complicate it further or be purposefully dense to try to negate or deflect that point.

You will never, ever convince me of the logic behind clock for clock comparison between two different architectures. That memory bandwidth BS also made zero sense to me. You don't seem to like to include memory OC to your clock for clock point of view. Knowing the difference in stock memory bandwidths of Tahiti and Kepler, it's clear that those two respond very differently to memory OC. Not only that, but as a result of that difference in bandwidth, they also respond very differently to core OC when considering the bandwidth as constant. Kepler will be much more bandwidth restricted which is not necessarily so when you take memory OC into account.

The same can be said for 7970 which also has boost. The great thing about averages is it tends to minimize differences.

Wanna know what's the problem with your raw data? GTX 680 clocks are all over the place. There are many, many hwbot users who report the clocks that you see on the first tab of GPU-Z. Those are not the actual clocks the card is running with, you need to check those in the sensors tab. Take the actual clocks, get an average out of those and then talk about raw data.

That's a baseless assumption on your part. Do you have any data to show that or are you now just making up things because your argument is so weak?

Then there is the fact that for Tahiti cards it is much more common to bench with a set up not suitable for 24/7 use. Take the average air cooled 7970 owner: for benching he cranks the voltage to max, clocks to where they are stable at with that max voltage, makes his bench run and then turns the voltage and clocks down. Take your average air cooled 680 owner: he cranks the clocks up to as high as they are stable, makes his bench run and then goes on playing his games.

And the point is that the 7970 can clock the same as the GTX 680 without touching the voltage control. If you want to go higher, you can give it voltage to keep going, which is another advantage the 7970 has over the GTX 680. Again, not complicated.

You can show it, but you don't seem to understand it. That XFX is overclocked with no voltage control. Overclocking with no overvolting results in exactly that: minuscule power consumption increase. Just as with the 680 I showed you. Take your average 7970 and your average "raw data" overclock: on average you will need extra voltage to get there. That extra voltage will increase the power consumption exponentially. There is no way out of it: voltage tweaking will destroy your power efficiency, simple as that.
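The voltage argument can be sketched with the textbook approximation that dynamic power scales with frequency times voltage squared (P ~ C * V^2 * f). The Python below is only a rough model, not a measurement. The 925 MHz stock clock and the +10% voltage bump are assumptions for illustration; the 1125 MHz and 1200 MHz targets are the figures mentioned elsewhere in this thread.

```python
def relative_dynamic_power(freq_ratio: float, voltage_ratio: float) -> float:
    """Dynamic power relative to stock, using the P ~ C * V^2 * f rule of thumb."""
    return freq_ratio * voltage_ratio ** 2

STOCK_MHZ = 925.0  # assumed stock core clock of a reference 7970

# Overclock on stock voltage: only the frequency term grows.
clock_only = relative_dynamic_power(1125.0 / STOCK_MHZ, 1.00)

# Overclock with a hypothetical 10% voltage increase: the squared term kicks in.
overvolted = relative_dynamic_power(1200.0 / STOCK_MHZ, 1.10)

print(f"clock only: {clock_only:.2f}x, overvolted: {overvolted:.2f}x stock power")
```

Under this approximation the growth with voltage is quadratic rather than strictly exponential, and the model leaves out leakage and anything that isn't the GPU core, so treat the output as a ballpark. It still shows why a voltage bump costs far more power than a clock bump of similar size.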

What has happened is you have no rebuttals or data to counter my points so you want to bow out. Just say that instead of this "let's agree to disagree" nonsense.

Now we can argue about these things forever, but I think it's safe to say we are never going to agree on the details. The thing is, we are agreeing on the big question: AMD is the thing to get right now. So let's not go any further into it, ok?

Lol, amen.

Your post is such BS I don't know where to begin. Seriously dude, take a step back and read the utter bullshit you just typed. It doesn't matter how AMD are doing financially, this is pure and simple about what GPU cards are the best bang for buck. RIGHT NOW the AMD cards are better price/perf compared to their equivalent Nvidia cards.

How the hell is AMD's current financial position pertinent to how good the AMD GPUs are?

This is HardOCP, not the FT.

No, brainless sheep without a pair tie themselves to a company because they lack a personality and a functional social life. It's a video card company, move on.

Because people like winners and not losers. Nvidia has a lot of other stuff going for them. A great brand is definitely one of them. This justifies a higher price plain and simple and the majority - whether you like it or not - seems to agree as numbers clearly show.

So you bought a card with identical performance and lost out on a year of Bitcoin mining profits instead. Strong work.

So it took almost a year for AMD to unleash the potential of the HD79XX series? Good thing I didn't waste my time and bought my 680 GTX at launch instead.

Honestly, it's interesting to see the fanboys downplay the performance boost. Competition is good for all consumers, even if it hit your favorite company in the nuts.

Where did I exclude anything? Don't try to make me into a fanboy like yourself, I'm stating an advantage which can come into play - the 7970 has much higher memory bandwidth and that is a big advantage in some applications. That's a simple point, don't go ad hominem because you don't like it.

It is a disadvantage and you maximize that disadvantage by not taking into account the possibility to OC the memory on 680. That is of course fitting to your need to include everything pro AMD and exclude everything pro Nvidia.

And what about when boost is disabled when overclocking, which is what we're talking about in the first place. It seems like, once again, you don't have your facts straight.

Seriously, stop talking nonsense. GPU-Z reports just one core clock for the 7970 GHz Edition and it is that boosted clock. How about getting your facts straight before attacking my arguments?

Again, you're making up anecdotal nonsense because your arguments are completely transparent and baseless. I'm not the only one telling you this.

Data? You mean like the ages old fact that benchmarking is all about suicide runs with settings not suitable for 24/7 usage? You can say whatever bad things you like on Nvidia's decision to not allow voltage tweaking with Keplers, but the one thing it means is that suicide runs do not go with Keplers. They do with Tahitis. Deny it all you want, but I've made my fair share of suicide runs with my 7970s and I know perfectly well that it is not the same thing as getting to a balanced and stable 24/7 OC. How exactly do you do that with a locked voltage?

The thing is you're trying to make this black and white because that's what Nvidia has restricted you to do, and of course you're going to bat for them. With AMD you have a choice - you can run a nice overclock at lower power consumption or you can boost the voltage and shoot for the moon. You can't do that with a Kepler based card, period. And furthermore, at the same clocks, the Kepler card is slower. No matter how you try to deflect or change parameters you can't escape the fact that Kepler is a gimped GPU.

And again, you are cherry-picking your data. You want everyone to know how great the 7970 overclocks with their unlocked voltages and the next thing you do is tell them to ignore the effect overvolting has on the power consumption of a graphics card. When the facts are already on the side of AMD, why do you feel the need to distort the obvious truth? 1125/1575 are pretty much the clocks that most 7970s do on stock voltage. Some do more, 1150MHz isn't too rare, but then there are cards that come shy of 1100MHz. 1200MHz is very much in the overvolting category. One card making it to 1210MHz (with a higher-than-original stock voltage of 1.2V, mind you) doesn't change that.

You're not countering anything, just stop. You haven't provided a shred of evidence to prove your points and rely on anecdotal hearsay and your opinion, which counts for nothing may I remind you, as evidence. Keep going, you're digging your own hole and this is fun to watch.

You want to keep going, I'm all for it. I don't have an agenda to put forth and feel no personal need to argue with you, but keep posting stuff like this and I will for sure keep countering you.

Wait, you come into this thread to stamp your feet and I'm the one that's bitter? Sorry you don't like being called out on your behavior, here's a tissue.

Performance was identical when I bought my 680 GTX? Yeah right guy.

Right, I'm going to spend all my time mining. Wait for that next price plunge.

You're just bitter that it took AMD almost a year to realize the potential of their GAMING card.

LOL, Nvidia is not a great brand for GPUs. How can it be, when it is in an industry populated by two manufacturers in the gaming GPU market? I guarantee you that if you ask the average person in the street they won't have heard about Nvidia. This isn't Ford vs BMW we are talking about, this is very minor, small fry gaming GPUs that the vast majority of people will never have heard of. How many people outside of gamers actually even buy discrete GPUs? In fact the enthusiast gamer GPU market is so small only Nvidia and AMD care about it. Think about that when you spout the "good brand" drivel.

We are talking about gamers. And gamers know the brand "Nvidia" very well, because after 3dfx stopped existing they have been synonymous with gaming, especially enthusiast gaming. And the latter is a good selling factor for performance, mid range and low end gaming. Simple market mathematics. It does not matter how you want to spin it. The majority of customers prefer Nvidia, plain and simple. Live with it. It's AMD's fault if their brand recognition is as lousy as it is.

And why would any 7970 owners be bitter? That's about the stupidest thing that I have read in this thread so far. They are getting a fairly substantial upgrade for free, what's not to like? Seriously?

NVIDIA has also surprised us by providing an efficient GPU. Efficiency is out of character for NVIDIA, but surely we welcome this cool running and quiet GTX 680. NVIDIA has delivered better performance than the Radeon HD 7970 with a TDP and power envelope well below that of the Radeon HD 7970. NVIDIA has made a huge leap in efficiency, and a very large step up from what we saw with the GeForce GTX 580.

NVIDIA has raised the performance metric at the $499 price point. This is what we expect out of next generation video cards, moving efficiency forward as well as performance at a given price point. The $500 segment just became a lot more interesting, and will give you more performance now than ever before.

We've given many awards to Radeon HD 7970 video cards, and those were well deserved. Now that the GeForce GTX 680 is here, the game changes again, and there is no doubt in our minds that this video card has earned HardOCP's Editor's Choice Gold Award. NVIDIA's GeForce GTX 680 has delivered a more efficient GPU, lower in TDP, that is better or competitive in performance, at a lower price.

The GeForce GTX 680 truly is a win right out of the gate. It has been a long time since we've said that about a new GPU from NVIDIA, and it is about time the company got something right the first time! Perhaps the stigma of power hungry, hot, inefficient GPUs is gone thanks to the GeForce GTX 680? NVIDIA needed to build its "green" reputation back up with hardware enthusiasts and gamers, and the GeForce GTX 680 is an excellent start. Let's just hope we see NVIDIA's next flagship GK110 do the same.

We're not done with the AMD Enduro driver story, of course. With this release, AMD is starting on the road to delivering reference drivers that will in theory work with all Enduro (PowerXpress 4.0 or later) laptops. In practice, there are still some teething problems, and long-term AMD needs to get all the kinks straightened out. They're aware of issues with other Enduro laptops and the 12.9 Beta drivers (they're beta for a reason, right?), and hopefully the next major release after the Hotfix will take care of the laptop compatibility aspect. I've stated before that AMD's Enduro feels like it's where NVIDIA was with Optimus about two years (2.5 years) back, and that continues to be the case. The first public Enduro beta driver is a good place to start, and now AMD just needs to repeat and refine the process a few more times. Hopefully by the end of the year we'll see a couple more driver updates and compatibility will improve.

Off-topic nonsense

You're digging a pretty deep grave for yourself and your position. We're talking about the market landscape and price/performance right now.

Transistor for transistor the 680 simply clobbers the 7970

You know how nVidia fanboys like to thrash ATI, now AMD, for their drivers? How about you affix a sticker to your card: in about a year performance will be what it should have been.

Wow, just when I thought you couldn't say anything stupider than your last post, you go and top it with this post!! Look at the lifespan of any card: its performance is better at the end than at release date. Heck, look at the first Fermi. Nvidia couldn't fix that crap with drivers, they had to release a whole new card.

Wait, AMD unlocks more performance in their new architecture and you try to negatively spin it as performance they should have had at launch. Nvidia is late to the game and tries to respond WITH THE EXACT SAME THING and not a peep.

And just when I thought you couldn't say anything more stupid than your last post you go and top it. Add a disclaimer to the next AMD release: Will perform as it should in about 11 months. Thanks for the money suckers.

OP, skip the next AMD release for at least 10 months. Buy it way cheaper and don't finance AMD's driver team. They're still pretty busy trying to fix the Enduro line.

Since I'm looking at buying a Windows laptop I'll make sure to avoid AMD: