erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,874

Xbox Lockhart RAM performance seems suboptimal compared to Scarlett.

Memory sizes don't tell us anything about the actual memory clock speed, just the bus width.

Feeding something more powerful than an RX 5700 XT means you need a 384-bit bus of 14 Gbps GDDR6, so 24GB works.
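A minimal sketch of the arithmetic behind the 384-bit claim. It assumes one GDDR6 chip per 32-bit channel (no clamshell mode) and chip densities of 1GB or 2GB; peak bandwidth is bus width times the per-pin data rate, divided by 8 bits per byte.

```python
# Peak bandwidth and capacity for a GDDR6 bus (sketch).
# Assumptions: one chip per 32-bit channel, no clamshell mode,
# chip densities of 1GB (8Gb) or 2GB (16Gb).

CHIP_WIDTH_BITS = 32


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width times Gbps per pin, over 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def capacity_options_gb(bus_width_bits: int) -> dict[int, int]:
    """Map chip density (GB) to total capacity (GB), one chip per 32-bit channel."""
    chips = bus_width_bits // CHIP_WIDTH_BITS
    return {density: chips * density for density in (1, 2)}


print(peak_bandwidth_gbs(384, 14))  # 672.0 GB/s
print(peak_bandwidth_gbs(256, 14))  # 448.0 GB/s (RX 5700 XT)
print(capacity_options_gb(384))     # {1: 12, 2: 24}
```

A 384-bit bus holds 12 chips, so it lands on either 12GB or 24GB depending on chip density.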

20GB can work with either a 320-bit or a 160-bit bus. I would probably use 12 Gbps GDDR6 on a 160-bit bus to save costs (the same trick Nvidia pulled with the 1660 Ti versus the rest of Turing), which would explain why it's having trouble meeting the 1440p performance level (less bandwidth than a 1660 Ti)!
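A quick check of the "less bandwidth than a 1660 Ti" point. This sketch assumes both configurations run 12 Gbps GDDR6 (the 1660 Ti's rated speed on its 192-bit bus); the 160-bit configuration is the poster's hypothetical, not a confirmed spec.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


hypothetical_160_bit = peak_bandwidth_gbs(160, 12)  # poster's cost-saving guess
gtx_1660_ti = peak_bandwidth_gbs(192, 12)           # 192-bit, 12 Gbps GDDR6

print(hypothetical_160_bit)  # 240.0
print(gtx_1660_ti)           # 288.0
print(f"{hypothetical_160_bit / gtx_1660_ti:.0%} of a 1660 Ti")  # 83% of a 1660 Ti
```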

Also, as with most development kits, the retail units are likely to have half the memory. 10 and 12GB sound just fine for the next generation of sub-$400 APUs.

160-bit GDDR6 would be rather crappy. My guess is 320-bit GDDR5. It would basically be five-sixths of a One X (320 of its 384 bits of bus width) with a much better CPU.
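To put "five-sixths of a One X" in numbers, here is a sketch assuming the hypothetical 320-bit GDDR5 bus runs at the One X's 6.8 Gbps per pin. The 320-bit configuration is the poster's guess.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


ONE_X_RATE_GBPS = 6.8  # Xbox One X GDDR5 per-pin data rate

one_x = peak_bandwidth_gbs(384, ONE_X_RATE_GBPS)
guess = peak_bandwidth_gbs(320, ONE_X_RATE_GBPS)  # hypothetical Lockhart bus

print(round(one_x, 1), round(guess, 1))  # 326.4 272.0
print(round(guess / one_x, 4))           # 0.8333, i.e. 5/6
```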

Remember, there are two parts here: a 12 TFLOPS one (almost certainly 384-bit GDDR6), and a cut-down 4 TFLOPS version with either 10GB (320-bit or 160-bit), or possibly cut to 8GB of 128-bit GDDR6?

Since Lockhart is 4-5 TFLOPS (depending on the leak), it's well within range of 160-bit GDDR5. See the GTX 1060 5GB card:

https://www.techpowerup.com/gpu-specs/geforce-gtx-1060-5-gb.c3060

If you replaced its memory with 9 Gbps parts, the bandwidth would be nearly identical to the 192-bit version of the 1060. And from what we've seen, AMD's Navi has caught up with Pascal on memory efficiency.
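A sketch of the GTX 1060 comparison. It assumes the stock 5GB card runs 8 Gbps GDDR5 on 160 bits and that the "192-bit version" means the 6GB card at its original 8 Gbps.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


gtx_1060_5gb_stock = peak_bandwidth_gbs(160, 8)  # as shipped
gtx_1060_5gb_9gbps = peak_bandwidth_gbs(160, 9)  # with 9 Gbps memory swapped in
gtx_1060_6gb = peak_bandwidth_gbs(192, 8)        # 192-bit version

print(gtx_1060_5gb_stock, gtx_1060_5gb_9gbps, gtx_1060_6gb)  # 160.0 180.0 192.0
print(f"{gtx_1060_5gb_9gbps / gtx_1060_6gb:.0%}")            # 94%
```

On these assumptions, the 9 Gbps swap closes most of the gap to the 192-bit card.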

I'm going to miss those CPU-bottleneck discussions about Jaguar... The CPU is honestly the exciting part. Can't wait to see what they do with Halo Infinite.

These numbers would make more sense if both had 8GB of system memory. That would leave Lockhart with 12GB of 384-bit GDDR5 (for cost savings, and perhaps to share the One X board layout). The 800 MHz could be the DDR4 speed (3200 MHz effective).

Anaconda would then have 16GB of 256-bit GDDR6, and at 1900 MHz (15.2 Gbps effective) it should have bandwidth
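The sentence above breaks off before giving a figure. As our own arithmetic (not the poster's conclusion), here is a sketch assuming GDDR6's effective per-pin rate is 8 times the reported memory clock, which is what the 1900 MHz to 15.2 Gbps conversion implies.

```python
GDDR6_RATE_MULTIPLIER = 8  # assumed: effective Gbps per pin = clock (MHz) * 8 / 1000


def gddr6_data_rate_gbps(clock_mhz: int) -> float:
    """Convert a reported GDDR6 memory clock in MHz to effective Gbps per pin."""
    return clock_mhz * GDDR6_RATE_MULTIPLIER / 1000


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


rate = gddr6_data_rate_gbps(1900)
print(rate)                                     # 15.2
print(round(peak_bandwidth_gbs(256, rate), 1))  # 486.4
```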

Everyone is so fixated on it having unified GDDR6 memory. Why can't it have dedicated DDR4 for swapping out the OS, like the PS4 Pro does with its extra 1GB of DDR3?

You don't have to worry about wasting parts of expensive GDDR6 if you have dedicated OS RAM. I imagine the PS5 will use the same trick.

16GB of GDDR6 means you're stuck with either a 256-bit bus (not enough bandwidth for 12 TFLOPS) or a 512-bit bus (way more expensive than putting two different types of RAM on the same board).
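Why 16GB forces a choice between 256 and 512 bits, as a sketch: it assumes every chip has the same density, one chip per 32-bit channel, and no clamshell or mixed-density layouts.

```python
CHIP_WIDTH_BITS = 32  # assumed: one GDDR6 chip per 32-bit channel


def bus_width_bits(total_gb: int, chip_gb: int) -> int:
    """Bus width needed to reach total_gb using identical chips of chip_gb each."""
    if total_gb % chip_gb:
        raise ValueError("capacity must be a whole number of chips")
    return (total_gb // chip_gb) * CHIP_WIDTH_BITS


print(bus_width_bits(16, 2))  # 256 (8 chips of 2GB)
print(bus_width_bits(16, 1))  # 512 (16 chips of 1GB)
```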

The 5700 XT delivers about 10 TFLOPS using just 448 GB/s. Using faster, 2080 Super-like GDDR6 would give a 12 TFLOPS console about the same ratio with just a 256-bit bus.
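A sketch of the bandwidth-per-TFLOP ratio. It uses the post's rounded 10 TFLOPS for the 5700 XT, the thread's rumored 12 TFLOPS for the console, and the 2080 Super's 15.5 Gbps GDDR6 on a 256-bit bus; all three are inputs taken as given, not measurements.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


def gbs_per_tflop(bandwidth_gbs: float, tflops: float) -> float:
    """Bandwidth available per TFLOP of shader throughput."""
    return bandwidth_gbs / tflops


rx_5700_xt = gbs_per_tflop(peak_bandwidth_gbs(256, 14), 10)       # 448 GB/s, ~10 TFLOPS
console_guess = gbs_per_tflop(peak_bandwidth_gbs(256, 15.5), 12)  # 496 GB/s, 12 TFLOPS

print(rx_5700_xt)               # 44.8
print(round(console_guess, 1))  # 41.3
```

On these inputs the console lands at roughly 92% of the 5700 XT's ratio.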

Hmm, every leak I've seen says Anaconda has 16GB of total system RAM, OS plus games, with games having access to 13GB for both game data and video.

These numbers do seem weak to me, but I guess checkerboard rendering will be the name of the game for 4K.

That 13GB is shared memory, not just video memory. And Microsoft says it will support 8K. Even reconstructed 8K is going to require more memory for the frame buffer. If you're using 8-10GB for video, that doesn't leave a whole lot for the game engine to work with outside of graphics. The result will be a continued lack of innovation in scale, such as the number of active actors and the overall size of the game world. That is not a good sign. 24-32GB of shared memory would make more sense for better future-proofing over a typical 5-7 year console cycle.

13GB of VRAM would be more than enough for the life of this console. Even 8GB seems to be enough for this amount of GPU power, as we have seen no issues with the 8GB RTX 2080 versus the 11GB GTX 1080 Ti. VRAM demands have not changed in the last couple of years, and I doubt things will change fast going forward.

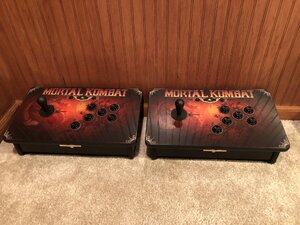

Also, games like Mortal Kombat on PC have no chance against the console versions.

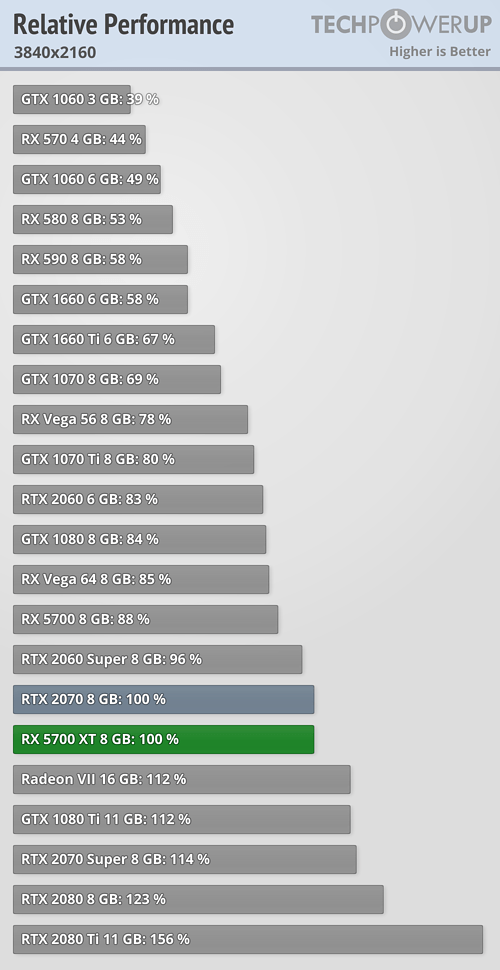

You're way too hung up on memory bandwidth. The reason the Radeon VII is faster is that it has more CUs and a higher clock speed than the 5700 XT. Look at the 2070 Super: it has 8GB of GDDR6 on a 256-bit bus, the same as the 5700 XT, producing the same 448 GB/s of bandwidth. Same with the 2080. The 1080 Ti uses 11 Gbps GDDR5X on a 352-bit bus, producing 484 GB/s, and there were no indications that it was bandwidth-starved. The only video card I've ever had that was bandwidth-starved was the GTX Titan X, which showed double-digit performance gains just from overclocking the memory.

And how do you keep a graphics card 30% faster than the RX 5700 XT fed on a 256-bit bus? Especially when ray-tracing acceleration needs more bandwidth than traditional rendering.
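The bandwidth figures quoted in the first reply (2070 Super, 5700 XT, 1080 Ti) follow directly from bus width and per-pin data rate. This sketch recomputes them; the Radeon VII's 4096-bit, 2 Gbps HBM2 is our addition from its published spec, included for the Radeon VII comparison in this exchange.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for a given bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8


# name: (bus width in bits, per-pin data rate in Gbps)
cards = {
    "RTX 2070 Super (GDDR6)": (256, 14),
    "RX 5700 XT (GDDR6)": (256, 14),
    "GTX 1080 Ti (GDDR5X)": (352, 11),
    "Radeon VII (HBM2)": (4096, 2),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
# RTX 2070 Super (GDDR6): 448 GB/s
# RX 5700 XT (GDDR6): 448 GB/s
# GTX 1080 Ti (GDDR5X): 484 GB/s
# Radeon VII (HBM2): 1024 GB/s
```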

See here where the Radeon VII pulls away by 10% at 4K because it has bandwidth to spare versus Navi. You're going to run into the same issue on a mainstream console using the same 14 Gbps GDDR6 on a 256-bit bus to power a 12 TFLOPS APU!

View attachment 206282

Someone want to tell us again how they could possibly make a 256-bit bus with 16GB of GDDR6 work when you're feeding 30% more performance AND new RT AND the rest of the APU? 12GB on a 384-bit bus is a lot more feasible!

And no, I've already covered the exorbitant cost of corner-case 16 Gbps GDDR6 above: it's so expensive that it will never ship outside a $750 8GB graphics card in 2020. For consoles you need mainstream parts, so it's the same 14 Gbps memory as the RX 5700, or nothing!

Bad example

I have MK9, MK10, and MK11 on PC. I've hosted tournaments with them at LAN parties for the last couple of years. I have the Xbox 360 Mortal Kombat arcade controller pads (which work perfectly on PC via USB), and a PC that gets a rock-solid 60FPS at the highest detail, which is more than any console can muster with their "we can try for mostly 60FPS with lowered detail, or we can lock in at 30FPS with medium-high detail" mentality.

Point is, in your MK example I certainly have a finer experience on my PC than any console can provide.

View attachment 206266 View attachment 206267 View attachment 206264 View attachment 206262 View attachment 206263

1) You spent a ridiculous amount of money on a setup that is hardly practical. In fact, it is a counterpoint: you need thousands of dollars to achieve what a couple hundred does.

2) The Xbox 360 is now old tech, but it is still plug and play with any television setup you have.

3) FPS is a PC thing. Most console games are seamless anyway, since the games are optimized for the hardware; the only way you can really tell is by porting, and ports deliver poor performance.

13GB would be dedicated to video, with 3GB for the system, and that is the worst-case scenario. It is more than enough even after 5+ years.

8K support doesn't mean 8K gaming, and most people assumed as much. It means support for 8K video.

This is true, and also don't forget that these consoles use unified memory, not shared memory.

No, 13GB is for the game. Again, this isn't video only; haven't you seen in Task Manager how every program requires system RAM?

3GB is set aside for the OS alone.

If it were 24GB, sure, you could say 13GB for VRAM, but we are talking 16GB TOTAL.

Edit:

Let's say the game code occupies 5GB of RAM; then your VRAM will be limited to 8GB in that case. This limits everything you can do.
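A sketch of the memory budget argued over in these replies. It uses the thread's rumored 16GB total and 3GB OS reservation, and treats the 5GB of game code as a hypothetical input; in a unified pool, whatever the game's non-graphics data takes comes out of what graphics can use.

```python
TOTAL_GB = 16       # rumored Anaconda total, per the thread
OS_RESERVED_GB = 3  # rumored OS reservation, per the thread


def graphics_budget_gb(game_code_gb: int) -> int:
    """GB left for graphics after the OS and the game's non-graphics data."""
    game_pool = TOTAL_GB - OS_RESERVED_GB
    if game_code_gb > game_pool:
        raise ValueError("game data exceeds the game pool")
    return game_pool - game_code_gb


print(graphics_budget_gb(5))  # 8
print(graphics_budget_gb(0))  # 13, the "all 13GB for video" reading
```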

By the time it comes out it's still going to be 2 generations behind PCs. Shame, I thought we might be getting something exciting in a console again like what Gen 7 brought us, but they're going to be just one step above a cell phone yet again.

With checkerboard 4K, you are actually looking at requirements equivalent to about 1500p (for 2160p CB), since you only calculate half of the pixels every frame and use smart temporal interpolation for the half not calculated.
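Where "about 1500p" comes from, as a sketch: count the pixels shaded per checkerboard frame, then find the 16:9 frame with that many pixels. It counts shaded pixels only and assumes reconstruction overhead is negligible.

```python
import math


def checkerboard_equivalent_height(width: int, height: int, shaded_fraction: float = 0.5) -> float:
    """Height of a same-aspect frame whose pixel count equals the pixels shaded per frame."""
    shaded_pixels = width * height * shaded_fraction
    aspect = width / height
    # Solve (aspect * h) * h = shaded_pixels for h.
    return math.sqrt(shaded_pixels / aspect)


print(3840 * 2160 // 2)                                   # 4147200 pixels shaded per frame
print(round(checkerboard_equivalent_height(3840, 2160)))  # 1527
```

Shading half of a 2160p frame works out to roughly 1527p, which the post rounds to 1500p.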

With VRS in play, it looks like the Xbox Series X is targeting 2080 Ti territory.