Thanks, AMD. Just ordered a GTX 980 Ti.

They're so stupid. "4K" came out of their mouths about a hundred times during their PR campaign for the Fury X, yet it ships with 4GB of memory and HDMI 1.4.

Just...wow.

Zarathustra[H] said: Yeah,

I'm usually one to wait for official information before making any decisions, and I had been following the "coming soon, few weeks" thread since January. I was hoping to support AMD and get a top-end AMD GPU this time around, but apparently that isn't happening.

Usually I would have waited for the final reviews, but since this came from an actual AMD rep, I decided it was more reliable than most rumors. I didn't want to be stuck on the flip side once the Fury X reviews drop, fighting over the remaining 980 Ti inventory, so I decided to go for it.

Now I'm trying to decide whether, for the older titles I play, a single 980 Ti will be sufficient for 3840x2160, or whether I really ought to get another one...

It's difficult to find good figures on this. On average, how much faster would you guys say a 980 Ti is than the original Titan, specifically in Unreal Engine 3 titles if possible? I can't find numbers on this at all.

I'm going under the assumption that since 3840x2160 has roughly double the pixels of my 2560x1600 screen, I'll need double the GPU power for the same frame rates if everything scales linearly. (This may be a bad assumption, though.)
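
The pixel-count arithmetic behind that assumption is easy to check. This is a minimal sketch that adopts the post's own linear-scaling assumption (real games are rarely purely pixel-bound, so treat the estimate as a rough upper bound on the slowdown); the 60 fps starting figure is a hypothetical example, not a measurement.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels in one frame at the given resolution."""
    return width * height


def naive_fps_estimate(fps_at_old: float, old_res: tuple[int, int], new_res: tuple[int, int]) -> float:
    """Estimate fps at new_res, assuming GPU load scales linearly with pixel count (a simplification)."""
    ratio = pixel_count(*new_res) / pixel_count(*old_res)
    return fps_at_old / ratio


wqxga = (2560, 1600)
uhd = (3840, 2160)
ratio = pixel_count(*uhd) / pixel_count(*wqxga)

# prints: 8294400 vs 4096000 pixels, ratio 2.025
print(f"{pixel_count(*uhd)} vs {pixel_count(*wqxga)} pixels, ratio {ratio:.3f}")
# prints: 60 fps at 2560x1600 -> ~29.6 fps at 3840x2160  (60 fps is a hypothetical input)
print(f"60 fps at 2560x1600 -> ~{naive_fps_estimate(60, wqxga, uhd):.1f} fps at 3840x2160")
```

So "double" is very close: 3840x2160 is about 2.03x the pixels of 2560x1600.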

Zarathustra[H] said: I'm just trying to decide if I should grab a second 980ti now, so I can have two identical cards, while they are still in stock, rather than waiting for the first one to decide whether I need it or not...

This is starting to get into The Vacation Budget, which may imply some nights of Sleeping on the Couch.

My suggestion is to wait on a second card. Not all games require the same amount of video processing, so I would play the games you want and watch your frame rates. If they aren't what you want or need, then get a second card. This is my methodology...!

Regarding the Samsung promotion: I just purchased a new JS9000 from Crutchfield and have initiated a return of my current JS9000 to Amazon. It's a shame to send back a good unit (i.e., no obvious pixel anomalies or display defects), but Amazon wouldn't honor the promotion; they even recommended I return the display.

At least for a brief period, I'll have two JS9000s in the house. What a splendid sight it will be.

With it being such a hassle to get a JS9000 without pixel issues, I'd gladly give up the new promotion if I already had a clean one.

Definitely hold onto the Amazon one until you can check the Crutchfield one for pixel issues, etc.

I made that decision three weeks ago. Honestly, I wanted to 'want' the 9000, but the truth was it wasn't dramatically different from the 7500 in my tests. A small jump, in other words.

I did feel the 6700 to 7500 jump was worth it, but not the 7500 to 9000 jump.

All of them are great, truthfully.

I actually value the benefit of two cards, especially now that I'm driving a 4k display, but this is largely dependent on the games I enjoy (e.g., IL-2: BOS, ArmA 3, etc.). You should evaluate your list of favorite games and determine if the cost/benefit ratio is favorable for you.

For reference, I have two vanilla GTX 980s.

I know it's somewhat irrelevant, but lol at how much money AMD lost within 24 hours from this thread alone. There are probably at least 5-10 of us who were leaning toward the Fury and are now definitely getting Tis.

Zarathustra[H] said: Did you guys find your "One Connect" boxes to be loud at all?

So I now have the JS9500 65" and the JS9000 55" sitting side by side.

Before I get to my main question: how do I check that it's a perfect monitor, i.e., 0 dead pixels, no pixel bleed, accurate colors?
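
One common way to look for dead or stuck pixels is to show full-screen solid colors (black, white, red, green, blue) at the panel's native resolution and inspect it up close. A minimal sketch that generates such frames as binary PPM images; the PPM format, the color set, and the file naming are illustrative assumptions, and any viewer that can display an image full-screen without scaling would do. Backlight bleed is usually judged on the all-black frame in a dark room, while color accuracy generally needs a hardware colorimeter rather than a test image.

```python
from pathlib import Path

# Solid test colors: black exposes stuck-bright pixels and backlight bleed;
# white and the primaries expose dead or stuck subpixels.
TEST_COLORS = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
}


def solid_ppm(width: int, height: int, rgb: tuple[int, int, int]) -> bytes:
    """Return a binary (P6) PPM image filled with a single 8-bit RGB color."""
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes(rgb) * (width * height)


def write_test_patterns(out_dir: Path, width: int = 3840, height: int = 2160) -> list[Path]:
    """Write one PPM per test color into out_dir (hypothetical file naming)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, rgb in TEST_COLORS.items():
        path = out_dir / f"test_{name}_{width}x{height}.ppm"
        path.write_bytes(solid_ppm(width, height, rgb))
        paths.append(path)
    return paths
```

Calling `write_test_patterns(Path("panel_test"))` writes five files of about 25 MB each (3840 × 2160 × 3 bytes of pixel data plus a short header).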

My issue is that I'm using this as a computer monitor. My personal preference would be the 9500 at 55", but unfortunately that isn't available...

Was there a reason they didn't make it in 55"? When I compare the sheer size of the 65" JS9500 to the 55" JS9000, it's not just a larger screen; the frame/thickness of the panel is about 3x larger. It's a massive increase in size compared to the slimmer JS9000 profile.

Computer-wise, will I gain that much more at 65"? I don't think so, especially considering it's going in a smaller room, wall-mounted with an OmniMount that may or may not support screens over 60".
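
At the same 3840x2160 resolution, a bigger panel spreads the same pixels over more area, so pixel density drops. A quick pixel-density comparison for the two sizes, assuming both are 3840x2160 panels:

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch: diagonal resolution in pixels divided by diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# prints:
# 55" 3840x2160: 80.1 PPI
# 65" 3840x2160: 67.8 PPI
for size in (55, 65):
    print(f'{size}" 3840x2160: {ppi(3840, 2160, size):.1f} PPI')
```

For desk-distance use, the 55" panel is the sharper of the two; the 65" only looks equivalent from proportionally farther away.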

Have any of you here had any experience with both?

Sad to hear... but probably necessary due to the full array backlighting. That is the only difference, correct?

But is it worth the price difference? Probably not...

Updated my JS9000 to firmware 1217.

So far it feels like lag in all modes went down. Maybe placebo, I'm not sure... might need to test. Anyone else update yet?!

No, but I do hate that thing. Can't seem to tuck it out of the way properly.

What sort of noise is yours making?

Officially confirmed: no HDMI 2.0 for AMD's Fury. It's not possible with firmware or an add-on; it's fundamentally incompatible with the hardware architecture.

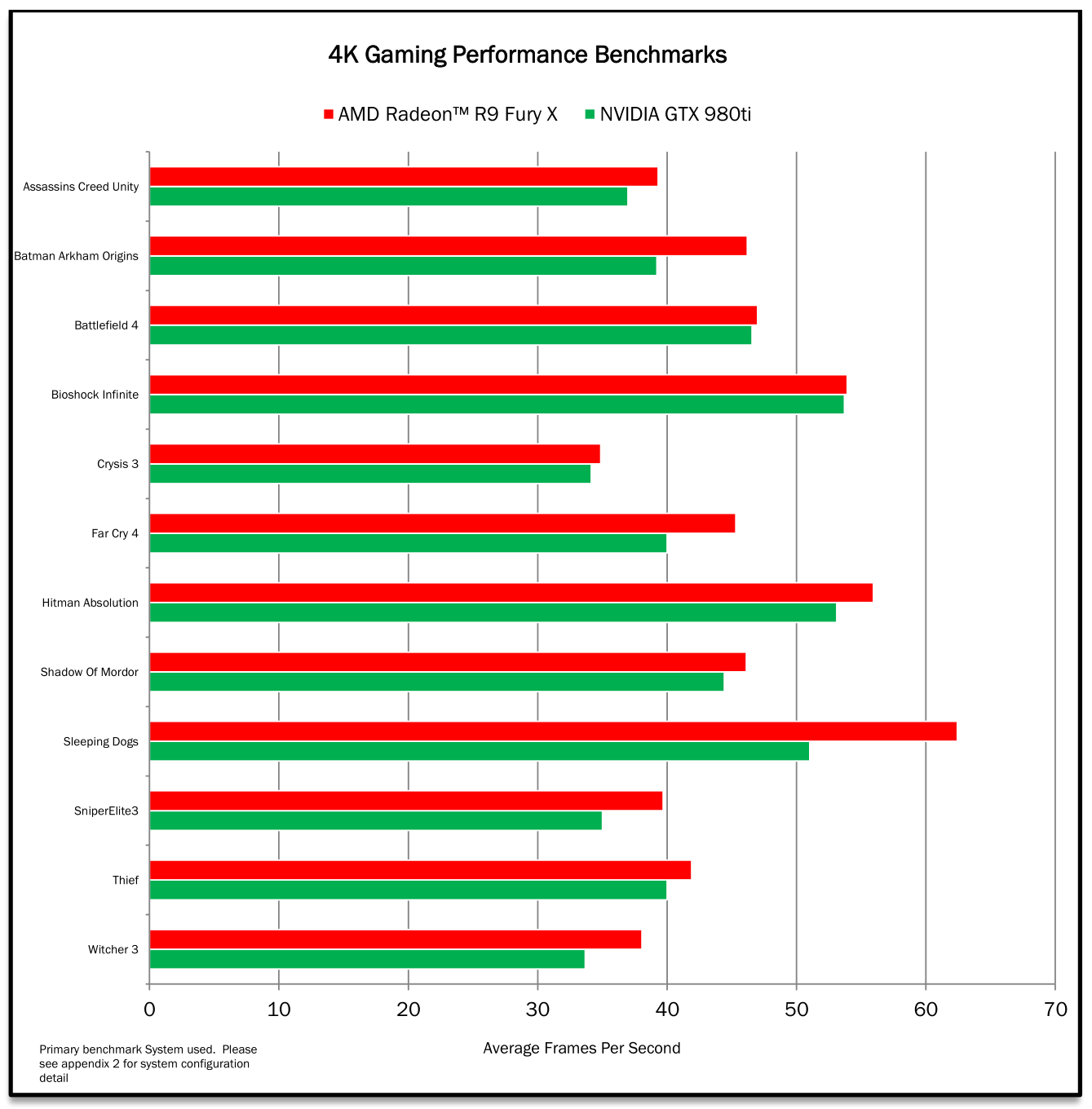

Apparently it's not an oversight; it was probably left out to release the card sooner. It's not a ground-up architecture; I believe they said in the podcast that it's an adaptation of Tonga. Yet they keep touting how the Fury beats the GTX 980 Ti in 4K. I wonder how they could even benchmark the Fury beyond 30fps in 4K...
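
The 30 Hz ceiling comes from link bandwidth. A rough check, assuming the standard CTA-861 timing for 3840x2160 (4400 × 2250 total pixels per frame including blanking), 8-bit 4:4:4 color (one pixel per TMDS clock), and the commonly cited maximum TMDS clocks of 340 MHz for HDMI 1.4 and 600 MHz for HDMI 2.0:

```python
# Total pixels per frame (active + blanking) for the CTA-861 3840x2160 timing.
H_TOTAL, V_TOTAL = 4400, 2250

# Maximum TMDS clock per HDMI version, in MHz (8-bit 4:4:4: one pixel per clock).
MAX_TMDS_MHZ = {"HDMI 1.4": 340, "HDMI 2.0": 600}


def pixel_clock_mhz(refresh_hz: int) -> float:
    """Pixel clock required to drive 3840x2160 at the given refresh rate."""
    return H_TOTAL * V_TOTAL * refresh_hz / 1e6


# prints:
# 3840x2160@30Hz needs 297 MHz; fits: HDMI 1.4, HDMI 2.0
# 3840x2160@60Hz needs 594 MHz; fits: HDMI 2.0
for hz in (30, 60):
    need = pixel_clock_mhz(hz)
    fits = [name for name, limit in MAX_TMDS_MHZ.items() if need <= limit]
    print(f"3840x2160@{hz}Hz needs {need:.0f} MHz; fits: {', '.join(fits)}")
```

This limits what the display can show over the HDMI link, not how fast the GPU renders; benchmark frame rates count rendered frames, and the card's DisplayPort 1.2 outputs can carry 3840x2160 at 60 Hz.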

... I wonder how they could even benchmark the Fury beyond 30fps in 4K...

If AMD had a bigger budget, I'm sure they would have invested in developing HDMI 2.0 support. As the underdog, AMD did what they could with limited time and budget to bring a competitive GPU to market. At least they succeeded in making it slightly faster than the 980 Ti. It's good for the market; otherwise, Nvidia's prices relative to performance would be through the roof.