cageymaru

Fully [H]

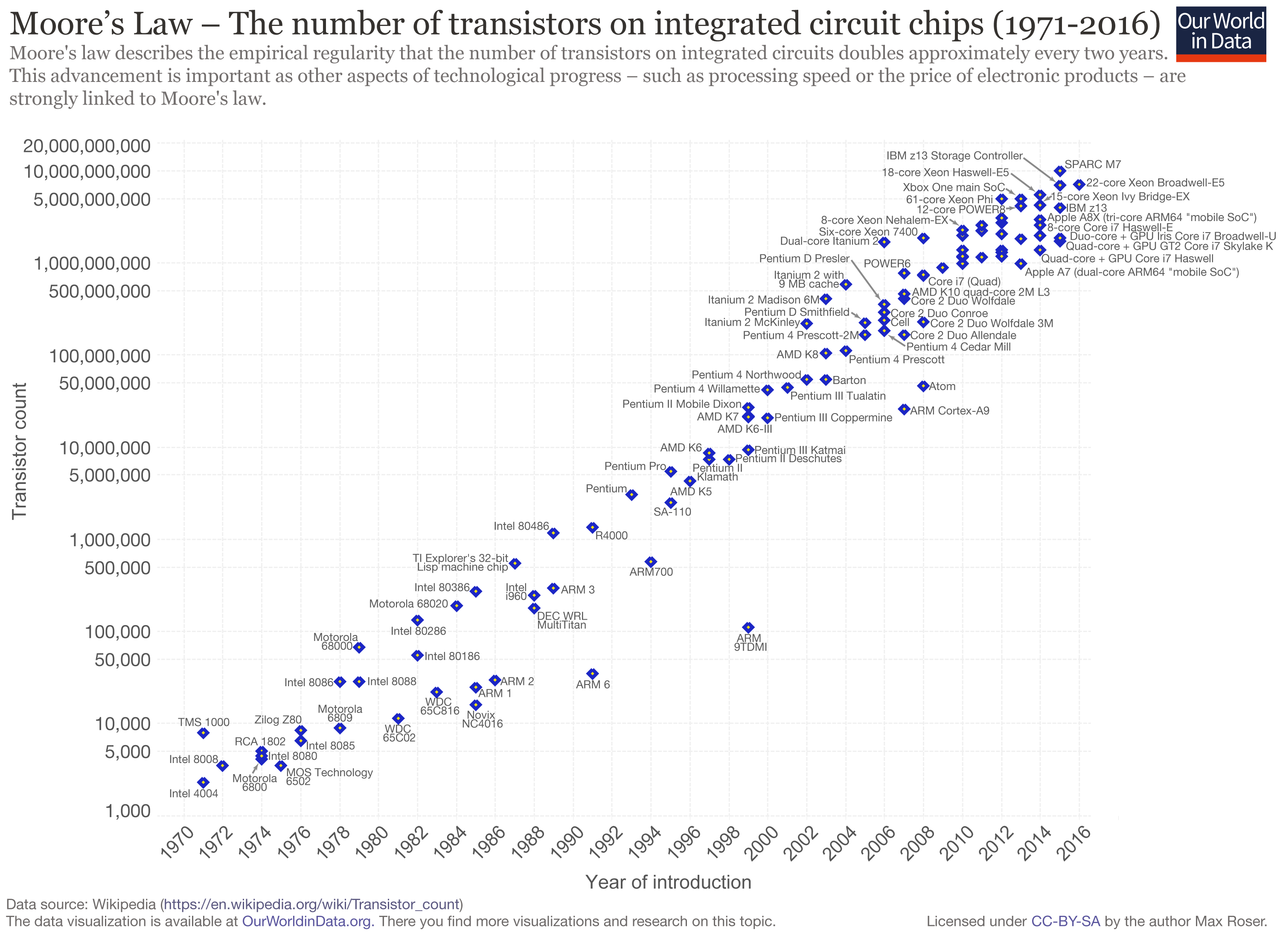

Turing Award recipient, RISC pioneer, Google engineer, and University of California professor David Patterson gave a talk at the 2018 @Scale Conference on how, now that Moore's Law is over, software innovations and revolutionary hardware architectures will drive future performance gains. He thinks that applying optimizations to existing software languages could net a 1,000x improvement in performance, and that new technologies such as machine learning will require the development of new hardware architectures and software languages. "This," he says, "is a golden age for computer architecture."

"Revolutionary new hardware architectures and new software languages, tailored to dealing with specific kinds of computing problems, are just waiting to be developed," he said. "There are Turing Awards waiting to be picked up if people would just work on these things."