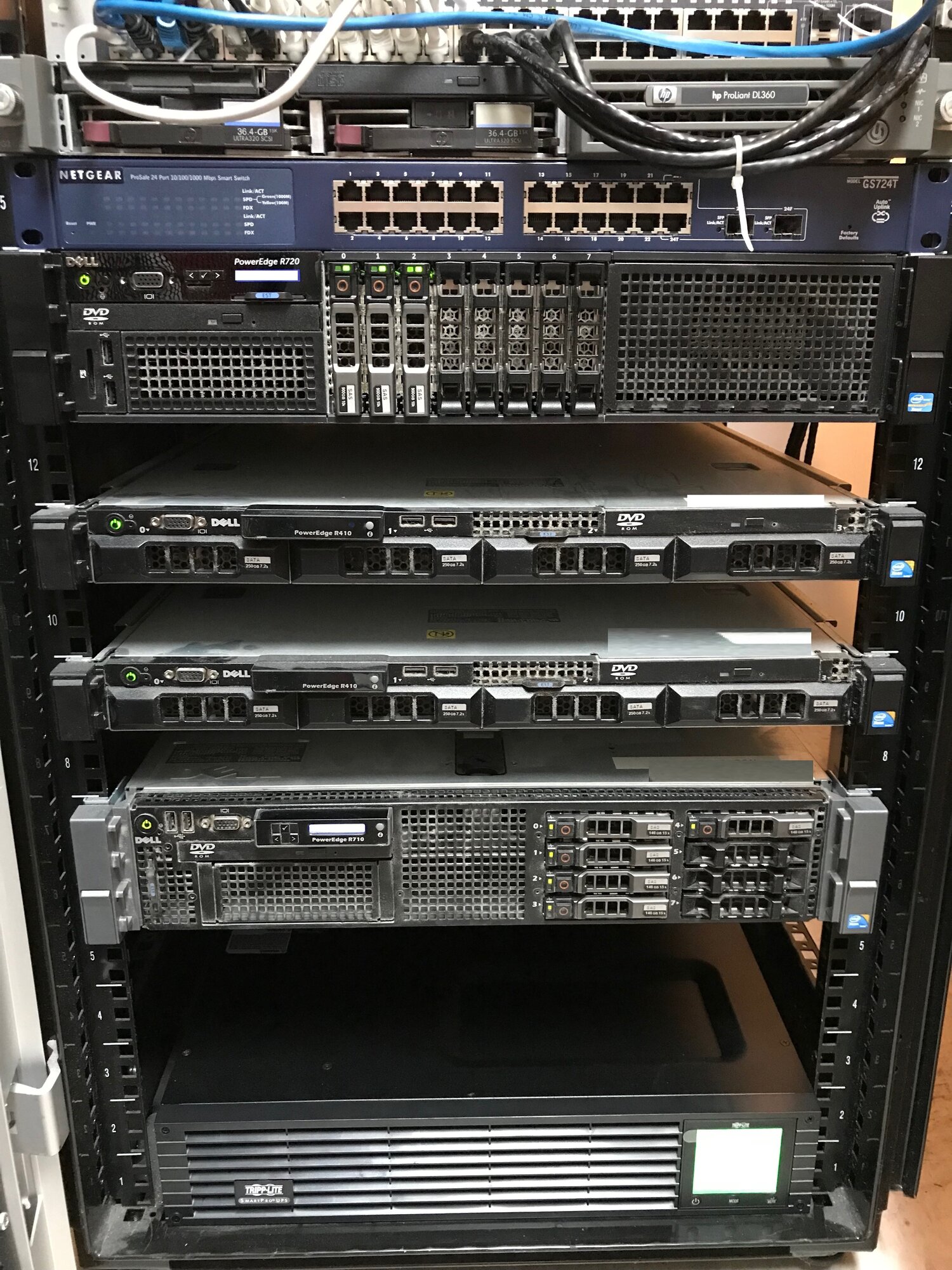

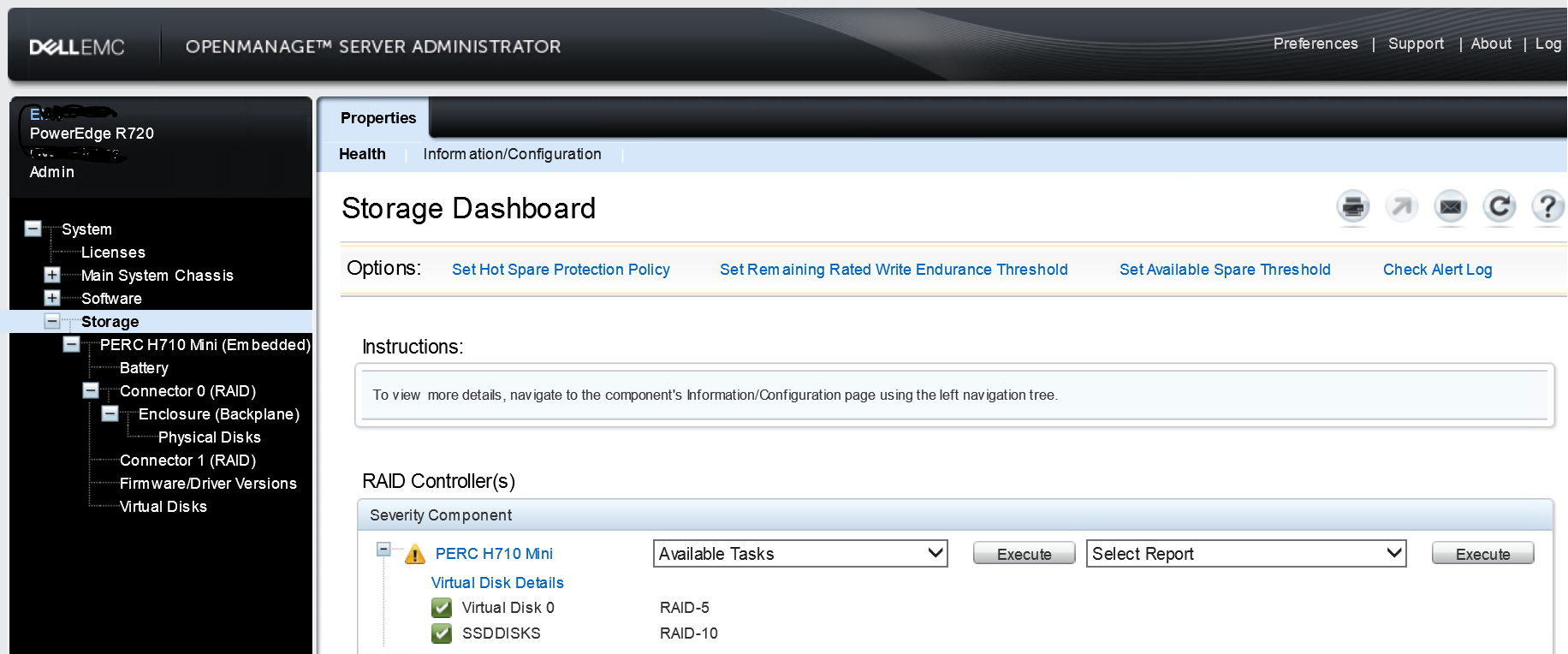

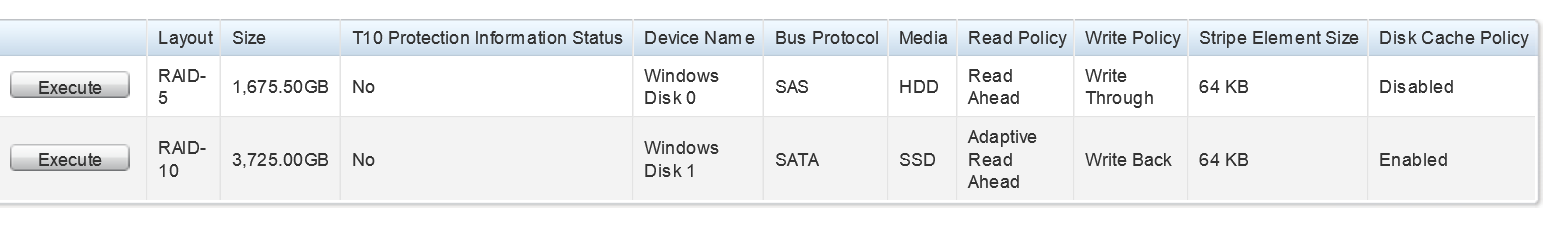

We have a Dell PowerEdge R720 that currently has three (3) 900GB 10K RPM SAS 6Gbps 2.5in hot-plug hard drives in RAID 5, for a total of 1.8TB of usable storage. The server has 32GB of RAM and an Intel Xeon E5-2620 CPU @ 2.00GHz.
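As a quick sanity check on that 1.8TB figure, and to make it easier to compare other layouts, here is a minimal sketch of the nominal usable-capacity arithmetic for the common RAID levels. The function name `usable_capacity_gb` and the level labels are made up for illustration, and it ignores formatting overhead and the GB vs. GiB difference, so real numbers will come out a bit lower.

```python
def usable_capacity_gb(level, drives, drive_gb):
    """Nominal usable capacity in GB for an array of identical drives.

    Hypothetical helper for illustration only; ignores filesystem and
    controller overhead.
    """
    if level == "raid0":
        # Striping only: every drive contributes its full capacity.
        if drives < 2:
            raise ValueError("RAID 0 needs at least 2 drives")
        return drives * drive_gb
    if level == "raid5":
        # One drive's worth of capacity is used for parity.
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_gb
    if level == "raid6":
        # Two drives' worth of capacity is used for parity.
        if drives < 4:
            raise ValueError("RAID 6 needs at least 4 drives")
        return (drives - 2) * drive_gb
    if level == "raid10":
        # Mirrored pairs, striped: half of the raw capacity is usable.
        if drives < 4 or drives % 2 != 0:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return (drives // 2) * drive_gb
    raise ValueError(f"unsupported RAID level: {level}")


# Current setup: three 900GB drives in RAID 5.
print(usable_capacity_gb("raid5", 3, 900))  # 1800 GB, i.e. the 1.8TB above
```

Plugging in other drive counts and sizes shows quickly which combinations land in a given capacity range within the 8-bay limit.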

We would like to upgrade to drives with more storage and, ideally, more speed. We are looking for a total of 2.5-3.0TB of storage.

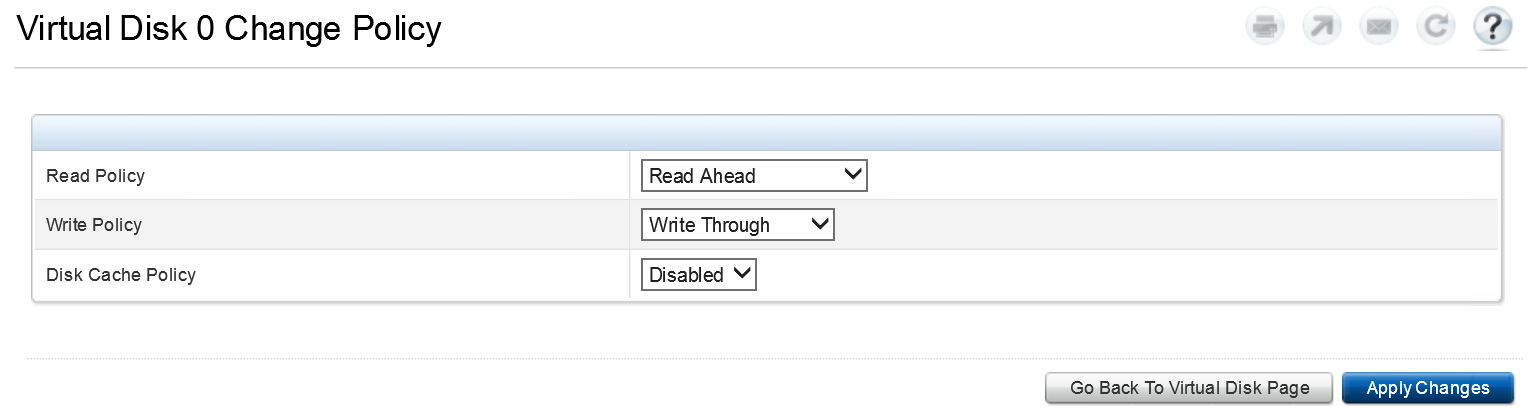

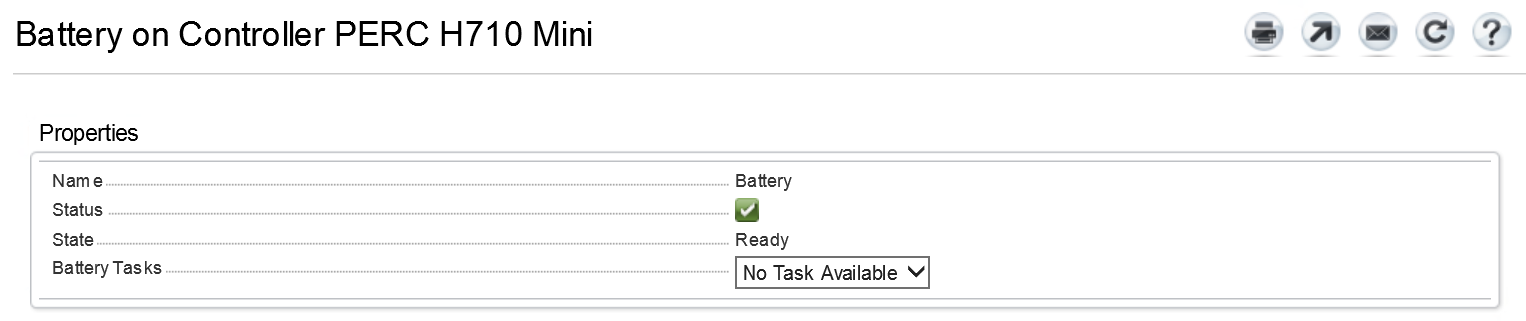

We have the 2.5" chassis, which holds up to 8 hard drives, and a Dell PERC H710 RAID controller.

I would like to add new drives at a reasonable price: the budget is somewhere between $400 and $4,000.

Any recommendations on which drives to get, how many of them, and in what RAID configuration?

Any help will be greatly appreciated.

Thanks!!!
