cageymaru

Fully [H]

- Joined: Apr 10, 2003
- Messages: 22,074

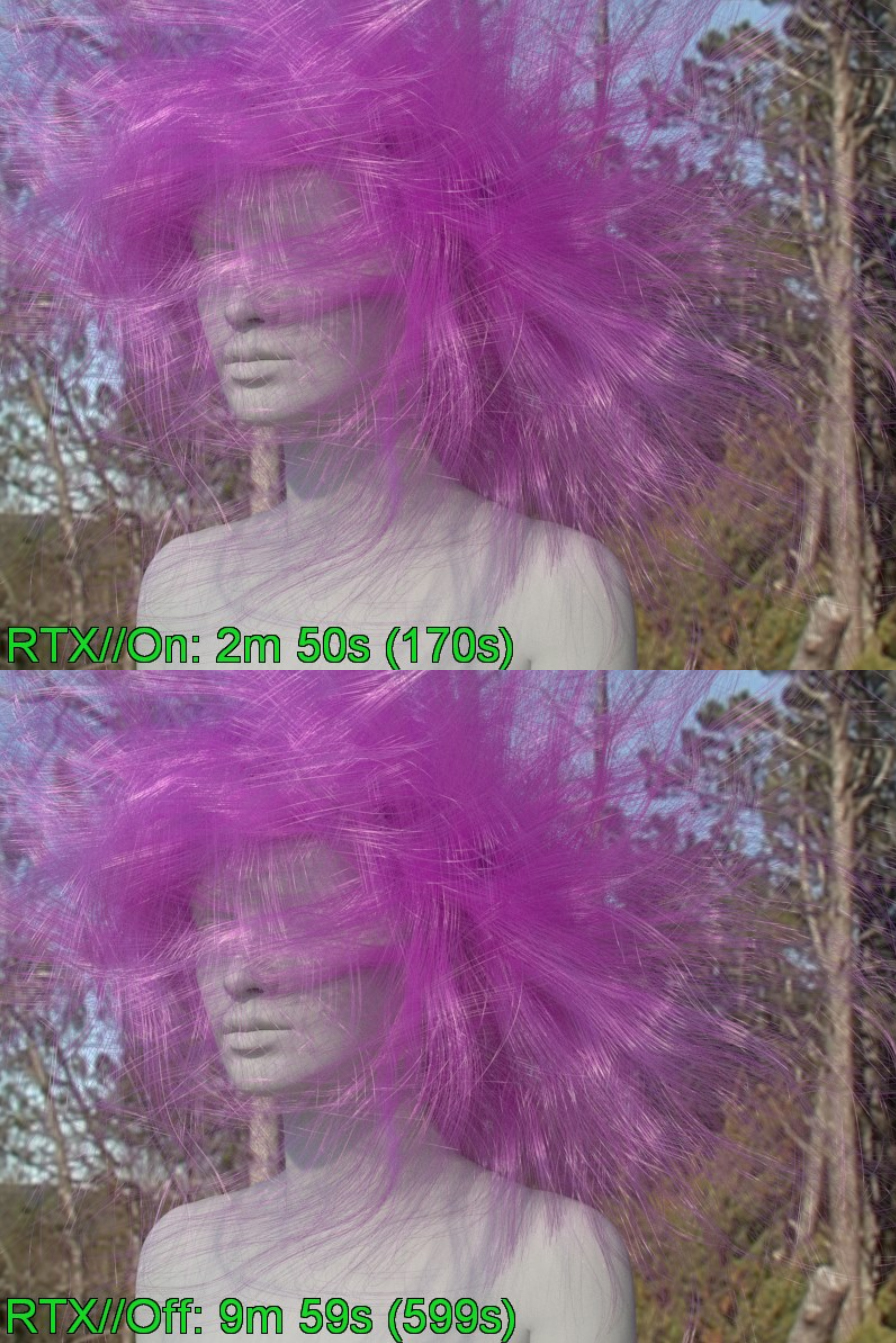

Gamers Nexus created a demonstration that illustrates the positives and negatives of RTX usage by utilizing the DXR implementation in Unreal Engine. They build environments using traditional graphics techniques that fake global illumination, then recreate them with real-time ray tracing. Viewers can see the trade-offs of DXR as noise rises and performance declines. This is the first video in a series that will delve into the topic.

Today's content fakes global illumination to illustrate the ups and downs of using RTX. The upside is obvious -- it's easier, allows better dynamic movement of objects while retaining GI, and is theoretically more accurate. The downside is performance, clearly, and noise. Our 100% ray-traced... "game" provides some early demos of DXR's implementation into Unreal Engine, which is presently (at writing) on its third preview build of the DXR implementation. Epic Games still has a lot of work to do on this front, but we can take an advance look at RTX shadows, reflections, global illumination, and more.

