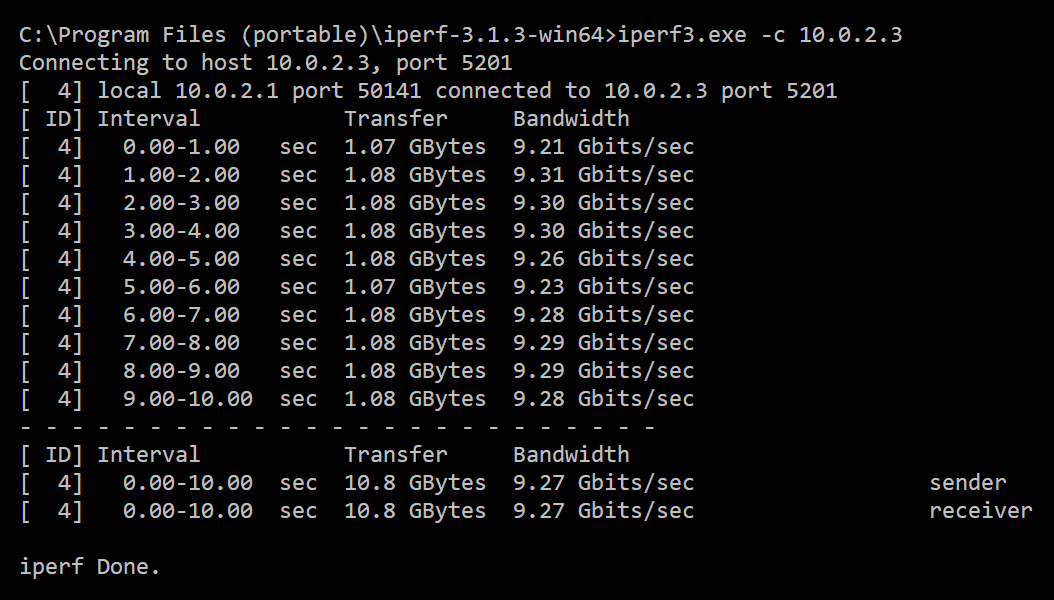

Ok so I decided to try something a little different: copying a file from one array to the other over the network. Locally on the server, copying a 32GB file from O: to R: took 1:29 at 368MB/sec. Running the same copy from my pc over the network took 1:08 at 480MB/sec, and I saw network activity past 4gbit up and down simultaneously. To verify this result, I transferred a few more 32GB files from my pc over the network, and both landed within the margin of error (1:14 and 1:10). Then I decided to verify the local result too and transferred a few more 32GB files there (burst plot files). There I got 1:27 and 1:29, again very similar.

So the average transfer rate locally was 368.67MB/sec, and doing the same thing from my pc over the network averaged 462.33MB/sec.
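For anyone who wants to sanity check numbers like these, here is a rough sketch that turns the copy times above into throughput. It assumes the file is exactly 32 GiB and that "MB" here means MiB, neither of which is confirmed by the copy tool, so the results will not match the reported averages exactly. The helper names `parse_mss` and `throughput` are made up for this example.

```python
# Assumption: the "32gb" file is exactly 32 GiB; the real size may differ.
FILE_BYTES = 32 * 1024**3


def parse_mss(text):
    """Convert an 'm:ss' string such as '1:29' to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def throughput(elapsed):
    """Return (MiB/s, Gbit/s) for one copy of FILE_BYTES in 'm:ss' time."""
    bytes_per_sec = FILE_BYTES / parse_mss(elapsed)
    return bytes_per_sec / 1024**2, bytes_per_sec * 8 / 1e9


# Times reported in the post.
runs = {
    "local": ["1:29", "1:27", "1:29"],
    "network": ["1:08", "1:14", "1:10"],
}

for label, times in runs.items():
    for t in times:
        mib, gbit = throughput(t)
        print(f"{label:8s} {t}  {mib:6.1f} MiB/s  {gbit:4.2f} Gbit/s")
```

Under that assumption the 1:29 local run comes out to about 368 MiB/s, and the 1:08 network run to a bit over 4 Gbit/s in each direction, which lines up with the network activity seen during the copy.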

During this time my pc was seeing about 14% cpu use from Teracopy, while the server would see up to 8%. It looks like I may be cpu limited, since my 8700k's single thread performance is greater than my ryzen 1700x's? I did not expect to hit this limit so easily.

This also leads me to believe that my internal ssd is actually my limiting factor right now, even though Crystal Disk Mark showed otherwise. Very peculiar. I suppose this gives me an excuse to look at an nvme drive again.

