I am supremely frustrated this morning. I have a very large file transfer to complete for a client, and at first everything was working smoothly.

Setup

1. Server with Gigabit Network Adapter

2. Western Digital MyCloud PR4100

BOTH ends of the connection have a gigabit capable adapter.

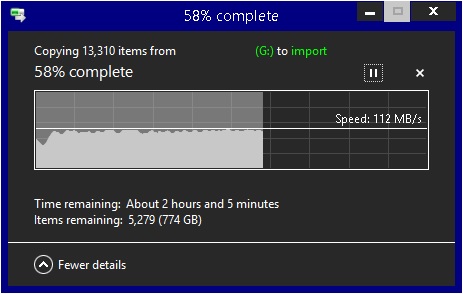

When I first begin the file transfer, I get a nice steady 110 MB/s transfer rate.

Then, after some time, it drops to 35 MB/s.
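In case it helps anyone reproduce this, here is a rough sketch of how the drop could be timed: copy a file in chunks and log the average rate per interval. The function name `copy_with_rate_log` and its parameters are made up for illustration, and the number it reports covers the whole path (source disk read, network, NAS write), not the network link alone.

```python
import time

CHUNK = 4 * 1024 * 1024  # read/write 4 MiB at a time


def copy_with_rate_log(src, dst, interval=5.0, report=print):
    """Copy src to dst, reporting the average MB/s for each interval.

    Hypothetical helper: src and dst are plain file paths, e.g. a local
    file and a path on a mounted NAS share. Returns the list of rates.
    """
    rates = []
    window_bytes = 0
    window_start = time.monotonic()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(CHUNK)
            if not chunk:
                break
            fout.write(chunk)
            window_bytes += len(chunk)
            now = time.monotonic()
            elapsed = now - window_start
            # Guard against a zero-length window on coarse clocks.
            if elapsed >= interval and elapsed > 0:
                rate = window_bytes / elapsed / 1e6  # decimal MB/s
                rates.append(rate)
                report(f"{rate:.1f} MB/s")
                window_bytes = 0
                window_start = now
    # Note: a partial final window is not reported.
    return rates
```

Logging the rate with a timestamp should show whether the drop happens after a fixed amount of data (which would point toward a cache or buffer filling up somewhere) or after a fixed amount of time, though that is only a guess until measured.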

I have configured a software RAID array on Server 2012 (which is working without issue).

The physical link is an unmanaged Gigabit Ethernet switch.

What should I try? Flow control adjustment? Jumbo frames? This is unbelievably frustrating.
