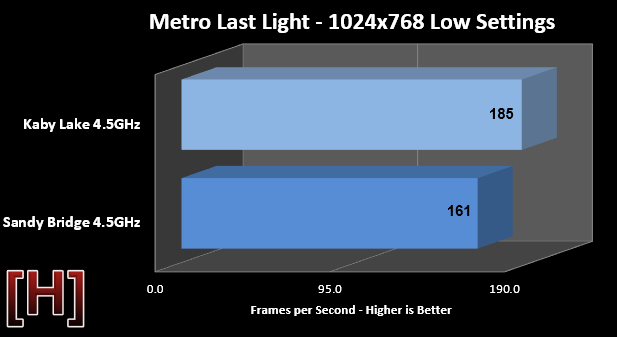

Seems like Kaby Lake really only makes the case for an upgrade if you're still on a 1st Gen i7.

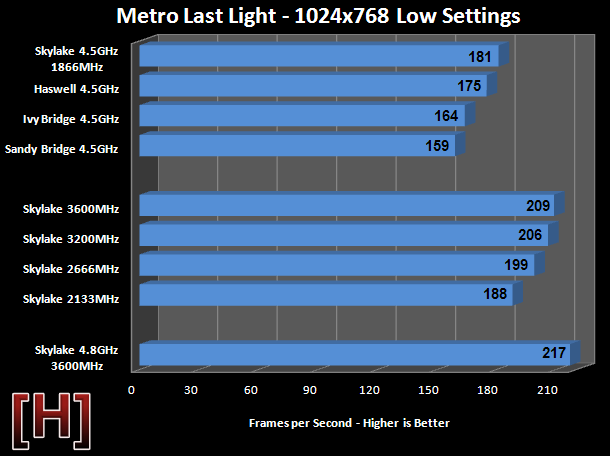

The IPC gain over that chip will probably be 30% or more.

Wish it were possible to get up to 4.5GHz on this old beast. I'm using around 1.4V just to get stable at 4GHz lol.