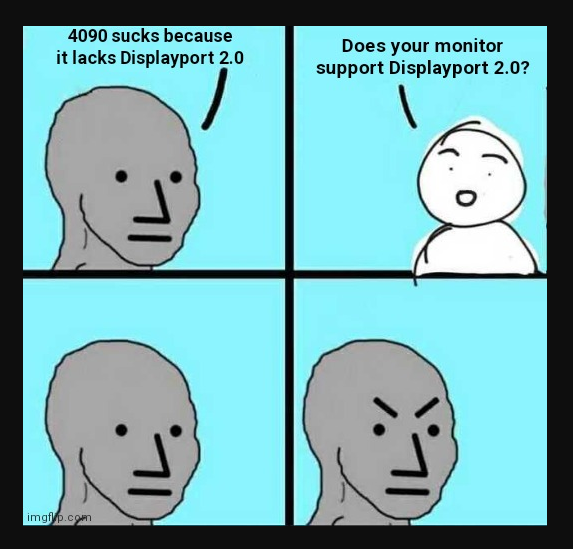

Yup. My plan was a 3080 and a 6800XT for my two main systems (since both run at 1440p). One would handle ray tracing, and since performance is almost identical in raster workloads, I'd have the alternative if something just ran better on AMD, or if driver bugs kept one from working well. But I did grant the possibility of RT being important, so with the two otherwise equal, I pivoted to a 3080 for my main gaming box. I'd have been OK if I'd been forced to stick with AMD, though, and I don't regret having the 6800XT in my workstation either.

No, I'm not. If AMD can produce a faster card with feature parity, I'd buy that just as easily. I've had many AMD cards over the years as well as NVIDIA. I'm simply betting that it's more likely AMD makes a card just fast enough to force NVIDIA to drop its pricing a bit. Had the mining demand not been so strong, the 6900XT's $500 lower MSRP might have forced NVIDIA to drop the price of the RTX 3090 or the subsequent 3080 Ti. Not everyone cared about ray tracing performance, but it might have gone that way depending on how much weight individuals gave to it. That said, I think a lot of people think more like I do when shopping at the top of the stack.

In fact, the only time I've REALLY cared is for VR - there are still some micro-stutter issues with AMD (or were at the time) so that HAD to be Nvidia.

And for me it was Control. I wanted to play that with RT. REALLY wanted to play that with RT. But that's the only game I've played in the last two years where it mattered.

Again, at 4K the small percentage difference in some games is more pronounced than at lower resolutions, where you've essentially got frames to spare. That said, I mostly agree with you. AMD didn't falter that much on the 6900XT given its much lower MSRP, but the poor ray tracing performance absolutely killed it at the high end for buyers like me. Like it or not, individual games often sell GPUs. At the time, that game was Cyberpunk 2077.

Also agreed.

That's why I was talking about AMD competing aggressively on price. When they get it right, AMD can sometimes offer more performance for the money in the mainstream segments where most people buy their cards.
