Navigation

Install the app

How to install the app on iOS

Follow along with the video below to see how to install our site as a web app on your home screen.

Note: This feature may not be available in some browsers.

More options

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

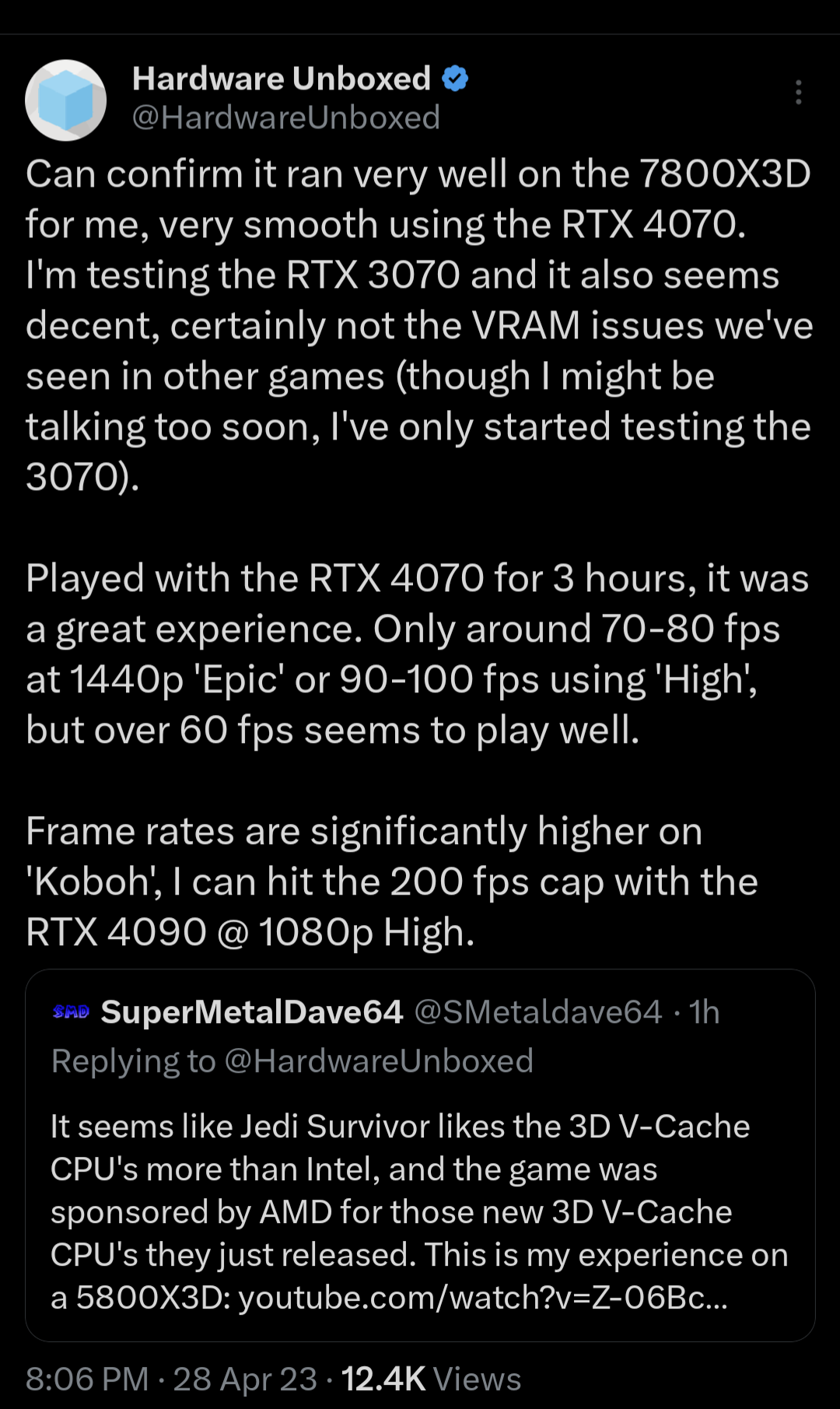

Jedi Survivor is the best showcase of a looming problem for PC players

- Thread starter Lakados

- Start date


Deleted member 312202

Guest

> People who buy expensive cards aren't smart people. The complaint is that your graphics card, which costs as much as a used car, can't play your new $60 game at max settings with at least 60 fps. And that's without ray tracing, of all things, which would murder the frame rate even more. God forbid you guys grew up in the 90's and early 2000's, when expensive graphics cards would get spanked by the latest new title. This was the norm back then. What wasn't the norm was spending $1,600 on a graphics card.

Sorry, but calling people "dumb" for buying what they want doesn't really make sense, let alone being offensive. I have a lot of respect for people here (you included), but man, saying something like this just isn't right. I grew up in the 90's (born in the 70's), and yes, I remember the days of new games causing high-end cards to buckle under the latest graphics. Fortunately I've come a long way in life and can afford my high-end graphics card, which by the way plays this game just fine with everything maxed out, so stop generalizing the population here.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,534

> Sorry, but calling people "dumb" for buying what they want doesn't really make sense, let alone being offensive. I have a lot of respect for people here (you included), but man, saying something like this just isn't right. I grew up in the 90's (born in the 70's), and yes, I remember the days of new games causing high-end cards to buckle under the latest graphics. Fortunately I've come a long way in life and can afford my high-end graphics card, which by the way plays this game just fine with everything maxed out, so stop generalizing the population here.

You must have missed lots of his posts lately; gross generalizations are what he does best.

Legendary Gamer

[H]ard|Gawd

- Joined

- Jan 14, 2012

- Messages

- 1,590

> People who buy expensive cards aren't smart people. The complaint is that your graphics card, which costs as much as a used car, can't play your new $60 game at max settings with at least 60 fps. And that's without ray tracing, of all things, which would murder the frame rate even more. God forbid you guys grew up in the 90's and early 2000's, when expensive graphics cards would get spanked by the latest new title. This was the norm back then. What wasn't the norm was spending $1,600 on a graphics card.

I have my bouts of retardation, to be certain. Some of 'em on here!

I like to play games at 4K at playable frame rates. That's why I buy expensive shit. And I replace the damn things relatively often. I think my 2080 Ti lasted the longest, as it's still occasionally in service. You need some serious horsepower to run games at max settings in 4K.

If that makes me stupid, so be it. Hope you have a nice weekend, I agreed with most of what you said. lol


Deleted member 312202

Guest

> You must have missed lots of his posts lately; gross generalizations are what he does best.

Yes, I guess I missed them.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,557

I run all the mods. Because the base game was designed for linear and limited play. I chafe running 4 mechs as a hard limit. I like fielding a company against the same or greater with real AI challenges.

The engine is riddled with bugs and issues that HBS never fixed.

Base game, sure, it's fine. The modded game is a turd without a ton of community DLLs and optimizations that basically rewrite the engine.

I run the Battletech Advanced 3062 mod, so yeah, it's a hog, but it's massively modifying the game as well. The game was never built to handle all of those mechs and units on the field. It's a cool mod though, and it really adds to the fun, but I also understand why it runs worse than the stock version of the game. At least the developer was cool enough to open the game up to mods, because that was not the case at release. Not sure you can hold the developer responsible for making mods play better; they made the base game play just fine for the most part. But yes, they never did fix the memory leak, and city maps still take forever to load.

III_Slyflyer_III

[H]ard|Gawd

- Joined

- Sep 17, 2019

- Messages

- 1,252

Gave this game an hour... I was hopeful at first; I was getting over 100 FPS at the beginning in the smaller areas and it was smooth... then things opened up and performance absolutely tanked... like 68 FPS on average with dips into the 50's. GPU usage was at like 50% tops... abysmal. I will be returning this game and will pick it up when it goes on sale in the winter. It is in no state to have been released. I am disappointed because this game looks like it will be fun, but I am not supporting these studios rushing things out at $70 a pop for sub-par performance. On Epic settings at 4K it still looked like garbage compared to other games I have that run at 144 FPS+ at 4K maxed.

This game is ripe for DLSS 3.0 and frame gen, but until someone hacks it into the game somehow, this game is toast.


Legendary Gamer

[H]ard|Gawd

- Joined

- Jan 14, 2012

- Messages

- 1,590

FYI guys, I disabled my E-cores and the Micro stuttering disappeared on my PC. It's been utterly fluid ever since. Rock solid at 60FPS (Limited by my display).

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,369

Nonsense. UE4 is lowest-common-denominator by design, to sell as many assets as possible to as many gullible developers as possible. Don't even get started on Unity; even "professional" games feel janky and unoptimized.

The proof is in all the asset-flip UE4/5 and Unity trash that floods Steam with impressive looking screenshots but absolutely horrendous performance and floaty/jank-AF input.

UE4 works great if your developers do a proper job. Earlier UE4 games ran much better for some reason; I am not sure if a later build introduced some issues that make it harder for developers to get it running smoothly. But most games on their own engines are stuttery messes these days. Most of the major AAA games over the past 2 years have had some stuttering problem: Halo Infinite, Cyberpunk, and Hitman 3 are some examples. It would be easier for me to list the AAA games that don't have stuttering issues, because there are only a few of them.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,807

> FYI guys, I disabled my E-cores and the Micro stuttering disappeared on my PC. It's been utterly fluid ever since. Rock solid at 60FPS (Limited by my display).

Going to guess none of the "pro reviewers" even thought about trying that out.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,534

> Going to guess none of the "pro reviewers" even thought about trying that out.

In all honesty, if you need to artificially gimp your CPU to get a game to run correctly, then that's a good reason to just return the game and wait. There's no excuse for that in a AAA game.

> Earlier UE4 games ran much better for some reason, I am not sure if a later build introduced some issues that make it harder for developers to get it running smoothly.

Could it be that people went from code to Blueprint over time?

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,369

> Could it be that people went from code to Blueprint over time?

I have no idea. I always thought blueprint was for quick prototyping and small teams. Can you really make a fully functioning game with just blueprint?

> I have no idea. I always thought blueprint was for quick prototyping and small teams. Can you really make a fully functioning game with just blueprint?

Yes.

Won't make any difference if you aren't bottlenecked by the game thread.
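A rough way to see why: in a pipelined engine, the slowest stage tends to set the frame time, so speeding up game-thread code (Blueprint or C++) only pays off when the game thread is that slowest stage. The Python sketch below assumes a simplified model in which the game thread, render thread and GPU overlap perfectly across frames; the helper names and millisecond figures are hypothetical, and real engines only approximate this.

```python
def estimated_frame_time_ms(game_thread_ms: float, render_thread_ms: float, gpu_ms: float) -> float:
    # Simplified pipelined model (assumption): stages overlap, so the slowest one sets the pace.
    return max(game_thread_ms, render_thread_ms, gpu_ms)


def to_fps(frame_time_ms: float) -> float:
    return 1000.0 / frame_time_ms


# Hypothetical GPU-bound frame: halving game-thread cost changes nothing.
gpu_bound_before = estimated_frame_time_ms(8.0, 6.0, 14.0)
gpu_bound_after = estimated_frame_time_ms(4.0, 6.0, 14.0)

# Hypothetical game-thread-bound frame: the same optimization now matters.
cpu_bound_before = estimated_frame_time_ms(20.0, 6.0, 14.0)
cpu_bound_after = estimated_frame_time_ms(10.0, 6.0, 14.0)

print(f"GPU-bound:         {to_fps(gpu_bound_before):.1f} -> {to_fps(gpu_bound_after):.1f} fps")  # 71.4 -> 71.4
print(f"Game-thread-bound: {to_fps(cpu_bound_before):.1f} -> {to_fps(cpu_bound_after):.1f} fps")  # 50.0 -> 71.4
```

In the second case the gain stops at the GPU's 14 ms, because once the game thread is faster than the GPU, the GPU becomes the bottleneck.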

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,939

> The obvious benefit is what most people talk about: 30-40% less storage space needed for at least some games.

Texture compression does increase performance, because it helps feed the GPU faster, directly. What the PS5 does isn't helping GPU performance; it just makes decompressing data faster, which results in faster loading times.

> Anyway, it's not magic, no... it's just superior to PC at the moment. Obviously PC has more powerful GPUs and CPUs, but being able to stream textures is an advantage for developers targeting the consoles.

What consoles do is cheaper, not superior. Unified memory is a cost-cutting measure. It sucks when both the GPU and CPU have to access the same memory, which can only feed data to one of them at a time. GDDR6 is worse for CPUs due to its higher latency. There's a reason why PCs use DDR for CPUs and GDDR for GPUs, and not one giant memory pool made of GDDR6 for both. You can't stream textures from the SSD fast enough to feed a GPU, even with Sony's Kraken compression. The most you can do is quickly evict old textures from RAM and quickly retrieve new texture data from the SSD, but games have been doing this since the PS1 era, and it won't result in faster frame rates. At most, you won't see textures missing in the game for a few seconds.

> And as Lakados has said, it doesn't even look like they are leveraging that tech in this case anyway. Lazy-ass developer, I guess. I understand games like The Last of Us having port issues... as they lean on the RAD compression stuff baked into the PS5. In this case it just sounds like they did a crap job.

Nobody knows what they've done to the game. Where is this info even from? Did the developers just come out and explain this?

> Sorry, but calling people "dumb" for buying what they want doesn't really make sense, let alone being offensive.

We all agree GPUs are overpriced, right? We also agree that the 4090 is the most overpriced GPU to date? People with 4090s act entitled to 60 fps in a new game. It is what it is.

> Fortunately I've come a long way in life and can afford my high-end graphics card, which by the way plays this game just fine with everything maxed out, so stop generalizing the population here.

> I like to play games at 4K at playable frame rates. That's why I buy expensive shit. And I replace the damn things relatively often. I think my 2080Ti lasted the longest as it's still infrequently in service. You need some serious horsepower to run games at max resolution in 4K.
>
> If that makes me stupid, so be it. Hope you have a nice weekend, I agreed with most of what you said. lol

I have a Vega 56 and it's still doing just fine, but my standards are low, like 1080p low. If you paid $1,600 for a graphics card and you're upset that it isn't playing the latest titles at max settings in 4K, that's because you feel like you're not getting your money's worth and you overpaid. All GPUs have a certain sweet spot when it comes to price and performance, and at some point you're paying more for prestige than longevity. It wouldn't shock me if a Radeon 7900 XTX performs better in this game than a 4090, just because it's an AMD-sponsored game.

schoolslave

[H]ard|Gawd

- Joined

- Dec 7, 2010

- Messages

- 1,293

> Could it be that people went from code to Blueprint over time?

Unlikely, as it's usually the other way around.

> Could it be that people went from code to Blueprint over time?

Basically... C programmers are not exactly cheap, and game studios burn through developers like a puppy mill. Almost all the guys I know who were doing game development over the last 15+ years are now either in positions where they barely code, or have left and are far happier (and making more money) doing shit like pouring concrete slabs.

A former game dev I worked with some 20 years ago, at a very infamous studio in Vancouver, recently decided to retire, and he now makes 2x as much doing custom acid-etched concrete work. He got pissed off, literally asked the team redoing the company lobby if they needed help, they hired him, and he quit. He's super happy now. For the past 4 years or so he had been a right miserable bastard to play with, so D&D nights on Discord go way smoother now.

This is purely anecdotal, but C, C++, and C# programmers are aging out or being promoted out, and the scratch generation of drag-and-drop coders are taking over.

> This is purely anecdotal, but C, C++, and C# programmers are aging out or being promoted out, and the scratch generation of drag-and-drop coders are taking over.

Or the game dev industry is well known as a shit show.

> No, it's the best, most optimized, easiest to use, and most documented engine available.
>
> It's also the most popular engine by far, so a lot of games use it. And among those many games there are plenty of poorly made ones.
>
> The only engine comparable to Unreal is Unity. Unity isn't nearly as optimized. But a good developer using Unity will have a better optimized game than a bad developer using Unreal Engine.
>
> Basically, these are the Jedi Survivor developers
>
> https://media.giphy.com/media/cqurdLEk6zlmg/giphy-downsized-large.gif

Unity for AAA gaming is a thing now, but it's a fairly recent development. Standard Unity development is almost entirely single-threaded and relies heavily on coroutines to do anything remotely resembling parallelism. (It isn't parallelism, it only looks like it: a coroutine hands the main thread back to the engine, which comes back to it later to continue the workload.) You can still make a AA or AAA game with it, but your code has to be very light on CPU usage; most of the intensive work has to be done on the GPU in the form of compute shaders.
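The coroutine point can be illustrated outside Unity. In Unity itself this is C# (an `IEnumerator` method that does `yield return null` to resume on the next frame); the Python generators below are only an analogy under that assumption, and `sliced_job` and `run_frames` are hypothetical names. Every job runs on the single main-loop thread and merely takes turns, which is why coroutines spread work across frames but never across CPU cores.

```python
from collections import deque
from typing import Deque, Generator, Iterable, List

Job = Generator[None, None, None]


def sliced_job(name: str, steps: int, log: List[str]) -> Job:
    # Does one slice of work per frame, then yields control back to the loop,
    # roughly analogous to a Unity coroutine doing `yield return null`.
    for i in range(steps):
        log.append(f"{name} step {i}")
        yield


def run_frames(jobs: Iterable[Job], max_frames: int, log: List[str]) -> None:
    # Single-threaded "main loop": each frame resumes every live job once.
    active: Deque[Job] = deque(jobs)
    for frame in range(max_frames):
        if not active:
            break
        log.append(f"frame {frame}")
        for _ in range(len(active)):
            job = active.popleft()
            try:
                next(job)           # run this job until its next yield
                active.append(job)  # still has work: revisit next frame
            except StopIteration:
                pass                # job finished: drop it


log: List[str] = []
run_frames([sliced_job("A", 2, log), sliced_job("B", 3, log)], max_frames=10, log=log)
print(log)
```

The log shows A and B interleaving frame by frame; nothing ever runs at the same time, which is the limitation the data-oriented Jobs approach is meant to address.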

The paradigm is now shifting, with Unity DOTS reaching version 1.0 as of February. It's moving from OOP (standard development) to a more data-oriented approach. You should start seeing a lot more parallelism with the Jobs system. There is a catch, though: this is a fundamental departure from the way you would typically make games in Unity. It's a bit more complicated and overall a sea change. So yeah, in the near future you will see highly optimized, AAA-quality games from Unity devs (I'm actually building a DOTS program for work now). DOTS has been around in earlier versions for years prior to 1.0, but an official release means higher stability and more support.

As for the devs of Jedi Survivor, I don't think they're "bad" by any means, I think they chose a method of texture streaming that works exclusively for the new consoles, and didn't have the time to deal with a separate method for PC. I don't see why they can't just bifurcate the release. Release on consoles first, then release on PC 4-6 months later, people will still buy it.



Deleted member 312202

Guest

> We all agree GPU's are overpriced right? We also agree that the 4090 is the most overpriced GPU yet to date? People with 4090's act entitled that they should get 60 fps in a new game. It is what it is.

This makes no sense, honestly. We buy the hardware so we can get the extra FPS, just like we did in the 90's and early 2000's. Things have gone up in price, as we all know, so complaining about it won't help. I didn't choose poorly, as I enjoy my card and easily get over 60FPS in most new games, including the one we are discussing. Moving on....

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,579

> This is purely anecdotal, but C, C++, and C# programmers are aging out or being promoted out, and the scratch generation of drag-and-drop coders are taking over.

While true, I really don't think these are language proficiency issues. It is a design based on cost assumptions that do not apply on PCs.

> FYI guys, I disabled my E-cores and the Micro stuttering disappeared on my PC. It's been utterly fluid ever since. Rock solid at 60FPS (Limited by my display).

No, no...sir. Sir.. sir... it is because of Win10. EA has said it is because of win10. Win10 is bad! Trust in EA!

/s

Oh, and please update to the latest patch to continue the game.

https://www.wepc.com/news/ea-appear...-progression-until-latest-patch-is-installed/

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

> We all agree GPU's are overpriced right? We also agree that the 4090 is the most overpriced GPU yet to date? People with 4090's act entitled that they should get 60 fps in a new game. It is what it is.

> I have no idea. I always thought blueprint was for quick prototyping and small teams. Can you really make a fully functioning game with just blueprint?

Yeah... you can. You're not supposed to, but you can.

You are supposed to use it to bang things out so you can circle back around and do the cleanup and optimization. The idea is that you have the trained monkeys bang out the bulk of the mind-numbing work with pre-approved and tested snippets, then have the experienced team follow behind to make sure all the parts fit together properly.

It doesn't help that Blueprint and similar tools have gotten a lot "better" while dealing with low-level APIs has gotten more complex, and the people experienced enough to do that sort of work retire or move on to less stressful, better-paying pastures.

So blueprint lets you get something functional out faster than ever but cleaning it up is more complicated than ever, that’s where some AI tools are being introduced but troubleshooting their output is interesting from what I’ve been told.

Basically programming talent is moving on and fewer good programmers are interested in building games because they have more options than ever before.

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,579

> Yeah... you can. You're not supposed to, but you can.
>
> You are supposed to use it to bang things out so you can circle back around and do the cleanup and optimization. The idea is that you have the trained monkeys bang out the bulk of the mind-numbing work with pre-approved and tested snippets, then have the experienced team follow behind to make sure all the parts fit together properly.
>
> It doesn't help that Blueprint and similar tools have gotten a lot "better" while dealing with low-level APIs has gotten more complex, and the people experienced enough to do that sort of work retire or move on to less stressful, better-paying pastures.
>
> So blueprint lets you get something functional out faster than ever but cleaning it up is more complicated than ever, that's where some AI tools are being introduced but troubleshooting their output is interesting from what I've been told.
>
> Basically programming talent is moving on and fewer good programmers are interested in building games because they have more options than ever before.

We're seeing "minimum viable product" more and more. Does it run? Yes. Does it run well... no.

To your point: what were once called "prototypes" are now shipping, by all outward indications.

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,939

> This makes no sense honestly. We buy the hardware so we can get the extra FPS just like we did in the 90's and early 2000's.

Nobody paid $1,600 for a graphics card in the late 90's and 2000's. It wasn't a problem for someone to hop from one graphics card to another, because the cost wasn't that high. You're not future-proofing all that much by paying that much for a graphics card. Just a reminder, but by the time the Xbox 360 was released, graphics card prices had started to go up, and this is why a lot of people left PC gaming for consoles. It's also why the whole "Can it run Crysis?" meme started.

> Things have gone up in price as we all know so complaining about it won't help.

It absolutely does help, as Nvidia is already thinking of lowering prices of unreleased graphics cards due to the backlash from consumers. If you complain and still buy their products, then yes, it's a waste of time. A vocal minority is very powerful.

> I didn't choose poorly as I enjoy my card and I easily get over 60FPS in most new games including the one we are discussing. Moving on....

Assuming you're talking about the RTX 2080 Ti, it's gonna play games fine because most games up until now aren't that demanding. The Vega 56 I have also plays games just fine, as long as I don't go past 1080p. It was good enough that Google was planning to use it to power their cloud gaming service, before that failed. Keep in mind that the 2080 Ti probably can't do ray tracing without DLSS, and probably not at 1440p. You can't use DLSS 3.0 because of Nvidia, but you can use FSR, even in Jedi Survivor. The 2080 Ti wasn't all that much faster than a 1080 Ti, which is why a lot of people held onto theirs. There is a much bigger gap between the 980 Ti and 1080 Ti than there is between the 1080 Ti and 2080 Ti. If you're wondering, I bought my Vega 56 used for $220 in 2019 after the crypto market crashed and have been using it since. I was just playing Cyberpunk 2077 on it through Linux, with a mix of high and ultra settings, at 1080p of course.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

> We're seeing "minimum viable product", more and more. Does it run? Yes. Does it run well ... no.
>
> To your point - what were once called "prototypes" are now shipping, by all outward indications.

People have an overly optimistic view of the past when it comes to games. I'm old enough to remember buying plenty of games in both the 8-bit and 16-bit eras on $50-$60 cartridges that were complete buggy dogshit. No patches, just garbage. These were 'AAA' titles. It's always been like this. At least now we have the internet.

I identify as stupid af, having bought a 4090 (upgraded from a 3090, so extra dumb), and I'm currently downloading this game with EA Pro. Once it downloads I'm gonna find out how entitled I am.

I loved the first game so hopefully this kicks ass. For $15 for a month idaf as long as it's as cool as the first one and I played that on an Xbox One X.



Deleted member 312202

Guest

> I identify as having bought a 4090 and I'm currently downloading this game with EA Pro. Once it downloads I'm gonna find out how entitled I am.

You'll enjoy it just like I am. It doesn't matter what some "others" who are stuck back in 90's pricing say.

> FYI guys, I disabled my E-cores and the Micro stuttering disappeared on my PC. It's been utterly fluid ever since. Rock solid at 60FPS (Limited by my display).

Something else to try in that regard, if you haven't, is to leave the E-cores on but run the Balanced power plan instead of High Performance. I hit an issue with that in Metro Last Light Redux, of all things. I'd never played it; it had been in my library for years. I'm playing it on a 13900K and a 3090, so super overkill, and everything was running great. Then one day I fired it up and was getting frame drops. I'm playing it on my TV, which isn't set up for VRR right now (long story, need a new receiver), so it's vsynced to 60Hz and the drops are noticeable when they happen. WTF? It was running fine before, and this is an older game. Then, looking around, I realized I had switched over to High Performance mode for Nuendo and left it there instead of switching back to Balanced. When I switched back, it was nice and smooth again.

You would think High Performance would simply perform better than Balanced, but I think that with hybrid CPUs it may lead to threads getting stuck on the E-cores when they shouldn't be.

Not sure if this game is the same, but something to try if you haven't. It surprised me when I discovered it.

> People have an overly optimistic view of the past when it comes to games. I'm old enough to remember buying plenty of games in both the 8-bit and 16-bit eras on $50-$60 cartridges that were complete buggy dogshit. No patches, just garbage. These were 'AAA' titles. It's always been like this. At least now we have the internet.

Rose-colored glasses about the past are a big issue, and games are no exception. People should load up StarFox or FF7 in an accurate emulator sometime and cringe at the 15-20fps you get to experience.

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

> Assuming you're talking about the RTX 2080 Ti, it's gonna play games fine because most games up until now aren't that demanding. The Vega 56 I have also plays games just fine, as long as I don't go past 1080P. It was fine enough that Google was planning to use it to power their cloud gaming service, before it failed. Keep in mind that 2080 Ti probably can't do Ray-Tracing without DLSS and probably not at 1440p. You can't use DLSS 3.0 because Nvidia, but you can use FSR even in Jedi Survivor. It wasn't all that much faster than a 1080 Ti, which is why a lot of people held onto them. There is a much bigger gap between the 980 Ti and 1080 Ti, than there is for the 1080 Ti and 2080 Ti. If you're wondering I bought my Vega 56 used for $220 in 2019 after the crypto market crashed and have been using it since. I was just playing Cyberpunk 2077 on it through Linux, with a mix of high and ultra settings, at 1080P of course.

You say:

1) "2080Ti probably can't do Ray-Tracing without DLSS and probably not at 1440p" - It can, but it depends on the game and settings. It was part of the first generation of RTX cards, after all.

2) "You can't use DLSS 3.0 because Nvidia, but you can use FSR" - There are no cards that can do frame generation other than the RTX 40 series; AMD has no alternative, and FSR 2 is not as good as DLSS 2 anyway.

3) "It wasn't all that much faster than a 1080Ti" - The video you posted said that the 2080Ti was 33% faster at 1440p than the 1080Ti. An upgrade from my 2080ti to a 3090 or 6950XT is right around a 33% increase so I don't see why it matters; especially when NV cards really stretch their legs over AMD cards at higher resolutions...

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

I want to try this game so badly just to see how it runs but I'm not spending $70 on it..

> We're seeing "minimum viable product", more and more. Does it run? Yes. Does it run well ... no.
>
> To your point - what were once called "prototypes" are now shipping, by all outward indications.

Can we message somebody in charge about adding a reaction besides "Like", something like "I hate that this is true"?

So I am going to like your comment for putting it into words, even if the content is a scathing indication that we have collectively decided this behaviour is acceptable when we should be vehemently protesting it.

next-Jin

Supreme [H]ardness

- Joined

- Mar 29, 2006

- Messages

- 7,387

> Well, I can set my AMD graphics card to limit fps to 30, just did that. No Hz alteration necessary.
>
> Funny you say that about the slide show. My cousin plays competitively at 144 Hz in shooters online. I had him over when Star Wars Squadrons released and had the game running at 30 Hz on a 4K display in my front room on a GTX 1080, and he couldn't tell the difference even when he moved to my main rig at the time, with its 2080Ti, at 60 fps.
>
> It's all drama with the high Hz gamers all the time.

Unequivocally false. It depends on the person. I can't stand frame rates lower than probably 90; I can tell when it dips below that, and it aggravates the hell out of me. I'm also extremely sensitive to 1% and 0.1% lows. I was a competitive Counter-Strike player (among others) for years and have been a twitch-shooter type of player forever.

I literally haven't beaten TLoU on PC because it's also coded like trash. As a matter of fact, I haven't even gotten to Ellie yet. It's just frustrating to play, like it physically bothers me. I spend WAY more time in settings than I do playing most games because of this.

Also, 60fps on consoles is not the same as 60fps on PC. I'm not sure if it's because of KBM vs. controller or motion blur or what, but even with motion blur on and synced to 60Hz, it physically feels different on PC compared to consoles. 60 on PC feels god awful.
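For anyone unfamiliar with "1% lows": they summarize the slowest frames in a capture rather than the average, which is why they expose stutter that an average FPS number hides. Definitions differ between capture tools; the Python sketch below assumes one common definition (average frame rate over the slowest 1% or 0.1% of frames) and uses a made-up capture, so treat the numbers as illustrative only.

```python
from typing import Sequence


def average_fps(frame_times_ms: Sequence[float]) -> float:
    # Frames rendered divided by total elapsed time.
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def low_fps(frame_times_ms: Sequence[float], fraction: float) -> float:
    # Average FPS over the slowest `fraction` of frames (0.01 -> "1% low").
    # Assumed definition; some tools convert a percentile frame time instead.
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, int(len(slowest_first) * fraction))
    worst = slowest_first[:count]
    return 1000.0 * count / sum(worst)


# Made-up capture: 990 smooth 10 ms frames plus 10 hitches of 50 ms each.
capture = [10.0] * 990 + [50.0] * 10
print(f"average:  {average_fps(capture):.1f} fps")      # 96.2
print(f"1% low:   {low_fps(capture, 0.01):.1f} fps")    # 20.0
print(f"0.1% low: {low_fps(capture, 0.001):.1f} fps")   # 20.0
```

An average in the mid-90s looks fine on paper, while the lows show the hitches that someone sensitive to stutter actually feels.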

Bankie

2[H]4U

- Joined

- Jul 27, 2004

- Messages

- 2,469

> Unequivocally false. It depends on the person. I can't stand frame rates lower than probably 90; I can tell when it dips below that, and it aggravates the hell out of me. I'm also extremely sensitive to 1% and 0.1% lows. I was a competitive Counter-Strike player (among others) for years and have been a twitch-shooter type of player forever.
>
> I literally haven't beaten TLoU on PC because it's also coded like trash. As a matter of fact, I haven't even gotten to Ellie yet. It's just frustrating to play, like it physically bothers me. I spend WAY more time in settings than I do playing most games because of this.
>
> Also, 60fps on consoles is not the same as 60fps on PC. I'm not sure if it's because of KBM vs. controller or motion blur or what, but even with motion blur on and synced to 60Hz, it physically feels different on PC compared to consoles. 60 on PC feels god awful.

I guess I missed that post. 30Hz is freaking awful regardless of the resolution. On my Steam Deck I cringe at most games that are recommended to be set to 40fps. Anyone should be able to tell the difference between 30fps and 144Hz, even when moving the mouse cursor on the desktop.

> I want to try this game so badly just to see how it runs but I'm not spending $70 on it...

EA Play Pro. $15, play, finish, cancel.

> Unequivocally false. It depends on the person. I can't stand frame rates lower than probably 90; I can tell when it dips below that, and it aggravates the hell out of me. I'm also extremely sensitive to 1% and 0.1% lows. I was a competitive Counter-Strike player (among others) for years and have been a twitch-shooter type of player forever.
>
> I literally haven't beaten TLoU on PC because it's also coded like trash. As a matter of fact, I haven't even gotten to Ellie yet. It's just frustrating to play, like it physically bothers me. I spend WAY more time in settings than I do playing most games because of this.
>
> Also, 60fps on consoles is not the same as 60fps on PC. I'm not sure if it's because of KBM vs. controller or motion blur or what, but even with motion blur on and synced to 60Hz, it physically feels different on PC compared to consoles. 60 on PC feels god awful.

I gotta agree, this dude is unfortunately blind as all fuck if 30 vs. 60 is literally unnoticeable. I just don't see how that's possible.

Like, you can just straight up feel it immediately on a mouse. Even if you somehow can't see it, I don't see how it wouldn't be obvious the instant the mouse moves; it's terrible.

60 feels pretty smooth on a controller, but it's still not particularly great on a mouse. I agree that 90 is probably the threshold where it starts feeling decent.
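Part of why these thresholds feel so different is that frame time shrinks non-linearly as the frame rate rises. A quick Python conversion (1000 ms divided by the frame rate) for the rates mentioned in this thread:

```python
def frame_time_ms(fps: float) -> float:
    # Time available to produce each frame at a given frame rate.
    return 1000.0 / fps


for rate in (30, 40, 60, 90, 144):
    print(f"{rate:>3} fps -> {frame_time_ms(rate):4.1f} ms per frame")
```

Going from 30 to 60 fps cuts about 16.7 ms off every frame, while 60 to 90 cuts about 5.6 ms and 90 to 144 only about 4.2 ms. How noticeable each step is still varies from person to person, as this thread shows.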

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,939

> 1) "2080Ti probably can't do Ray-Tracing without DLSS and probably not at 1440p" - It can but it depends on the game and settings. It was the 1st RTX capable GPU after all.

Everything depends on game settings.

> 2) "You can't use DLSS 3.0 because Nvidia, but you can use FSR" - There are no cards that can do frame generation other than the RTX4k series; AMD has no alternative and FSR 2 is not as good as DLSS 2 anyway.

Could the RTX 20 and 30 series do DLSS 3.0? Most likely yes, but Nvidia is locking older-gen products out of it. DLSS is superior, but it's also superior at fragmenting users out of tech that could run on any GPU. FSR works on all cards, including yours and even older cards like mine.

> 3) "It wasn't all that much faster than a 1080Ti" - The video you posted said that the 2080Ti was 33% faster at 1440p than the 1080Ti.

He also said it's basically nothing over Pascal. It's closer to 35% faster at 4K, which is still basically nothing.

> An upgrade from my 2080ti to a 3090 or 6950XT is right around a 33% increase so I don't see why it matters; especially when NV cards really stretch their legs over AMD cards at higher resolutions...

For most people, 33% isn't enough to justify hundreds, or in your case thousands, of dollars, hence the poor GPU sales. If I'm gaming at 40fps and buy a GPU that's 33% faster, then I'm running it at about 53fps, for what is hundreds of dollars. For that kind of increase I'm better off waiting for a proper upgrade. For me to get a proper upgrade I would have to buy a 6700 XT, which is cheap, but at best it offers double the frame rate of my Vega 56, for what is now $350 new and under $300 used on eBay. If I'm more patient, I wouldn't be surprised if Jedi Survivor's poor frame rate turns out to be due to Denuvo. Remember that whole fiasco with Resident Evil 8 and DRM killing performance? It wouldn't shock me if new games are terrible just because of the new Denuvo. Most of these new games haven't been cracked yet, so who knows what performance-killing effects Denuvo has on them?
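The upgrade arithmetic is multiplicative. Under the simplifying assumptions that the game is entirely GPU-bound and that a quoted percentage speedup applies uniformly, a 33% faster card takes 40 fps to about 53 fps, and getting from 40 to 60 fps needs a card about 50% faster. A small Python sketch with hypothetical helper names:

```python
def scaled_fps(current_fps: float, speedup_percent: float) -> float:
    # Assumes a fully GPU-bound game that scales linearly with the quoted speedup.
    return current_fps * (1 + speedup_percent / 100)


def required_speedup_percent(current_fps: float, target_fps: float) -> float:
    # Speedup needed to move from current_fps to target_fps, same assumptions.
    return (target_fps / current_fps - 1) * 100


print(f"{scaled_fps(40, 33):.1f} fps")                           # 53.2 fps
print(f"{required_speedup_percent(40, 60):.0f}% faster needed")  # 50% faster needed
```

In practice CPU limits, settings and resolution all bend this, so real gains are often smaller than the headline percentage.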

> For me to get a proper upgrade I would have to buy a 6700 XT, which are cheap but at best it offers double the frame rate of my Vega 56 for what is now $350 new and under $300 used on Ebay.

The problem with the 6700 XT is that even 12GB may not be enough for 1080p ultra going forward.

Maybe you should switch your target to a 16GB 6800 for $400.

(For example, this open-box ASRock is $420.)

https://www.newegg.com/asrock-radeo...4930048R?Item=N82E16814930048R&quicklink=true
