Is 10gb VRAM really enough for 1440p?

- Thread starter Floor_of_late

- Start date

MavericK

Zero Cool

- Joined

- Sep 2, 2004

- Messages

- 31,901

Yep, the question is how much more, you have only one example in your sources. I'd like to see a list of games and the differences in allocation vs actual usage. I'm also willing to believe there's some technical benefit from allocating more than you need ahead of time but of course we will never know any specifics.

How wide is the gap to justify this constant "allocation != usage" argument?

The crux of the issue is that it's hard to know because the way VRAM usage is presented is allocation versus actual need. I saw an article that tried to do some direct testing awhile back, but I can't recall the site that did it (will post if I can find it). IIRC it was on the order of a 1-2GB+ swing for allocated versus actual.

EDIT: This is not the source I originally mentioned, but it does show the difference:

Generally seems to be at least a 1 GB difference between allocated versus actual.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

Hmm, I highly doubt they are tightening their belts over this. It's more of a cash grab, playing consumers into thinking that. Look at the 3090. It's like Jedi mind tricks, making ya think they are operating at tight margins: since the 3090 is twice the price, that memory cost must be a big part of it. IMO, AMD just blew that away, as the curtain has been pulled back on that. Now Nvidia has to react by releasing higher VRAM count cards, but at what cost is to be determined... At the end of the day they still come out on top, fucking brilliant. smh

Really? I didn't even think it was a debate that the 3080 has lower margins than Nvidia is used to. AMD traditionally works with lower margins to stay relevant.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 947

Unless your game suddenly turned into a slideshow or crashed, you didn't run out of VRAM at 1440p on a 8GB or 10GB card.

Deleted member 143938

Guest

Doesn't nullify the need to discuss if it's enough for X amount of time so techies can make informed purchasing decisions.

(yes yes, crystal balls, future telling, full moons, no one knows how consoles will change the landscape and where devs are going. We know. Stop parroting this just to shut down discussions on a perfectly valid and interesting topic [not saying this to you specifically Sir Beregond])

As a SQL DBA, I can confirm this. You'd better define how much memory SQL can munch on, or it will munch it all, whether it needs it or not.

I do sizing for those for a living. If you don't right-size a SQL VM and just give it a bunch of RAM, it will use it all. So you increase it, and it "uses" that. If you check in the DB, 1/10th of it is actually in use - the MSSQL service just claimed all the RAM because it was there.

Sir Beregond

Gawd

- Joined

- Oct 12, 2020

- Messages

- 947

Oh trust me, I totally agree. Just look at my sig rig. I am feeling the age of my 980 hard these days. I just don't see 10GB being a problem for 1440p. 4K in the near future? Yeah totally. 1440p? Seems a stretch to me.

Denpepe

2[H]4U

- Joined

- Oct 26, 2015

- Messages

- 2,269

Obviously this is not a miracle solution, but every bit helps: Announcing the NVIDIA Texture Tools Exporter 2020.1 – NVIDIA Developer News Center

I'm not too bothered about the amount of memory on my 3080. I'm pretty sure these GPU companies know what they are doing, new consoles or not. A bit like gamers with 64 GB RAM machines because more RAM makes your PC run games faster.

Also, you can't really compare prices against the amount of memory between AMD and Nvidia, since they use different memory: the GDDR6X that Nvidia uses would certainly be more expensive than the more generic and easier-to-acquire GDDR6 that AMD uses.

BTW, over here AMD cards are a lot more expensive than their Nvidia counterparts due to their extremely low stock numbers.

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

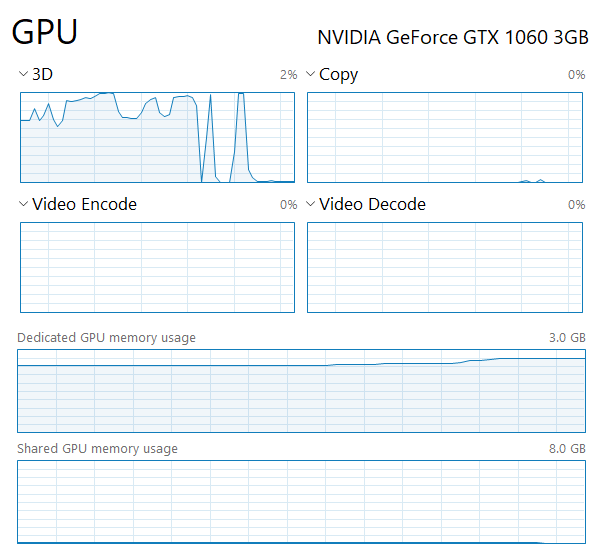

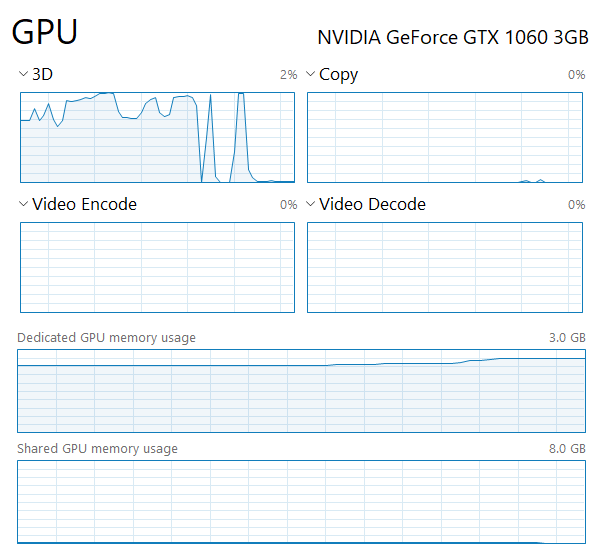

My 2 cents - look what happens when I play Beyond Two Souls, a 2013 PS4 game, on high quality settings at 1440p:

While not constant, the game often gets too close to my 3GB VRAM capacity and stutters for a good half to full second. If I play 1080p, VRAM stays around 2.6GB and the issue goes away. And that's on a revved up 2013 game! For any 2021 game, I wouldn't want anything less than 6GB as the absolute bottom-barrel minimum, 8GB should really be minimum for 1440p. Considering what's coming with the new console generation, I'd say 10GB will be sustainable for a couple years, after that 12GB will really be the only reasonable option. Personally, if there's 6GB/12GB options for the upcoming 3060, the 6GB is going to have to be really cheap for me to even consider it (as in $229 at absolute most), I'd much rather go with a 12GB for about $299 - though if it ends up costing $349, I might have to go with the 6GB option - I can't regularly justify spending more than $299 on a PC part exclusively for my gaming hobby, I got a house and a family to take care of. I'd consider AMD options that usually have more VRAM, but they have had even less availability than Nvidia lately and DLSS is a powerful attractive force (yes, I know AMD's super resolution is coming, but who knows when and how good it is, while DLSS is right here right now for the games I play).

Before it is a slideshow when running out of VRAM, as a severe case, you first see stutter, pauses, and stops periodically becoming apparent. My 5700 XT AE did exactly that with Ultra settings in FS 2020 in high-density cities such as New York or San Francisco. It is unmistakable and a poor gaming experience. I have not checked that lately, since MS continues to improve the simulator with updates. If 8GB at 1440p has issues on a 2020 game that is mostly CPU limited, then with RT adding VRAM requirements, higher resolutions, more detailed textures, and more assets in games, 10GB seems to me a little bit short for longevity. If a console game actually uses the 10GB dedicated to the GPU (it can also use more beyond that 10GB), how in the world will a 10GB card then give better quality without sacrificing something else, even with superior processing power? For most I do believe 10GB will serve them well, while some pickier users will see some limitations, and others will find the limitations that may come about unacceptable. 12GB I would think is the sweet spot, with 16GB just an added buffer where you never have to worry about VRAM running out before other constraints become apparent.

We're going to settle this with an old game: Grand Theft Auto 5. What makes this game unique is that it has a "Frame Scaling Mode". What this does is scale the resolution based on the value listed. You can set this item all the way up to 5/2, which is 2.5x native resolution. If you do this at 4K and max all settings, it will estimate a whopping 24GB VRAM utilization. It is so taxing, in fact, that if I attempt to set this on my RTX 3080, it locks up my computer.... then crashes the game.

So I did a few tests (in single player as Michael) to see how far I could push this.

At 4K, I set the Frame Scale mode to 2.5x and set every other setting to minimum. At this point, the game plays very well. 80-100 FPS is the average. Afterburner showing about 7.1GB VRAM usage... at minimum settings.

My next step was to turn up MSAA to 2x. This increases the VRAM utilization dramatically and greatly decreases framerate. We're now bumping right up against the 10GB VRAM allocation, and we're averaging 40-50 FPS. Ouch.

Next step is to turn up MSAA to 4x... game over. Framerate is so low I can't even accept the changes.

Next, a set of settings I tried (all settings minimum unless otherwise mentioned).

4K, Frame Scale Mode: 2/1 (2x), 4x MSAA. 38-45FPS with VRAM around 9.6 GB utilization.

4K, Frame Scale Mode: 2/1 (2x), 8x MSAA: Unable to apply, too slow.

4K, Frame Scale Mode: 3/2 (1.5x) 8xMSAA: 36-43 FPS with VRAM around 9.8 GB utilization.

This is the highest "playable" (I use that term loosely) resolution. At this point, I was able to increase every setting to max in the regular Graphics settings (left Reflection MSAA at none and left Grass at normal because Grass punishes framerate).

At 4K, Frame Scale Mode: 3/2 (1.5x) 8xMSAA, and the settings above, I got an average of 30-35 FPS with VRAM utilization bumping up against the 10GB VRAM limit. However, there was no stutter, which means VRAM utilization was fine.

TL;DR

GTA V is nearly UNPLAYABLE before you reach the VRAM limit on the RTX 3080 10GB. So, is 10GB vram enough? Because at this point... I'm thinking that it is; we run into a GPU bottleneck before we run out of VRAM.

Is there another, more modern game we can test this on?
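For a rough sense of why frame scaling plus MSAA eats VRAM so quickly, here is a back-of-the-envelope sketch. It is only an estimate under stated assumptions: the function name is made up for illustration, the frame scale is assumed to apply per axis (so 5/2 multiplies the pixel count by 6.25), and each sample is assumed to cost 4 bytes of color plus 4 bytes of depth. A real engine keeps many more buffers than this, so the in-game numbers will be higher.

```python
def render_target_bytes(width, height, scale=1.0, msaa_samples=1,
                        bytes_per_sample=8, per_axis=True):
    """Estimate the size of ONE multisampled color+depth target, in bytes.

    Assumptions (not taken from the game itself):
      - per_axis=True: `scale` multiplies width and height separately.
      - per_axis=False: `scale` multiplies the total pixel count instead.
      - bytes_per_sample=8: 4 bytes color + 4 bytes depth per sample.
    """
    pixel_factor = scale ** 2 if per_axis else scale
    pixels = width * height * pixel_factor
    return round(pixels * msaa_samples * bytes_per_sample)


if __name__ == "__main__":
    GIB = 2 ** 30
    # The 4K configurations tried in the post above.
    for scale, msaa in [(2.5, 1), (2.5, 2), (2.0, 4), (1.5, 8)]:
        size = render_target_bytes(3840, 2160, scale, msaa)
        print(f"4K x{scale} frame scale, {msaa}x MSAA: {size / GIB:.2f} GiB per target")
```

The point is just that the cost grows with the product of pixel count and sample count, so doubling MSAA at a 2.5x frame scale doubles an already very large buffer.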

Deleted member 143938

Guest

Is there a way to temporarily disable some VRAM? For example "remove" 2GB of VRAM from a 3080 to make it 8GB? (without hardware modification)

You got me thinking on that one.

I fired up a copy of Heaven Benchmark @ 4K, 8x MSAA, maxed. It used 3GB VRAM. I left that running minimized (low GPU utilization), and opened up GTA V at the most recent settings... sucker was averaging about 10 FPS with VRAM maxed.

So I went and closed Heaven Benchmark and alt-tabbed back into GTA V, and the framerate was good again (30-35 FPS).

So if you max the VRAM, there is a huge performance penalty, but at what cost? It is possible, but you have to really try in order to do it.

Nice experiment, conclusion I say is weak. Reason is as follows:

- You did not change the geometry complexity, the number of objects or polygons, or the memory they need. That stays the same regardless of resolution for the same settings.

- You did not change the number of shaders and the memory for them.

- You're artificially limiting performance with MSAA, which the ROPs and memory bandwidth of your card were not designed to handle at such resolutions, versus using more modern, more efficient AA techniques.

- You're stressing something different on the card as well: shading performance, or FP32 to be more exact. The triangles that need to be shaded remain the same from 480p to 8K; what changes is the number of pixels needed to color those triangles. 2.5x of 4K means 2.5x more pixels than 4K, and every additional pixel requires more compute operations, so you become compute/shading limited. This is not a realistic way to determine what pixel shading at a normal resolution will require or how it will perform.

Now I would like to see that experiment done with an RDNA2 card. My thinking is that FP32/shading performance starts to become limiting at higher resolutions, but I don't know for sure.

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

While it's a nice experiment, madpistol, there are 2 major flaws with it:

1) as noko said, you assume newer games will behave like GTAV. They most certainly will not.

2) your conclusion assumes that new GPU architectures will not change efficiency/performance wise in the future. They most certainly will.

So, I guess you could say that within the same 3000 generation, VRAM will not be an issue with GTAV. That conclusion will not hold any weight with a) a different game that processes memory differently and b) a different GPU architecture that renders differently.

Nice experiment, conclusion I say is weak. Reason is as follows:

Newer games will not behave like GTA V with those settings on RAM. RT adds 1-2GB+, mostly BVH but also compute shaders and the additional objects that have to be in the scene for RT. More objects, and more complex objects, push VRAM requirements up. More objects also entail more shaders and textures as games get more complex, requiring more VRAM. Higher-quality textures add further memory requirements. What breaks first is anyone's guess: RAM bandwidth? VRAM amount? FP32 or shading performance? ROPs?

Both of you are correct. That's the reason I asked if there is a more modern game where we can do a test like this. GTA V is an old game now, so it's not going to behave the same way as more recent titles will... but I don't know of another title where we can move the VRAM utilization up and down with more granularity in order to test the impact of VRAM utilization on a video card.

I'm open to suggestions.

Also, I would love to see the same test on an RX 6000 GPU (especially the RX 6900 XT) to see what impact resolution has on the 256-bit memory bus + Infinity Cache.

I also thought about using Cyberpunk 2077 to test VRAM limitations, but it does an amazing job at managing video memory. Seriously, I maxed the settings at 4K including Ray Tracing and disabling DLSS, and the game is COMPLETELY unplayable (4-5 FPS), but VRAM utilization is only at around 9.3 GB. Crazy.

That's the main reason I used GTA V to test VRAM utilization; it's terrible at managing VRAM utilization.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,534

Another reason not to use CP2077 is it's so new and needs a lot of work in the bugs and optimization department.

I think you are on the right track; we don't know exactly because future games are, well, obviously not out yet. If you have FS 2020, using Ultimate settings and flying over the San Francisco area while doing what you did before, with Heaven eating up some of that VRAM, might be interesting. Another would be Doom Eternal with the max settings at 4K with Heaven in the background.

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

For non-RT I’d agree on Doom Eternal, for RT Control might be a good choice. Cyberpunk seems like the most obvious go to, but I’d agree that it needs a bit more patching and work to be a reliable benchmark. That said I think this is a great effort madpistol and I hope I didn’t discourage you from it!

Doom Eternal stays pegged at around 9.5 GB VRAM at 4K maxed. I also have the framerate artificially capped at 115 (smooth as butter on my CX55).

I fired up Overwatch alongside it, just the menu screen, but the cool part is that it actually has a tool built in that shows you the amount of VRAM it's using under "Display Performance Stats". What I found is that at 1440p, 100% scaling, max settings, it uses just over 1GB VRAM. At this point there is no performance degradation in Doom Eternal.

HOWEVER, if I bump up the resolution in Overwatch to 4K, it bumps the VRAM utilization up to 1.5GB, and it becomes laggy as hell. Same with Doom Eternal. This tells me that the system is now paging GPU data to the System RAM, which means full VRAM saturation.

So we can basically conclude that Doom Eternal @ 4K maxed settings utilizes roughly 8.5-9GB VRAM.

Enjoy.

EDIT: Overwatch also has a resolution scaler built in. This means that we now have a fairly easy way to eat up between 500MB - 2GB+ VRAM on the GPU at will; we can artificially inflate GPU VRAM utilization.
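The reasoning in this post can be written down as a small bracketing calculation. This is only a sketch: the function name is hypothetical, the 10 GB capacity is the RTX 3080 figure used throughout the thread, and it assumes the game and the "filler" app are the only meaningful VRAM consumers (the desktop and other background apps are ignored).

```python
def bracket_game_vram(capacity_gb, filler_ok_gb, filler_bad_gb):
    """Bound a game's real VRAM need using a second app as adjustable filler.

    filler_ok_gb:  largest filler size at which the game still ran smoothly,
                   so game + filler_ok fits within capacity.
    filler_bad_gb: smallest filler size at which the game started to lag,
                   so game + filler_bad exceeds capacity.
    Returns (low, high) in GB.
    """
    if filler_bad_gb <= filler_ok_gb:
        raise ValueError("the lagging filler size must exceed the smooth one")
    low = capacity_gb - filler_bad_gb   # game needs more than this
    high = capacity_gb - filler_ok_gb   # game needs at most this
    return low, high


if __name__ == "__main__":
    # Figures from the post: ~1 GB of Overwatch was fine, ~1.5 GB caused lag.
    low, high = bracket_game_vram(10.0, 1.0, 1.5)
    print(f"Doom Eternal @ 4K maxed needs between {low} and {high} GB")
```

With a finer-grained filler (for example the Overwatch resolution scaler mentioned in the EDIT), the two bounds can be pushed closer together.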

undertaker2k8

[H]ard|Gawd

- Joined

- Jul 25, 2012

- Messages

- 1,990

The new consoles do not have 16 GB dedicated to video memory; that is a common misconception. They have 16 GB total memory for everything, including the OS.

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

Off the top of my head, I think ~2GB was reserved for OS and fast switching, leaving anywhere between 12-14GB available for games?

I've tested the game extensively. If you can lock down your manual run-throughs, the game is actually remarkably consistent with its frame rates.

III_Slyflyer_III

[H]ard|Gawd

- Joined

- Sep 17, 2019

- Messages

- 1,252

I'm not entirely sure. I let GPU-Z run in the background and log memory usage when I tested my 2080 Ti and my new 3090.

On my 2080 Ti, Cyberpunk, SOTTR and MSFS 2020 would max out at 10998 MB.

On my 3090, Cyberpunk used 13985 MB. This was at 4K, Psycho RT and DLSS at Quality settings.

I did not pick up a 3090 for the vram though, I picked it up because I wanted the best video card available. Just a bonus it has more vram, but the wider and faster bus is more important for gaming performance.
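If anyone wants to pull the peak figure out of a sensor log like this instead of eyeballing it, something along these lines should work. It is a sketch under assumptions: the log is treated as a comma-separated text file with a header row, and the column name `Memory Used [MB]` is a guess that may not match your GPU-Z version, so check the header of your own log and pass the right name.

```python
import csv
import io


def peak_memory_mb(log_text, column="Memory Used [MB]"):
    """Return the largest numeric value found in `column`, or None if empty.

    `column` is an assumed header name; header cells and values are stripped
    of surrounding whitespace before matching and parsing.
    """
    reader = csv.reader(io.StringIO(log_text))
    header = [cell.strip() for cell in next(reader)]
    idx = header.index(column)  # raises ValueError if the column is missing
    peak = None
    for row in reader:
        if idx >= len(row):
            continue  # skip blank or truncated lines
        try:
            value = float(row[idx].strip())
        except ValueError:
            continue  # skip cells that are not numbers
        peak = value if peak is None else max(peak, value)
    return peak
```

Usage would be something like `peak_memory_mb(open("sensor_log.txt").read())`, where the file name is just a placeholder. Keep in mind this still reports what the tool logs, which per the discussion above is closer to allocation than to actual need.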

It took some time, but finally found a game that maxes out my 24GB of VRAM on the 3090 (or at least allocates most of it). When I installed COD:CW, I installed the additional 80GB of 4K textures. Decided to play some MP rounds tonight... playing at 4k, full settings, max RT, HDR on and quality DLSS. Getting 100~120FPS solid the entire time, and it is using an astonishing 23499MB of vram!

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

80GB for just the extra textures??? Jeez. We really need new and improved compression algorithms.

Sir Psycho

Limp Gawd

- Joined

- May 29, 2020

- Messages

- 176

It took some time, but finally found a game that maxes out my 24GB of VRAM on the 3090 (or at least allocates most of it). When I installed COD:CW, I installed the additional 80GB of 4K textures. Decided to play some MP rounds tonight... playing at 4k, full settings, max RT, HDR on and quality DLSS. Getting 100~120FPS solid the entire time, and it is using an astonishing 23499MB of vram! Smooth as butter tho and looks amazing!!!

Cold War has allocated all available VRAM on every system/GPU I've run it on: 11GB on a 1080 Ti, 10GB on a 3080 (with the 4K pack), 6GB on a 1060 and 8GB on a 580. Every single time. 3440x1440, 1080p. Even 720p IIRC.

Makes sense as a lot of PCs lack CPU power to decompress textures while running the game at full speed. They might compress the transfer over the internet though.

To be fair, I was running my 3080 at around 70-80 FPS maxed 4K with DLSS @ Quality. It utilized all 10GB of VRAM... and as a bonus, I had GPU folding running in the background. Oops.

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

We have GPU compression and decompression these days... the CPU doesn't need to be tasked with this at all. Nvidia has had methods available to do this via CUDA for years now, I don't know what the AMD equivalent is but I'm sure there's one.

That's what the non-extra textures are for...

euskalzabe

[H]ard|Gawd

- Joined

- May 9, 2009

- Messages

- 1,478

???

With proper compression, these 4K textures don't need to be anywhere near 80GB in size.
