Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017

- Messages: 430

The best way to accurately emulate a CRT in the future would be to take a 1000Hz+ OLED or discrete-MicroLED display and simply simulate a CRT electron beam in software -- using a GPU shader (or FPGA) that uses many digital refresh cycles to emulate one CRT refresh cycle.

This is a much easier engineering path: 'enhance' existing MicroLED modules for a higher refresh rate. Many already PWM-refresh at 1920Hz, but they do it by repeating refresh cycles. An FPGA per module can commandeer them to show unique refresh cycles, using currently off-the-shelf technology, at prices cheaper than fabbing a SED/FED factory.

It's like playing a high-speed video of a CRT in reverse in real time on an ultra-high-Hz display. It temporally matches the zero-blur and phosphor-decay behaviours of a CRT!

It's possible to shift CRT electron beam control over to software, and simply use many digital refresh cycles to emulate one CRT Hz. I actually personally added a BountySource bounty on a RetroArch emulator item (BFIv3) that incorporates such an algorithm, since display refresh rates are now getting almost high enough to emulate the electron beam of a CRT to within human vision error margins.

Also, I'm crossposting what I posted in the Blur Busters Display Research Forum (Area 51). I am now cited in over 25 peer reviewed research papers [Google Scholar], so I'm a pretty authoritative voice in this territory. Early tests in CRT beam simulation have shown promising results, with the realism of CRT electron beam simulation improving as refresh rates keep going up -- 1000 Hz displays are no longer too far behind, and will arrive sooner than a FED/SED panel.

BFI is like pre-MAME-HLSL. CRT electron beam simulation in software is like "perfect CRT-like BFI on steroids", with a fade-behind rolling-scan effect that can technically be beam-raced with the raster of the GPU output (for subrefresh-lag CRT simulation).

A CRT electron beam simulator is like the temporal equivalent of the spatial MAME HLSL CRT filter.

More resolution = better spatials.

More refresh = better temporals.

Enough refresh rate = perfect low-Hz CRT simulation to the human eye.

I fear that FED/SED will be stillborn, since a 1000Hz display can be built from already-manufactured sample parts (e.g. retrofitting ultra-high-resolution MicroLED modules obtained in sample quantity, then modifying them with one FPGA chip per module to enable a higher refresh rate), rather than waiting out pandemic-slowed factory supply chains to try to manufacture a FED/SED or a new CRT tube. Computer parallelism can be utilized if you need to drive different high-Hz modules at lower resolutions, so one computer may have to output to a few computers that drive FPGA-modified MicroLED modules for a 1000Hz display, using today's already-manufactured computer parts waiting in warehouses -- at least for a prototype demonstration display. If you wanted to go lower resolution, it gets cheaper (e.g. low resolution 32x32 RGB LED matrices off Alibaba for $10 each are already refreshing at 1920Hz via a repeat-refresh technique, but can be FPGA-modded too for unique refresh cycles), but we're trying to reach high resolution, so you gotta buy high-resolution MicroLED modules instead. There are engineering paths of lower resistance.
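To put rough numbers on the low-resolution route, here is a minimal sketch of the tiling arithmetic. The 32x32 module size and the $10 price are from the paragraph above; the 320x240 target resolution is purely my assumption for illustration, and the cost ignores the FPGAs, power supplies and driving computers.

```python
import math

MODULE_PX = 32          # 32x32 RGB LED matrix (from the post)
MODULE_PRICE_USD = 10   # approximate price per module (from the post)


def modules_needed(width_px, height_px, module_px=MODULE_PX):
    """Number of square LED modules needed to tile a width x height canvas."""
    cols = math.ceil(width_px / module_px)
    rows = math.ceil(height_px / module_px)
    return cols * rows


def module_cost_usd(width_px, height_px):
    """Cost of the LED modules alone (no FPGAs, PSUs or host computers)."""
    return modules_needed(width_px, height_px) * MODULE_PRICE_USD


if __name__ == "__main__":
    # Hypothetical 320x240 wall: 10 columns x 8 rows of modules.
    print(modules_needed(320, 240), "modules,", module_cost_usd(320, 240), "USD")
```

Note that 240 is not a multiple of 32, so the last row of modules would overhang the canvas a bit.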

At 1000Hz-2000Hz+, with an accurate software-based temporal CRT electron beam simulator (in a GPU shader), we will be able to pass the CRT Turing test (you can't tell apart a masked flat Trinitron and a fake-CRT-glassboxed 1000Hz OLED/MicroLED). The challenge is getting both good spatial (4K resolution) and good temporal (1000Hz), so 4K 1000Hz should be a baseline goal for a CRT Turing A/B blind test versus a flat tube, though you can get by with lower resolutions (1080p 1000Hz) if you're simulating very tiny Sony PVM CRTs -- ideally you need high DPI to get those phosphor dots resolving individually. But you can prioritize temporal first at a much lower budget (modding existing MicroLED blocks with an FPGA apiece), and use a large viewing distance (wall sized CRT) to hide the LED-bead effect.

However, the bottom line is that the technology is purchasable today for a buildable prototype -- given sufficient programming skill (FPGA/Xilinx/shaders/etc) to reach the processing speed necessary to simulate an accurate CRT beam in realtime (including gamma-correction conversion for all the CRT-fade overlaps, so you don't get flicker-band effects).
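On the gamma point: the fade math has to happen in linear light, not on the gamma-encoded pixel values, otherwise the overlapping fades from adjacent refresh cycles don't add up to the right total brightness. A minimal sketch, assuming a simple power-law gamma of 2.2 (a real implementation might use the sRGB curve or a measured display response; the function names are just for illustration):

```python
GAMMA = 2.2  # ASSUMPTION: simple power-law gamma, not the exact sRGB curve


def to_linear(encoded):
    """Gamma-encoded pixel value (0..1) -> linear light (0..1)."""
    return encoded ** GAMMA


def to_encoded(linear):
    """Linear light (0..1) -> gamma-encoded pixel value (0..1)."""
    return linear ** (1.0 / GAMMA)


def fade(encoded, factor):
    """Scale the emitted light of a pixel by `factor`, working in linear light."""
    return to_encoded(to_linear(encoded) * factor)


if __name__ == "__main__":
    # Halving the light of a full-white pixel is NOT pixel value 0.5;
    # the gamma-correct value comes out noticeably higher.
    print(fade(1.0, 0.5))
    # Splitting one pixel's light 70/30 across two refresh cycles preserves the total.
    a, b = fade(0.8, 0.7), fade(0.8, 0.3)
    print(to_linear(a) + to_linear(b), to_linear(0.8))
```

Naively multiplying the encoded value by the fade factor is what produces the visible bands where two fades overlap.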

--- Begin unlinked crosspost from Blur Busters Area 51 Forum ---

The problem with SED/FED is they had to be refreshed differently from a CRT, behaving kinda like plasmas (the prototype SED/FED has worse artifacts than a Pioneer Kuro plasma). Instead, I'd rather shift the CRT refresh-pattern control over to software (ASIC, FPGA, GPU shader, or other software-reprogrammable algorithm) to get exactly the same perfect CRT look-and-feel.

The only thing is we need brute overkill Hz, to allow CRT refresh cycle emulation over several digital refresh cycles.

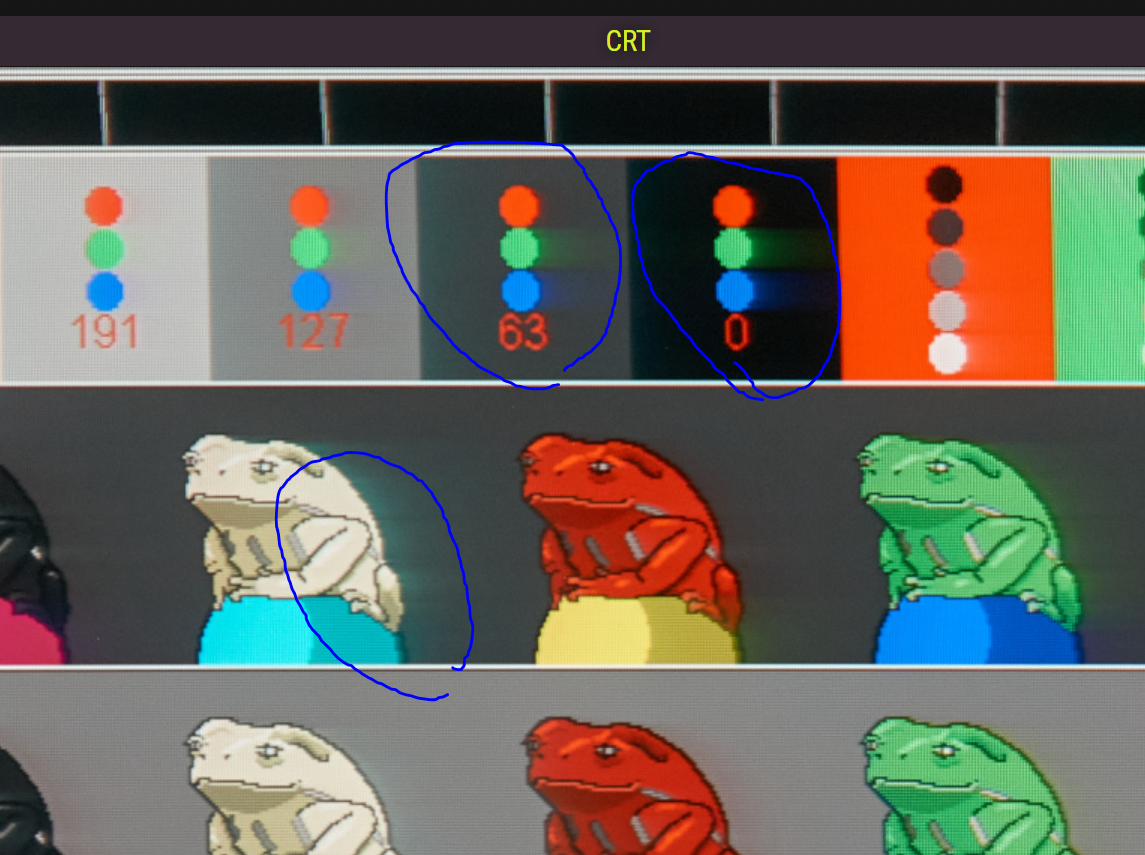

Think of this as a temporal version of the spatial CRT filters. We already know how great spatial CRT filters look on a 4K OLED display: they look uncannily accurate for a low-rez CRT, and you can even see individual simulated phosphor dots! But that alone isn't blur-free. It's possible to do it temporally too, not just spatially -- it just requires many digital refresh cycles per CRT refresh cycle.

Direct-view discrete micro LED displays (and possibly future OLED), can do all the following:

- Color gamut of CRT

- Perfect blacks

- Bright whites

- Resolution high enough for spatial CRT filter texture emulation (see realistic individual phosphor dots!)

- Refresh rates high enough for temporal CRT scanning emulation (see realistic zero-blur motion and realistic phosphor decay!)

- HDR capability can also give the brightness-surge headroom to prevent things from being too dark

So you just need an ultra-high-rez ultra-high-refreshrate HDR display (such as a 1000Hz OLED or MicroLED), then the rest of the CRT behaviors can be successfully emulated spatially AND temporally in software.
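To see why the HDR brightness-surge headroom matters: if each pixel is only lit for a fraction of the time, the peak has to go up to keep the same average brightness. A minimal sketch, assuming a plain on/off duty cycle (it ignores the phosphor-decay tail); the 100-nit target is just an illustrative number of mine, not a measurement.

```python
def required_peak_nits(target_average_nits, duty_cycle):
    """Peak brightness needed so that a pixel lit for `duty_cycle` of the time
    averages out to `target_average_nits` (simple on/off model)."""
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return target_average_nits / duty_cycle


if __name__ == "__main__":
    # Hypothetical case: a pixel lit for 1 of every 16 refresh cycles (960Hz / 60Hz),
    # aiming for a 100-nit average picture.
    print(required_peak_nits(100.0, 1 / 16))  # 1600.0
```

A longer simulated phosphor decay raises the effective duty cycle and lowers the peak requirement, at the cost of a little more motion blur.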

You can use a 960Hz display to emulate 60 electron-scanned refresh cycles per second, at 16 digital refresh cycles per simulated CRT refresh cycle (each frame would be like a frame of a high-speed video of a CRT, but generated in realtime instead). This simulates the electron beam, phosphor decay and zero motion blur, as long as there is enough brute Hz to allow multiple refresh cycles per simulated CRT Hz.
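The arithmetic here is simple enough to sketch. This assumes the display Hz is an exact integer multiple of the CRT Hz (non-integer ratios need extra handling that I'm not showing), and the 240-scanline source image is only an example; the function names are made up for illustration.

```python
def subframes_per_crt_refresh(display_hz, crt_hz):
    """How many digital refresh cycles are available per simulated CRT refresh."""
    if display_hz % crt_hz != 0:
        # ASSUMPTION: only exact multiples are handled in this sketch.
        raise ValueError("display_hz must be an integer multiple of crt_hz")
    return display_hz // crt_hz


def beam_band(subframe, n_subframes, scanlines):
    """Half-open range [start, end) of scanlines the simulated beam sweeps
    during one digital refresh cycle."""
    start = subframe * scanlines // n_subframes
    end = (subframe + 1) * scanlines // n_subframes
    return start, end


if __name__ == "__main__":
    n = subframes_per_crt_refresh(960, 60)  # 16
    for k in range(n):
        # With 240 scanlines, each 960Hz refresh covers a 15-line slice of the sweep.
        print(k, beam_band(k, n, 240))
```

Each digital refresh cycle then draws its band at full brightness and the previously drawn bands at progressively faded brightness.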

Incidentally, a 1000fps high speed video of a CRT, played back in real time to a 1000Hz display, accurately preserves a lot of the CRT temporals (zero blur, phosphor decay, same flicker, etc).

Instead, you’d use a software algorithm in the firmware or the GPU, to artificially emulate a CRT tube via piggybacking on the ultra high refresh rate capability of future displays.

Direct view micro LED panels (without LCD) can exceed the contrast range, color gamut, and resolution of a CRT tube, and so it’s a great supersetted venn diagram that is a perfect candidate for this type of logic to emulate a CRT tube, with a CRT emulator mode.

That’s the better engineering path to go down, in my opinion.

Although full-fabbed 1000Hz+ panels don't yet exist, DIY 1000Hz+ is possible. If you begin with jumbotron LED modules from Alibaba (they already refresh at 1920Hz, they're just repeating 60Hz frames -- they need to be commandeered, with some modifications, to show 1920 unique images per second), then a prototype can probably be built for less than 1/100th the cost of a SED/FED prototype. There'd be a bit more software development needed, but it could be done in plain shader programming instead of complex FPGA/assembly. It would be low resolution (low DPI) and maybe need to be wall sized.

Alternatively, an early version of the temporal CRT algorithm can be programmed on a 360Hz display (6 refresh cycles of rolling-scan BFI emulation for one 60Hz CRT refresh cycle). It would be something like the algorithm in the proposed RetroArch BFIv3 suggestion. It could be implemented in the display firmware, as a GPU shader (using a Windows Indirect Display Driver), or programmed into a specific app (like an emulator such as RetroArch).
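As a rough idea of what the 360Hz version could look like, here is a minimal sketch of a rolling scan with fade-behind. To be clear, this is my simplified illustration and not the actual BFIv3 proposal: the exponential decay model and the `decay_subframes` parameter are assumptions, and the weights are in linear light (they still need gamma conversion before being applied to pixel values).

```python
import math


def rolling_scan_weights(subframe, n_subframes, scanlines, decay_subframes=1.0):
    """Per-scanline brightness weights (linear light, 0..1) for one digital refresh.

    Scanlines the simulated beam is hitting right now get 1.0; lines hit earlier
    fade exponentially with the number of refresh cycles since the beam passed.
    """
    weights = []
    for y in range(scanlines):
        hit = y * n_subframes // scanlines      # subframe in which the beam hits line y
        age = (subframe - hit) % n_subframes    # refresh cycles since then (wraps to previous CRT refresh)
        weights.append(math.exp(-age / decay_subframes))
    return weights


if __name__ == "__main__":
    # 360Hz display, 60Hz simulated CRT -> 6 subframes; 12 scanlines keeps the printout small.
    for k in range(6):
        print(k, [round(w, 3) for w in rolling_scan_weights(k, 6, 12)])
```

Multiply each scanline of the source frame (in linear light) by its weight for the current refresh cycle, and the bright band rolls down the screen with a fading trail behind it.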

High-Hz Micro LED, combined with a rolling-scan CRT emulator mode, would now probably produce a vastly superior CRT look than any SED/FED ever could. Still waiting for one to be released...

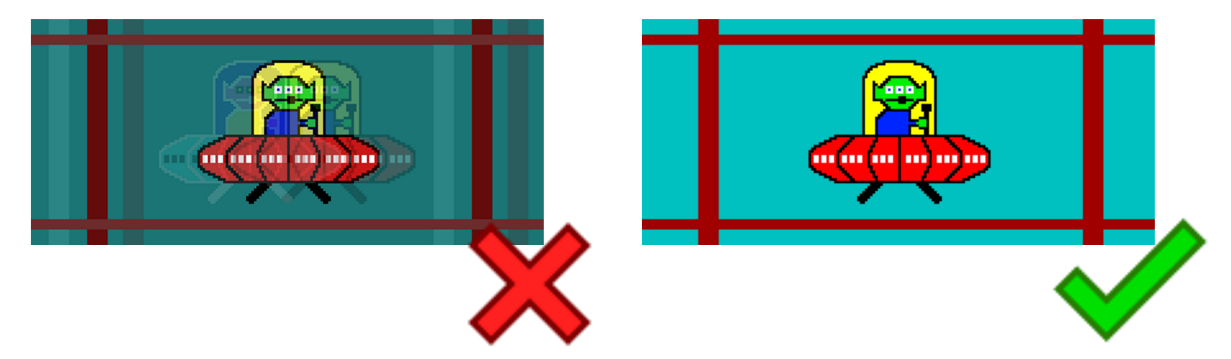

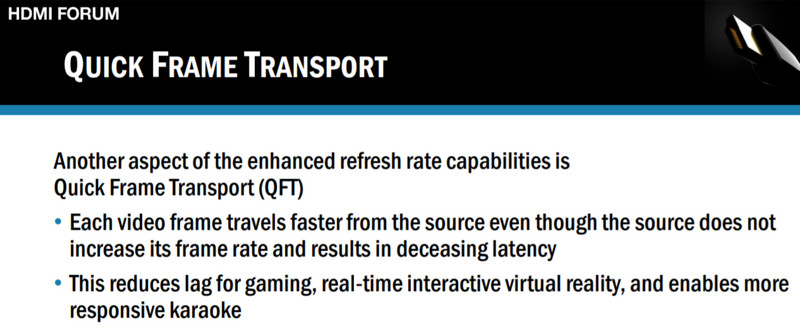

![crosstalk-annotated-ANIMATED-VERTTOTAL[1].gif](https://cdn.hardforum.com/data/attachment-files/2022/05/660883_crosstalk-annotated-ANIMATED-VERTTOTAL1.gif)