
Interest in brand new 8K 80Hz CRT for 8000$?

- Thread starter rabidz7


MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,505

I thought one of the main benefits of CRT was how much better lower resolutions looked vs LCD/OLED due to them not really having a native resolution. I would have just preferred a 1920x1200 CRT that can do the highest refresh rate possible rather than shooting for resolution, something like 1920x1200 at 480Hz.

There were many other benefits to CRTs. There was no native resolution, so the display could look good at nearly any resolution the tube was rated for. Additionally, you had less input lag and better motion clarity. Not only that, you had better contrast, with deeper blacks being the big thing people point out. The downsides are tube geometry and noticeable refresh flicker and scan lines at some resolutions. You also had image distortion that had to be adjusted for at times. That's before you get into the radiation, heat output, power consumption, and the weight and size of the tubes relative to their screen sizes.

Not to mention, the tubes degrade over time leading to blurriness, loss of brightness and so on.


Same here. I spent a lot of time playing games and it was hard on my eyes. I ended up with a lot of headaches from crap CRTs. Even good ones caused eyestrain after enough hours.

Eye fatigue was a huge issue for me with tube monitors. It sucked a lot playing 16 hours straight of FFXI back in the day.

NattyKathy

[H]ard|Gawd

- Joined

- Jan 20, 2019

- Messages

- 1,483

Tbh I would be mildly interested in a 30" 3840x2400 plasma monitor with native DP input. If we're talking about fantasy monitors that would cost thousands and destroy your desk and eyes, may as well go with the more compact and geometrically correct version.

I do kind of wish I still had my last CRT monitor to see how modern games look on it... Mitsubishi 20" flat something, 2048x1536 @75hz I think?


I'd add that CRTs are also sensitive to the quality of the digital-to-analog converters (DACs) in the GPU. I remember back in the day just using a different GPU made a difference in image quality.

I own a few reference-grade CRTs for retro gaming but have zero desire to use them for anything else. They make those old games look absolutely gorgeous in a way that the pixel-perfect rendering of LCDs or OLEDs cannot.

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

The DAC will need to be part of the display now.

An analogue cable would be too fat and unwieldy to carry 8K80 without serious issues, and even then the image might still suck a little.
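To put a rough number on that, the sketch below estimates the pixel clock an analogue 7680x4320 signal at 80Hz would need. The 25% horizontal and 5% vertical blanking fractions are assumptions chosen for illustration (a CRT needs extra time for beam retrace); no published timing exists for this monitor.

```python
# ASSUMPTION: the blanking fractions are illustrative placeholders, not the
# timings of any real 8K CRT.


def pixel_clock_hz(h_active: int, v_active: int, refresh_hz: float,
                   h_blank_frac: float = 0.25, v_blank_frac: float = 0.05) -> float:
    """Total pixels per frame (active plus blanking) times frames per second."""
    h_total = h_active * (1 + h_blank_frac)  # extra time for horizontal retrace
    v_total = v_active * (1 + v_blank_frac)  # extra time for vertical retrace
    return h_total * v_total * refresh_hz


if __name__ == "__main__":
    clk = pixel_clock_hz(7680, 4320, 80)
    # Alternating black/white pixels form a square wave at half the pixel clock,
    # so the cable and video amplifiers must pass at least that fundamental.
    print(f"pixel clock ~ {clk / 1e9:.2f} GHz, worst-case fundamental ~ {clk / 2e9:.2f} GHz")
```

Under those assumptions it comes out around 3.5 GHz of pixel clock, with a worst-case alternating pattern putting a fundamental of roughly 1.7 GHz on the cable.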

Also, no graphics cards have analogue output now. You can use an external DAC, but it will barely improve the outcome, since an analogue cable will still be needed.

The DAC needs to be as close to the monitor's internal circuits as possible, connecting directly with very short wires to reduce problems.

IMO this would have been the best approach from the start, to get the fewest problems plus the sharpest and most stable image.

Image quality will be far more consistent.

But the DAC and Digital input will need to be 8K80 bandwidth compliant before release, or have a replaceable Digital input / DAC module.

Assuming rabidz7's post isn't correct! Otherwise you may be limited to lower-refresh 8K only at release, which would reduce its appeal dramatically.
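A rough check of what "8K80 bandwidth compliant" means for the digital input, as a sketch: it compares the uncompressed active-pixel data rate with the payload of a 4-lane DisplayPort link after line coding. Assumptions: 24 bits per pixel, blanking ignored (so the true requirement is somewhat higher), no Display Stream Compression, and the UHBR20 figure counts only 128b/132b coding, so the real usable rate is slightly lower.

```python
# ASSUMPTIONS: 4-lane links, 24 bits per pixel (8-bit RGB), active pixels only
# (blanking ignored), no Display Stream Compression (DSC).

# Payload left after line coding, in Gbit/s. The UHBR20 figure accounts only for
# 128b/132b coding, so the real usable rate is slightly lower.
DP_LINKS = {
    "DP 1.4 HBR3 (4 x 8.1 Gbit/s, 8b/10b)": 4 * 8.1 * 8 / 10,
    "DP 2.0 UHBR20 (4 x 20 Gbit/s, 128b/132b)": 4 * 20 * 128 / 132,
}


def active_data_rate_gbps(width: int, height: int, refresh_hz: float, bpp: int = 24) -> float:
    """Uncompressed data rate of the visible pixels only, in Gbit/s."""
    return width * height * refresh_hz * bpp / 1e9


if __name__ == "__main__":
    need = active_data_rate_gbps(7680, 4320, 80)
    print(f"7680x4320@80Hz at 24 bpp: {need:.1f} Gbit/s of active pixels")
    for name, payload in DP_LINKS.items():
        verdict = "fits" if need <= payload else "does not fit"
        print(f"  {name}: {payload:.2f} Gbit/s -> {verdict} uncompressed")
```

Under these assumptions 8K80 needs about 63.7 Gbit/s, well beyond DP 1.4 without compression but within a DP 2.0 UHBR20 link.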

And will it support variable refresh rate? In theory it should, as it's simple to implement on a CRT.

Apparently only 4K at 80Hz will be supported.

But if it's truly limited to only 80Hz, it won't get VRR.


rabidz7

[H]ard|Gawd

- Joined

- Jul 24, 2014

- Messages

- 1,331

Progressively scanned resolutions:

5120x3200@60Hz

4096x2560@70Hz

3840x2400@80Hz

3072x1920@96Hz

2880x1800@105Hz

2560x1600@120Hz

2304x1440@130Hz

2048x1280@144Hz

1920x1200@155Hz

1680x1050@170Hz

1440x900@200Hz
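Multiplying each mode's vertical line count by its refresh rate suggests what the list is bounded by. This sketch ignores vertical blanking, so real horizontal scan frequencies would be a few percent higher than the figures it prints.

```python
# Modes exactly as listed: (width, height, refresh in Hz).
MODES = [
    (5120, 3200, 60), (4096, 2560, 70), (3840, 2400, 80), (3072, 1920, 96),
    (2880, 1800, 105), (2560, 1600, 120), (2304, 1440, 130), (2048, 1280, 144),
    (1920, 1200, 155), (1680, 1050, 170), (1440, 900, 200),
]


def active_lines_per_second(height: int, refresh_hz: int) -> int:
    """Visible scan lines drawn per second (vertical blanking ignored)."""
    return height * refresh_hz


if __name__ == "__main__":
    for w, h, hz in MODES:
        lines = active_lines_per_second(h, hz)
        mpix = w * h * hz / 1e6  # active pixel rate, for contrast
        print(f"{w}x{h}@{hz}Hz: {lines / 1e3:6.1f} k lines/s, {mpix:7.1f} Mpixel/s")
```

Every mode lands between roughly 178k and 192k active lines per second, while the active pixel rate falls from about 983 to about 259 Mpixel/s. That pattern is consistent with a ceiling on horizontal scan rate rather than on pixel bandwidth.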


GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

Stop making up fantastical fairytale fables of fud.

Nenu

[H]ardened

- Joined

- Apr 28, 2007

- Messages

- 20,315

lol, that's a little different.

Much better, but I expected higher refresh rates than that now that it's not locked.

This suggests there are problems with clarity at higher refresh rates.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

Yeah, I had a Sony widescreen flat-screen CRT in the later days before plasma etc. Pretty good for PS2 gaming.

I remember Samsung had a 16:9 tube TV back in the day. My question is how can this even exist? No one has been working on tube tech for at least 15 years.

rabidz7

[H]ard|Gawd

- Joined

- Jul 24, 2014

- Messages

- 1,331

Well guys, the supplier crapped out on me. Doesn't look like it's going to happen.

It never was.

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

You for real?

Out of interest, what was the best LCD you had/used?

It doesn't look like you've ever used a good LCD panel, and that's the only reason you still hold on to the idea that there is nothing better.

OLED panels are definitely better than both CRT and LCD, and they're fairly easy to get these days.

I have a Sony GDM-FW900 and an LG 27GP950, and the latter has superior image quality.

I would need to go to extremes, like Doom 3 in a pitch-black room, to even showcase a case where the FW900 looks better. Otherwise it just doesn't: not in videos and not in games, especially modern games where framerate is a consideration. The color quality and brightness of this LG make the CRT look washed out and sad. And even with the CRT I need ambient light, otherwise blacks look bad. It's a simple perceptual effect.

Good enough always wins.

When it comes down to it, the only reason I still use CRTs for games from time to time is that where the CRT is worse than modern IPS, it's still good enough that the shortcomings are easy to ignore. That said, IMHO modern IPS is more 'good enough' where it falls short of CRT than CRT is where it falls short of IPS. Hence the simple conclusion: LCD panels have already surpassed CRT.

Even if that monitor you trolled about were real, and it had EBU phosphors and the AG coating used in some PDPs, it would have a hard time competing even with the LG I mentioned, especially in the $8K price range, where one could get an OLED TV and a FALD IPS monitor and still have some cash left for future upgrades.

That said, if such a monitor were released, IMHO few people would buy one for $8K.

For myself, it would really need to hit all the right checkboxes, including proper hardware calibration (I would not spend $8K to have to worry about things like gamma level), and it would need to work with a PS5 at 1080p120 out of the box without any image distortion. And it would need to reach at least 200 nits while having a really dark screen, so I would not need to dance around lighting conditions to get it to look good. That said... why not just get an OLED TV for a fraction of the price? Yeah... hard sell. Your 'supplier' made the right call. He probably just sold you the usual stuff instead. Stuff like happy pills with which even plain walls display images.

Stryker7314

Gawd

- Joined

- Apr 22, 2011

- Messages

- 867

Social experiment complete, I see.

rabidz7

[H]ard|Gawd

- Joined

- Jul 24, 2014

- Messages

- 1,331

I'm still trying to get a new monitor into the market. Everything here is very preliminary. I sent a request for a 30" 5120x3200 LCD/OLED. I will see if that is possible.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

I went down this path some years ago with an [H] member here, to manufacture 4K120 10-bit screens back when 1440p was king. Back then even 8K60 was possible with a modded TCON.

I have all the manufacturing equipment needed at my disposal: a million-plus euros' worth of machining hall, anodisation plant, the engineering needed, etc.

Guess what sunk the plan? Those Realtek DP chips. Want to know what the minimum order from them is? 1.5M USD. Then there are the Intel FPGAs you need, etc., etc.

You ain't building your own screens unless you have at least 2 mil for lunch money, bud. Sorry.

NattyKathy

[H]ard|Gawd

- Joined

- Jan 20, 2019

- Messages

- 1,483

This. So many tech products get canned due to logistics issues rather than design or engineering. There are immense differences between building 1 thing, 10 things, 1,000 things, and 1,000,000 things, and as someone who has run operations / logistics / R&D for a small tech mfg startup, the "we need to mass produce a few hundred to a few thousand of this thing" zone can actually be incredibly challenging to be in. Getting a handful of a component is trivial; suppliers love to give away samples to R&D folks. Getting a few hundred of something, though, can be an issue for production: if the manufacturer or first-level distributor won't do small quantities, then you're off to Digikey or Arrow and there go your margins, if the last-level supplier even has the thing stocked.

It's been enough to put me off a couple of product ideas of my own. At small scale, the options are to lean into a high price and go full boutique to keep margins, which has its own set of issues, or to attempt to scale to get per-unit cost down, which is a logistics nightmare if you don't have immense funding to go from 0-100 in an extremely short period of time.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

Yeah, you get the difficulty. We do custom production runs for customers, 10x units minimum etc., sometimes one-offs if it's six-figure stuff or a cheap prototype. Last time I looked, you could only source them directly from Realtek; no one is buying them from Arrow etc.

I'd say skim the fat off the high end of the market; if it moves, then go for volume and optimise to get costs where ya need them, if ya want to try something. Good luck if you do go down that path in future.


Maybe your tubes are wearing out or something? My Trinitrons are still vibrant and spectacular. IPS really doesn't compare. Looking to OLED and such to finally provide an off ramp.

(I'm talking about in a room with controlled ambient lighting. Admittedly, CRTs do not do well in a well lit room... )

EDIT: Or maybe it's because you're using that dark polarizing film on the FW900. The filter I use is dark enough to fix black, but is much lighter than the polarizer.


KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,003

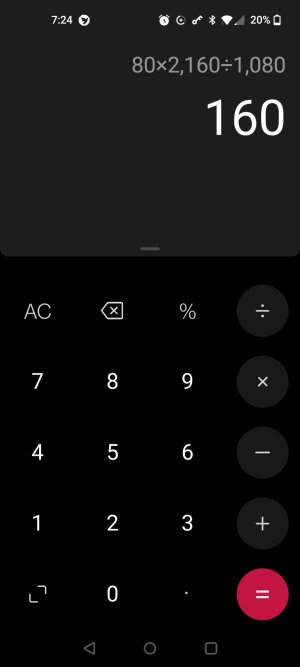

1920x1200p@160Hz

That's disappointing.

1080p at 160Hz is literally half the bandwidth of 4K at 80Hz.

Why the limitations?
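The "half the bandwidth" figure holds if bandwidth means active pixels per second. A minimal sketch with exact fractions, ignoring blanking:

```python
from fractions import Fraction


def pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Active pixels per second (blanking ignored)."""
    return width * height * refresh_hz


def rate_ratio(mode_a: tuple, mode_b: tuple) -> Fraction:
    """Exact ratio of mode_a's pixel rate to mode_b's."""
    return Fraction(pixel_rate(*mode_a), pixel_rate(*mode_b))


if __name__ == "__main__":
    print(rate_ratio((1920, 1080, 160), (3840, 2160, 80)))  # 1/2
    print(rate_ratio((1920, 1200, 160), (3840, 2400, 80)))  # 1/2
```

Halving both dimensions quarters the pixels per frame, and doubling the refresh rate only doubles it back, hence 1/2.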

Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

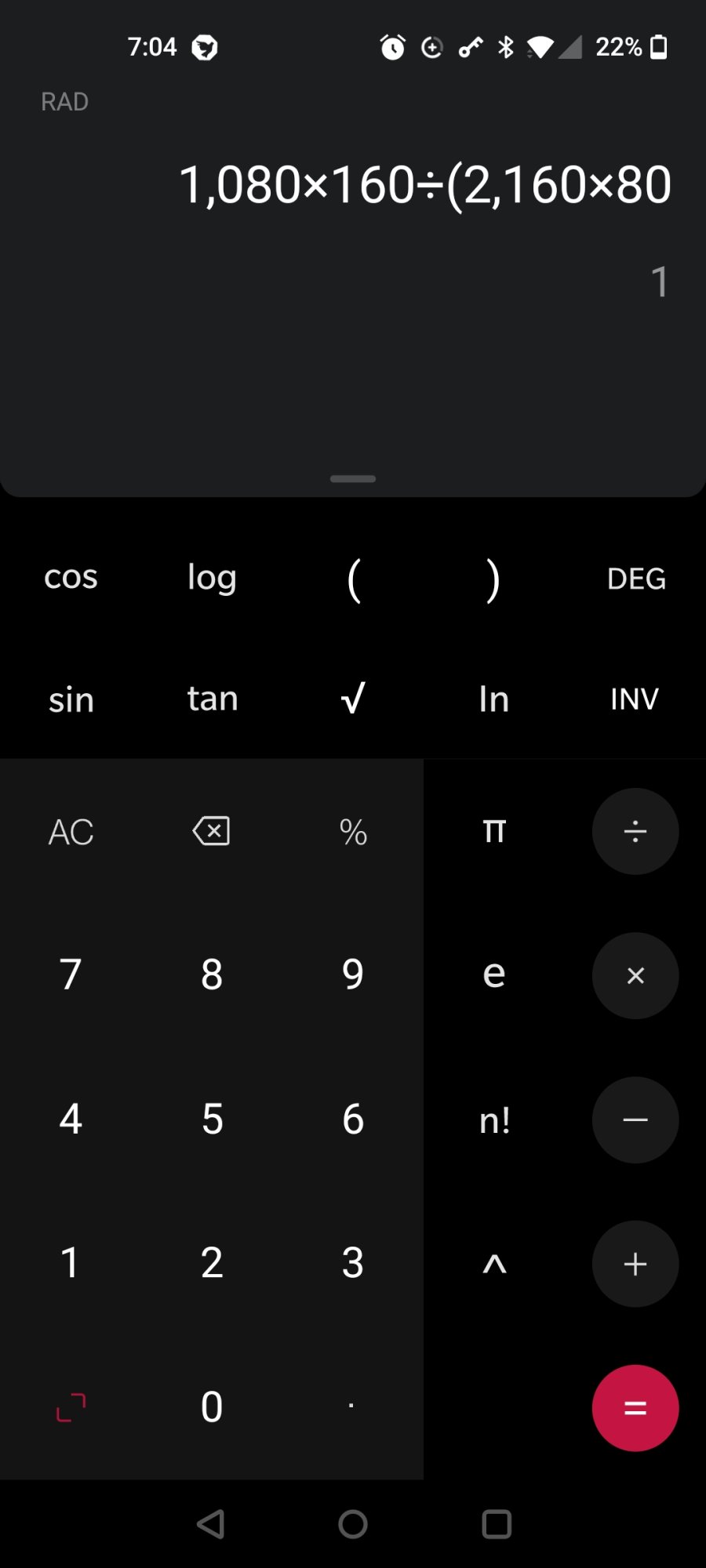

It's the same, actually:That's disappointing.

1080p at 160 is literally half the bandwidth versus 4k @80.

Why the limitations?

Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

Gotta remember that CRTs don't technically have "pixels". They're limited by scan speed and vertical refresh rate.
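For a CRT, the scan-speed limit described here is the horizontal scan frequency: the number of lines the beam sweeps per second, which depends on line count and refresh rate but not on horizontal resolution. A sketch, assuming 5% vertical blanking for both modes:

```python
from fractions import Fraction

# ASSUMPTION: 5% vertical blanking (time for vertical retrace). The exact value
# doesn't matter for the comparison as long as both modes use the same one.
V_BLANK = Fraction(5, 100)


def horizontal_scan_hz(v_active: int, refresh_hz: int, v_blank: Fraction = V_BLANK) -> Fraction:
    """Lines the beam must sweep per second: total lines per frame times frames per second."""
    return v_active * (1 + v_blank) * refresh_hz


if __name__ == "__main__":
    low_res = horizontal_scan_hz(1080, 160)   # 1920x1080 @ 160 Hz
    high_res = horizontal_scan_hz(2160, 80)   # 3840x2160 @ 80 Hz
    # Horizontal resolution never enters the formula.
    print(f"{float(low_res) / 1e3:.1f} kHz vs {float(high_res) / 1e3:.1f} kHz, equal: {low_res == high_res}")
```

Both modes need the same horizontal scan rate (about 181.4 kHz under this assumption), even though 1080p160 carries half the active pixels per second of 2160p80. Which of the two numbers limits a given tube depends on whether its deflection circuitry or its video bandwidth runs out first.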

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,505


That....is not how it works. You didn't even factor in the vertical resolution. Try the same thing but actually factor in the vertical resolutions of 1080 and 2160.

Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

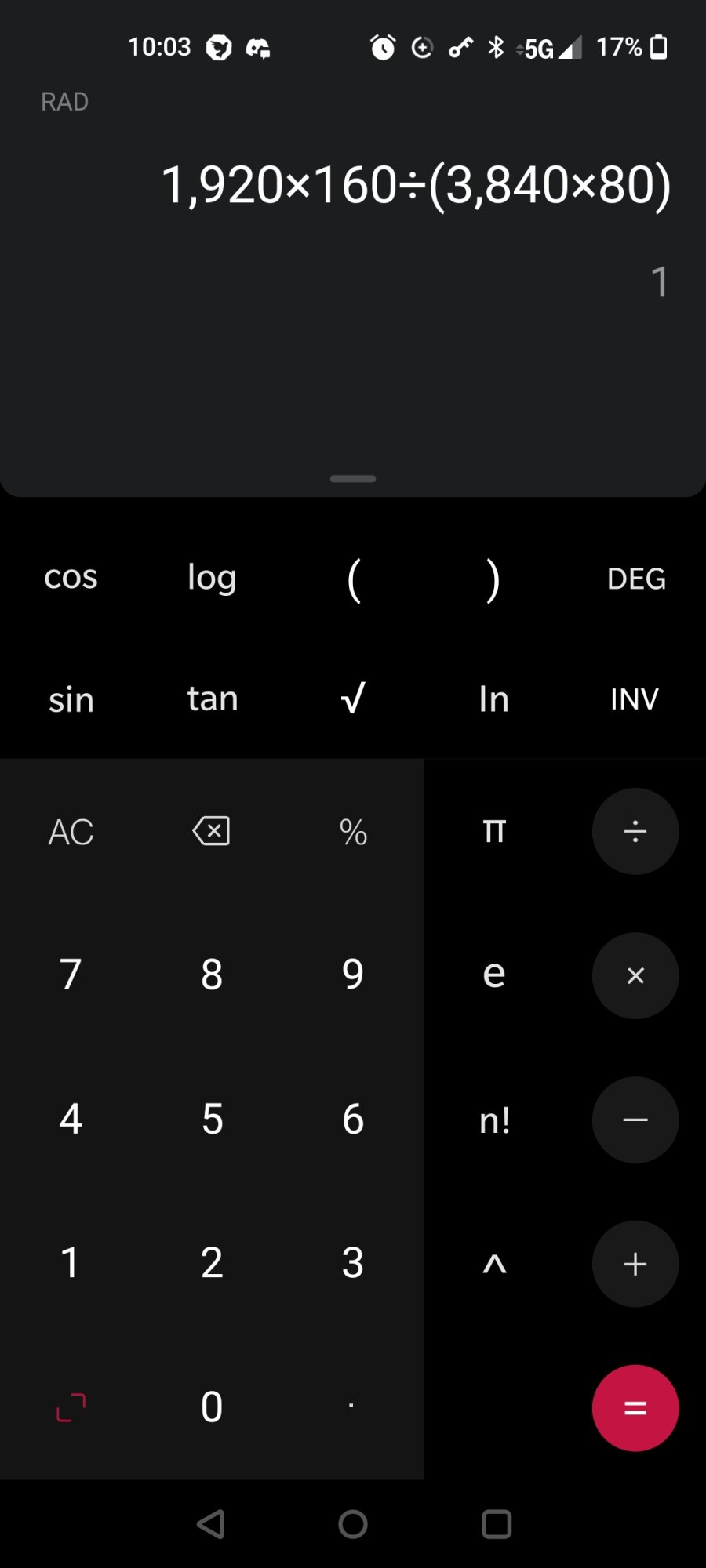

Sure...That....is not how it works. You didn't even factor in the vertical resolution. Try the same thing but actually factor in the vertical resolutions of 1080 and 2160.

Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

And before you say do both at the same time, that's not how it works. But even if it were, you're talking about a simple multiplication problem. If they both equal 1 individually, they'll both equal 1 together as well.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,505

I give up.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

SMH... Sigh.


Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

Yeah, I know, it's actually 0.5. But like I said, that's NOT how it works. The vertical refresh rate already takes into account the horizontal scan rate.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

Stop digging yourself a deeper hole, if you're not just trolling...

Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

Then you explain why what I said is wrong. But don't use LCDs as an example. CRTs have additional physical limitations that make certain resolutions impossible even when they aren't bandwidth-constrained. They are not the same.

whateverer

[H]ard|Gawd

- Joined

- Nov 2, 2016

- Messages

- 1,815

We're assuming that this magical CRT has a fine enough dot pitch to display 8K. At that point, all your remaining limits are bandwidth (the max refresh spec) plus dot-clock filters (blur increases with refresh rate, but most won't notice).

I mean, other than interlaced modes on a natively progressive tube, I can't imagine what you're talking about!
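How fine would that dot pitch have to be? The thread never states the tube size, so this sketch assumes a 30-inch 16:10 viewable area (the size floated elsewhere in the thread for the hypothetical flat panels) and uses the simplification that each pixel needs at least one full phosphor stripe group across.

```python
import math

# ASSUMPTION: 30-inch diagonal, 16:10 viewable area. The CRT's real size isn't given.
DIAGONAL_IN = 30.0
ASPECT_W, ASPECT_H = 16, 10
MM_PER_INCH = 25.4


def viewable_width_mm(diagonal_in: float, aspect_w: int, aspect_h: int) -> float:
    """Width of the viewable area from its diagonal and aspect ratio."""
    return diagonal_in * MM_PER_INCH * aspect_w / math.hypot(aspect_w, aspect_h)


def pitch_needed_mm(h_pixels: int, width_mm: float) -> float:
    """Horizontal pitch if every pixel gets one full phosphor stripe group (a simplification)."""
    return width_mm / h_pixels


if __name__ == "__main__":
    width = viewable_width_mm(DIAGONAL_IN, ASPECT_W, ASPECT_H)
    for h_px in (3840, 5120, 7680):
        print(f"{h_px} px across {width:.0f} mm needs about {pitch_needed_mm(h_px, width):.3f} mm pitch")
```

For a roughly 646 mm wide screen that works out to about 0.168 mm at 3840 pixels across and about 0.084 mm at 7680, so 8K would need half the phosphor pitch of 4K on the same tube.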


Nobu

[H]F Junkie

- Joined

- Jun 7, 2007

- Messages

- 10,051

The CRT might be able to display more vertical lines

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

My tube is in great shape. With the polarizer it does 130-140 nits, and the polarizer has less than 50% transmittance, so without it the tube can do almost 300 nits (270-280 if I remember correctly). Of course, at that level this monitor was completely unusable, unlike the LG 27GP950, which has a rather gentle light that only starts to be really jarring at 400 nits and up; I usually use it at 300 nits. The FW900 was also hardly usable at higher brightness levels because rather bright halos would appear around bright objects, ruining the black level. Up to some point this brightening looks OK, but anything higher than that and black becomes unacceptable. For IPS it is the same: there is an upper limit to the black level that is OK, and the better the panel, the brighter the image can get. Take this video at 0:10. On the FW900, the black level at the sides is brighter than what I get on the 27GP950 at 300 nits. This effect can be ignored, just like the rendering of darker scenes on IPS screens can be ignored. Once you get used to it, it's not noticeable that it could be better.
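The arithmetic behind that brightness estimate, as a sketch that treats the polarizer as a neutral filter with a single transmittance value (an assumption; real films vary with wavelength):

```python
def brightness_without_filter(nits_with_filter: float, transmittance: float) -> float:
    """Emitted light crosses the filter once, so undo a single factor of T."""
    return nits_with_filter / transmittance


def ambient_reflection_factor(transmittance: float) -> float:
    """Room light crosses the filter twice (in, then back out after reflecting off the tube)."""
    return transmittance ** 2


if __name__ == "__main__":
    t = 0.5  # ASSUMPTION: exactly 50%; "less than 50%" would push the result higher
    for measured in (130, 140):
        print(f"{measured} nits through filter -> {brightness_without_filter(measured, t):.0f} nits without it")
    print(f"reflected ambient light is cut to {ambient_reflection_factor(t):.0%}, emitted light only to {t:.0%}")
```

At exactly 50% the range is 260-280 nits, and lower transmittance raises it, which fits the recalled 270-280. The second line is the usual reason such filters deepen blacks: reflected room light is attenuated twice, emitted light only once.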

After a few hours of using the FW900, my brain switches to the CRT way of displaying an image and IPS looks jarring. Still, black on this LG looks good enough; it's not an issue. More than that, compression artifacts become obvious, somehow. Not even sure why that happens.

Going the other way, from IPS at 300 nits to the FW900, the colors look obviously less saturated (SMPTE C phosphors are not quite at Rec.709 level), and of course the image is much dimmer. It's less of an issue if I keep the IPS at a normal brightness like 120-150 nits. The CRT also has this characteristic blurring and glow around bright objects, which is jarring at first; then, after getting used to it, its absence on IPS is jarring for a short while. And it flickers. Flicker-free screens do not flicker, which kinda explains why they are gentler on the eyes*
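One way to quantify "not quite Rec.709 level" is to compare the areas of the two gamut triangles in the CIE 1931 xy diagram, using the standard SMPTE C and Rec.709 primaries. This sketch has two caveats: xy area is not perceptually uniform, and an area ratio is not the same as coverage, because the two triangles do not nest exactly.

```python
# CIE 1931 xy chromaticities of the primaries, in order R, G, B.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
SMPTE_C = [(0.630, 0.340), (0.310, 0.595), (0.155, 0.070)]


def triangle_area(pts):
    """Shoelace formula for the area of a triangle given three (x, y) vertices."""
    (x1, y1), (x2, y2), (x3, y3) = pts
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


if __name__ == "__main__":
    a709, a_c = triangle_area(REC709), triangle_area(SMPTE_C)
    print(f"Rec.709 area {a709:.4f}, SMPTE C area {a_c:.4f}, ratio {a_c / a709:.1%}")
```

By this measure the SMPTE C triangle is roughly 93% of the Rec.709 area.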

Either way, I have my FW900 and like to use it from time to time. It's like a vacuum tube audio amplifier. I like to use a vacuum tube headphone amplifier when I use the CRT for the complete effect... an effect like heating up the room in winter ^^ It's not like we need CRTs today because otherwise one cannot get a good PC monitor. Modern displays are pretty good and in many aspects even better. Bright scenes on a good screen at 300 nits look absolutely great.

*) Some, at least.

Actually, lots of LCD screens are terrible on the eyes, somehow. I had an Acer XB271HK which was absolutely terrible on the eyes, comparable to the FW900 at a similar brightness level if not even worse. I am not even sure how they did it. It's like AUO made it their life mission to blind people... maybe it is their mission, who knows. At least LG does a much better job with their panels, at least from what I can tell using them.

ChaosCloud

n00b

- Joined

- Dec 17, 2021

- Messages

- 26

160 Hz on a CRT would still have better motion clarity than any currently available LCD.
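A common rule of thumb for why this holds: perceived motion blur while your eyes track a moving object is roughly the tracking speed times how long each frame stays lit (persistence). A sketch, assuming a ~1 ms effective phosphor persistence for the CRT and a full-persistence sample-and-hold LCD with no backlight strobing; strobed LCD modes lower persistence and narrow the gap.

```python
# Rule of thumb: blur width (px) ~= eye-tracking speed (px/s) x persistence (s).
# ASSUMPTIONS: ~1 ms effective CRT phosphor persistence (varies by tube and phosphor)
# and a full-persistence sample-and-hold LCD, i.e. each frame stays lit for the
# whole refresh period with no backlight strobing.


def blur_px(speed_px_per_s: float, persistence_s: float) -> float:
    return speed_px_per_s * persistence_s


if __name__ == "__main__":
    speed = 1000  # px/s, a moderate panning speed
    cases = [
        ("CRT at 160 Hz (~1 ms phosphor)", 0.001),
        ("sample-and-hold LCD at 160 Hz", 1 / 160),
        ("sample-and-hold LCD at 240 Hz", 1 / 240),
    ]
    for label, persistence in cases:
        print(f"{label}: ~{blur_px(speed, persistence):.1f} px of blur")
```

At 1000 px/s this gives about 1 px of blur for the CRT versus about 6 px for a 160 Hz sample-and-hold panel, and even 240 Hz sample-and-hold still shows about 4 px.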

rabidz7

[H]ard|Gawd

- Joined

- Jul 24, 2014

- Messages

- 1,331

The CRT doesn't seem to be an option currently, but I might be able to do 3840x2400@200Hz OLED soon.

l88bastard

2[H]4U

- Joined

- Oct 25, 2009

- Messages

- 3,718

That would be the way to go!
