Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017
- Messages: 430

Doesn't Frame Generation actually introduce increased input latency?

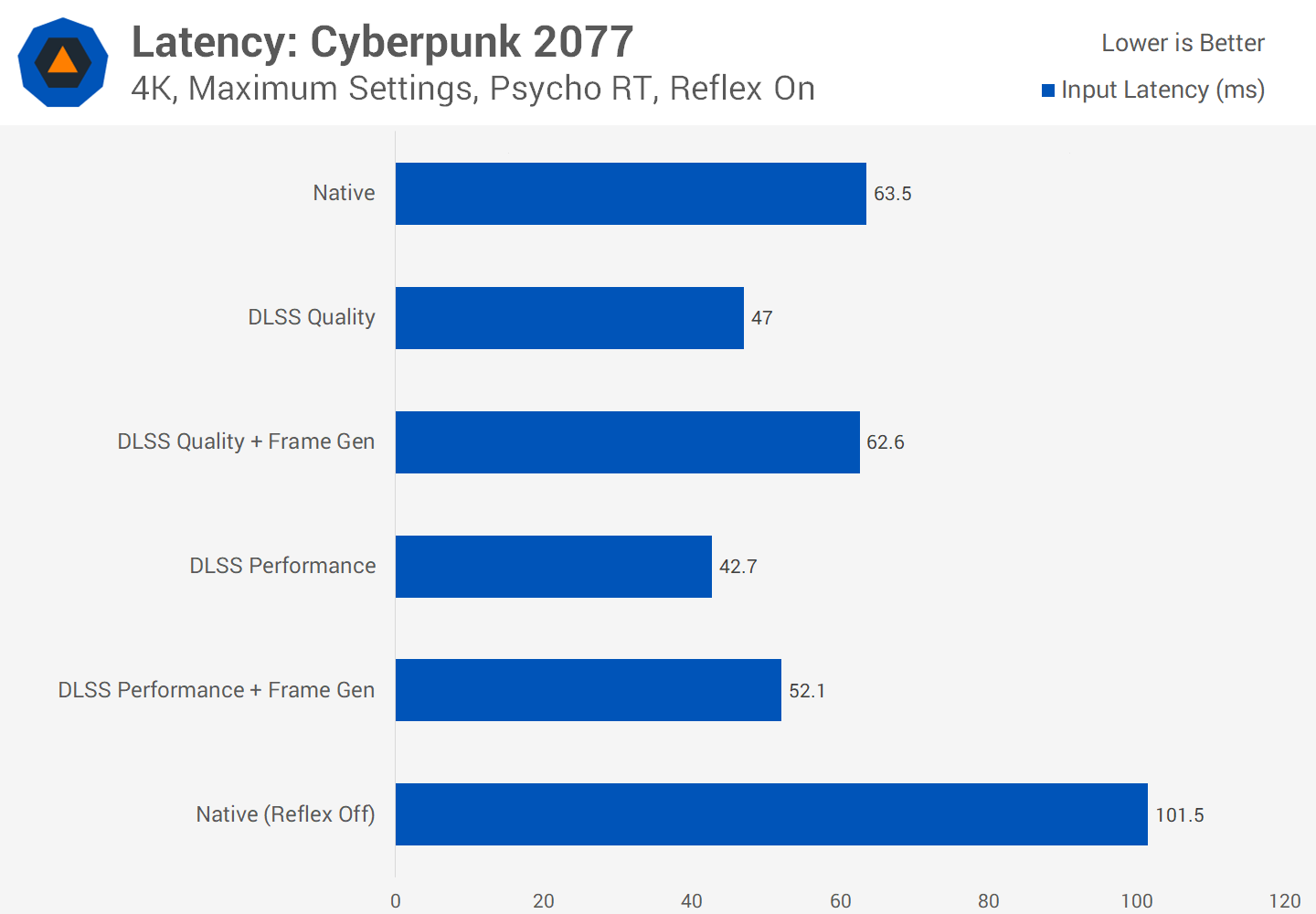

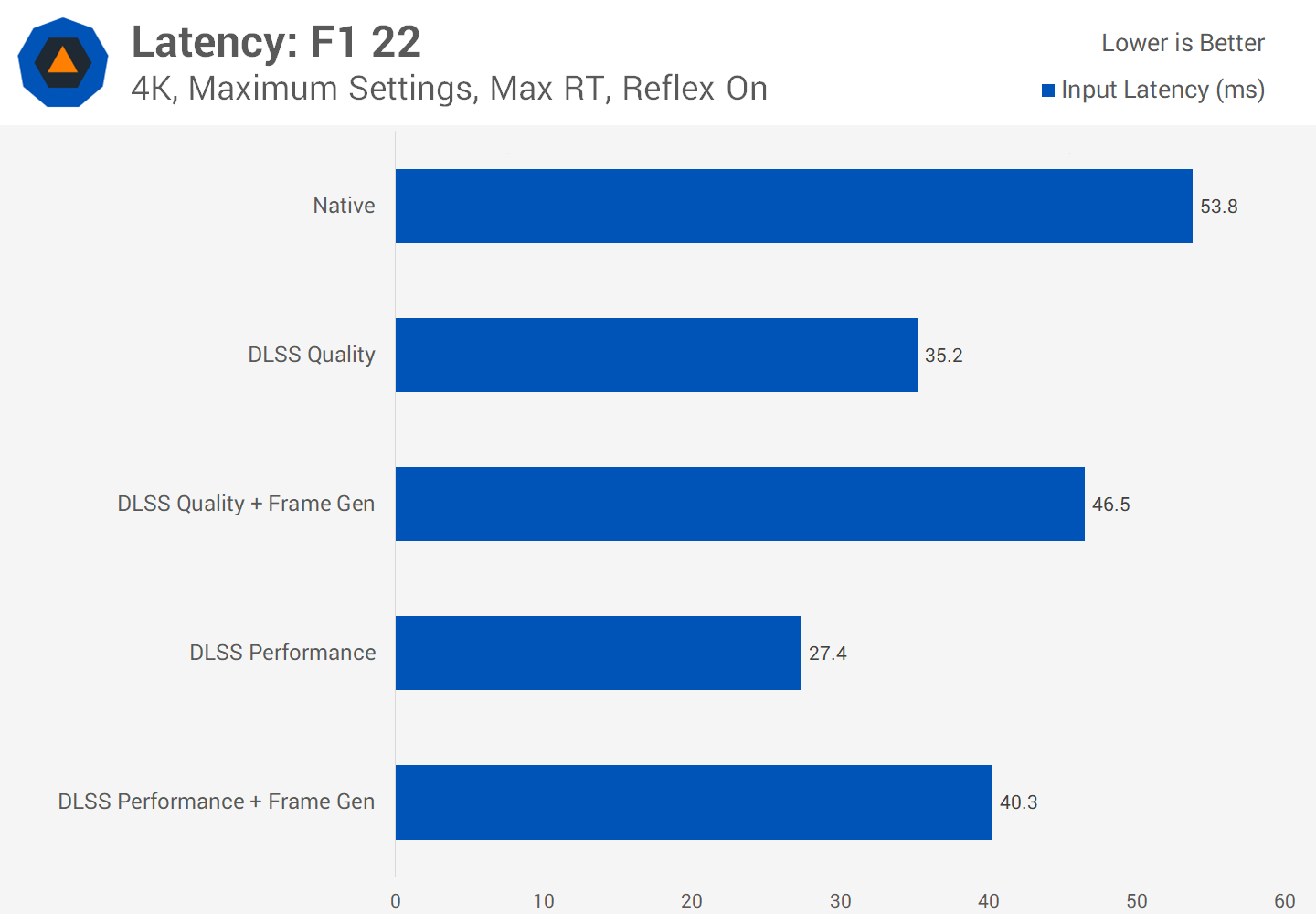

when enabling frame generation, while scene FPS rose again to 112 FPS, latency increased to 63 ms. What this means is that despite the game having the visual smoothness of 112 FPS, the latency and responsiveness you're experiencing is more like playing the game at 40 FPS. You end up with smooth motion but a slow feel, which is hard to show on a video. https://www.techspot.com/article/2546-dlss-3/

That's today's status quo. This is true, and for some games it is quite the boon, but for fast-twitch games probably not so much.

Future reprojection-based frame generation will reduce latency.

Right now, DLSS 3.0 frame generation is partially interpolation-based, but reprojection-based frame generation (e.g. Oculus ASW 2.0), ported to a PC context, is superior.

It will require some creativity, such as API hooks that feed new 6DoF positional updates (translation XYZ, rotation XYZ) from mouse / keyboard movements into the reprojection-powered frame generator.
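To make the hook idea a bit more concrete, here is a rough Python sketch of the rotation-only (mouselook) part. None of this is a real DLSS / ASW API: `Pose6DoF`, `focal_length_px` and `center_shift_px` are hypothetical names, the camera is assumed to be a simple pinhole model, and the sign convention (yaw to the right is positive, screen Y points down) is an assumption. It only computes how far the image centre has to slide; a real reprojector would warp every pixel and use depth for translation.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    """Hypothetical 6DoF camera pose: translation XYZ plus rotation (radians)."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw: float = 0.0
    pitch: float = 0.0
    roll: float = 0.0


def focal_length_px(width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels for a given horizontal field of view."""
    return (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def center_shift_px(rendered: Pose6DoF, latest: Pose6DoF,
                    width_px: int, hfov_deg: float) -> tuple[float, float]:
    """Screen-space shift (dx, dy) at the image centre needed to move an
    already-rendered frame from the pose it was rendered at to the latest
    pose read from the mouse. Rotation only; translation and roll ignored."""
    f = focal_length_px(width_px, hfov_deg)
    # Assumed convention: turning right slides the scene left (negative dx),
    # looking up slides the scene down (positive dy, screen Y pointing down).
    dx = -f * math.tan(latest.yaw - rendered.yaw)
    dy = f * math.tan(latest.pitch - rendered.pitch)
    return dx, dy


if __name__ == "__main__":
    rendered_pose = Pose6DoF()                      # pose the GPU frame used
    latest_pose = Pose6DoF(yaw=math.radians(1.0))   # mouse moved 1 degree since
    print(center_shift_px(rendered_pose, latest_pose, 1920, 90.0))
```

With a 1920-wide frame and a 90 degree horizontal FOV, a 1 degree turn works out to a shift of about 17 pixels at the centre of the screen, which is the kind of correction the frame generator would apply between real rendered frames.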

(Note: Downloadable demo! It actually works; reprojection artifacts mostly disappear if you start at an original framerate above the flicker fusion threshold, e.g. start reprojecting from 80-100fps towards a higher frame rate like 360fps, 500fps or 1000fps.)

Today's 4000-series GPUs have enough horsepower to make 4K 1000fps 1000Hz at UE5 detail levels possible.

Wait till roughly the 4.0 or 5.0 generation of DLSS, XeSS and FSR, I think, before latency-reducing frame generation comes out.

Retroactive reprojection can even undo the latency of 10ms frames (100fps) by using 1ms mouse polls, turning 100fps with 10ms rendertimes into 1000fps with 1ms latency. Future research into advanced multilayer Z-buffers with 3D reprojection (instead of just 2D reprojection) will also apply this to character movements, though initially it will only zero out latency in mouselook / strafe / pans / turns.
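The arithmetic behind that 10ms to 1ms claim can be sketched in a few lines of Python. This is a toy timeline, not a measurement: `mouselook_age_ms` is a hypothetical helper, and it assumes the game samples input at the start of each 10ms render, that the newest finished frame is what gets shown, and that the reprojection step itself fits inside one 1ms mouse poll.

```python
def mouselook_age_ms(t_ms: int, render_ms: int = 10, poll_ms: int = 1,
                     reproject: bool = True) -> int:
    """Age (ms) of the mouse sample reflected in the camera orientation
    of whatever is on screen at time t_ms, under the toy assumptions."""
    if reproject:
        # The warp uses the newest mouse poll, so orientation is at most
        # one poll interval old no matter how old the rendered frame is.
        return poll_ms
    # Without reprojection: the newest finished frame completed at the last
    # multiple of render_ms and sampled input one full render time earlier.
    last_done = (t_ms // render_ms) * render_ms
    sampled_at = last_done - render_ms
    return t_ms - sampled_at


render_ms, poll_ms = 10, 1
plain = [mouselook_age_ms(t, render_ms, poll_ms, reproject=False)
         for t in range(10, 30)]
warped = [mouselook_age_ms(t, render_ms, poll_ms) for t in range(10, 30)]

print("rendered fps:", 1000 // render_ms)
print("output fps with one warp per poll:", 1000 // poll_ms)
print("mouselook age without reprojection (ms):", min(plain), "to", max(plain))
print("mouselook age with reprojection (ms):", set(warped))
```

Under those assumptions the camera orientation on screen is 10ms to 19ms stale without reprojection and 1ms stale with it, while the output rate goes from 100fps to 1000fps. Anything that is not pure camera motion (characters, animations) still updates at the original 100fps, which is why the multilayer Z-buffer research matters.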

This does not solve frame generation's unsuitability in esports today, but that will change between now and the end of this decade. There's just no way to generate UE5.1-quality 4K 1000fps at 2nm-3nm transistor sizes without reprojection-based frame generation technology, but at least there definitely is a path forward to the 4K 1000fps future (2030s).

A multitiered GPU rendering pipeline beckons, not too different from the video layering of 1 full frame per second and 23 predicted (interpolated) frames per second that is part of video codecs such as H.264 ...
