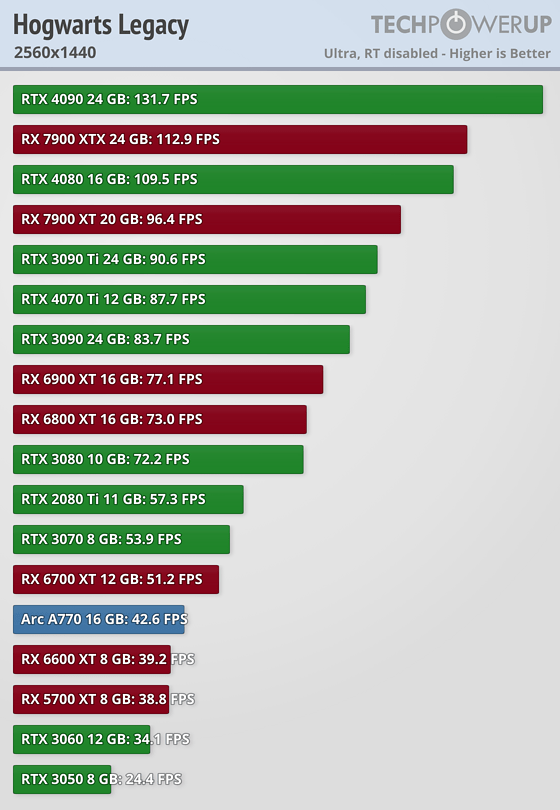

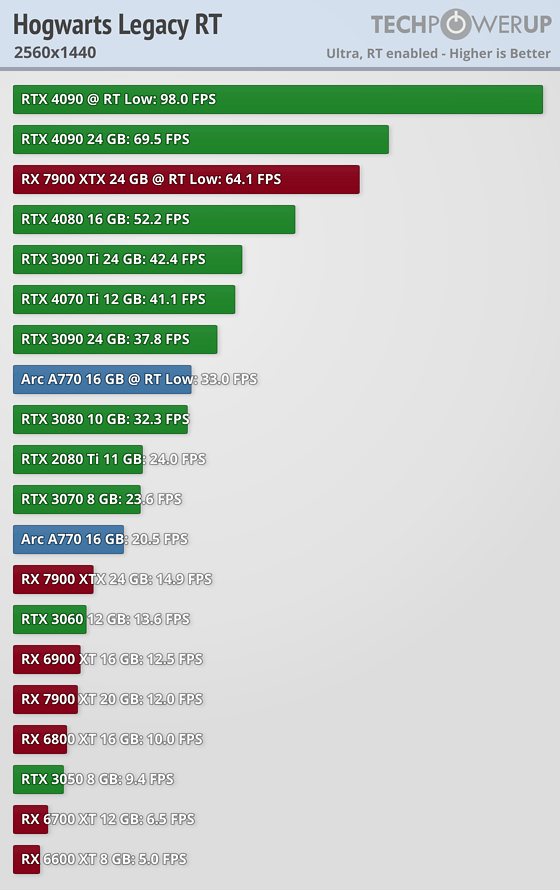

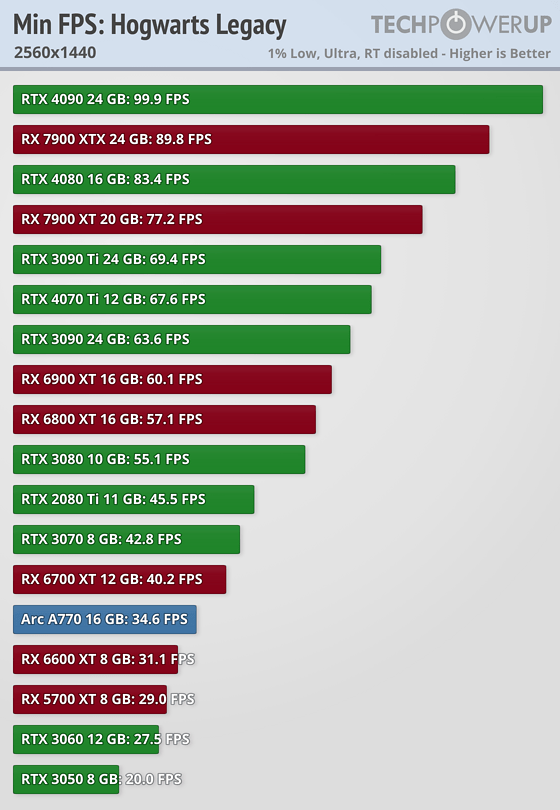

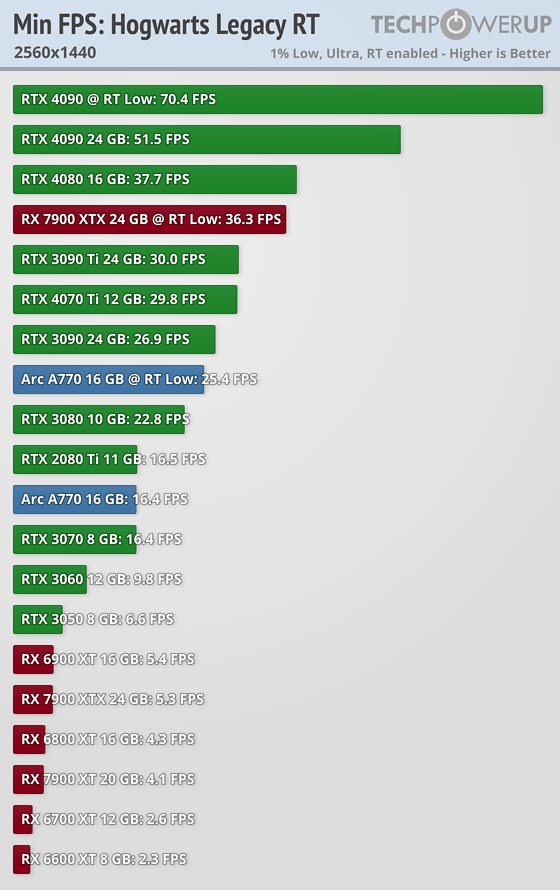

Intel’s Arc A770 Nearly 3x Faster than AMD’s Radeon RX 7900 XTX in Hogwarts with Ray Tracing

- Thread starter erek

- Start date

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,934

> Or a bug in the game or a bug in the game engine. There is a lot more to graphics than RT. Reach beyond your single game RT use case.

The way this works is that AMD's driver team should be testing games with their drivers before launch. Either that or AMD's RT performance is really bad and ain't no drivers fixing this.

> All in all Intel is not in the same league as Nv or AMD, if you're so hot on intel GPUs, then go ahead and buy one.

I'm not bound to any particular brand, and neither should you.

> Your price complaints really don't hold water in today's reality.

Excuse me but you don't see all the threads here with people complaining about GPU prices? It's a theme here.

> Support windows close, it's a fact of life, not really valid either.

Not about support officially closing but support unofficially closing.

> Opengl? Seriously?

Yes seriously. Lots of games still use it. Besides most emulators using it, there's Doom 2016, Minecraft, and a lot of Indie games.

> All in all, you just sound like a disgruntled used hardware owner. Please just go buy a new card from Nv or your extolled intel. I'm sure you'll be on there afterwards complaining about the opengl in that too or something.

Most of us are used hardware owners. People are happy that AMD's RX 6000 series is getting cheaper, but these are now 2-3 years old. Don't go putting labels on me and thinking that supports your argument, which I don't know what that is. AMD fucked up in this one particular game, but it is a big game. Did Nvidia or Intel fuck up? Doesn't look like it so far.

Chris_B

Supreme [H]ardness

- Joined

- May 29, 2001

- Messages

- 5,357

Always fun seeing performance comparisons using a game that clearly has issues needing resolved. The patch for this game which listed performance improvements was only released on Friday, this article is from Friday and the linked benchmarks are from the 7th/9th so they're using the pre-patch version.

All this debate over unplayable framerates in a just released game that clearly needs some work, lol.

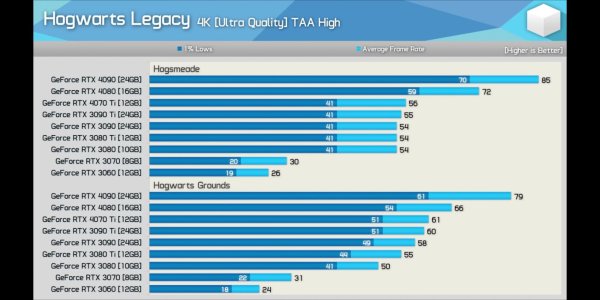

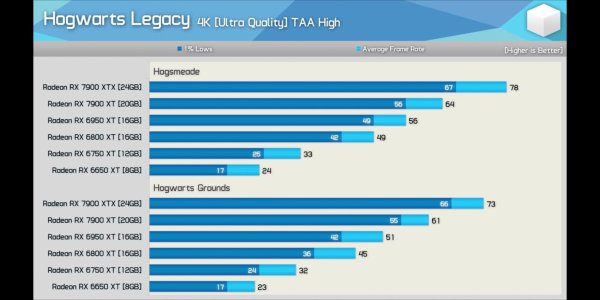

Once again if you want 4k max w/RT at playable(but still not great) framerates only the 4090 can deliver it which just reinforces my belief that we're still at least a couple generations away from it being a fully developed mainstream tech.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

Posting a picture of a CPU bound result of a GPU comparison as some kind of gotcha is even more embarrassing than the reviewer posting the video in the first place.

> All this debate over unplayable framerates in a just released game that clearly needs some work, lol.
>
> Once again if you want 4k max w/RT at playable(but still not great) framerates only the 4090 can deliver it which just reinforces my belief that we're still at least a couple generations away from it being a fully developed mainstream tech.

And we have a winner. Nailed it perfectly.

There's a reason I've been saying we're at least two and more likely three generations away from truly usable ray tracing. The performance simply isn't there outside of halo cards and until the ray tracing performance of low to midrange cards reaches the level of the current halo card it's little more than a gimmick. There are other factors which have an effect such as what is implemented and how it's implemented but that's true of any graphical features in any game.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> And we have a winner. Nailed it perfectly.
>
> There's a reason I've been saying we're at least two and more likely three generations away from truly usable ray tracing. The performance simply isn't there outside of halo cards and until the ray tracing performance of low to midrange cards reaches the level of the current halo card it's little more than a gimmick. There are other factors which have an effect such as what is implemented and how it's implemented but that's true of any graphical features in any game.

You know, it's funny. I remember the same thing happening when Tessellation was released. It got raked over the coals for how expensive it was to run. But here we are now, not batting an eye at tessellation and repeating the same arguments over Ray tracing.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,668

> You know, it's funny. I remember the same thing happening when Tessellation was released. It got raked over the coals for how expensive it was to run. But here we are now, not batting an eye at tessellation and repeating the same arguments over Ray tracing.

Same. I also remember people proudly declaring how expensive antialiasing was and how it would never be enabled for them... Now we don't bat an eye at it. We won't bat an eye at raytracing soon enough either.

> You know, it's funny. I remember the same thing happening when Tessellation was released. It got raked over the coals for how expensive it was to run. But here we are now, not batting an eye at tessellation and repeating the same arguments over Ray tracing.

I agree that the techs appear to be on a similar trajectory and if that continues doing ray traced lighting and reflections will likely end up standard in games without a major performance penalty. However I do think the early performance complaints for both are/were valid. I still enjoy playing around with these sorts of things before they're fully baked but I don't make them a major focus in buying decisions.

When it first came out the Heaven benchmark looked great with full tessellation but also turned into a slideshow, now we have games with the same level of tessellation that don't even bother to make it optional since the performance impact is negligible. I think we'll get there but aren't there yet.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,001

> You know, it's funny. I remember the same thing happening when Tessellation was released. It got raked over the coals for how expensive it was to run. But here we are now, not batting an eye at tessellation and repeating the same arguments over Ray tracing.

And what's more impressive is just how prevalent Hardware Tessellation is in modern game engines and how nobody even realizes it.

> Posting a picture of a CPU bound result of a GPU comparison as some kind of gotcha is even more embarrassing than the reviewer posting the video in the first place.

Ah, yes, the anemic 7700X is just such an unfair crutch for Nvidia drivers.

View attachment 548676View attachment 548677View attachment 548678View attachment 548679

Sure, it's not a Nvidia optimization problem, it just so CPU restricted at 4K Ultra by a low end 7700X. And then limits the 3090 Ti but not the 4090. Do explain.
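For anyone trying to follow the CPU-bound versus GPU-bound back and forth, here is a rough sketch of the usual rule of thumb, assuming the CPU and GPU work on frames in parallel so the slower of the two sets the frame rate. The function name and all of the millisecond figures are made up for illustration; they are not taken from the benchmark charts in this thread.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when CPU and GPU work overlap: the slower stage sets the pace."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical per-frame costs in milliseconds, not measurements.
SLOWER_GPU_MS = 25.0
FASTER_GPU_MS = 15.0

# Case 1: the CPU needs 10 ms per frame, less than either GPU,
# so the GPUs are the limit and the faster card shows a higher frame rate.
gpu_bound = (fps(10.0, SLOWER_GPU_MS), fps(10.0, FASTER_GPU_MS))

# Case 2: the CPU needs 30 ms per frame, more than either GPU,
# so both cards end up at the same frame rate.
cpu_bound = (fps(30.0, SLOWER_GPU_MS), fps(30.0, FASTER_GPU_MS))

print("GPU bound:", gpu_bound)
print("CPU bound:", cpu_bound)
```

Under this simplified model a pure CPU limit would show up as two different cards landing on roughly the same number, which is the pattern both sides of the argument are looking for in the charts. Real games are messier (driver overhead, per-architecture CPU cost), so treat it as a mental model only.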

> Same. I also remember people proudly declaring how expensive antialiasing was and how it would never be enabled for them... Now we don't bat an eye at it. We won't bat an eye at raytracing soon enough either.

To be fair, "true" AA never stopped being too expensive and we've moved on to other techniques.

I'm actually not sure RT can be compared to things like Tessellation and AA where the hardware will catch up. I mean I am sure it will get faster... I'm still not convinced it's not a road that gets abandoned completely either.

The difference between tessellation as an example and RT is pretty huge. Tessellation is dealing with the same raster data that was already there, it's simply doing a little bit of very basic math real time. (comparatively) RT requires a ton more work.

Where tessellation could be made a part of the standard raster hardware pipe, RT cannot. If anything as crazy as it sounds... Intel actually has the most likely to stick around hardware solution. Nvidia's dedicated units are a high silicon cost for something that does nothing when RT is not in play. Intel having one work unit to cover all situations is a great hedging of their graphics bet imo. This also however means there will probably always be a performance cost... the only time it won't be a major one is if the rest of what is going on is easily accelerated by the rest of the shader units. (so that there are free units not doing much else) At least their solution gives users a real choice... use all your shader units for raster, or lose some raster if you choose to flip RT on and have 20-30% of your raster units get retasked calculating rays.

We'll see how the future goes for RT, it's still pretty up in the air imo. As far as developer side having an easier go with RT than standard raster methods, it seems companies like Epic have that in hand to translate developer work with RT scenes to standard raster lighting. Once Nvidia goes full chiplet... I can't see them wanting to continue including a ton of separate RT hardware. I could even see Nvidia adopting an approach more like Intel's and AMD's where the same raster units will get retasked.
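To give a feel for the "little bit of very basic math" side of tessellation, here is a minimal sketch of midpoint subdivision, where each triangle is split into four smaller ones using only edge averages. This is an illustrative toy, not how any GPU's tessellation stage is actually built (real hardware works from tessellation factors and usually displaces the new vertices too); the names `midpoint`, `subdivide`, and `tessellate` are invented for the example.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[Point, Point, Point]


def midpoint(a: Point, b: Point) -> Point:
    """Average two points component by component."""
    return tuple((x + y) / 2.0 for x, y in zip(a, b))


def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four using the midpoints of its edges."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def tessellate(tris: List[Triangle], levels: int) -> List[Triangle]:
    """Apply midpoint subdivision `levels` times to every triangle."""
    for _ in range(levels):
        tris = [small for tri in tris for small in subdivide(tri)]
    return tris


base = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
dense = tessellate(base, 3)
print(len(dense))  # each level multiplies the triangle count by four
```

The work per new vertex is a handful of additions and divisions on data the rasterizer already has, which is the contrast being drawn with tracing rays through a whole scene.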

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> Ah, yes, the anemic 7700X is just such an unfair crutch for Nvidia drivers.
>
> View attachment 548703
>
> View attachment 548704
>
> Sure, it's not a Nvidia optimization problem, it just so CPU restricted at 4K Ultra by a low end 7700X. And then limits the 3090 Ti but not the 4090. Do explain.

So now you post pictures of their 4K results, which is clearly GPU bound to make a case for the CPU? The embarrassment continues.

> So now you post pictures of their 4K results, which is clearly GPU bound to make a case for the CPU? The embarrassment continues.

I don't believe you've even looked at the results, let alone understood them.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

> I don't believe you've even looked at the results, let alone understood them.

You should repeat this while looking into a mirror.

> I'm actually not sure RT can be compared to things like Tessellation and AA where the hardware will catch up. I mean I am sure it will get faster... I'm still not convinced it's not a road that gets abandoned completely either.
>
> The difference between tessellation as an example and RT is pretty huge. Tessellation is dealing with the same raster data that was already there, it's simply doing a little bit of very basic math real time. (comparatively) RT requires a ton more work.
>
> Where tessellation could be made a part of the standard raster hardware pipe, RT cannot. If anything as crazy as it sounds... Intel actually has the most likely to stick around hardware solution. Nvidia's dedicated units are a high silicon cost for something that does nothing when RT is not in play. Intel having one work unit to cover all situations is a great hedging of their graphics bet imo. This also however means there will probably always be a performance cost... the only time it won't be a major one is if the rest of what is going on is easily accelerated by the rest of the shader units. (so that there are free units not doing much else) At least their solution gives users a real choice... use all your shader units for raster, or lose some raster if you choose to flip RT on and have 20-30% of your raster units get retasked calculating rays.
>
> We'll see how the future goes for RT, it's still pretty up in the air imo. As far as developer side having an easier go with RT than standard raster methods, it seems companies like Epic have that in hand to translate developer work with RT scenes to standard raster lighting. Once Nvidia goes full chiplet... I can't see them wanting to continue including a ton of separate RT hardware. I could even see Nvidia adopting an approach more like Intel's and AMD's where the same raster units will get retasked.

This is an issue I've also considered. Currently nVidia has the lead for two reasons, they came out with RT hardware first and they have dedicated hardware for nothing but RT. Going from the 3000 to 4000 series the difference in RT performance I believe is mostly about the additional RT hardware built in. This is already using a significant amount of transistors and there's a limit on the amount of extra transistors you can add before it becomes prohibitively expensive in many ways. I wouldn't be surprised to see this limit reached soon. My prediction of a minimum of two if not three generations yet before RT is truly ready is based on someone finding a more efficient way of processing RT or RT is implemented in specific instances only to reduce the computing needed or a mixture of both.

You are 100% correct in saying that RT capable cards are basically two different GPUs working simultaneously when RT is in play. At least that's how it is with nVidia cards from my understanding. I'm not sure how AMD's implementation works but I think it's still separate RT hardware but integrated with the raster units. I was originally under the impression that it wasn't necessarily dedicated to RT only hardware but it's not something I've looked into. If Intel's solution is basically retasking raster hardware for RT, it's likely the best way to go moving forward. Raster hardware is getting into diminishing returns at most resolutions and especially at anything below 4k. It would make sense to be able to retask unused raster cores for RT especially if additional raster IPC improvements keep moving along.

That doesn't mean there can't or won't be dedicated RT hardware. I wouldn't mind seeing something along the lines of dedicated RT hardware to act as a controller and efficiency multiplier for the raster cores doing RT if something like this is possible.

No matter what, something has to change. The current trajectory of more and more RT hardware alongside raster hardware isn't going to work. It's too many transistors using too much power at too high of a cost to manufacture.

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414

> You know, it's funny. I remember the same thing happening when Tessellation was released. It got raked over the coals for how expensive it was to run. But here we are now, not batting an eye at tessellation and repeating the same arguments over Ray tracing.

i don't really remember anyone talking about tessellation being too hard to run. the problem tessellation had was nvidia's anti-competitive practices by making sure their version of tessellation was implemented in most mainstream games that were supporting it and neutered AMD cards in the process even though AMD had included tessellation support on their cards long before nvidia ever jumped on board.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,085

> I'm actually not sure RT can be compared to things like Tessellation and AA where the hardware will catch up. I mean I am sure it will get faster... I'm still not convinced it's not a road that gets abandoned completely either.
>
> The difference between tessellation as an example and RT is pretty huge. Tessellation is dealing with the same raster data that was already there, it's simply doing a little bit of very basic math real time. (comparatively) RT requires a ton more work.
>
> Where tessellation could be made a part of the standard raster hardware pipe, RT cannot. If anything as crazy as it sounds... Intel actually has the most likely to stick around hardware solution. Nvidia's dedicated units are a high silicon cost for something that does nothing when RT is not in play. Intel having one work unit to cover all situations is a great hedging of their graphics bet imo. This also however means there will probably always be a performance cost... the only time it won't be a major one is if the rest of what is going on is easily accelerated by the rest of the shader units. (so that there are free units not doing much else) At least their solution gives users a real choice... use all your shader units for raster, or lose some raster if you choose to flip RT on and have 20-30% of your raster units get retasked calculating rays.
>
> We'll see how the future goes for RT, it's still pretty up in the air imo. As far as developer side having an easier go with RT than standard raster methods, it seems companies like Epic have that in hand to translate developer work with RT scenes to standard raster lighting. Once Nvidia goes full chiplet... I can't see them wanting to continue including a ton of separate RT hardware. I could even see Nvidia adopting an approach more like Intel's and AMD's where the same raster units will get retasked.

Sounds like the Intel 8087 days all over again.

But yeah a little Infinity Fabric with an ARM chip doing AI predictive calculations on where the player is likely to go so the GPU can render the area in advance would be sweet.

> I'm actually not sure RT can be compared to things like Tessellation and AA where the hardware will catch up. I mean I am sure it will get faster... I'm still not convinced it's not a road that gets abandoned completely either.
>
> The difference between tessellation as an example and RT is pretty huge. Tessellation is dealing with the same raster data that was already there, it's simply doing a little bit of very basic math real time. (comparatively) RT requires a ton more work.
>
> Where tessellation could be made a part of the standard raster hardware pipe, RT cannot. If anything as crazy as it sounds... Intel actually has the most likely to stick around hardware solution. Nvidia's dedicated units are a high silicon cost for something that does nothing when RT is not in play. Intel having one work unit to cover all situations is a great hedging of their graphics bet imo. This also however means there will probably always be a performance cost... the only time it won't be a major one is if the rest of what is going on is easily accelerated by the rest of the shader units. (so that there are free units not doing much else) At least their solution gives users a real choice... use all your shader units for raster, or lose some raster if you choose to flip RT on and have 20-30% of your raster units get retasked calculating rays.
>
> We'll see how the future goes for RT, it's still pretty up in the air imo. As far as developer side having an easier go with RT than standard raster methods, it seems companies like Epic have that in hand to translate developer work with RT scenes to standard raster lighting. Once Nvidia goes full chiplet... I can't see them wanting to continue including a ton of separate RT hardware. I could even see Nvidia adopting an approach more like Intel's and AMD's where the same raster units will get retasked.

Yeah it ain't going anywhere. It's the end game any way you slice it and has been the holy grail forever.

There's just no feasible way to implement certain things in raster.

> Yeah it ain't going anywhere. It's the end game any way you slice it and has been the holy grail forever.
>
> There's just no feasible way to implement certain things in raster.

RT as implemented today is a sliver of RT eye candy effects. We are far off from an actual full uninhibited RT implementation for say an FPS game. When I was in comp graphics class in college, one full RT frame took hours, but yeah - damn it looked good. Suppose by now it's minutes per frame, depending on res, etc.

Chris_B

Supreme [H]ardness

- Joined

- May 29, 2001

- Messages

- 5,357

> Same. I also remember people proudly declaring how expensive antialiasing was and how it would never be enabled for them... Now we don't bat an eye at it. We won't bat an eye at raytracing soon enough either.

It still took around 12+ years until we essentially got anti aliasing for "free" from its introduction. It seemed like every other gpu release for amd/nvidia in that time period we were getting new variations of it promising less of a performance hit for better visuals. This is more of the same, though I can see this taking longer to get to that point.

For all the reasons mentioned above Ray Tracing is in a weird limbo. I do believe there are way more efficient ways to cook it into a game engine but I believe the lack of a market share is holding it back. It takes a lot of work to implement it at a low level as a core function in an engine where most implementations now are more like a bolt on extra that just sort of sticks out the side. I also believe the 2000 series isn’t up to the job for the most part. So until we have a new console generation with dedicated ray tracing accelerators and a PC market where the 3060 is the average I think we are going to see gimmicky implementations as the norm with Unreal Engine being the exception.

> Yeah it ain't going anywhere. It's the end game any way you slice it and has been the holy grail forever.
>
> There's just no feasible way to implement certain things in raster.

It has been and probably will continue to be the aspirational goal for many many years. The thing is what we have now is not actually real ray tracing either. Raster is a mathematical fake that uses perspective tricks artists have used for centuries to fake realistic 3d environments. You can use perspective to calculate where shadows should end... calculate viewer distance to determine edge softness and the like. It has worked well to fake reality. Ray tracing as people think about it in their heads is not what is happening today in games. True ray tracing calculates so much data that it still requires hours on high end CPUs per frame. What we are getting today is nothing but extra shadow and lighting calculations... we are getting some bounce calculation slaved to a perspective grid to keep the hardware costs at least somewhat reasonable. End of the day though current RT is still just a raster style illusion.... only thing that has changed really is instead of calculating shadows with object edges and applying some projection rules... we are calculating more edges following and looking for bounce lighting. Anyway it's cool tech, no doubt... really though it's a huge stretch to call what is happening today Real time ray tracing. I mean you could claim the same with standard raster and say it's single point ray shadow generation. Technically a true assertion but we understand that isn't what Pixar does.

I suspect "Ray tracing" as has been defined so far in gaming is going to get reduced. We are more likely to see less intense implementations. I also believe companies like Epic are going to make the idea of "GPU RT" somewhat irrelevant. All GPU RT is doing today really is some bounce light calculation, we don't really need special hardware or to cast a million rays per frame to do that. We have seen game engines "Faking" RT lighting solutions, things like Lumen are going to get better and faster. Lumen is a much more elegant solution that blends with raster. We don't need to calculate millions of rays and toss 90% of them through an AI filter.... that is silly and I'm surprised Nvidia was able to convince anyone that was a logical solution. We haven't seen much from Lumen yet, Fortnite isn't a great showcase. I think considering consoles for the next decade are going to be raster heavy... I think the industry is going to pick up and run with Lumen and other similar tech. If a game developer can get even 90% the same results and have their games run on anything... that is going to be where the money gets spent.

I'm not sure this is the greatest video... but at least it breaks down some of the visual differences or lack of difference between lumen and RTX.

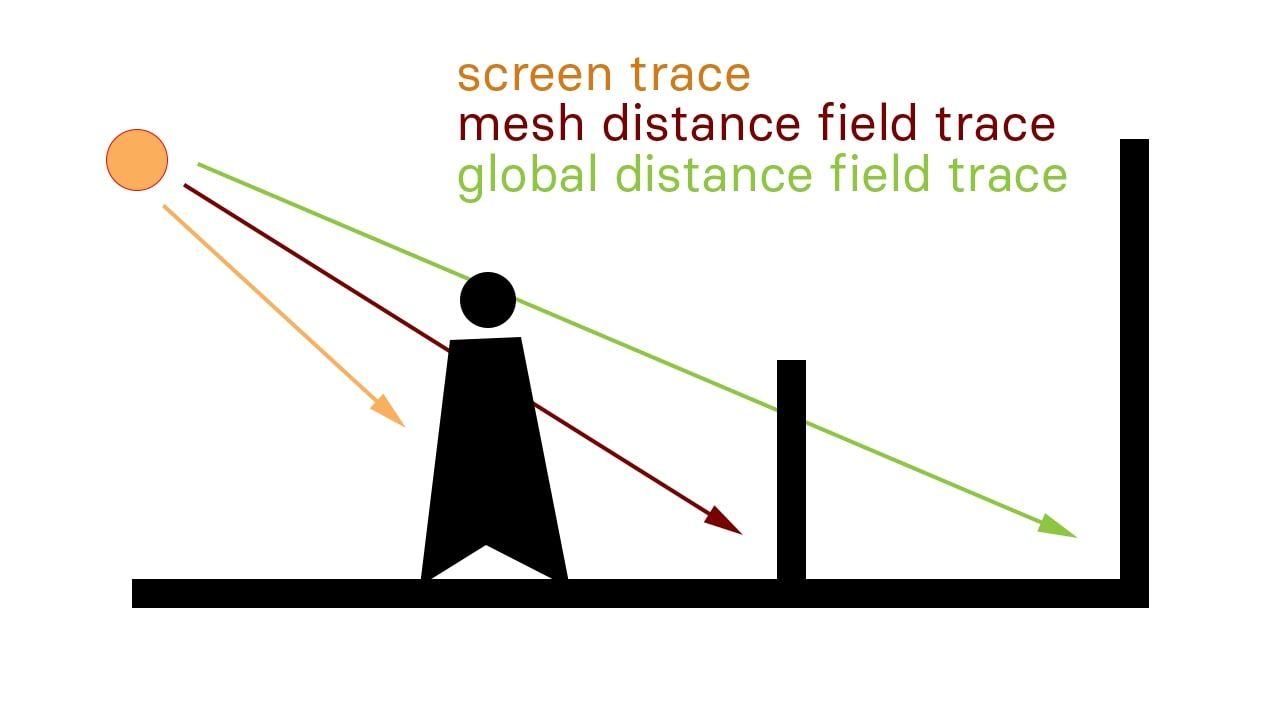

Lumen is an exception to the Ray Tracing rules, they have put in a crap load of work to get RTX enabled inside the engine at a core level and enable 3 different algorithms for ray tracing, Screen Trace, Mesh Distance Field Trace, and Global Distance Field Trace.

Nanite when combined with Lumen and the RTX functions allows the UE engine to work with some pre-generated numbers so the RTX cores on the GPU don't have to recalculate everything. It can use some approximations that are good enough, and those values are saved to the mesh.

Screen trace works with the approximations exclusively, Mesh Distance shortcuts things using those pre-generated approximations. Global is the "standard" where it calculates everything on the GPU and is what most games do automatically when enabling RTX.

Limiting what Global is required to calculate by using simpler pre-generated results and looser approximations, Nanite and Lumen get 90% of the RTX results at 50% of the GPU load, if you really stop and look at it then you can see a difference, but when doing anything at 60+ FPS, with DLSS/FSR on, all you are going to notice is it looks better than pure raster with vastly better performance.

At least this is how the UE Development documents have made me understand it, I could be off on my interpretation of the specifics of how the 3 different algorithms function.
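For anyone wondering what tracing against a distance field looks like in general, here is a minimal sketch of sphere tracing, the textbook way to march a ray through a signed distance field. It is only an illustration of the general technique and makes no claim about how Lumen is implemented; the scene (a single sphere), the function names, and the step limits are all assumptions made up for the example.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere's surface (negative inside)."""
    return math.dist(p, center) - radius


def sphere_trace(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-4):
    """March along a unit-length direction; return the hit distance or None.

    At each step the distance field says how far the nearest surface is,
    so the ray can safely jump that far without stepping through anything.
    """
    t = 0.0
    for _ in range(max_steps):
        point = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(point)
        if dist < eps:
            return t  # close enough to the surface to call it a hit
        t += dist
        if t > max_dist:
            return None  # left the scene without hitting anything
    return None


hit = sphere_trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = sphere_trace((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
print(hit, miss)
```

The appeal is that each step is one cheap distance lookup rather than an intersection test against triangles, which is the kind of trade of precision for speed described in the post above.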

sfsuphysics

[H]F Junkie

- Joined

- Jan 14, 2007

- Messages

- 15,993

So what I see is a new game runs horrible on amd cards when ray tracing turned on, Intel cards do better, I mean I'm not shocked by any stretch Intel obviously went more in that direction with it...

Flogger23m

[H]F Junkie

- Joined

- Jun 19, 2009

- Messages

- 14,364

> Lumen is an exception to the Ray Tracing rules, they have put in a crap load of work to get RTX enabled inside the engine at a core level and enable 3 different algorithms for ray tracing, Screen Trace, Mesh Distance Field Trace, and Global Distance Field Trace.
>
> Nanite when combined with Lumen and the RTX functions allows the UE engine to work with some pre-generated numbers so the RTX cores on the GPU don't have to recalculate everything it can use some approximations that are good enough and those values are saved to the mesh.
>
> Screen trace works with the approximations exclusively, Mesh Distance shortcuts things using those pre-generated approximations. Global is the "standard" where it calculates everything on the GPU and is what most games do automatically when enabling RTX.
>
> Limiting what Global is required to calculate by using simpler pre-generated results and looser approximations, Nanite and Lumen get 90% of the RTX results at 50% of the GPU load, if you really stop and look at it then you can see a difference, but when doing anything at 60+ FPS, with DLSS/FSR on, all you are going to notice is it looks better than pure raster with vastly better performance.
>
> At least this is how the UE Development documents have made me understand it, I could be off on my interpretation of the specifics of how the 3 different algorithms function.

Seems like a smart way to do things even if the overall quality isn't as good. Performance really matters and I think ray tracing will continue to be very demanding for a few more generations so anything to help with the performance is ideal.

The game is a PS5 title first, an Xbox title second, and PC coming in distant third. Ray tracing there is at best an afterthought and the amount of effort they have put into smoothing it out there shows as much.

I also believe companies like Epic are going to make the idea of "GPU RT" somewhat irrelevant.

Could go either way, as the cost of enabling hardware RT is small versus software RT and you seem to get significantly better results:

(less shadow pop up)

(shadow under movable object)

It could make for a smaller difference, but one that is not only fully possible to run well enough on consoles and AMD/Intel GPUs, but also quite common in most games, as it is part of a very popular game engine.

The next PS/Xbox generation could be the deciding factor: do they save die space because the Epic-style approach seems to be close enough, or do they keep it and make GPU RT happen in almost all titles in the near future?

It certainly is the smarter way of doing things. Most games right now are basically brute-forcing ray tracing, while Unreal is working to actively integrate it in a smart way. But they are spending very large sums of money on doing this, something most game studios using in-house engines or older versions of Unreal aren't going to do. It really is a massive incentive for developers to switch to Unreal or upgrade their license or project from older to newer versions. This is great, yay for better visuals, but at the same time, if everybody switches engines then everything starts being pretty "meh" as games all become Unreal titles with very little variation in gameplay or mechanics. That in turn gives Unreal more money and incentive to add features and functions that give more flexibility to what the engine can do, which is good, but maybe adds bloat that makes the engine less efficient as a whole, which is bad.

Game developers are working in interesting times for sure either way.

Yep, this is exactly my point. Epic has shown the industry how to get 90% of RTX quality at 50% of the cost... and Lumen allows developers to include "RT" that will run on a console locked to 30 / 60 FPS. Which is why RTX Nvidia-style is dead, imo. Having looked at a bunch of Fortnite screens and videos of Lumen vs Lumen+RT... it's my opinion of course, but I think Lumen looks better. (It could be a Fortnite artifact perhaps, but to my eye RT does slightly better on things close to the camera, like explosive shadows through foliage etc... but Lumen seems to pick up further-away world items for reflection.) The fact that Lumen also performs better is the icing.

I also believe third-party developers will figure out how to optimize Lumen to an even higher degree, especially seeing as it's going to allow them to sell games with full RT on both consoles. It's also far more future-proof. I know it seems crazy that a company like Nvidia may shave down RT in future generations, but I believe it's a real possibility. The 4090 may be the last time Nvidia sells a consumer chip that is so similar to their data center products. If the 5000s are chiplet, I could see them having no real improvement and perhaps even a slight regression in terms of RTX baked in, especially if Nvidia feels like the development winds are just not getting behind RTX in favor of tech like Lumen.

I agree, we'll have to see more real game examples with Lumen... it's still hard to tell if it delivers.

Most developers that have been using it are pretty excited; it seems like it's going to take off.

No doubt hardware RT is still better at some things. I think, though, that game developers will find a way to avoid Lumen's weak spots and play to its strengths. There are a few things you can do with Lumen lighting that are not very expensive but that with RT would involve a ton of extra ray generation. You can already see it in Fortnite, where they have clearly capped how far RT rays are bouncing, as Lumen is reflecting things at a much further draw distance. I'm not a Fortnite dev, but I strongly suspect that is an RT limitation. Casting rays out that far is very taxing, whereas Lumen is using the same old raster lighting tricks that make it much easier to determine where a shadow and reflection should be cast.

Software Lumen traces against a distance field of the mesh instead of triangles. It's blobby and low resolution and nothing dynamic is represented. So an animated object will not contribute to the scene.

You can't get a true mirror reflection out of it either.

So imagine Spider-Man where you can see cars and people reflected in a building's glass. Software won't look correct at all in this case.

Hardware Lumen has a feature where it traces like a kilometer out in the Matrix demo. The night time lighting is extremely gimped with software mode.
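For anyone wondering what "tracing against a distance field" looks like in practice, here is a minimal Python sketch of sphere tracing. It is only an illustration under stated assumptions: the scene is a single analytic sphere instead of a baked mesh distance field, and `sphere_sdf`, `sphere_trace`, and every parameter are made-up names, not anything from Unreal.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere's surface (negative inside).

    Hypothetical stand-in for a baked mesh distance field.
    """
    return math.dist(p, center) - radius

def sphere_trace(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-3):
    """March along a ray, stepping by whatever distance the field reports.

    direction is assumed to be unit length.
    Returns the hit distance along the ray, or None on a miss.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t          # close enough to the surface: call it a hit
        t += dist             # safe step: nothing is closer than dist
        if t > max_dist:
            break
    return None

hit = sphere_trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = sphere_trace((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
```

The ray never tests a triangle; it only asks the field "how far is the nearest surface?" and steps by that amount. That is why the result depends on how fine the distance field is, which fits the "blobby and low resolution" complaint above.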

OutOfPhase

Supreme [H]ardness

- Joined

- May 11, 2005

- Messages

- 6,579

The challenge is and will be - how can RT HW be an overall net positive?

We're seeing devs throw spaghetti at frustum walls right now, and frankly it is all over the place.

I have to assume hybrid solutions are and will be the answer for the foreseeable future. We have great new hardware tools - but they are just that. Tools. Not exclusionary items.

next-Jin

Supreme [H]ardness

- Joined

- Mar 29, 2006

- Messages

- 7,387

Always fun seeing performance comparisons using a game that clearly has issues needing resolved. The patch for this game which listed performance improvements was only released on Friday; this article is from Friday and the linked benchmarks are from the 7th/9th, so they're using the pre-patch version.

That patch didn’t do anything other than release preorder changes or whatever, iirc. There were no optimizations.

That said, I have around 40 hrs in and something is preventing the CPU from sending draw calls to the GPU. It’s not a CPU or GPU bottleneck.

I’m on a 7700 with 32GB 6000MT/s and a 4090. I can literally run around Hogsmeade at 80ish fps with 30% CPU usage and 50% GPU usage at 4K Ultra with no DLSS upscaling bs, no frame generation, and Low TAA.

I disabled Hyper Threading so it’s only using the 8 functional cores.

Of those 8 cores on Afterburner's OSD, they hover at 30% at most.

The weird thing is, frame times are amazing. It’s not a shader compilation issue. But sometimes it’ll drop to 50ish going back up to 80ish with no real spike to frame times.

Chris_B

Supreme [H]ardness

- Joined

- May 29, 2001

- Messages

- 5,357

The patch had this listed as being in it:

- Fixes to game crashing issues.

- Address the stuttering and lag issues.

- Add gameplay adjustments and optimizations.

- Add general stability fixes.

- Add performance improvements.

- Other minor fixes.

I have only a passing experience with Lumen. I have a dev environment for Unreal and we have students using it, so I have been around to assist with the setup and have been in a few classes to assist with troubleshooting as the need arises (because why delegate the fun jobs, right?), and the things I have seen the students do so far are pretty fun.

This is from one of the Dev Communities:

First, Lumen will trace against the depth buffer, these screen traces will trace until they hit anything and if it hits a mesh and can’t go through, Lumen will switch the trace to another virtual representation of the scene, which uses Signed Distance Fields. And then, when this Mesh Distance Field trace hits a mesh, Lumen will grab the lighting information of that hit on the surface cache. Since the mesh distance field trace works also offscreen, they’re also an expensive operation, and to balance that cost, Lumen switches to Global Distance Field trace at a certain distance, which is a much cheaper and faster trace but with lower reliability.

In a way, Lumen gives us a very optimized way of creating dynamic lighting that covers both outdoor and indoor spaces because it switches between those tracing methods more efficiently.

Most of the other implementations I have seen only use the expensive methods where it is tracing everything on screen out to a specified draw distance, where they then just stop ray tracing altogether.

For more details here is the tutorial page:

https://dev.epicgames.com/community...ghting-outdoor-environments-in-ue5-with-lumen
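The fallback order described in the quote above (screen trace first, then mesh distance field, then global distance field) can be sketched roughly like this in Python. It is a toy under loud assumptions: the three tracers are hypothetical stand-ins, the hand-off distances `screen_limit` and `mesh_limit` are invented fixed numbers, and real Lumen decides where to switch in its own way.

```python
from typing import Callable, Optional, Tuple

# Hypothetical tracer signature: trace the ray segment [start, end] and
# return the hit distance, or None if nothing was resolved in that segment.
Tracer = Callable[[float, float], Optional[float]]

def trace_ray(screen: Tracer, mesh_sdf: Tracer, global_sdf: Tracer,
              screen_limit: float, mesh_limit: float,
              max_dist: float) -> Tuple[str, Optional[float]]:
    """Try the cheapest, most detailed method first and fall back outward."""
    hit = screen(0.0, screen_limit)          # depth-buffer trace, on-screen only
    if hit is not None:
        return ("screen", hit)
    hit = mesh_sdf(screen_limit, mesh_limit) # per-mesh distance fields, near range
    if hit is not None:
        return ("mesh_sdf", hit)
    hit = global_sdf(mesh_limit, max_dist)   # coarse global field, far range
    if hit is not None:
        return ("global_sdf", hit)
    return ("miss", None)

def make_tracer(surface_distance: float, visible: bool = True) -> Tracer:
    """Toy tracer: the 'scene' is one surface at a fixed distance along the ray."""
    def tracer(start: float, end: float) -> Optional[float]:
        if visible and start <= surface_distance <= end:
            return surface_distance
        return None
    return tracer

# Off-screen surface 30 units away: the screen trace cannot see it.
near = trace_ray(make_tracer(30.0, visible=False), make_tracer(30.0),
                 make_tracer(30.0), screen_limit=5.0, mesh_limit=50.0,
                 max_dist=1000.0)

# Surface 400 units away: beyond the mesh distance field range.
far = trace_ray(make_tracer(400.0, visible=False), make_tracer(400.0),
                make_tracer(400.0), screen_limit=5.0, mesh_limit=50.0,
                max_dist=1000.0)
```

Here `near` resolves through the mesh distance field step and `far` falls through to the global one, which is the cost balancing the quote describes: the expensive method only covers the range where its detail matters.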

With RDNA3, AMD added AI cores (for RT) to their compute units that function similarly to the tensor cores that Nvidia uses, so they're both using specific hardware suited to the task. I think it will take at least a couple of generations of improvements and optimizations in both hardware and games before nice RT effects can be used with only a reasonable performance hit. These will of course still be bolt-on effects over a rasterized image, because we're a loooonnng way off from fully ray traced, graphically complex games.

This is an issue I've also considered. Currently Nvidia has the lead for two reasons: they came out with RT hardware first, and they have dedicated hardware for nothing but RT. Going from the 3000 to the 4000 series, the difference in RT performance is, I believe, mostly about the additional RT hardware built in. This already uses a significant number of transistors, and there's a limit on how many extra transistors you can add before it becomes prohibitively expensive in many ways. I wouldn't be surprised to see this limit reached soon. My prediction of a minimum of two if not three generations yet before RT is truly ready is based on someone finding a more efficient way of processing RT, or RT being implemented in specific instances only to reduce the computing needed, or a mixture of both.

You are 100% correct in saying that RT-capable cards are basically two different GPUs working simultaneously when RT is in play. At least that's how it is with Nvidia cards, from my understanding. I'm not sure how AMD's implementation works, but I think it's still separate RT hardware, just integrated with the raster units. I was originally under the impression that it wasn't necessarily dedicated RT-only hardware, but it's not something I've looked into. If Intel's solution is basically retasking raster hardware for RT, it's likely the best way to go moving forward. Raster hardware is getting into diminishing returns at most resolutions, especially at anything below 4K. It would make sense to be able to retask unused raster cores for RT, especially if additional raster IPC improvements keep moving along.

That doesn't mean there can't or won't be dedicated RT hardware. I wouldn't mind seeing something along the lines of dedicated RT hardware to act as a controller and efficiency multiplier for the raster cores doing RT if something like this is possible.

No matter what, something has to change. The current trajectory of more and more RT hardware alongside raster hardware isn't going to work. It's too many transistors using too much power at too high of a cost to manufacture.

next-Jin

Supreme [H]ardness

- Joined

- Mar 29, 2006

- Messages

- 7,387

The patch had this listed as being in it:

Seems this game needs a lot of work still.

- Fixes to game crashing issues.

- Address the stuttering and lag issues.

- Add gameplay adjustments and optimizations.

- Add general stability fixes.

- Add performance improvements.

- Other minor fixes.

Is that sourced from the developers or that German website? The patch had zero impact on any of my machines.
