erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,874

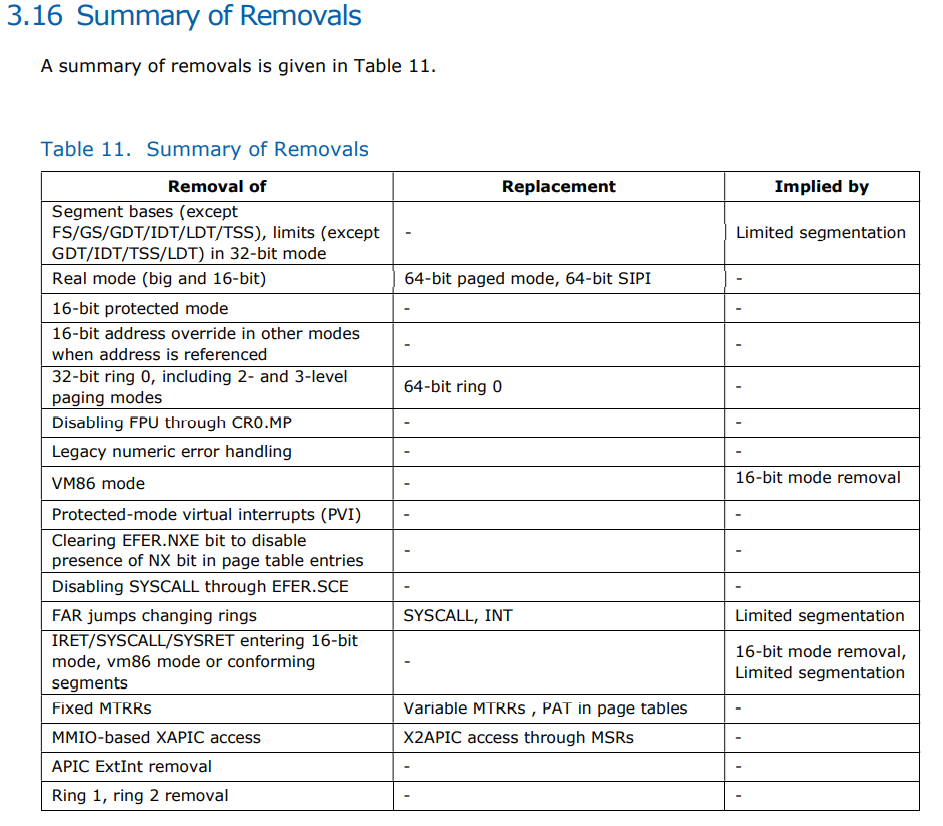

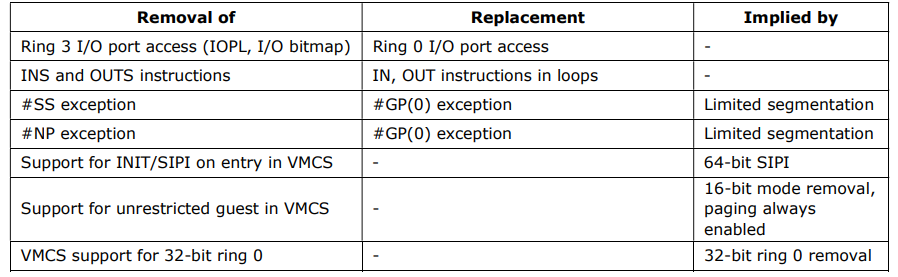

New architectures are cool

“Under this proposal, those wanting to run legacy operating systems or 32-bit x86 software would have to rely on virtualization.

Those interested can see Intel's documentation around the proposed 64-bit only architecture X86-S via Intel.com.

It's still likely some years away before seeing this possible x86S/X86-S architecture for going 64-bit only but very interesting to see Intel beginning these moves for doing away with the legacy modes.”

Source: https://www.phoronix.com/news/Intel-X86-S-64-bit-Only
