I have an Intel P3608 1.6 TB in my home workstation. Not sure why I really did this, but I decided to try out the Squid from Amfeltec, put four 1 TB Samsung 960 Pro NVMe drives on it, and do a comparison. Thought I would share the results:

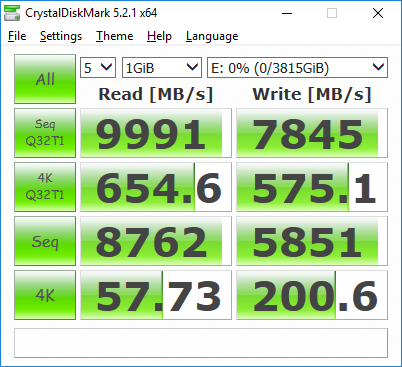

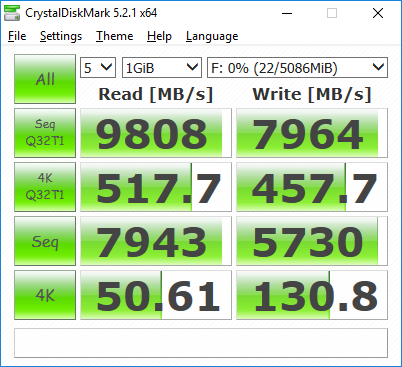

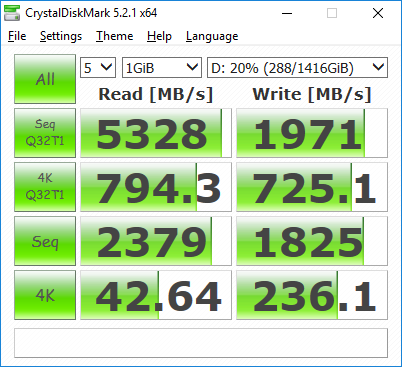

P3608:

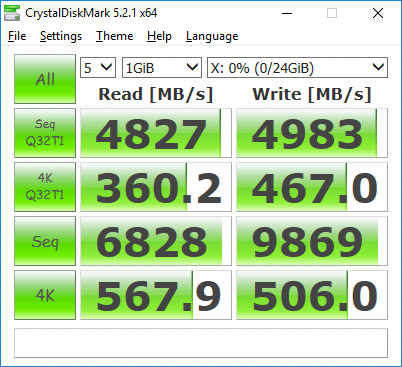

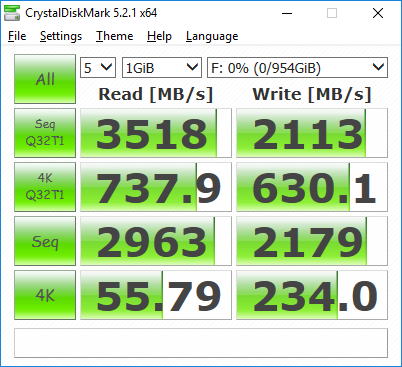

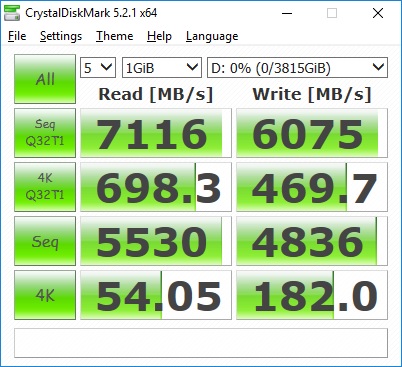

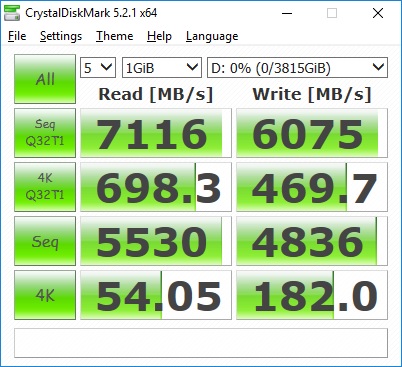

Amfeltec:

Anyone have comparison numbers to something similar so I can glean whether I am getting the full performance out of these?
