Flexion

[H]ard|Gawd

- Joined: Jul 20, 2004

- Messages: 1,607

"Intel Has a Core Issue..."


The question then is, why does that latter task require tons of CPU horsepower?

It will of course depend on the application, but I find myself at a loss to imagine one that would do so.

Intel and AMD both need the next Killer App, something highly parallelizable, requiring insane amounts of CPU, that has wide mass-market appeal.

An app that is simply highly parallelizable would probably just be done on GPUs.

Think of having every non-private corner of a residence (or small business) under 4K60+ surveillance; that footage has to be piped around and analyzed, at a basic level for changes, with those changes then piped to inference engines. Dozens of cameras, which themselves should be far smaller than current surveillance cameras, supposing they are based on current cell-phone camera designs. Perhaps with stereo and IR capability as well.

This has mass-market appeal? For business, maybe, but if I had a home like that, I'd be taking an ice pick to all those cameras.
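For a sense of scale on the "footage has to be piped around" point, here's a rough Python sketch. It assumes uncompressed 8-bit 4:2:0 video (1.5 bytes per pixel) and a count of 24 cameras, both my own assumptions; real IP cameras would normally compress before sending. The change check is naive frame differencing with hypothetical thresholds, only meant to illustrate the "analyzed at a basic level for changes" step, not any particular product's pipeline.

```python
def raw_stream_gbps(width: int, height: int, fps: int, bytes_per_pixel: float = 1.5) -> float:
    """Uncompressed bitrate in Gbit/s (1.5 bytes/pixel assumes 8-bit 4:2:0)."""
    return width * height * bytes_per_pixel * fps * 8 / 1e9


def changed_fraction(prev, curr, threshold: int = 25) -> float:
    """Fraction of pixels whose grayscale value moved by more than `threshold`.

    Frames are lists of rows of 0-255 ints. A real system would do this on a
    GPU or in a video library; this only shows the frame-differencing idea.
    """
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > threshold:
                changed += 1
    return changed / total if total else 0.0


if __name__ == "__main__":
    per_camera = raw_stream_gbps(3840, 2160, 60)
    print(f"one raw 4K60 camera: {per_camera:.2f} Gbit/s")
    print(f"24 cameras (assumed count): {24 * per_camera:.1f} Gbit/s")

    # Hypothetical 4x4 grayscale frames: only the bottom-right pixel changes.
    frame_a = [[10] * 4 for _ in range(4)]
    frame_b = [row[:] for row in frame_a]
    frame_b[3][3] = 200
    if changed_fraction(frame_a, frame_b) > 0.05:  # hypothetical trigger level
        print("change detected: forward frame to inference")
```

By this arithmetic a single raw 4K60 stream is roughly 6 Gbit/s, so two of them would already exceed a 10GbE link, which suggests the analysis would have to run on compressed streams or close to the cameras.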

The truth is that none of us NEED anything other than a basic Celeron computer, but we like to have nice things.

I agree with respect to 'needs', and for desktop computing I feel that you're absolutely right; but for stuff that's compute-intensive, be it software development or content creation of some sort, applications do exist for these many-cores-per-socket products. They're particularly great for work that's perhaps already well-threaded but not currently well-distributed between nodes; stuff that's latency-sensitive really needs network technologies that do not exist at the workstation level, and more cores help.

Good point. I can also add that there may not be ONE thing that can use all my cores, but I can run 10 different active applications AND game without slowdown. That in itself is a blessing.

Isn't the advantage of ThreadRipper2 the fact that it offers more PCIe lanes for motherboard manufacturers to use for more features?

Basically, I experimented with the idea of adding 10Gbase-T to a consumer board. The result was seeing that it's going to cost an extra $100, and if the controller isn't built in (Intel or AMD sub-HEDT), you're going to lose GPU lanes. Most Intel HEDT platforms are in the same boat. So for 10Gbit or other higher-bandwidth connection needs, AMD is certainly hitting a market point that Intel hasn't yet chosen to target.

I have an Intel 10GBase-T adapter in my Asus P9X79 WS workstation board and it works great.

PCMag.com's encyclopedia defines it as "An Intel term for high-performance desktop computers." As others here have mentioned with reference to the X58 chipset, Intel has been using the term for over 10 years, as seen on page 4 of this PDF dated April 2, 2008.

So long as you use the Aquantia NIC (or pay up for Intel PCIe 3.0), it should be fine, yeah. The main problem is with the CPUs that skimp on PCIe lanes, and how those boards are usually set up.

```
02:00.0 Ethernet controller: Intel Corporation 82598EB 10-Gigabit AT2 Server Adapter (rev 01)
    Subsystem: Intel Corporation 82598EB 10-Gigabit AT2 Server Adapter
    Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx+
    Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
    Latency: 0, Cache Line Size: 64 bytes
    Interrupt: pin A routed to IRQ 26
    Region 0: Memory at fa640000 (32-bit, non-prefetchable) [size=128K]
    Region 1: Memory at fa600000 (32-bit, non-prefetchable) [size=256K]
    Region 2: I/O ports at d000 [disabled] [size=32]
    Region 3: Memory at fa660000 (32-bit, non-prefetchable) [size=16K]
    Capabilities: [40] Power Management version 3
        Flags: PMEClk- DSI+ D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot+,D3cold-)
        Status: D0 NoSoftRst- PME-Enable- DSel=0 DScale=1 PME-
    Capabilities: [50] MSI: Enable- Count=1/1 Maskable- 64bit+
        Address: 0000000000000000 Data: 0000
    Capabilities: [60] MSI-X: Enable+ Count=18 Masked-
        Vector table: BAR=3 offset=00000000
        PBA: BAR=3 offset=00002000
    Capabilities: [a0] Express (v2) Endpoint, MSI 00
        DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s <512ns, L1 <64us
            ExtTag- AttnBtn- AttnInd- PwrInd- RBE+ FLReset-
        DevCtl: Report errors: Correctable+ Non-Fatal+ Fatal+ Unsupported+
            RlxdOrd- ExtTag- PhantFunc- AuxPwr- NoSnoop+
            MaxPayload 256 bytes, MaxReadReq 512 bytes
        DevSta: CorrErr- UncorrErr- FatalErr- UnsuppReq- AuxPwr- TransPend-
        LnkCap: Port #0, Speed 2.5GT/s, Width x8, ASPM L0s L1, Exit Latency L0s <4us, L1 <64us
            ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp-
        LnkCtl: ASPM Disabled; RCB 64 bytes Disabled- CommClk+
            ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-
        LnkSta: Speed 2.5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-
        DevCap2: Completion Timeout: Range ABCD, TimeoutDis+, LTR-, OBFF Not Supported
        DevCtl2: Completion Timeout: 16ms to 55ms, TimeoutDis-, LTR-, OBFF Disabled
        LnkCtl2: Target Link Speed: 2.5GT/s, EnterCompliance- SpeedDis-
            Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-
            Compliance De-emphasis: -6dB
        LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete-, EqualizationPhase1-
            EqualizationPhase2-, EqualizationPhase3-, LinkEqualizationRequest-
    Capabilities: [100 v1] Advanced Error Reporting
        UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-
        UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-
        CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr-
        CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- NonFatalErr+
        AERCap: First Error Pointer: 00, GenCap- CGenEn- ChkCap- ChkEn-
    Capabilities: [140 v1] Device Serial Number xx-xx-xx-xx-xx-xx-xx-xx
    Kernel driver in use: ixgbe
    Kernel modules: ixgbe
```

Does anyone here actually remember using it 10 years ago? Even 5 years ago?

Yup, that's part of the overall problem: if you want 10Gbase-T (and I do mean the RJ-45 version), and you also want it on PCIe 3.0 (so that it's not potentially downgrading anything else to PCIe 2.0, or PCIe 1.0!!), you can be a bit limited in terms of platform support. It's a further issue if you want to build a "hyperconverged" system with a ton of local storage, as even 10Gbase-T is dog slow if you're not running some form of RDMA, and even then it's still slower than local storage in terms of latency, most especially if you're comparing to local NVMe.
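Since the thread keeps coming back to whether a 10Gbase-T card gets enough PCIe bandwidth, here's a rough calculator in Python. The encoding figures (8b/10b at 2.5 and 5 GT/s, 128b/130b at 8 and 16 GT/s) come from the PCIe generations themselves; the function names and the regex for the `LnkSta` line of `lspci -vv` are my own illustrative assumptions. It ignores packet and protocol overhead, so treat every result as an upper bound.

```python
import re

# Signalling rate (GT/s) -> line-encoding efficiency, PCIe 1.x through 4.0.
ENCODING = {2.5: 8 / 10, 5.0: 8 / 10, 8.0: 128 / 130, 16.0: 128 / 130}


def link_gbps(speed_gts: float, width: int) -> float:
    """Usable Gbit/s per direction after line encoding only.

    Packet/protocol overhead is ignored, so real throughput is lower.
    """
    return speed_gts * ENCODING[speed_gts] * width


def parse_lnksta(lspci_text: str):
    """Extract (speed_gts, width) from the LnkSta line of `lspci -vv` output."""
    m = re.search(r"LnkSta:\s*Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)", lspci_text)
    if m is None:
        raise ValueError("no LnkSta line found")
    return float(m.group(1)), int(m.group(2))


def fits_10gbe(speed_gts: float, width: int, ports: int = 1) -> bool:
    """True if the link can carry `ports` x 10 Gbit/s in one direction."""
    return link_gbps(speed_gts, width) >= 10.0 * ports


if __name__ == "__main__":
    sample = "LnkSta: Speed 2.5GT/s, Width x8, TrErr- Train- SlotClk+ DLActive-"
    speed, width = parse_lnksta(sample)
    print(f"{speed}GT/s x{width}: {link_gbps(speed, width):.1f} Gbit/s, "
          f"one 10GbE port ok: {fits_10gbe(speed, width)}")
    for speed, width in [(2.5, 4), (5.0, 4), (8.0, 2), (8.0, 4)]:
        print(f"{speed}GT/s x{width}: {link_gbps(speed, width):.2f} Gbit/s, "
              f"one 10GbE port ok: {fits_10gbe(speed, width)}")
```

Fed the `LnkSta` line from the 82598EB output earlier in the thread (2.5GT/s, x8), this says a PCIe 1.0 x8 link still has about 16 Gbit/s for one 10GbE port, while PCIe 1.0 x4 (8 Gbit/s) falls short and PCIe 3.0 x2 (about 15.75 Gbit/s) is enough, which is why lane count and link generation matter together.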

However, with respect to your solution, I can't say that I disagree; I did spend up on a 10Gbase-T switch from HP (with two shared SFP+ ports) simply so that I can expand going forward, and I'm hoping to trunk it over 10Gbit to an Aruba switch sourced from eBay.

They need to license Infinity Fabric from AMD like x64, or make their own variant of the tech. They can call it "IntellaGlue".

Not arguing about the worthiness of HEDT as an acronym, just stating that its use did not start recently.

Here are 4 examples from 2013 of various websites using the term "HEDT":

Intel Roadmap Leaks Haswell-E "Lituya Bay" HEDT Platform

Intel Core i7 "Ivy Bridge-E" HEDT Lineup Detailed

Ivy Bridge-E HEDT Core i7 is actual six-core Xeon die

Intel HEDT processors are designed for enthusiasts..

BTW, [H] has also been using the term since 2014:

The X99 platform has been a success with enthusiasts despite the cost of entry to the HEDT (High End Desktop) market being the expense of DDR4 RAM.

If you’re in the market for an entry into the HEDT segment then the X99-A is an excellent choice and a great value to boot.

...as well as [H] forum members since 2013:

What it boils down to is that Ivy Bridge-E is a waste of time for the HEDT crowd.

I've never actually tested disk access latency of remote drives. How would you even do that? Put a drive image in a VM on it, and then do a Disk Bench?

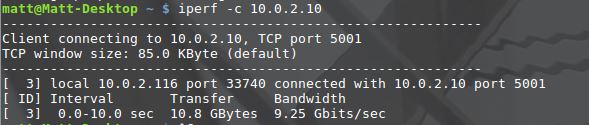

I do everything that requires low latency locally, though. My remote NAS is just for file storage, and it does very well at that. I have, on occasion, seen it hit ~1.2GB/s across the adapter, though that was probably for data that was already in the NAS server's RAM cache.
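On the question of how to measure the disk access latency of a remote drive: one low-tech approach, sketched here in Python under my own assumptions (a Unix system, the share mounted locally, a hypothetical file path), is to time random 4 KiB reads from a large file on the share and look at the median and tail rather than throughput. As the RAM-cache observation above hints, caching on either end will flatter the numbers unless the test file is much larger than both caches.

```python
import os
import random
import statistics
import sys
import time


def read_latencies(path: str, block_size: int = 4096, samples: int = 200, seed: int = 0):
    """Time `samples` random, block-aligned reads from `path`; returns seconds.

    Uses os.pread (Unix only). Client page-cache and server RAM-cache hits are
    included, so use a file far larger than either to approach disk latency.
    """
    blocks = os.path.getsize(path) // block_size
    if blocks == 0:
        raise ValueError("file is smaller than one block")
    rng = random.Random(seed)
    fd = os.open(path, os.O_RDONLY)
    try:
        latencies = []
        for _ in range(samples):
            offset = rng.randrange(blocks) * block_size
            start = time.perf_counter()
            os.pread(fd, block_size, offset)
            latencies.append(time.perf_counter() - start)
        return latencies
    finally:
        os.close(fd)


def summarize(latencies):
    """Return (median, ~99th percentile) in microseconds."""
    ordered = sorted(latencies)
    p99 = ordered[min(len(ordered) - 1, int(0.99 * len(ordered)))]
    return statistics.median(ordered) * 1e6, p99 * 1e6


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python readlat.py /mnt/nas/bigfile.bin
    med, p99 = summarize(read_latencies(sys.argv[1]))
    print(f"median {med:.0f} us, p99 {p99:.0f} us")
```

Running it once against a file on the NAS share and once against a file on a local NVMe drive gives the comparison being asked about; a dedicated benchmark such as fio does the same job with much more control over caching and queue depth. For reference on the throughput figure above, 10 Gbit/s is 1.25 GB/s of raw line rate before Ethernet and TCP overhead, so ~1.2 GB/s is close to the link's ceiling.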