erek

[H]F Junkie · Joined Dec 19, 2005 · 10,875 messages

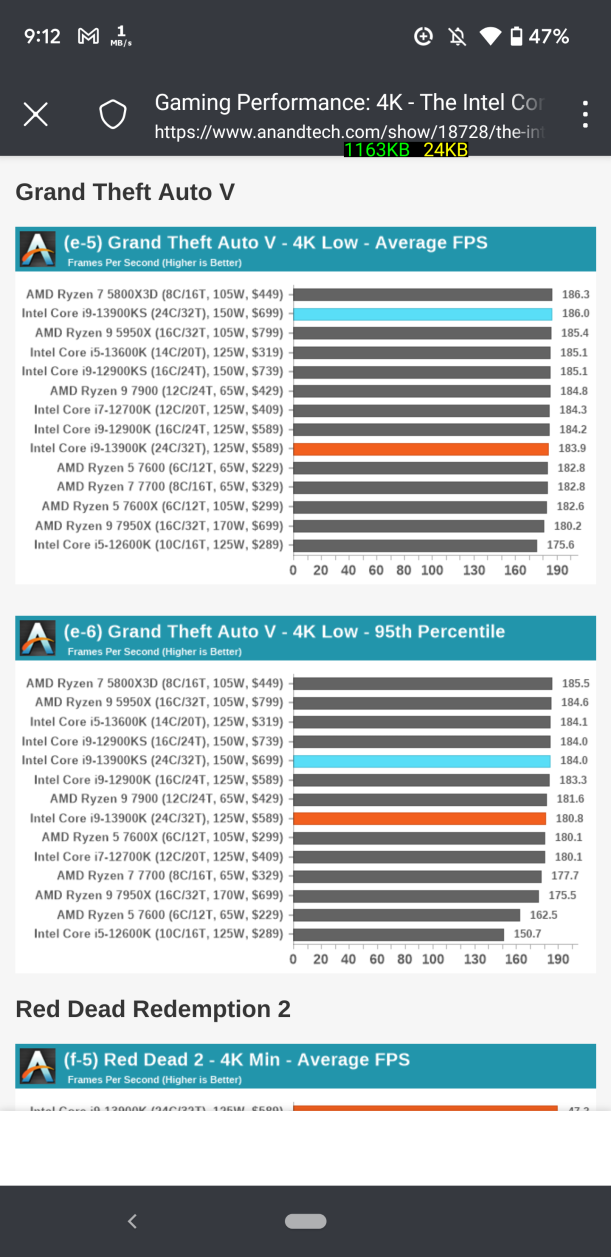

The fastest and most power hungry PC chip ever.

"The KS model has the same default profile as the 13900K but also has its own new Extreme Power Profile that allows for a 320W PL1/PL2 and 400A ceiling. You'll need to ensure that your motherboard can deliver the peak current if you want to unleash the full power of the KS, as not all motherboards can for a long period of time. Motherboard vendors allow assigning a higher ICCMax value in the BIOS, typically under settings like "Core/CPU Current Limit" (the name varies by mobo maker), but that doesn't mean the motherboard can actually deliver that amount of current. Obviously, B- and H-series boards don't make the cut.

Intel defines these recommended power profiles but allows motherboard vendors to ignore them completely, and exceeding the default values doesn't void the warranty. Thus, by default, most motherboard makers completely ignore the limits and assign the maximum values for PL1, PL2, and ICCMax, resulting in higher performance and more heat.

Even at stock settings, the Core i9-13900KS hit up to 328W of power and 100C in our testing. We'll see what power draw, performance, and thermals look like, including gaming and productivity benchmarks, on the following pages."

https://www.tomshardware.com/reviews/intel-core-i9-13900ks-cpu-review
