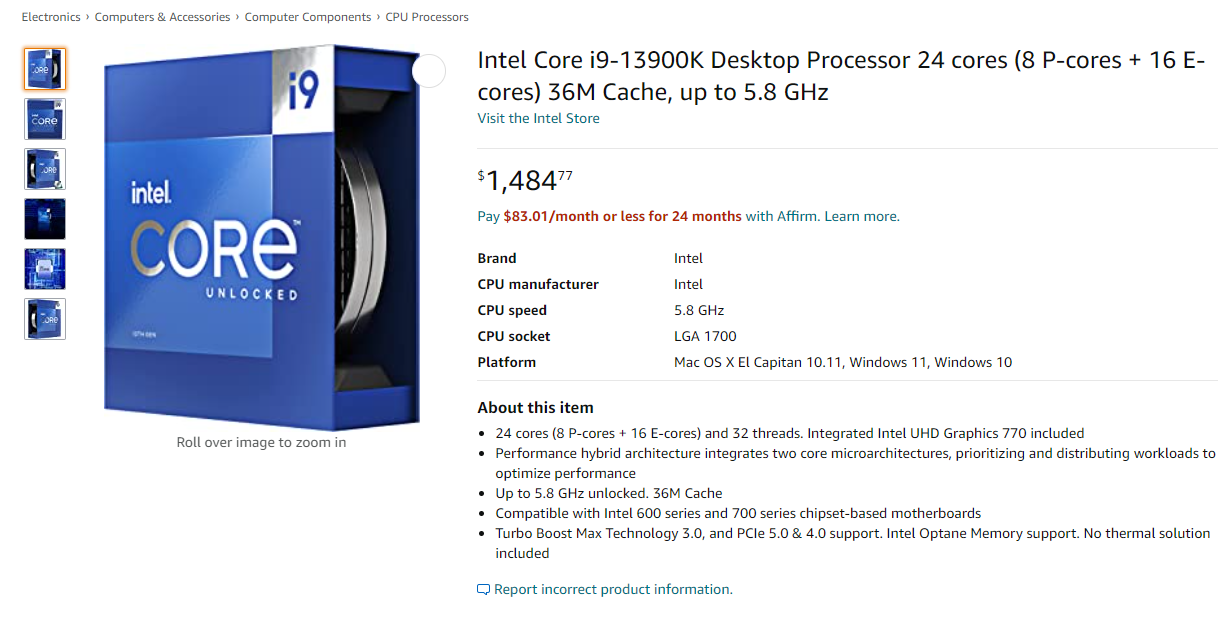

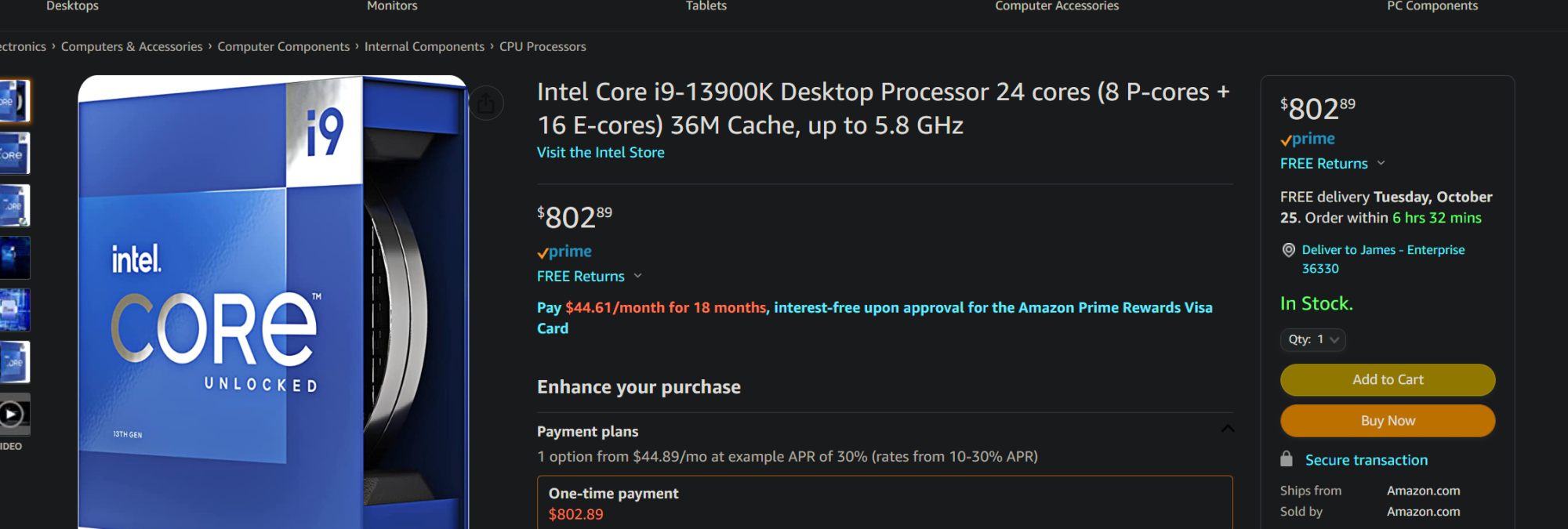

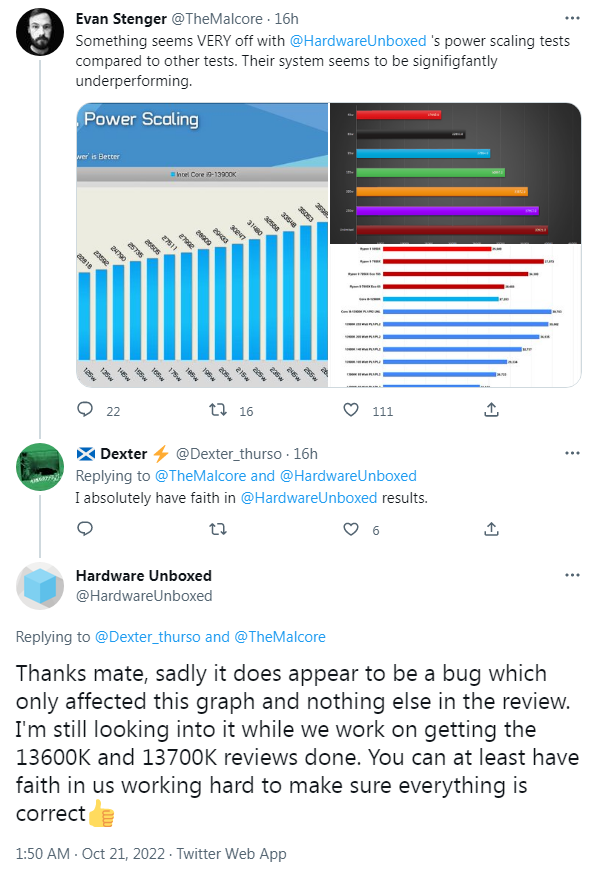

That is fair... of course, if you're buying either the 13900 or the 7950 and all you're doing is gaming, you've probably got your budget priorities messed up a little.

The 300W number is a worst-case scenario for power consumption, by the way. In gaming, a worst-case scenario like Civ 6 consumes around 190W. Still worse than AMD, but let's get some context in the discussion. The 13900K isn't going to be consuming 300W all the time.

If you're doing the things that you buy a high-core-count CPU for... the 13900 throttles after 17s under water cooling, and does suck double the power.

Light loads like gaming, sure, it's not insanely more... I mean, sitting idle it's probably not sucking any more power at all. Not being a jerk, just, yeah, I think we all know the flagship CPUs are a waste of money for gaming alone. I mean, if you're buying flagship CPUs you're almost for sure trying to game at 4K... and well, basically every mid-range or better CPU from the past 2 gens will be damn close to equal.
