402blownstroker

[H]ard|DCer of the Month - Nov. 2012

- Joined: Jan 5, 2006
- Messages: 3,242

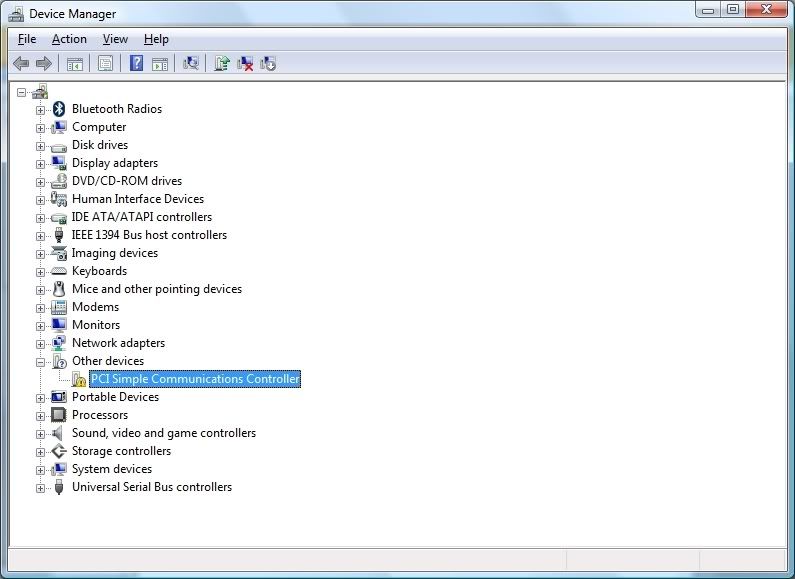

I picked up a pair of Mellanox 4x dual-port HCA cards that are rebranded HP 483513-B21 cards. For the life of me I cannot find a Server 2008 R2 driver for them. I found the 'generic' Windows driver package for them on the Mellanox site, but after installing the driver the card is still not recognized. The HP site only has firmware. Does anyone know where a driver is, or has anyone been able to get one of these cards to work in Server 2008?


