defaultluser


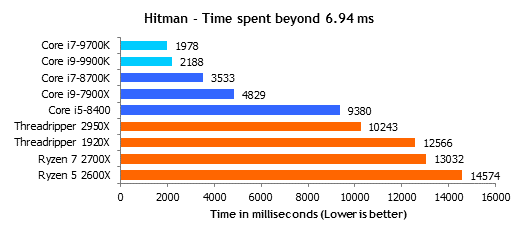

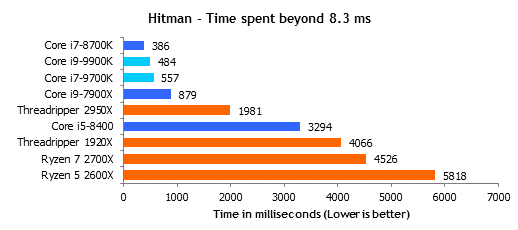

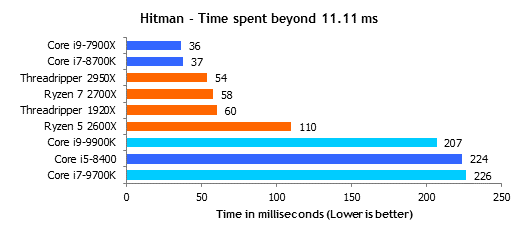

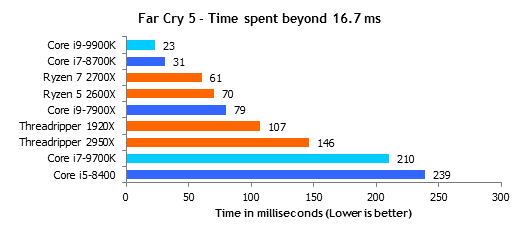

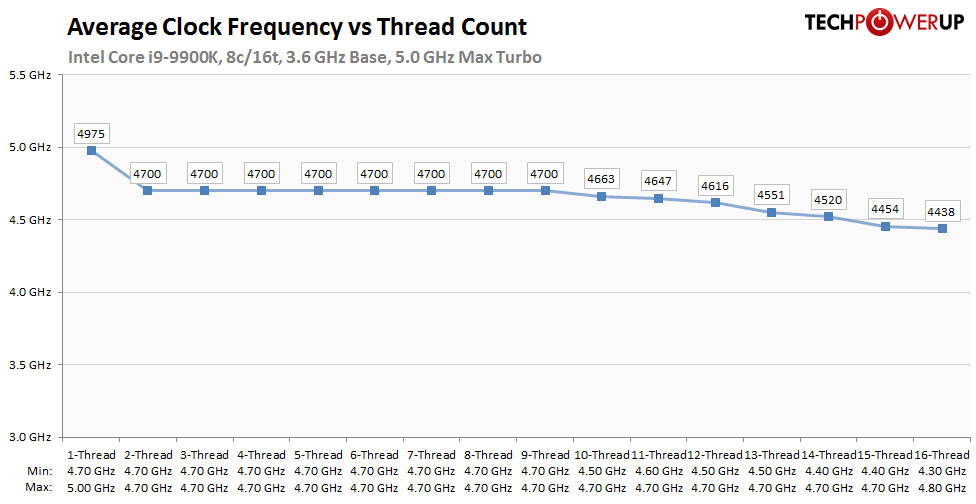

I guess I don't get the logic here. Going from 4/4 to 4/8 does way more for gaming than going from 4/8 to 6/6. The main reason we don't see a boost going from 6/6 to 6/12 is GPU limitations, or possibly thread limitations in games.

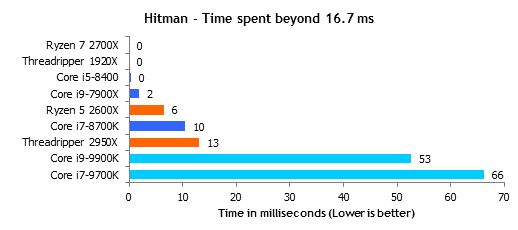

9700k will be slightly faster than 8700k in games with 8 major threads.

It will also come with a soldered heat spreader. No expense or risk of delidding needed.

I can see the "don't buy the 9900k" logic in this thread, but anyone thinking you shouldn't buy the 9700k instead is just smoking crack.

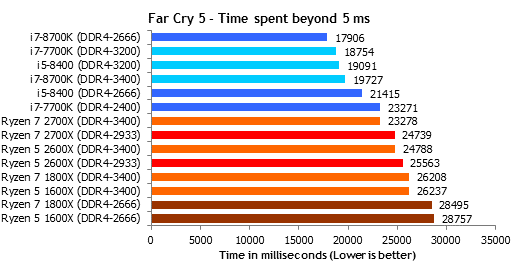

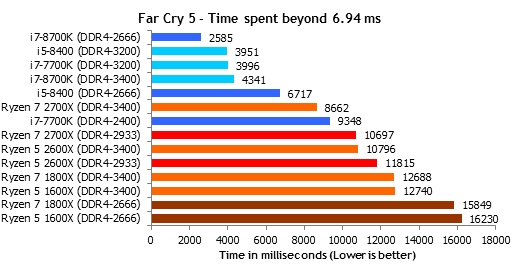

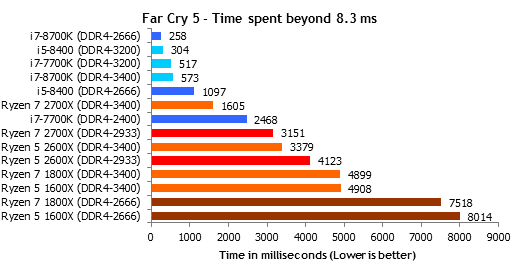

Hyperthreading adds a theoretical 30% performance increase to 6 cores/6 threads. But you need software that scales to TWELVE threads to realize that 30% performance increase.

Bumping the core count from 6 cores/6 threads to 8 cores/8 threads adds a theoretical 33% performance increase. But you need software that scales to EIGHT threads to realize that performance increase.

We are very unlikely to see new consoles with 16 threads until the 2020s. For now 8 threads is the maximum you can do on the PS4/Xbox One.

8700k = 9700k, if you have 12-thread-aware software.

9700k > 8700k if you have 8-thread-aware software. This is the more likely situation we're going to see in games in the next five years.
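To put rough numbers on that comparison, here is a minimal sketch of the arithmetic in Python. It is a toy model, not a benchmark: `effective_cores` is a made-up helper, the 30% hyperthreading gain is the theoretical figure quoted above, the linear ramp between "no HT benefit" and "full HT benefit" is purely an assumption, and clock speed, cache, and GPU limits are ignored entirely.

```python
def effective_cores(cores, hw_threads, sw_threads, ht_gain=0.30):
    """Rough throughput in 'core equivalents' (hypothetical toy model).

    cores      -- physical cores on the chip
    hw_threads -- hardware threads (2x cores with hyperthreading, else = cores)
    sw_threads -- number of major threads the software keeps busy
    ht_gain    -- assumed extra throughput a second thread adds to one core
    """
    if sw_threads <= cores:
        # Every software thread gets its own physical core.
        return float(sw_threads)
    # Threads beyond the core count land on HT siblings, if the chip has any.
    # Assumption: each doubled-up core contributes an extra ht_gain.
    extra = min(sw_threads, hw_threads) - cores
    return cores + ht_gain * extra


i7_8700k = dict(cores=6, hw_threads=12)  # 6 cores / 12 threads
i7_9700k = dict(cores=8, hw_threads=8)   # 8 cores / 8 threads

for n in (4, 6, 8, 12):
    a = effective_cores(sw_threads=n, **i7_8700k)
    b = effective_cores(sw_threads=n, **i7_9700k)
    print(f"{n:>2} threads: 8700k ~{a:.1f}, 9700k ~{b:.1f}")
```

Under these assumptions the two chips come out about even at 12 software threads (roughly 7.8 vs 8.0 core equivalents), while at 8 threads the 9700k is clearly ahead (roughly 6.6 vs 8.0).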

In my experience, turning hyperthreading off gives you exactly the same minimum framerates as leaving it on, as long as the number of major threads the software uses is less than DOUBLE the number of cores on the processor. This is why the Core i5s were giving the same minimum frame rates in games as 8-thread processors back when 4-thread engines were big (but now they're having trouble keeping up in games like Battlefield 1 MP).
