schoolslave

[H]ard|Gawd

- Joined

- Dec 7, 2010

- Messages

- 1,293

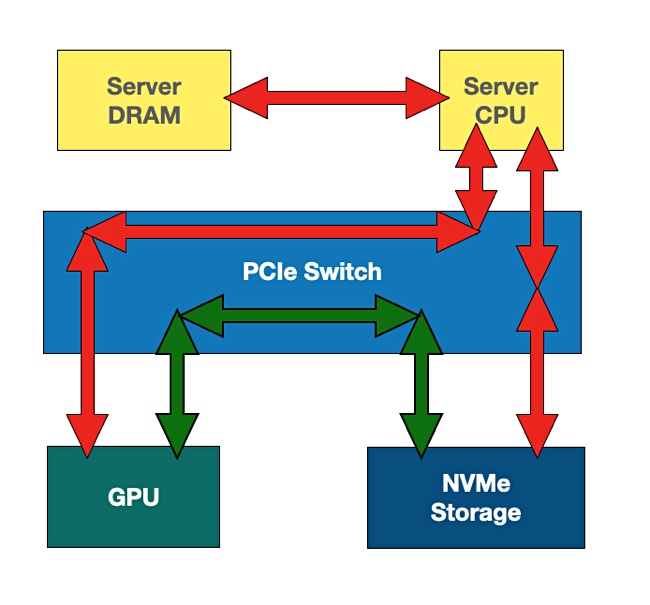

That's incorrect. These technologies remove the need for a bounce buffer in system RAM for CPU -> GPU, GPU <-> GPU, or GPU <-> accelerator accesses. Also, Smart Access Memory is primarily (only?) for gaming (AMD's compute equivalent is "large BAR" on systems using their ROCm stack), while GPUDirect is (or was?) primarily for compute workloads.
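To make the bounce-buffer point concrete, here's a toy model. It is plain Python, not a driver or CUDA API, and `Buffer` and `transfer` are hypothetical names. It only tracks which hops a copy takes with and without a direct (peer-to-peer or BAR-mapped) path:

```python
from dataclasses import dataclass, field


@dataclass
class Buffer:
    """Hypothetical model of a memory region (system RAM, GPU VRAM, ...)."""
    location: str
    data: bytearray = field(default_factory=bytearray)


def transfer(src: Buffer, dst: Buffer, direct: bool) -> list[str]:
    """Copy src into dst and return the hops taken (toy model only).

    direct=True models a path where the destination is directly addressable
    (e.g. peer-to-peer or a fully mapped BAR): one copy.
    direct=False models the fallback: data is staged in a bounce buffer in
    system RAM first, then copied again into the destination.
    """
    if direct:
        dst.data[:] = src.data
        return [f"{src.location} -> {dst.location}"]

    # Fallback: stage through an intermediate copy in system RAM.
    bounce = Buffer("system RAM (bounce buffer)", bytearray(src.data))
    dst.data[:] = bounce.data
    return [
        f"{src.location} -> {bounce.location}",
        f"{bounce.location} -> {dst.location}",
    ]


if __name__ == "__main__":
    gpu0 = Buffer("GPU0 VRAM", bytearray(b"tensor"))
    gpu1 = Buffer("GPU1 VRAM")
    print(transfer(gpu0, gpu1, direct=False))
    # ['GPU0 VRAM -> system RAM (bounce buffer)', 'system RAM (bounce buffer) -> GPU1 VRAM']
    print(transfer(gpu0, gpu1, direct=True))
    # ['GPU0 VRAM -> GPU1 VRAM']
```

On real hardware, each of those hops is roughly a separate trip across PCIe and through system RAM. That extra copy is what the direct paths are meant to cut out.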

Making those commands mandatory as part of the PCIe 5.0 specification goes a long way toward speeding adoption and making things better for everyone.

The same issue, but for GPU-to-RAM communication, is why AMD Smart Access Memory and Nvidia GPUDirect were such big deals when they launched.
