wormholev2

n00b

- Joined: Jun 13, 2015
- Messages: 9

Okay, so here's the idea: I have a NUC5i5RYK lying around, plus the GTX 980 from my main mATX build.

The NUC has an M.2 slot that can provide up to four PCIe lanes (x4), which in theory should be plenty, even for the GTX 980.
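For a rough sense of what "plenty" means here, this is a quick back-of-the-envelope sketch of theoretical one-way PCIe bandwidth for an x4 link versus the x16 link the card would normally get. The per-lane transfer rates and encodings are the standard PCIe figures; which generation the NUC's M.2 slot actually negotiates is an assumption on my part, so several are listed. The function name `link_bandwidth_gb_s` is just made up for this example.

```python
# Per-lane PCIe throughput after line-encoding overhead (one direction).
# Transfer rates and encodings are the standard PCIe figures; which
# generation the NUC's M.2 slot runs at is an assumption, so all three
# common generations are listed.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),     # (GT/s per lane, 8b/10b encoding efficiency)
    "2.0": (5.0, 8 / 10),     # 8b/10b
    "3.0": (8.0, 128 / 130),  # 128b/130b
}


def link_bandwidth_gb_s(generation: str, lanes: int) -> float:
    """Theoretical one-way bandwidth in GB/s for a PCIe link."""
    gigatransfers, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer carries one bit per lane; divide by 8 to get bytes.
    return gigatransfers * efficiency * lanes / 8


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        x4 = link_bandwidth_gb_s(gen, 4)
        x16 = link_bandwidth_gb_s(gen, 16)
        print(f"PCIe {gen}: x4 = {x4:.2f} GB/s, x16 = {x16:.2f} GB/s")
```

Whatever the generation, x4 is a quarter of the x16 figure on paper. This says nothing about real-world frame rates, which depend on how much traffic a given game actually pushes over the bus.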

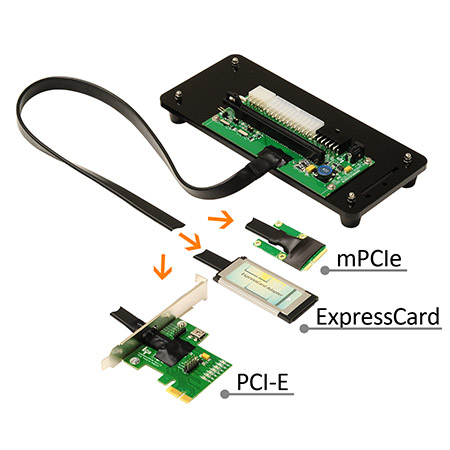

I got an M.2 to PCIe x4 adapter and a PCIe x4 to x16 riser.

Since the M.2 slot will be used for the GPU, and I can't seem to find the tiny 5-pin to SATA power cable for the NUC, I'll try installing Windows on a USB drive and hope for the best.

In theory, this could end up being a <2L system (excluding the power supply).

Thoughts?

----------------------------------------------

Edit: Actually got this working!

Here are some more pictures and details about the current setup.

