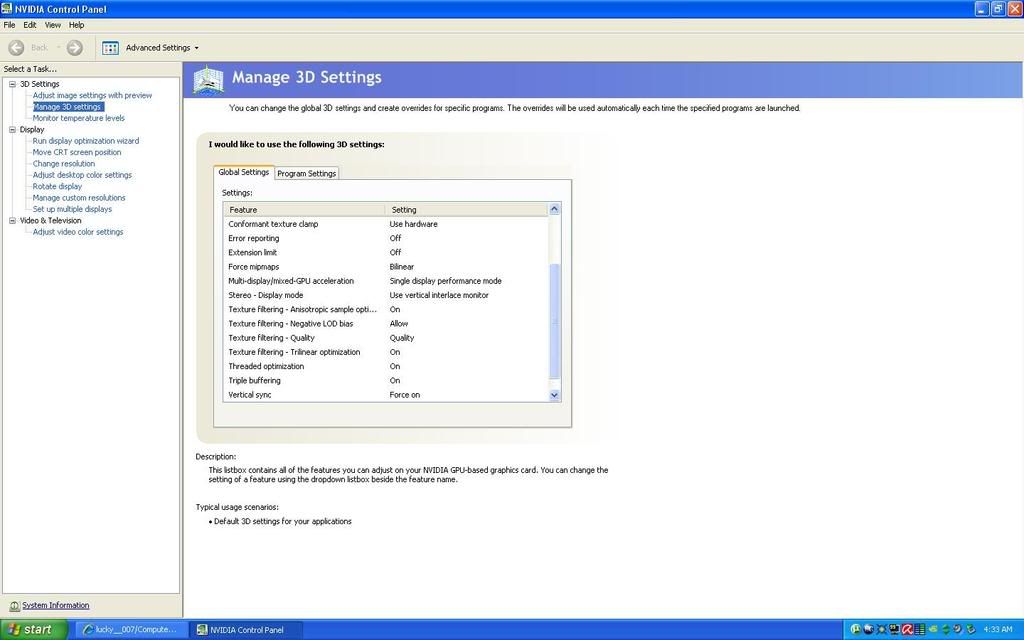

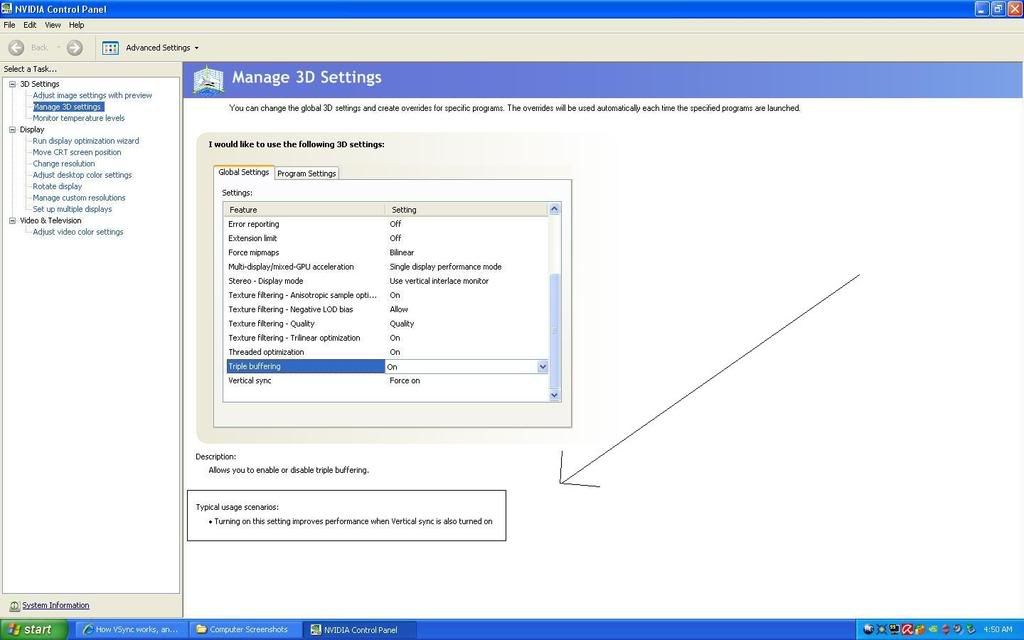

All OpenGL titles should support triple buffering; no specific game support is required. Just enable it and go, though some games have an in-game setting for it, so enable it there if possible. It's also possible to force triple buffering in D3D games on most hardware.

So is there a list of games that support triple buffering? Because I have seen that too.

As I understand it, sort of.

Is there any point to turning on triple buffering without using vsync as well?
