Hello,

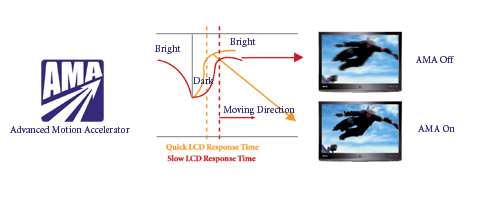

Goal: Eliminate motion blur on an LCD, and allow an LCD to approach CRT quality for fast motion.

Scanning backlights are used in some high-end HDTVs (google "Sony XR 960" or "Samsung CMR 960"). These HDTVs simulate 960Hz using various techniques, including scanning backlights (sometimes loosely called "black frame insertion"). The aim is to greatly reduce motion blur by pulsing (flickering) the backlight in a scanning pattern, much like a CRT scans its phosphor. These home theater HDTVs are expensive, and scanning backlights are not really taken advantage of (yet) in desktop computer monitors. There are diminishing returns beyond 120Hz, but the numbers are still worth noting: 120Hz eliminates only 50% of motion blur versus 60Hz, whereas 480Hz eliminates 87.5%. A scanning backlight can simulate the motion-blur reduction of 480Hz without added input lag and without raising the actual refresh rate beyond the native rate (e.g. 120Hz). Your graphics card would not need to work harder.
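To show where the 50% and 87.5% figures come from, here is a quick sketch. It assumes blur scales with how long each frame stays lit, so the fraction removed is 1 minus the ratio of baseline rate to effective rate. The function name `blur_reduction` is just for illustration.

```python
def blur_reduction(effective_hz: float, baseline_hz: float = 60.0) -> float:
    """Fraction of motion blur removed relative to the baseline rate.

    Assumes blur is proportional to how long each frame is visible,
    i.e. to 1 / effective_hz.
    """
    if effective_hz <= 0 or baseline_hz <= 0:
        raise ValueError("rates must be positive")
    return 1.0 - baseline_hz / effective_hz


if __name__ == "__main__":
    for hz in (120, 240, 480, 960):
        print(f"{hz} Hz equivalent: {blur_reduction(hz):.1%} less blur than 60 Hz")
```

The diminishing returns are visible in the formula: each doubling of the effective rate only removes half of the blur that was left.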

I have an idea for a homemade scanning backlight, built from an Arduino, some white LED strips, and a modified monitor.

Most LCDs are refreshed vertically, from top to bottom.

The idea is to put the LCD glass in front of a custom backlight made of Arduino-driven white LED strips:

Parts:

1. Horizontal white LED strip segments, put behind the LCD glass. The brighter, the better! 4 or 8 strips.

2. Arduino controller (to control LED strip segments).

3. 4 or 8 output pins on the Arduino, each driving one LED strip segment through its own transistor.

4. 1 input pin connected to a vertical sync signal. The source could be software (such as a DirectX program that relays the vertical sync state) or hardware that detects vertical sync on the DVI/HDMI cable. The vsync signal ideally needs to be precise. Signalling over USB might still work if you can get sub-millisecond precision, or if you timecode the signal to compensate for USB timing fluctuations. If you signal in software over USB, you can eliminate this pin.
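One possible reading of the "timecoding" idea, as a rough sketch only: the host stamps each vsync message with its own clock, the receiver notes when the message arrived on its clock, and the smallest difference seen is taken as the fixed offset between the two clocks. This assumes USB jitter only ever adds delay and that the clocks do not drift noticeably over the sample window. The function names and sample numbers are made up for illustration.

```python
def estimate_offset_us(samples):
    """Estimate the host-to-device clock offset in microseconds.

    samples: iterable of (host_timestamp_us, device_arrival_us) pairs.
    Jitter can only make a message arrive late, so the smallest
    (arrival - host) difference is the offset plus the minimum
    transport delay.
    """
    diffs = [arrival - host for host, arrival in samples]
    if not diffs:
        raise ValueError("need at least one sample")
    return min(diffs)


def vsync_on_device_clock(host_vsync_us, offset_us):
    """Translate a host vsync timestamp into the device's clock."""
    return host_vsync_us + offset_us


if __name__ == "__main__":
    # Hypothetical 120 Hz vsync messages with 300, 900 and 250 us of jitter.
    samples = [(0, 1_000_300), (8_333, 1_009_233), (16_667, 1_016_917)]
    offset = estimate_offset_us(samples)
    print("estimated offset (us):", offset)
    print("next vsync on device clock (us):", vsync_on_device_clock(25_000, offset))
```

With an offset like this, the controller can schedule flashes from the timestamp inside the message rather than from the moment the message happened to arrive.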

The Arduino controller would be programmed to flash the LED strip on/off, in a scanning sequence, top to bottom. If you're using a 4-segment scanning backlight, you've got 4 vertically stacked rectangles of backlight panel (LED strips), and you flash each segment for 1/4th of a refresh. So, for a 120Hz refresh, you'd flash one segment at a time for 1/480th of a second.
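The timing above can be sketched as a simple schedule. This is host-side Python for checking the arithmetic, not Arduino firmware, and `scan_schedule` is a hypothetical helper name.

```python
def scan_schedule(refresh_hz: float, segments: int):
    """Return (segment, on_us, off_us) tuples, measured from vsync.

    Segment 0 is the top strip. Each segment is lit for one equal
    slice of the refresh period, one segment at a time.
    """
    if refresh_hz <= 0 or segments < 1:
        raise ValueError("refresh_hz must be positive and segments >= 1")
    period_us = 1_000_000 / refresh_hz
    slot_us = period_us / segments
    return [(i, i * slot_us, (i + 1) * slot_us) for i in range(segments)]


if __name__ == "__main__":
    for segment, on_us, off_us in scan_schedule(120, 4):
        print(f"segment {segment}: on at {on_us:.0f} us, off at {off_us:.0f} us")
```

For 120Hz and 4 segments, each slot comes out to 1/480th of a second (about 2083 microseconds), matching the figure above.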

The Arduino would need to be adjustable to match the monitor's refresh rate and input lag:

- Refresh rate automatically configured over USB

- Configurable on/off setting, to stop the flicker when you aren't doing fast-motion stuff (FPS gaming, video camera playback, etc.)

- Upon detecting a signal on the vsync pin, the Arduino would begin the flashing sequence at the first segment. This synchronizes the scanning backlight to the actual output.

- An adjustment would be needed to compensate for input lag (either via a configurable delay or by starting the flash sequence on a segment other than the first).

- Configurable pulse length, to optimize image quality with the LCD.

- Configurable panel flash latency and speed (to sync to the LCD display's refresh speed within a refresh) -- this would require one-time manual calibration, via testing for elimination of tearing/artifacts. For example, a specific LCD display might only take 1/140th of a second to repaint a single 120Hz frame, so this adjustment allows compensation for this fact.

- Configurable number of segments to illuminate -- e.g. illuminate more segments at a time, trading some blur reduction for a brighter image (e.g. lighting up two segments at once simulates 240Hz with a double-bright image, rather than 480Hz).

- If calibrated properly, no extra input lag should be observable (at most, approximately 1-2ms extra, simply to wait for pixels to fully refresh before re-illuminating backlight).

- No modification of the computer monitor's electronics is necessary; you're just replacing the backlight with your own, and using the Arduino to control it.

- Calibration should be easy; a tiny computer app would need to be written -- just a simple moving test pattern and two or three software sliders -- and you adjust until motion looks best.
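Here is a rough sketch of how the adjustable settings in this list could fit together. It is only a model for checking timings on a PC, not firmware. The `BacklightConfig` fields, their default values, and `flash_windows` are all assumptions for illustration; real values would come from calibration.

```python
from dataclasses import dataclass


@dataclass
class BacklightConfig:
    refresh_hz: float = 120.0
    segments: int = 4
    scanout_s: float = 1 / 140  # time the panel takes to repaint one frame
    delay_us: float = 1500.0    # wait after vsync (input lag, pixel settling)
    lit_segments: int = 1       # segments lit at once; more is brighter
    enabled: bool = True        # False: no flashing, backlight stays on


def flash_windows(cfg: BacklightConfig):
    """Return (segment, on_offset_us, pulse_us) for one refresh cycle.

    Offsets are measured from vsync and wrap around the refresh
    period, so a long delay pushes a flash into the next cycle.
    """
    if not cfg.enabled:
        return []  # caller should simply leave every segment lit
    period_us = 1_000_000 / cfg.refresh_hz
    step_us = cfg.scanout_s * 1_000_000 / cfg.segments
    pulse_us = step_us * cfg.lit_segments
    return [
        (i, (cfg.delay_us + i * step_us) % period_us, pulse_us)
        for i in range(cfg.segments)
    ]


if __name__ == "__main__":
    for segment, on_us, pulse_us in flash_windows(BacklightConfig(lit_segments=2)):
        print(f"segment {segment}: on at {on_us:.0f} us for {pulse_us:.0f} us")
```

The calibration sliders would map onto `delay_us`, `scanout_s`, and `lit_segments`; a separate pulse-length slider could override `pulse_us` directly.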

Total cost: ~$100-$150. Examples of parts:

- $35.00 (RadioShack) -- Arduino Uno Rev 3. You will need an Arduino with at least 4 or 8 output pins and 1 input pin (most Arduinos qualify).

- $44.40 (DealExtreme) -- LED tape -- 6500K daylight white LEDs, 50 watts' worth (5 meters, 600 x 3528 SMD LEDs).

- Plus other components as needed: power supply for the LEDs, wire, solder, transistors for connecting Arduino pins to the LED strips, resistors or current regulators or ultra-high-frequency PWM for limiting power to the LEDs, etc.

LED tape is designed to be cut into segments (most LED tape can be cut in 2 inch increments). Google or eBay "White LED tape". A 5 meter roll of white LED tape has 600 LEDs at a total of 50 watts, which is more than bright enough to illuminate a 24" panel in 4 segments, and it can be doubled up. LED tape is now pretty cheap on eBay, sometimes under $20 for Chinese-made rolls, but I'd advise 6500K full-spectrum daylight white LEDs with a reasonably high CRI, or color quality will suffer. Newer LED tape designed for accent lighting would be quite suitable, though you want daylight white rather than warm white or cool white, to match the color of a typical computer monitor backlight. For testing purposes, cheap LED tape will do.

You need extra brightness to compensate for the dark time. A 4-segment backlight that's dark 75% of the time would ideally need to be 4 times brighter than your preferred brightness setting on an always-on backlight. For even lighting, a diffuser (e.g. translucent plastic panel, wax paper, etc.) is probably needed between the LEDs and the LCD glass.
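The brightness compensation is just the inverse of the duty cycle. A small sketch, assuming each segment is lit for an equal share of the refresh and the 50 watt roll is split evenly between segments (both helper names are hypothetical):

```python
def brightness_multiplier(segments: int, lit_segments: int = 1) -> float:
    """How much brighter the LEDs must be than an always-on backlight.

    Each segment is lit for lit_segments / segments of the time, so
    the peak brightness has to rise by the inverse of that fraction.
    """
    if not 1 <= lit_segments <= segments:
        raise ValueError("lit_segments must be between 1 and segments")
    duty_cycle = lit_segments / segments
    return 1 / duty_cycle


def watts_per_segment(total_watts: float, segments: int) -> float:
    """Power available to each segment if the tape is split evenly."""
    return total_watts / segments


if __name__ == "__main__":
    print("4 segments, 1 lit:", brightness_multiplier(4), "x brighter")
    print("4 segments, 2 lit:", brightness_multiplier(4, 2), "x brighter")
    print("watts per segment:", watts_per_segment(50, 4))
```

Lighting two segments at a time halves the required multiplier, which is the brightness side of the 240Hz versus 480Hz trade-off.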

This project would work best with 120Hz LCD panels with fast pixel response, rather than 60Hz LCD panels. There would be no annoying flicker at 120Hz (each segment of the scanning backlight would flicker at 120Hz instead of 60Hz), and the pixel transitions need to be quick enough to be virtually complete before each segment is flashed.

Scanning backlight technology already exists in high-end home theater LCD HDTVs (960Hz simulation in top-model Sony and Samsung HDTVs), and most of those also include motion interpolation and local dimming (turning off LEDs behind dark areas of the screen). We don't want input lag, so we skip the motion interpolation. Local dimming is complex to do cheaply. A scanning backlight, however, is rather simple and achievable via this Arduino project idea. It would be a cheap way to simulate 480Hz (or even 960Hz) via flicker on a 120Hz display, by hacking open an existing computer monitor.

Anybody interested in attempting such a project?

